Operating HDFS from a Client through the API

阿新 • Published: 2018-12-16

I. Preparation

1. Prepare the jar files

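Unpack the Hadoop distribution archive, go into its share folder, gather the jar files from its subfolders into a single folder, and add them to the project's build path in Eclipse.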

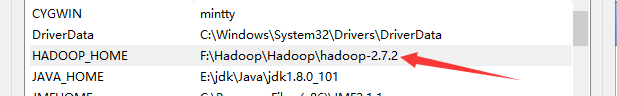

2. Configure the environment variable

Set the HADOOP_HOME environment variable to the directory where the Hadoop archive was unpacked.
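Some problems later in this article, such as the NullPointerException mentioned under file download, are attributed to the Windows environment setup. Because of that, it can help to fail fast when HADOOP_HOME is missing. The following is a minimal sketch that uses only the JDK. HadoopHomeCheck and resolve are hypothetical names and are not part of Hadoop.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;

// Hypothetical pre-flight check; not part of the Hadoop API.
final class HadoopHomeCheck {

    // Returns the HADOOP_HOME directory, or throws if it is unset or not a directory.
    static Path resolve(Map<String, String> env) {
        String home = env.get("HADOOP_HOME");
        if (home == null || home.trim().isEmpty()) {
            throw new IllegalStateException("HADOOP_HOME is not set");
        }
        Path dir = Paths.get(home.trim());
        if (!Files.isDirectory(dir)) {
            throw new IllegalStateException("HADOOP_HOME is not a directory: " + dir);
        }
        return dir;
    }
}
```

You could call HadoopHomeCheck.resolve(System.getenv()) at the start of main. A misconfigured environment then produces a clear message up front instead of an exception later inside the client.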

II. Operating HDFS through the API

Working with HDFS through the API takes three main steps:

1. Get the file system.
2. Operate on the files.
3. Close the resources.
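org.apache.hadoop.fs.FileSystem implements java.io.Closeable, so these three steps fit Java's try-with-resources. That construct guarantees step 3 runs even when step 2 throws. The sketch below shows the pattern using only the JDK. ThreeSteps, Opener and Action are hypothetical names introduced for illustration and are not Hadoop types.

```java
import java.io.Closeable;

// Hypothetical helper: get a resource, use it, and always close it.
final class ThreeSteps {

    @FunctionalInterface
    interface Opener<T extends Closeable> {
        T open() throws Exception;
    }

    @FunctionalInterface
    interface Action<T, R> {
        R apply(T resource) throws Exception;
    }

    static <T extends Closeable, R> R run(Opener<T> opener, Action<T, R> action) throws Exception {
        // Step 1: obtain the resource (for HDFS, a FileSystem)
        try (T resource = opener.open()) {
            // Step 2: operate on it
            return action.apply(resource);
        } // Step 3: close() runs here, whether step 2 returned or threw
    }
}
```

With the Hadoop jars on the classpath, a call could look like ThreeSteps.run(() -> FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu"), fs -> fs.mkdirs(new Path("/user/atguigu/tiantang"))). This assumes configuration is an effectively final local variable.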

1. File upload

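import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Wrapper class added so the example compiles on its own; the name is arbitrary.
public class HdfsClient {

    public static void main(String[] args) throws Exception {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        // Point the client at the cluster (used by the FileSystem.get(configuration) variant below)
        configuration.set("fs.defaultFS", "hdfs://hadoop102:8020");
        // FileSystem fileSystem = FileSystem.get(configuration);
        // Alternatively, pass the cluster URI and the user name to access it as directly
        FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");

        // 2. Upload a local file to HDFS
        fileSystem.copyFromLocalFile(new Path("f:/xiyou1.txt"), new Path("/user/atguigu/xiyou1.txt"));

        // 3. Close resources
        fileSystem.close();
        System.out.println("over");
    }
}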

2. File download

public void getFileFromHDFS() throws IOException, InterruptedException, URISyntaxException {
    Configuration configuration = new Configuration();
    // 1. Get the file system
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    System.out.println(fileSystem.toString());
    // 2. Download the file
    //    delSrc = false: keep the copy in HDFS
    //    useRawLocalFileSystem = true: write through the raw local file system (no .crc checksum file)
    fileSystem.copyToLocalFile(false, new Path("/user/atguigu/xiyou.txt"), new Path("F:/xiyou.txt"), true);
    // 3. Close resources
    fileSystem.close();
}

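Note: in step 2, the two-argument form fs.copyToLocalFile(src, dst) may throw a NullPointerException. This is probably caused by the Windows environment setup, for example the HADOOP_HOME setting from section I. In that case, use the four-argument overload copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem) with useRawLocalFileSystem set to true, as in the code above.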

3. Create directories

public void mkdirAtHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Create the directories; like mkdir -p, mkdirs also creates any missing parent directories
    fileSystem.mkdirs(new Path("/user/atguigu/tiantang"));
    fileSystem.mkdirs(new Path("/user/atguigu/sunhouzi/houzaizi"));
    // 3. Close resources
    fileSystem.close();
}

4. Delete a file or directory

public void deleteAtHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Delete; recursive = true is required to delete a non-empty directory
    //    and makes no difference for a plain file such as this one
    fileSystem.delete(new Path("/user/atguigu/xiyou2.txt"), true);
    // 3. Close resources
    fileSystem.close();
}

5. Rename a file

public void renameAtHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Rename the file; rename() reports many failures (e.g. a missing source) by returning false
    boolean renamed = fileSystem.rename(new Path("/user/atguigu/xiyou.txt"), new Path("/user/atguigu/honglou.txt"));
    System.out.println("renamed: " + renamed);
    // 3. Close resources
    fileSystem.close();
}

6. View file details

public void readFileAtHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. List file details (recursive = true walks the whole subtree; only files are returned)
    RemoteIterator<LocatedFileStatus> listFiles = fileSystem.listFiles(new Path("/user/atguigu"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus next = listFiles.next();
        // File name
        System.out.println(next.getPath().getName());
        // Block size
        System.out.println(next.getBlockSize());
        // File length in bytes
        System.out.println(next.getLen());
        // Permissions
        System.out.println(next.getPermission());
        // Block details: the start offset of each block and the hosts that store it
        BlockLocation[] blockLocations = next.getBlockLocations();
        for (BlockLocation bl : blockLocations) {
            System.out.println(bl.getOffset());
            String[] hosts = bl.getHosts();
            for (String host : hosts) {
                System.out.println(host);
            }
        }
        System.out.println("-------------------");
    }
    // 3. Close resources
    fileSystem.close();
}
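getBlockLocations() returns one BlockLocation per block of the file. Its getOffset() and getLength() give the byte range that block covers. If a file was written with a fixed block size, those ranges can be predicted: every block is full-sized except possibly the last. The JDK-only sketch below computes them. BlockMath and blockRanges are hypothetical names. The 128 MB figure in the usage note is the Hadoop 2.x default for dfs.blocksize, which a cluster may override.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: the {offset, length} of each block of a file.
final class BlockMath {

    static List<long[]> blockRanges(long fileLength, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        List<long[]> ranges = new ArrayList<>();
        for (long offset = 0; offset < fileLength; offset += blockSize) {
            // Every block is full-sized except possibly the last one
            ranges.add(new long[] {offset, Math.min(blockSize, fileLength - offset)});
        }
        return ranges;
    }
}
```

Consider a 300 MB file with 128 MB blocks: blockRanges(300L << 20, 128L << 20) gives three ranges starting at 0, 128 MB and 256 MB, and the last one is 44 MB long. The block-by-block download in section III seeks to the same offsets.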

7. List a directory

public void readFolderAtHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. List the direct children of the directory
    FileStatus[] listStatus = fileSystem.listStatus(new Path("/user/atguigu/"));
    // Tell files (f--) apart from directories (d--)
    for (FileStatus status : listStatus) {
        if (status.isFile()) {
            System.out.println("f--" + status.getPath().getName());
        } else {
            System.out.println("d--" + status.getPath().getName());
        }
    }
    // 3. Close resources
    fileSystem.close();
}
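Sections 6 and 7 use two different listing calls. listFiles(path, true) returns only files and walks the whole subtree. listStatus(path) returns the direct children of the directory, files and directories alike. The local-disk analogue below lets you try that difference without a cluster. It uses java.nio.file only, and LocalListing is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical local analogue of the two HDFS listing calls.
final class LocalListing {

    // Like listFiles(dir, true): regular files only, anywhere in the subtree
    static List<String> filesRecursive(Path dir) throws IOException {
        try (Stream<Path> s = Files.walk(dir)) {
            return s.filter(Files::isRegularFile)
                    .map(p -> dir.relativize(p).toString().replace('\\', '/'))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    // Like listStatus(dir): direct children only, tagged f-- or d--
    static List<String> oneLevel(Path dir) throws IOException {
        try (Stream<Path> s = Files.list(dir)) {
            return s.map(p -> (Files.isRegularFile(p) ? "f--" : "d--") + p.getFileName())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }
}
```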

III. Operating HDFS with IO streams

1. File upload

public void putFileToHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Get an output stream to the HDFS file
    FSDataOutputStream fos = fileSystem.create(new Path("/user/atguigu/output/dongsi.txt"));
    // 3. Get an input stream from the local file
    FileInputStream fileInputStream = new FileInputStream(new File("f:/dongsi.txt"));
    try {
        // 4. Connect the streams (copy the bytes across)
        IOUtils.copyBytes(fileInputStream, fos, configuration);
    } finally {
        // 5. Close resources, including the file system
        IOUtils.closeStream(fos);
        IOUtils.closeStream(fileInputStream);
        fileSystem.close();
    }
}
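IOUtils.copyBytes(in, out, conf) reads its buffer size from io.file.buffer.size, which defaults to 4096 bytes. In this three-argument form it also closes both streams when it finishes, so the closeStream calls in the finally block act as a safety net. Underneath, such a copy is a read/write loop. Here is a minimal JDK-only sketch of that loop. It illustrates the idea and is not Hadoop's own source; StreamCopy is a hypothetical name.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper showing the read/write loop behind a stream copy.
final class StreamCopy {

    static long copy(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buf = new byte[bufferSize];
        long total = 0;
        int n;
        // read() may return fewer bytes than the buffer holds, so write exactly n of them
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
            total += n;
        }
        out.flush();
        return total;
    }
}
```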

2. File download

public void getFileFromHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Get an input stream from the HDFS file
    FSDataInputStream open = fileSystem.open(new Path("/user/atguigu/bajie.txt"));
    // 3. Create an output stream to the local file
    FileOutputStream fileOutputStream = new FileOutputStream(new File("F:/bajie.txt"));
    try {
        // 4. Connect the streams; errors propagate to the caller instead of being swallowed
        IOUtils.copyBytes(open, fileOutputStream, configuration);
    } finally {
        // 5. Close resources, including the file system
        IOUtils.closeStream(fileOutputStream);
        IOUtils.closeStream(open);
        fileSystem.close();
    }
}

3. Positioned (seek-based) file reading

1. Download the first block of a large file

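// Download the first block of a large file
@Test
public void getFileFromHDFSSeek1() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Get the input stream
    FSDataInputStream fis = fileSystem.open(new Path("/user/atguigu/input/hadoop-2.7.2.tar.gz"));
    // 3. Create the output stream
    FileOutputStream fos = new FileOutputStream(new File("F:/hadoop-2.7.2.tar.gz.part1"));
    // 4. Connect the streams from the start of the file and copy the first 128 MB (one block).
    //    read() may return fewer bytes than requested, so count what was actually read.
    byte[] buf = new byte[1024];
    long remaining = 1024L * 1024 * 128;
    try {
        while (remaining > 0) {
            int len = fis.read(buf, 0, (int) Math.min(buf.length, remaining));
            if (len == -1) {
                break; // the file is smaller than one block
            }
            fos.write(buf, 0, len);
            remaining -= len;
        }
    } finally {
        // 5. Close resources
        IOUtils.closeStream(fis);
        IOUtils.closeStream(fos);
        fileSystem.close();
    }
}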

2. Download the second block of a large file

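// Download the second block
@Test
public void getFileFromHDFSSeek2() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "atguigu");
    // 2. Get the input stream
    FSDataInputStream fis = fileSystem.open(new Path("/user/atguigu/input/hadoop-2.7.2.tar.gz"));
    // 3. Create the output stream
    FileOutputStream fos = new FileOutputStream(new File("F:/hadoop-2.7.2.tar.gz.part2"));
    // 4. Connect the streams starting at the second block: seek to offset 128 MB
    //    (1024L keeps the arithmetic in long, so larger offsets cannot overflow int)
    fis.seek(1024L * 1024 * 128);
    try {
        // Copy everything from 128 MB to the end of the file
        IOUtils.copyBytes(fis, fos, configuration);
    } finally {
        // 5. Close resources; errors propagate instead of being silently ignored
        IOUtils.closeStream(fis);
        IOUtils.closeStream(fos);
        fileSystem.close();
    }
}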
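The two methods above extend to files with more than two blocks. Part k (counting from 1) starts at offset (k - 1) × block size and is at most one block long. The sketch below shows that arithmetic against a local file, with java.io.RandomAccessFile.seek standing in for FSDataInputStream.seek. PartReader and copyPart are hypothetical names.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.RandomAccessFile;

// Hypothetical local analogue of "seek to block k, then copy one block".
final class PartReader {

    // Copies part number `part` (1-based) of `file` to `out`; returns the bytes copied.
    static long copyPart(RandomAccessFile file, int part, long blockSize, OutputStream out) throws IOException {
        if (part < 1) {
            throw new IllegalArgumentException("part numbers start at 1");
        }
        long offset = (part - 1) * blockSize;  // long arithmetic, no int overflow
        long remaining = Math.max(0, Math.min(blockSize, file.length() - offset));
        file.seek(offset);                      // plays the role of FSDataInputStream.seek
        byte[] buf = new byte[4096];
        long copied = 0;
        while (remaining > 0) {
            int n = file.read(buf, 0, (int) Math.min(buf.length, remaining));
            if (n == -1) {
                break;
            }
            out.write(buf, 0, n);
            remaining -= n;
            copied += n;
        }
        return copied;
    }
}
```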

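3. Merge the files

In a Windows command prompt, run the following in the folder that holds the parts. It appends part2 to the end of part1, so part1 then contains the complete file: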

type hadoop-2.7.2.tar.gz.part2 >> hadoop-2.7.2.tar.gz.part1
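On Linux or macOS, cat part2 >> part1 performs the same append. To stay in Java instead, the append can be sketched with java.nio.file as below. PartMerger and appendAll are hypothetical names.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical helper: append each source file to target, in order (like "type b >> a").
final class PartMerger {

    static void appendAll(Path target, Path... sources) throws IOException {
        // CREATE + APPEND: keep target's existing bytes and write after them
        try (OutputStream out = Files.newOutputStream(target,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND)) {
            for (Path source : sources) {
                Files.copy(source, out); // raw byte copy, so binary archives stay intact
            }
        }
    }
}
```

For the files above, the call would be PartMerger.appendAll(Paths.get("hadoop-2.7.2.tar.gz.part1"), Paths.get("hadoop-2.7.2.tar.gz.part2")).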