# Setting Up Hadoop on Two Servers from Different Cloud Providers

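**This article is mainly meant to help beginners like me. There is a lot of material online and it is hard to find what you need, so I have pulled some of it together based on my own experience. The references are at the end.**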

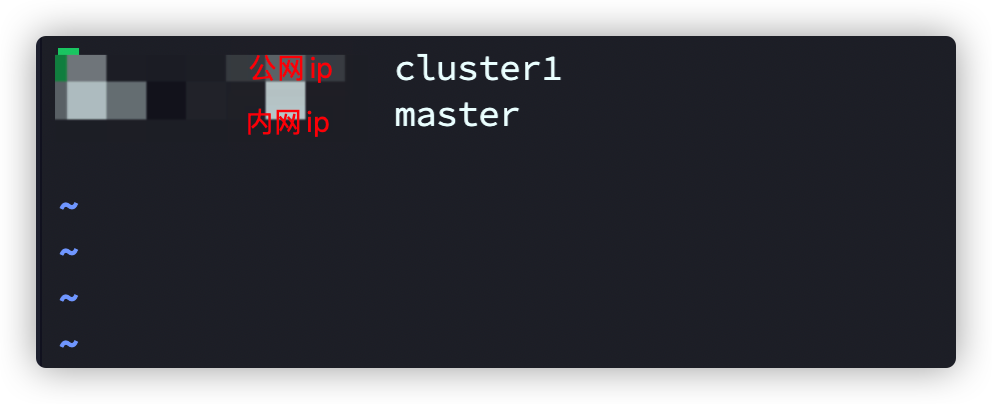

**I use one Alibaba Cloud server (master) and one Tencent Cloud server (cluster1).**

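**In the code below, lines prefixed with `[root@master hadoop-3.3.1]` were run directly on the cloud host. Before installing Hadoop, I recommend connecting to the servers over SSH (look SSH up if you are not familiar with it). My own computer is a MacBook Air with an M1 chip. Software that supports this chip was hard to find, so I tried the setup on cloud hosts instead.**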

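**Because the two servers come from different providers, getting them to talk to each other inside the cluster is more troublesome: Hadoop is generally designed to run within a single LAN. This shows up mainly in the Hadoop configuration files.**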

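**I recommend using Tab completion a lot when typing commands. I had already finished the setup before writing this article, so some steps are reproduced from memory and some commands were typed by hand, which means there may be mistakes. Focus on understanding what each step does, and look up the details as needed.**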

**If you spot a problem, an error or something I left out, please leave a comment. Thanks!**

## 1. Map hostnames between the hosts

```bash
# Ping each other to check that the hosts can reach one another

# On master
ping -c 4 cluster1

# On cluster1
ping -c 4 master
```
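The ping check only works once each machine can resolve the other's hostname, which is done in `/etc/hosts`. A commonly used approach for servers on different clouds (a sketch, not necessarily the exact setup of the referenced article) is: on each machine, map the machine's **own** hostname to its **private (internal) IP**, and the other machine's hostname to its **public IP**. The reason is that a cloud server's public IP is usually NAT-ed and not bound to any local network interface, so a daemon such as the NameNode cannot listen on it. The IP addresses below are placeholders from documentation ranges, and the function name `hosts_entries` is hypothetical; replace the addresses with your own.

```bash
#!/usr/bin/env bash
# Print /etc/hosts entries for the node whose hostname is given in $1.
# Assumption: these IPs are placeholders; replace them with your servers' real addresses.
declare -A PUBLIC_IP=( [master]=203.0.113.10 [cluster1]=198.51.100.20 )
declare -A PRIVATE_IP=( [master]=172.16.0.10 [cluster1]=10.0.0.20 )

hosts_entries() {
  local self="$1" host
  for host in master cluster1; do
    if [ "$host" = "$self" ]; then
      # Own hostname -> private IP, so local daemons can bind to it
      echo "${PRIVATE_IP[$host]} $host"
    else
      # Other node -> public IP, the only address reachable across providers
      echo "${PUBLIC_IP[$host]} $host"
    fi
  done
}
```

On master you would run `hosts_entries master | tee -a /etc/hosts`, and on cluster1 `hosts_entries cluster1 | tee -a /etc/hosts`. If the machines' hostnames are not already `master` and `cluster1`, they can be set with `hostnamectl set-hostname master` (and `cluster1` on the other machine).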

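## 2. Set up passwordless SSH

Purpose: let the hosts connect to each other without a password. (If you skip this, you will get connection errors when you start Hadoop.)

Look up how to do this online; the final check is: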

```bash

# On master

ssh cluster1 # should log you in without asking for a password

```
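The usual way to set this up is to generate a key pair with `ssh-keygen` and copy the public key to each host with `ssh-copy-id`. The sketch below does both for a list of hosts. It assumes you log in as root (as in this article), that root login over SSH is allowed, and that the hostnames resolve through `/etc/hosts`; the function names and the `DRY_RUN` switch (which only prints the commands) are my own additions. Include the local host in the list as well, since `start-dfs.sh` also reaches the local node over SSH.

```bash
#!/usr/bin/env bash
# Sketch: passwordless SSH from this node to every host given as an argument.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_passwordless_ssh() {
  local host
  # 1. Generate an RSA key pair with an empty passphrase, unless one already exists
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    run mkdir -p -m 700 "$HOME/.ssh"
    run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  fi
  # 2. Append the public key to each host's authorized_keys (asks for each password once)
  for host in "$@"; do
    run ssh-copy-id "root@$host"
  done
}
```

Run `setup_passwordless_ssh master cluster1` on master and again on cluster1, then repeat the `ssh cluster1` (and `ssh master`) check above.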

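## 3. Set up a distribution script

Purpose: after changing a file on one host, sync it to all the other hosts with a single command. Copying normally asks for a password, but once passwordless SSH login is set up, files can be distributed directly.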

```bash

[root@master hadoop-3.3.1]# echo $PATH

.:/home/java/jdk1.8/bin:/home/hadoop/hadoop-3.3.1/bin:.:/home/java/jdk1.8/bin:/bin:/usr/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

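# 1. Put the script in any directory on the PATH shown above. As root, /root/bin is on the
#    PATH (it is the last entry), so use that. rsync must be installed on both hosts
#    (e.g. yum install -y rsync).

mkdir -p /root/bin

cd /root/bin

vim xsync

# 2. Write the following into xsync

#!/bin/bash

# 1. Check the number of arguments
if [ $# -lt 1 ]; then
    echo "Not Enough Argument!"
    exit 1
fi

# 2. Loop over every machine in the cluster
for host in master cluster1; do
    echo ==================== $host ====================
    # 3. Loop over all given files/directories and send them one by one
    for file in "$@"; do
        # 4. Check that the file exists
        if [ -e "$file" ]; then
            # 5. Get the absolute parent directory (symlinks resolved)
            pdir=$(cd -P "$(dirname "$file")"; pwd)
            # 6. Get the file name
            fname=$(basename "$file")
            ssh "$host" "mkdir -p $pdir"
            rsync -av "$pdir/$fname" "$host:$pdir"
        else
            echo "$file does not exist!"
        fi
    done
done

# Save and quit

# 3. Make it executable

chmod +x xsync

# Usage: run it from the directory that contains the file or folder

xsync FILE

# For example, to sync /home/hadoop, run this in /home:

xsync hadoop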

# Example

[root@master hadoop-3.3.1]# xsync etc/

==================== master ====================

sending incremental file list

sent 1,029 bytes received 19 bytes 2,096.00 bytes/sec

total size is 113,986 speedup is 108.77

==================== cluster1 ====================

sending incremental file list

etc/hadoop/

etc/hadoop/core-site.xml

etc/hadoop/hdfs-site.xml

sent 1,392 bytes received 94 bytes 990.67 bytes/sec

total size is 113,986 speedup is 76.71

```
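The two lines in `xsync` that compute `pdir` and `fname` are what let it be run from any directory: `dirname` plus `cd -P ...; pwd` turns a relative argument into an absolute, symlink-resolved parent directory, and `rsync` then copies the file to that same absolute path on the other host. Pulled out into a small function (the name `resolve_target` is just for illustration), the logic is:

```bash
#!/usr/bin/env bash
# Same path resolution as in xsync, isolated for illustration.
resolve_target() {
  local file="$1" pdir fname
  # Absolute parent directory, with symlinks resolved (-P)
  pdir=$(cd -P "$(dirname "$file")" && pwd)
  # Last path component; a trailing slash is dropped, so "etc/" becomes "etc"
  fname=$(basename "$file")
  echo "$pdir/$fname"
}
```

Run in `/home/hadoop/hadoop-3.3.1` (with no symlinks in that path), `resolve_target etc/` should print `/home/hadoop/hadoop-3.3.1/etc`. Because rsync receives the directory without a trailing slash, it recreates `etc` itself under the same parent on the other host, which matches the `etc/hadoop/...` paths in the example output above.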

## 4. Download JDK 1.8 and Hadoop 3.3.1

### 1. Upload the files to the cloud servers with a client tool. I used SFTP in Terminus to upload them to /root

JDK8下載連結:https://www.oracle.com/java/technologies/downloads/#java8

Hadoop3.3.1下載連結:https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1.tar.gz

### 2. Extract them to /home/java and /home/hadoop

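```bash
# tar -C needs the target directories to exist
mkdir -p /home/java /home/hadoop

# Use the actual name of the JDK archive you downloaded
tar -zxvf jdk1.8.tar.gz -C /home/java

tar -zxvf hadoop-3.3.1.tar.gz -C /home/hadoop

# The JDK extracts to a versioned directory (e.g. jdk1.8.0_212); rename it to match JAVA_HOME below
mv /home/java/jdk1.8.0_212 /home/java/jdk1.8

# Copy both directories to cluster1 (run in /home)
cd /home && xsync java hadoop
```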

### 3. Set the environment variables

```bash
# Create /etc/profile.d/my_env.sh
vim /etc/profile.d/my_env.sh

# Put the following into my_env.sh

export PATH=/bin:/usr/bin:$PATH

#Java Configuration
export JAVA_HOME=/home/java/jdk1.8
export JRE_HOME=/home/java/jdk1.8/jre
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

#Hadoop Configuration
export HADOOP_HOME=/home/hadoop/hadoop-3.3.1
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

# A single PATH line for both (a second one would only add duplicate entries);
# no "." entry, since the current directory on root's PATH is a security risk
export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:$PATH

# Save and quit, then load the variables into the current shell and copy the file to cluster1
source /etc/profile
xsync /etc/profile.d/my_env.sh
```

#### Verification

```bash

# Java check

[root@master hadoop-3.3.1]# java -version

java version "1.8.0_212"

Java(TM) SE Runtime Environment (build 1.8.0_212-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)

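# Hadoop check: typing "hado" and pressing Tab should complete to "hadoop",
# and this should print the Hadoop version
hadoop version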

```

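## 5. Hadoop configuration files

**In the /home/hadoop/hadoop-3.3.1/etc/hadoop directory (edit the files on master, then sync them to cluster1 with xsync, as in the example above)**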

```bash

[root@master hadoop]# vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<!-- I run as root, so the path is under /root; change it if your directory is not under /root -->

<value>/root/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<!-- Static user for the web UI; needed when uploading files to HDFS -->

<value>root</value>

</property>

</configuration>

```

```bash

[root@master hadoop]# vim hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<!-- I run as root, so the path is under /root; change it if your directory is not under /root -->

<value>/root/hadoop/dfs/name</value>

<description>Path on the local filesystem where the NameNode stores the namespace and transaction logs persistently.</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/root/hadoop/dfs/data</value>

<description>Comma-separated list of paths on the local filesystem of a DataNode where it should store its blocks.</description>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

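<!-- If this is not set, your hostname must be mapped correctly in the hosts file. Hadoop 3's default web UI port is 9870; this moves it to 50070 -->

<name>dfs.namenode.http-address</name>

<value>0.0.0.0:50070</value>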

</property>

</configuration>

```

```bash

[root@master hadoop]# vim yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<description>The address of the scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>The https address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<description>The address of the RM admin interface.</description>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>8192</value>

<description>Maximum memory, in MB, that a single container request can be allocated; the default is 8192 MB</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

```

```bash

[root@master hadoop]# vim mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

```
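The configuration above does not show `etc/hadoop/workers`. In Hadoop 3.x, `start-dfs.sh` and `start-yarn.sh` read this file to decide on which hosts to start DataNodes and NodeManagers, and by default it contains only `localhost`. For this two-machine setup it would presumably list both hosts. A minimal sketch; the helper name `write_workers` is hypothetical:

```bash
#!/usr/bin/env bash
# Overwrite the workers file in the config directory given as $1
# with the remaining arguments, one hostname per line.
write_workers() {
  local conf_dir="$1"
  shift
  printf '%s\n' "$@" > "$conf_dir/workers"
}
```

Usage: `write_workers /home/hadoop/hadoop-3.3.1/etc/hadoop master cluster1`, then `xsync /home/hadoop/hadoop-3.3.1/etc/hadoop/workers` to copy it to cluster1.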

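### **Because I run everything as root, the following variables must be added, otherwise Hadoop will not start**

In the /home/hadoop/hadoop-3.3.1/sbin directory:

add the following at the top of both start-dfs.sh and stop-dfs.sh (right below the shebang line):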

```bash

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

```

Likewise, add the following at the top of start-yarn.sh and stop-yarn.sh:

```bash

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

# (the file's original content continues below, starting with its license header:)
# Licensed to the Apache Software Foundation (ASF) under one or more

```
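As an alternative to editing the four `sbin` scripts, Hadoop 3.x also reads these user variables from `etc/hadoop/hadoop-env.sh`, which every start/stop script sources, so a single edit covers all of them. A sketch assuming the same run-as-root setup; the helper name `append_root_users` is hypothetical, and only the `HDFS_*_USER` and `YARN_*_USER` variables from the blocks above are included:

```bash
#!/usr/bin/env bash
# Append the run-as-root user variables to the hadoop-env.sh file given as $1.
append_root_users() {
  cat >> "$1" <<'EOF'
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
EOF
}
```

Usage: `append_root_users /home/hadoop/hadoop-3.3.1/etc/hadoop/hadoop-env.sh`, then sync the file with `xsync`.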

## 6. Start Hadoop

### Initialization

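```bash
cd /home/hadoop/hadoop-3.3.1/bin

# Format the NameNode once, on master only. DataNodes are not formatted;
# they initialize their storage directories when they first start.
./hdfs namenode -format

# To re-initialize, stop the cluster first and delete these directories on every node,
# otherwise the DataNodes keep the old cluster ID and fail to register:
#   /root/hadoop/tmp
#   /root/hadoop/dfs
#   /home/hadoop/hadoop-3.3.1/logs
```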

```bash

[root@master hadoop-3.3.1]# sbin/start-all.sh

# Or start the services step by step: sbin/start-dfs.sh

# then sbin/start-yarn.sh

```
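After starting, `jps` (shipped with the JDK) lists the running Java daemons on each node. With the configuration in this article, master would be expected to show NameNode, SecondaryNameNode and ResourceManager (plus DataNode and NodeManager if master is also listed in `workers`), and cluster1 DataNode and NodeManager. A small sketch that compares jps output against that expectation; the function name `missing_daemons` is hypothetical:

```bash
#!/usr/bin/env bash
# Print each expected daemon name (arguments 2..n) that does not appear
# in the jps output given as $1. No output means everything is running.
missing_daemons() {
  local jps_output="$1" daemon
  shift
  for daemon in "$@"; do
    # -w: match whole words, so "NameNode" is not satisfied by "SecondaryNameNode"
    if ! grep -qw "$daemon" <<< "$jps_output"; then
      echo "$daemon"
    fi
  done
}
```

Usage: `missing_daemons "$(ssh cluster1 'source /etc/profile; jps')" DataNode NodeManager`. Sourcing the profile is there because a non-interactive SSH session may not load `/etc/profile.d/my_env.sh`.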

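### Check the cluster status from the command line

`hdfs dfsadmin -report` (the older `hadoop dfsadmin -report` form still works but is deprecated)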

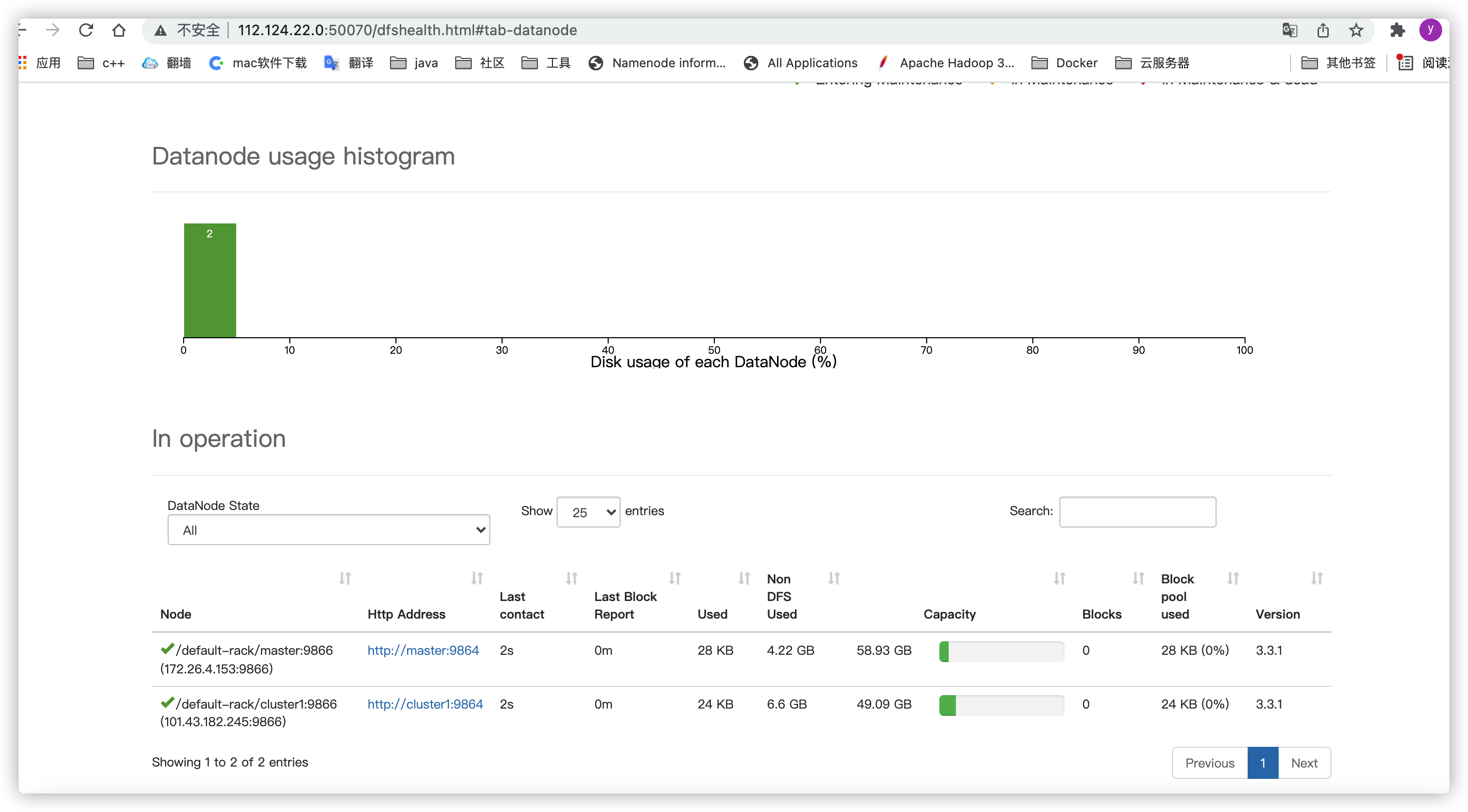

### web訪問

公網ip加設定的埠(公網ip:50070)

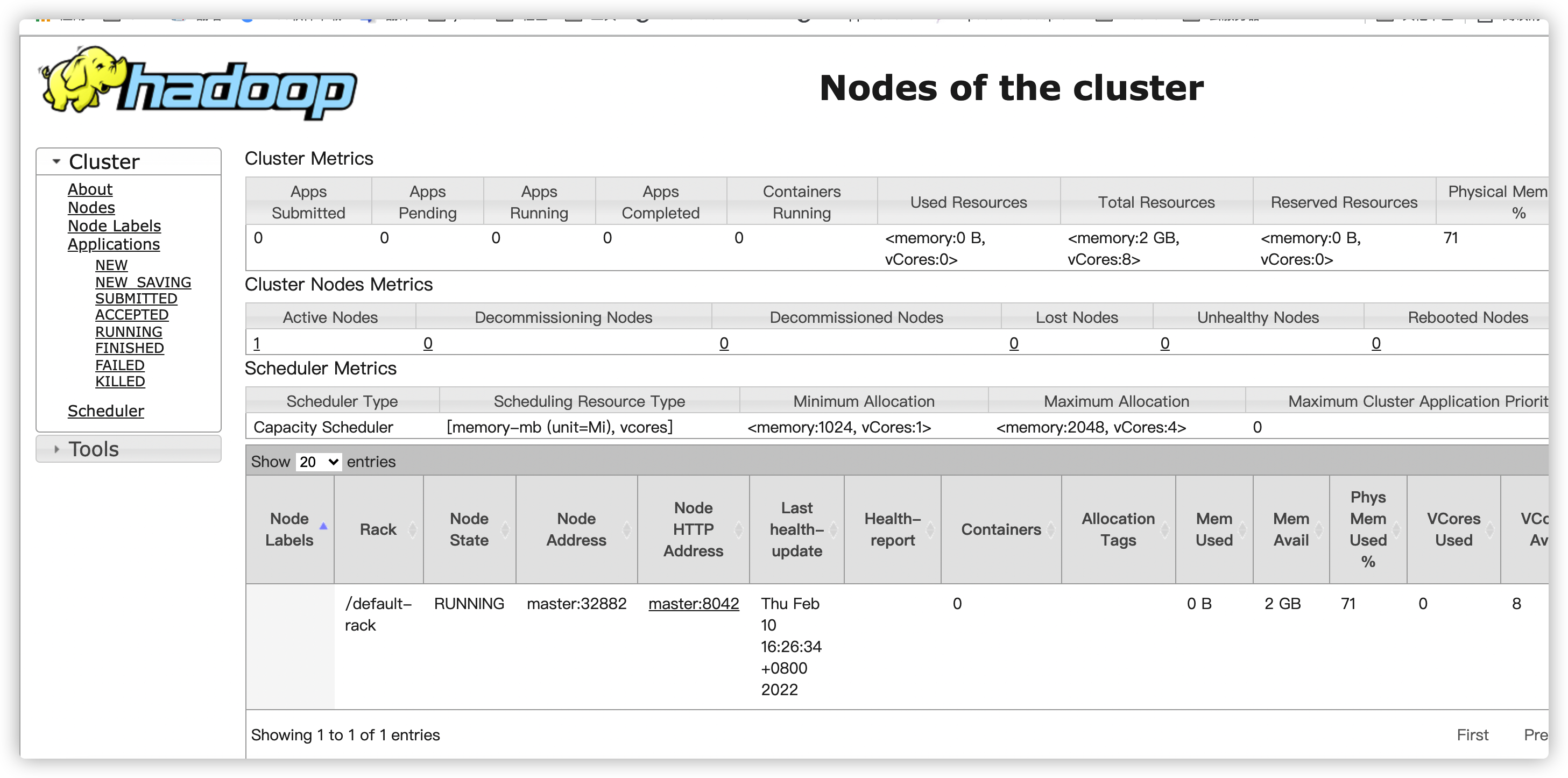

(公網ip:8088)

問題:Active Nodes 只有1 目前還不知道怎麼解決,如果有大佬會,能不能麻煩告訴下。
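This is not confirmed, but a frequent cause with servers on different clouds is that the NodeManager on cluster1 cannot reach the ResourceManager on master (port 8031 in the yarn-site.xml above). That usually happens because the port is not open in the provider's security group or firewall, or because the `workers` file lists only one host. One way to test reachability is bash's built-in `/dev/tcp` redirection. The helper below is a sketch, and the ports in the usage note are simply the ones configured in this article:

```bash
#!/usr/bin/env bash
# Report whether a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's /dev/tcp pseudo-device and the coreutils `timeout` command.
check_port() {
  local host="$1" port="$2"
  if timeout 3 bash -c ": < /dev/tcp/$host/$port" 2> /dev/null; then
    echo "$host:$port open"
  else
    echo "$host:$port closed"
  fi
}
```

For example, on cluster1: `for port in 9000 8030 8031 8032; do check_port master "$port"; done`. On master: `check_port cluster1 9866` (9866 is the Hadoop 3 default DataNode data-transfer port).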

Reference: https://www.cnblogs.com/sandaman2019/p/15513391.html

Reference: Atguigu (尚硅谷) Hadoop video course on Bilibili: xsync script setup, Hadoop startup and initialization