Implementing a Neural Network Model with TensorFlow

阿新 • Published: 2017-05-09


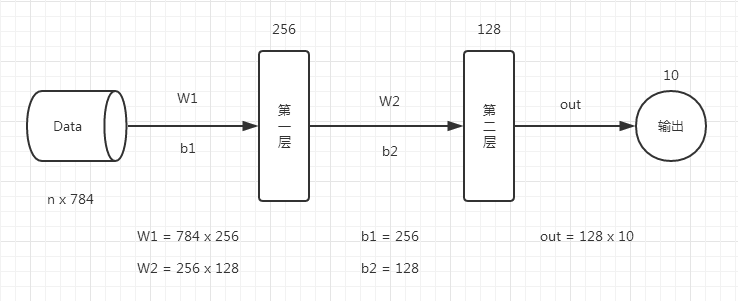

First, take a look at the neural network model: a fairly simple network with two hidden layers.
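To make the layer shapes concrete, here is a minimal NumPy sketch of the forward pass of such a network, using the layer sizes from the TensorFlow code in this post (784 inputs, sigmoid hidden layers of 256 and 128 units, 10 output logits). It is an illustration, not TensorFlow code: the helper names `sigmoid` and `forward` and the random fake input batch are hypothetical.

```python
import numpy as np

def sigmoid(z):
    # Logistic sigmoid, applied element-wise
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, weights, biases):
    # Hidden layer 1: (batch, 784) @ (784, 256) -> (batch, 256)
    h1 = sigmoid(X @ weights['w1'] + biases['b1'])
    # Hidden layer 2: (batch, 256) @ (256, 128) -> (batch, 128)
    h2 = sigmoid(h1 @ weights['w2'] + biases['b2'])
    # Output layer: raw scores (logits), no activation -> (batch, 10)
    return h2 @ weights['out'] + biases['out']

rng = np.random.default_rng(0)
n_input, n_hidden_1, n_hidden_2, n_classes = 784, 256, 128, 10
stddev = 0.1
# Weights drawn from N(0, 0.1^2); biases from N(0, 1), like tf.random_normal's default
weights = {
    'w1': rng.normal(0.0, stddev, (n_input, n_hidden_1)),
    'w2': rng.normal(0.0, stddev, (n_hidden_1, n_hidden_2)),
    'out': rng.normal(0.0, stddev, (n_hidden_2, n_classes)),
}
biases = {
    'b1': rng.normal(0.0, 1.0, n_hidden_1),
    'b2': rng.normal(0.0, 1.0, n_hidden_2),
    'out': rng.normal(0.0, 1.0, n_classes),
}

X = rng.random((5, n_input))  # hypothetical batch of 5 fake "images", pixels in [0, 1)
logits = forward(X, weights, biases)
print(logits.shape)  # (5, 10)
```

Each row of `logits` holds one unnormalized score per digit class; the softmax is applied later, inside the loss.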

The code is as follows:

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder / tf.Session)
from tensorflow.examples.tutorials.mnist import input_data

# Load MNIST with one-hot labels. The original post uses `mnist` without showing
# how it is loaded; this loader call and the "data/" directory are assumptions.
mnist = input_data.read_data_sets("data/", one_hot=True)

# Network parameters
n_hidden_1 = 256  # neurons in the first hidden layer
n_hidden_2 = 128  # neurons in the second hidden layer
n_input = 784     # input size: a 28*28 grayscale image (color adds nothing here)
n_classes = 10    # number of output classes (digits 0-9)

# Inputs and outputs
x = tf.placeholder("float", [None, n_input])
y = tf.placeholder("float", [None, n_classes])

# Weights and biases
stddev = 0.1
weights = {
    'w1': tf.Variable(tf.random_normal([n_input, n_hidden_1], stddev=stddev)),
    'w2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2], stddev=stddev)),
    'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes], stddev=stddev))
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_classes]))
}
print("NETWORK READY")

def multilayer_perceptron(_X, _weights, _biases):
    # Layer 1 = activation(add_bias(matmul(input, W1), b1))
    layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(_X, _weights['w1']), _biases['b1']))
    # Layer 2 has the same form; its input is the output of layer 1
    layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, _weights['w2']), _biases['b2']))
    # Return the predictions as unnormalized logits (softmax is applied in the loss)
    return tf.matmul(layer_2, _weights['out']) + _biases['out']

# Prediction
pred = multilayer_perceptron(x, weights, biases)

# Loss and optimizer (TF >= 1.0 requires the labels= / logits= keyword arguments)
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=pred))
optm = tf.train.GradientDescentOptimizer(learning_rate=0.001).minimize(cost)
corr = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accr = tf.reduce_mean(tf.cast(corr, "float"))

# Initialization
init = tf.global_variables_initializer()
print("FUNCTIONS READY")

# Training settings
training_epochs = 20
batch_size = 100
display_step = 4

# Launch the graph
sess = tf.Session()
sess.run(init)

# Optimization loop
for epoch in range(training_epochs):
    avg_cost = 0.
    total_batch = int(mnist.train.num_examples / batch_size)
    # Iterate over mini-batches
    for i in range(total_batch):
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        feeds = {x: batch_xs, y: batch_ys}
        sess.run(optm, feed_dict=feeds)
        avg_cost += sess.run(cost, feed_dict=feeds)
    avg_cost = avg_cost / total_batch
    # Print results
    if (epoch + 1) % display_step == 0:
        print("Epoch: %03d/%03d cost: %.9f" % (epoch, training_epochs, avg_cost))
        # Training accuracy is measured on the last mini-batch only
        feeds = {x: batch_xs, y: batch_ys}
        train_acc = sess.run(accr, feed_dict=feeds)
        print("TRAIN ACCURACY: %.3f" % (train_acc))
        feeds = {x: mnist.test.images, y: mnist.test.labels}
        test_acc = sess.run(accr, feed_dict=feeds)
        print("TEST ACCURACY: %.3f" % (test_acc))
print("OPTIMIZATION FINISHED")
```
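The `cost` and `accr` ops above can be checked by hand. The NumPy sketch below mirrors, assuming one-hot labels, the math behind them: a softmax over the logits followed by cross-entropy (what `tf.nn.softmax_cross_entropy_with_logits` computes per example), then the mean over the batch; and the fraction of rows whose `argmax` prediction matches the label. The names `softmax_xent` and `accuracy` are hypothetical re-implementations, not TensorFlow's API.

```python
import numpy as np

def softmax_xent(labels, logits):
    # Subtract the row-wise max for numerical stability (leaves the softmax unchanged)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Per-example loss: -sum_k y_k * log(softmax(logits)_k)
    return -(labels * log_probs).sum(axis=1)

def accuracy(labels, logits):
    # Fraction of rows where the predicted class (argmax of logits) equals the true class
    return np.mean(np.argmax(logits, axis=1) == np.argmax(labels, axis=1))

logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.3, 3.0]])
labels = np.array([[1.0, 0.0, 0.0],   # one-hot: class 0
                   [0.0, 1.0, 0.0]])  # one-hot: class 1
cost = np.mean(softmax_xent(labels, logits))  # like tf.reduce_mean(...)
acc = accuracy(labels, logits)
print(acc)  # 0.5
```

The first row is classified correctly and the second is not, so the accuracy is 0.5; the cost stays positive because no predicted probability for the true class is exactly 1.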
