MySQL High-Availability Cluster Architecture: MHA

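MHA (Master High Availability) is currently a relatively mature solution for MySQL high availability. It was developed by youshimaton of the Japanese company DeNA (now at Facebook). It is an excellent piece of software for failover and slave-to-master promotion in MySQL high-availability environments. During a MySQL failover, MHA can complete the database failover automatically within 0 to 30 seconds. While doing so, it preserves data consistency as far as possible, which achieves high availability in the true sense.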

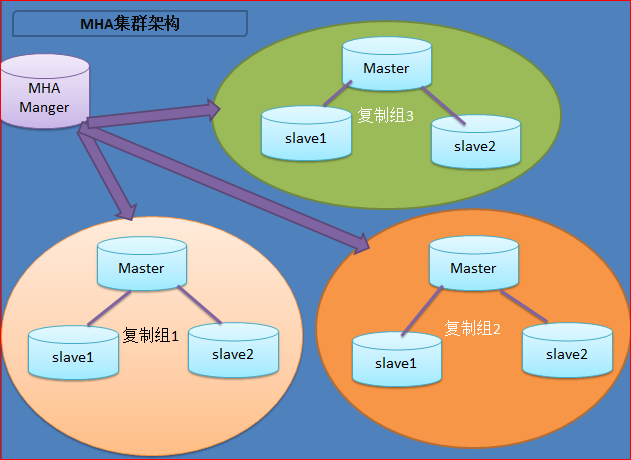

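MHA has two roles: MHA Node (the data node) and MHA Manager (the management node).

MHA Manager can be deployed on a dedicated machine to manage multiple master-slave clusters, or it can be deployed on a

During automatic failover, MHA tries to save the binary logs from the crashed master to minimize data loss, but this is not always feasible. For example, if the master has a hardware failure or cannot be reached via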

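Note: starting with MySQL 5.5, MySQL supports semi-synchronous replication through a plugin. To understand semi-synchronous replication, first look at asynchronous and fully synchronous replication:

Asynchronous replication

MySQL replication is asynchronous by default. After executing a transaction committed by a client, the master returns the result to the client immediately. It does not check whether any slave has received and processed the transaction. This creates a problem: if the master

Fully synchronous replication

The master returns to the client only after every slave has also executed the transaction. Because it has to wait for all slaves, fully synchronous replication inevitably pays a heavy performance cost.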

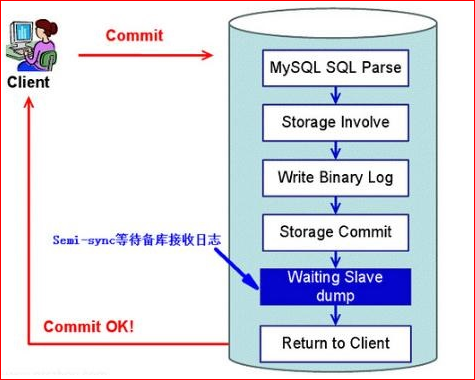

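Semisynchronous replication

Semi-synchronous replication sits between asynchronous and fully synchronous replication. After executing a transaction committed by a client, the master does not return immediately. Instead, it waits until at least one slave has received the transaction and written it to its relay log. Compared with asynchronous replication, this improves data safety. It also adds latency of at least one TCP/IP round trip, so semi-synchronous replication is best used on low-latency networks.

The principle diagram of semi-synchronous replication:

Summary: asynchronous vs. semi-synchronous replication

By default MySQL replication is asynchronous. After the master writes its updates to the binlog, nothing guarantees that they have reached any slave. Asynchronous replication is efficient, but when the master or a slave fails there is a high risk that the data is out of sync, and data may even be lost.

MySQL 5.5 introduced semi-synchronous replication so that at least one slave holds complete data when the master fails. On timeout, the master can temporarily fall back to asynchronous replication so the application keeps working. It switches back to semi-synchronous mode once a slave has caught up.

How MHA works:

Compared with other HA software, MHA aims to keep the master of a MySQL replication setup highly available. Its key feature is that it reconciles the differing logs among multiple slaves so that all slaves end up consistent. It then chooses one of them as the new master and points the remaining slaves at it. The failover proceeds as follows: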

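- Save the binary log events (binlog events) from the crashed master.
- Identify the slave with the most recent updates.
- Apply the differential relay logs to the other slaves.
- Apply the binlog events saved from the master.
- Promote one slave to be the new master.
- Point the other slaves at the new master and resume replication.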
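Step 2 in the list above comes down to comparing how far each slave has read from the dead master's binlog. The bash function below sketches that comparison only. It is not MHA's actual code, which also compares executed positions and relay-log contents. It assumes you have already collected Master_Log_File and Read_Master_Log_Pos from `show slave status` on each slave, as lines of the form `host file pos`. The function name `pick_latest_slave` is hypothetical.

```bash
#!/usr/bin/env bash
# Simplified sketch: pick the slave that has read furthest into the old
# master's binlog. Input lines on stdin: "<host> <Master_Log_File> <Read_Master_Log_Pos>",
# e.g. "192.168.137.130 mysql-bin.000003 120".
pick_latest_slave() {
  local host file pos seq
  local best_host="" best_seq=-1 best_pos=-1
  while read -r host file pos; do
    [ -z "$host" ] && continue                  # skip blank lines
    seq=$((10#${file##*.}))                     # "mysql-bin.000003" -> 3 (force base 10)
    # Compare the binlog file number first, then the position inside that file.
    if (( seq > best_seq || (seq == best_seq && pos > best_pos) )); then
      best_host=$host best_seq=$seq best_pos=$pos
    fi
  done
  echo "$best_host"
}
```

For example, feeding it `192.168.137.130 mysql-bin.000003 1200` and `192.168.137.146 mysql-bin.000003 800` prints 192.168.137.130. The file number is compared first because the position starts again from a small value in every new binlog file.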

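MHA currently supports mainly a one-master, multiple-slave architecture. An MHA setup needs at least three database servers in the replication cluster: one master and two slaves. One server acts as the master, one as the standby (candidate) master, and one as a pure slave.

The deployment environment is as follows: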

| Role | IP | Hostname | OS |
| --- | --- | --- | --- |
| master | 192.168.137.134 | master | CentOS 6.5 x86_64 |
| Candidate | 192.168.137.130 | Candidate | |
| slave + manager | 192.168.137.146 | slave | |

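The master serves writes, the Candidate is the standby master, and the management node runs on the pure slave machine. If the master goes down, the Candidate is promoted to master.

I. Basic environment preparation

1. Configure the yum repositories (CentOS base and EPEL) on all three machines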

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-6.repo

rpm -ivh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

2. Set up password-less SSH login

ssh-keygen -t rsa -P ''

chmod 600 .ssh/*

cat .ssh/id_rsa.pub >.ssh/authorized_keys

scp -p .ssh/id_rsa .ssh/authorized_keys 192.168.137.130:/root/.ssh

scp -p .ssh/id_rsa .ssh/authorized_keys 192.168.137.146:/root/.ssh
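Before running masterha_check_ssh later, a quick loop can confirm that key-based login works from the current host to every node. This is a hypothetical helper, not part of MHA. It relies on ssh's BatchMode option, so a missing key makes ssh fail instead of prompting for a password. The SSH_CMD and SSH_USER variables are illustrative overrides; SSH_CMD is handy for a dry run.

```bash
#!/usr/bin/env bash
# Check password-less SSH to each host given as an argument.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# SSH_CMD / SSH_USER are hypothetical overrides (defaults: ssh / root).
check_ssh_hosts() {
  local ssh_cmd=${SSH_CMD:-ssh} user=${SSH_USER:-root} host rc=0
  for host in "$@"; do
    if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_ssh_hosts 192.168.137.134 192.168.137.130 192.168.137.146` on each of the three machines, because MHA expects every node to reach every other node over SSH.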

II. Configure MySQL semi-synchronous replication

Note: setting up basic MySQL master-slave replication is not demonstrated here.

Grants on the master:

grant replication slave,replication client on *.* to 'repl'@'%' identified by '123456';

grant all on *.* to 'mhauser'@'%' identified by '123456';

Grants on the Candidate:

grant replication slave,replication client on *.* to 'repl'@'%' identified by '123456';

grant all on *.* to 'mhauser'@'%' identified by '123456';

Grants on the slave:

grant all on *.* to 'mhauser'@'%' identified by '123456';
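The grants above follow a simple pattern. Every node needs the account MHA uses for monitoring. Every node that may become master (the master and the Candidate) also needs the replication account. The sketch below prints the statements for a given role. It assumes the account names repl and mhauser and the password 123456 from app1.cnf later in this article; you can override them with the hypothetical REPL_USER, MHA_USER and DB_PASS variables. `grant ... identified by` is the MySQL 5.x syntax used here; MySQL 8.0 requires a separate `create user` first.

```bash
#!/usr/bin/env bash
# Print the GRANT statements each role needs, following the pattern above.
# Account names and password are assumptions matching repl_user/user in app1.cnf.
REPL_USER=${REPL_USER:-repl}
MHA_USER=${MHA_USER:-mhauser}
DB_PASS=${DB_PASS:-123456}

grants_for_role() {
  local role=$1
  case "$role" in
    master|candidate)
      # Any node that may become master must accept replication connections.
      echo "grant replication slave, replication client on *.* to '$REPL_USER'@'%' identified by '$DB_PASS';"
      echo "grant all on *.* to '$MHA_USER'@'%' identified by '$DB_PASS';"
      ;;
    slave)
      # A no_master=1 node only needs the MHA monitoring account.
      echo "grant all on *.* to '$MHA_USER'@'%' identified by '$DB_PASS';"
      ;;
    *)
      echo "unknown role: $role" >&2
      return 1
      ;;
  esac
}
```

For example, `grants_for_role candidate | mysql -uroot -p` would apply the Candidate's grants.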

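With MySQL's default asynchronous mode, data can be lost when the master goes down because of a hardware failure. It is therefore recommended to configure MySQL semi-synchronous replication together with MHA.

Note: the MySQL semi-synchronous plugins were contributed by Google and live in /usr/local/mysql/lib/plugin/. semisync_master.so is used by the master and semisync_slave.so by the slave.

mysql> show variables like '%plugin_dir%';
+---------------+------------------------------+
| Variable_name | Value                        |
+---------------+------------------------------+
| plugin_dir    | /usr/local/mysql/lib/plugin/ |
+---------------+------------------------------+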

1. Install the plugins on every node (master, Candidate, slave)

Installing a plugin requires that the server supports dynamic loading. Check it as follows:

mysql> show variables like '%have_dynamic_loading%';
+----------------------+-------+
| Variable_name        | Value |
+----------------------+-------+
| have_dynamic_loading | YES   |
+----------------------+-------+

On every MySQL server, install both semi-synchronous plugins (semisync_master.so and semisync_slave.so):

mysql> install plugin rpl_semi_sync_master soname 'semisync_master.so';

mysql> install plugin rpl_semi_sync_slave soname 'semisync_slave.so';

Check that the plugins are installed correctly:

mysql> show plugins;

or

mysql> select * from information_schema.plugins;

View the semi-synchronous variables:

mysql> show variables like '%rpl_semi_sync%';
| rpl_semi_sync_master_enabled       | OFF   |
| rpl_semi_sync_master_timeout       | 10000 |
| rpl_semi_sync_master_trace_level   | 32    |
| rpl_semi_sync_master_wait_no_slave | ON    |
| rpl_semi_sync_slave_enabled        | OFF   |
| rpl_semi_sync_slave_trace_level    | 32    |

The output above shows that the semi-synchronous plugins are installed but not yet enabled, which is why they are OFF.

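2. Edit my.cnf to configure master-slave replication:

Note: the master MySQL server may already exist, with the slave servers added later. In that case, copy the databases to be replicated from the master to each slave before configuring replication. For example, back up the databases on the master and restore the backup on the slave.

On the master: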

server-id = 1

log-bin=mysql-bin

binlog_format=mixed

log-bin-index=mysql-bin.index

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=10000

rpl_semi_sync_slave_enabled=1

relay_log_purge=0

relay-log= relay-bin

relay-log-index = relay-bin.index

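Notes:

rpl_semi_sync_master_enabled=1: 1 enables semi-synchronous replication, 0 disables it.

rpl_semi_sync_master_timeout=10000: the value is in milliseconds. If the master has waited 10 seconds for an acknowledgement, it stops waiting and switches to asynchronous replication.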
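The timeout rule is a simple decision. The toy model below is a hypothetical helper, not MySQL code. It only illustrates the behaviour described above. If a slave acknowledges within rpl_semi_sync_master_timeout milliseconds, the commit returns after that acknowledgement. Otherwise the commit returns after the timeout, and the master carries on asynchronously until a slave catches up.

```bash
#!/usr/bin/env bash
# Toy model of rpl_semi_sync_master_timeout (milliseconds).
# $1 = delay of the fastest slave ACK in ms, or "none" if no slave answers
# $2 = timeout in ms (default 10000, as in the my.cnf above)
semi_sync_outcome() {
  local ack=$1 timeout=${2:-10000}
  if [ "$ack" != "none" ] && [ "$ack" -le "$timeout" ]; then
    echo "semi-sync: commit returned after ${ack} ms"
  else
    echo "async fallback: commit returned after ${timeout} ms"
  fi
}
```

On a real server, the fallback shows up as the Rpl_semi_sync_master_status status variable changing from ON to OFF.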

On the Candidate:

server-id = 2

log-bin=mysql-bin

binlog_format=mixed

log-bin-index=mysql-bin.index

relay_log_purge=0

relay-log= relay-bin

relay-log-index = slave-relay-bin.index

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=10000

rpl_semi_sync_slave_enabled=1

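Note: relay_log_purge=0 stops the SQL thread from automatically deleting each relay log once it has been applied. In an MHA setup, recovering a lagging slave may depend on the relay logs of other slaves, so automatic deletion is disabled.

On the slave:

server-id = 3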

log-bin = mysql-bin

relay-log = relay-bin

relay-log-index = slave-relay-bin.index

read_only = 1

rpl_semi_sync_slave_enabled = 1
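Some mistakes in these files, such as a duplicated server-id or a missing relay_log_purge=0 on a candidate, only show up later as a failed masterha_check_repl. It can therefore help to lint the files first. The functions below are hypothetical helpers. They do a plain key=value scan: section headers are ignored, !include is not understood, option names are lowercased, and '-' and '_' are treated alike as MySQL does. The list of required keys per role is an assumption based on the fragments above.

```bash
#!/usr/bin/env bash
# Minimal lint for the my.cnf fragments above (plain key=value scan).
cnf_get() {
  # Print the value of option $1 in file $2; '-' and '_' are treated alike.
  local want=${1//-/_}
  awk -F= -v k="$want" '
    /^[[:space:]]*(#|;|\[)/ { next }          # comments and [section] lines
    NF >= 2 {
      key = $1
      gsub(/[[:space:]]/, "", key)
      gsub(/-/, "_", key)
      if (tolower(key) == k) {
        val = $2
        gsub(/[[:space:]]/, "", val)
        print val
        exit
      }
    }' "$2"
}

# Usage: check_mycnf <master|candidate|slave> <file>
check_mycnf() {
  local role=$1 file=$2 rc=0 key
  local required=(server_id log_bin)
  case "$role" in
    master|candidate) required+=(relay_log_purge rpl_semi_sync_master_enabled rpl_semi_sync_slave_enabled) ;;
    slave)            required+=(read_only rpl_semi_sync_slave_enabled) ;;
  esac
  for key in "${required[@]}"; do
    if [ -z "$(cnf_get "$key" "$file")" ]; then
      echo "missing: $key"
      rc=1
    fi
  done
  # Nodes that may become master keep relay logs for MHA recovery.
  if [ "$role" != slave ] && [ "$(cnf_get relay_log_purge "$file")" != 0 ]; then
    echo "relay_log_purge should be 0"
    rc=1
  fi
  return "$rc"
}
```

For example, `check_mycnf candidate /etc/my.cnf` prints nothing and returns 0 when the Candidate's file contains every key shown above.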

View the semi-synchronous variables:

mysql> show variables like '%rpl_semi_sync%';

View the semi-synchronous status:

mysql> show status like '%rpl_semi_sync%';

| Rpl_semi_sync_master_clients | 2 |

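Key status variables to watch:

rpl_semi_sync_master_status: whether the master is currently in asynchronous or semi-synchronous mode

rpl_semi_sync_master_clients: how many slaves are configured for semi-synchronous replication

rpl_semi_sync_master_yes_tx: number of commits successfully acknowledged by a slave

rpl_semi_sync_master_no_tx: number of commits not acknowledged by a slave

rpl_semi_sync_master_tx_avg_wait_time: average extra time a transaction waits because semi-sync is enabled

rpl_semi_sync_master_net_avg_wait_time: average network wait time after a transaction enters the wait queue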
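These counters can also be checked from a script. The sketch below assumes you pipe in the output of `mysql -N -B -e "show status like 'Rpl_semi_sync_master%'"`, which is tab-separated name/value pairs without a header. The function name and the three warning rules are illustrative choices, not MySQL defaults.

```bash
#!/usr/bin/env bash
# Summarise semi-sync health from tab-separated "name<TAB>value" lines on stdin, e.g.
#   mysql -N -B -e "show status like 'Rpl_semi_sync_master%'" | semi_sync_report
semi_sync_report() {
  awk -F'\t' '
    { v[tolower($1)] = $2 }
    END {
      status  = v["rpl_semi_sync_master_status"]
      clients = v["rpl_semi_sync_master_clients"] + 0
      no_tx   = v["rpl_semi_sync_master_no_tx"] + 0
      printf "status=%s clients=%d no_tx=%d\n", status, clients, no_tx
      if (status != "ON") print "WARN: master is running in asynchronous mode"
      if (clients == 0)   print "WARN: no semi-sync slave connected"
      if (no_tx > 0)      print "WARN: some commits were not acknowledged by any slave"
    }'
}
```

A healthy master with two semi-sync slaves prints only the summary line, for example `status=ON clients=2 no_tx=0`.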

III. Configure MHA

1. Install on all MySQL nodes:

rpm -ivh perl-DBD-MySQL-4.013-3.el6.i686.rpm    (or: yum -y install perl-DBD-MySQL)

rpm -ivh mha4mysql-node-0.56-0.el6.noarch.rpm

2. Install the Perl packages the manager depends on:

rpm -ivh perl-Config-Tiny-2.12-7.1.el6.noarch.rpm

rpm -ivh perl-DBD-MySQL-4.013-3.el6.i686.rpm    (or: yum -y install perl-DBD-MySQL)

rpm -ivh compat-db43-4.3.29-15.el6.x86_64.rpm

rpm -ivh perl-Mail-Sender-0.8.16-3.el6.noarch.rpm

rpm -ivh perl-Parallel-ForkManager-0.7.9-1.el6.noarch.rpm

rpm -ivh perl-TimeDate-1.16-11.1.el6.noarch.rpm

rpm -ivh perl-MIME-Types-1.28-2.el6.noarch.rpm

rpm -ivh perl-MailTools-2.04-4.el6.noarch.rpm

rpm -ivh perl-Email-Date-Format-1.002-5.el6.noarch.rpm

rpm -ivh perl-Params-Validate-0.92-3.el6.x86_64.rpm


rpm -ivh perl-MIME-Lite-3.027-2.el6.noarch.rpm

rpm -ivh perl-Mail-Sendmail-0.79-12.el6.noarch.rpm

rpm -ivh perl-Log-Dispatch-2.27-1.el6.noarch.rpm

yum install -y perl-Time-HiRes-1.9721-144.el6.x86_64

rpm -ivh mha4mysql-manager-0.56-0.el6.noarch.rpm

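3. Configure MHA

The configuration file lives on the manager node. It usually contains each MySQL server's hostname, the MySQL user name and password, the working directories, and so on.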

mkdir /etc/masterha/

vim /etc/masterha/app1.cnf

[server default]

user=mhauser

password=123456

manager_workdir=/data/masterha/app1

manager_log=/data/masterha/app1/manager.log

remote_workdir=/data/masterha/app1

ssh_user=root

repl_user=repl

repl_password=123456

ping_interval=1

[server1]

hostname=192.168.137.134

port=3306

master_binlog_dir=/usr/local/mysql/data

candidate_master=1

[server2]

hostname=192.168.137.130

port=3306

master_binlog_dir=/usr/local/mysql/data

candidate_master=1

[server3]

hostname=192.168.137.146

port=3306

master_binlog_dir=/usr/local/mysql/data

no_master=1
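The [serverN] blocks differ only in hostname and role, so they can be generated from a host list. The sketch below assumes a hypothetical input format of `host role` per line. The roles master and candidate get candidate_master=1, as in the file above, and slave gets no_master=1. The [server default] values are copied from the article's app1.cnf.

```bash
#!/usr/bin/env bash
# Generate an MHA app1.cnf from "host role" lines on stdin
# (role: master | candidate | slave). Values mirror the file above.
gen_app_cnf() {
  cat <<'EOF'
[server default]
user=mhauser
password=123456
manager_workdir=/data/masterha/app1
manager_log=/data/masterha/app1/manager.log
remote_workdir=/data/masterha/app1
ssh_user=root
repl_user=repl
repl_password=123456
ping_interval=1
EOF
  local n=0 host role
  while read -r host role; do
    [ -z "$host" ] && continue
    n=$((n + 1))
    printf '\n[server%d]\nhostname=%s\nport=3306\nmaster_binlog_dir=/usr/local/mysql/data\n' "$n" "$host"
    case "$role" in
      master|candidate) echo "candidate_master=1" ;;   # may be promoted
      slave)            echo "no_master=1" ;;          # never promoted
    esac
  done
}
```

For example, `printf '%s\n' "192.168.137.134 master" "192.168.137.130 candidate" "192.168.137.146 slave" | gen_app_cnf > /etc/masterha/app1.cnf` writes the same three server blocks as above. The file contains passwords, so keeping it readable only by root (chmod 600) is a sensible precaution.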

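Explanation of the relevant settings:

manager_workdir=/data/masterha/app1 // working directory of the manager

manager_log=/data/masterha/app1/manager.log // manager log file

user=mhauser // MySQL user the manager uses for monitoring

password=123456 // password of the monitoring user

ssh_user=root // user for SSH connections

repl_user=repl // replication user

repl_password=123456 // password of the replication user

ping_interval=1 // interval in seconds between the pings the manager sends to the master (default 3); after three consecutive attempts without a reply, failover starts automatically

master_binlog_dir=/usr/local/mysql/data // where the master stores its binlogs, so MHA can find them; here it is simply the MySQL data directory

candidate_master=1 // marks the host as a candidate master; when a master switch happens, this slave is preferred for promotion to master

no_master=1 // this host is never promoted to master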

Check that SSH trust between the nodes works:

masterha_check_ssh --conf=/etc/masterha/app1.cnf

Expected result: All SSH connection tests passed successfully.

Check that replication between the nodes is healthy:

masterha_check_repl --conf=/etc/masterha/app1.cnf

Expected result: MySQL Replication Health is OK.

If the check fails with the error Can't exec "mysqlbinlog" ......

fix it by running the following on every server:

ln -s /usr/local/mysql/bin/* /usr/local/bin/

Start the manager:

nohup /usr/bin/masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover > /etc/masterha/manager.log 2>&1 &

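--remove_dead_master_conf: after a master switch, the old master's IP is removed from the configuration file.

--ignore_last_failover: ignore the failover-complete file left by a previous failover. Without it, MHA will not fail over again within 8 hours.

--ignore_fail_on_start: by default, MHA cannot start when a slave node is down. With this option, MHA starts even if a node is down. (It is not used in the command above.)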
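Some operators prefer to check for the leftover flag file explicitly instead of passing --ignore_last_failover on every start. The function below is a hypothetical pre-start helper, not part of MHA. It assumes the `<manager_workdir>/<app>.failover.complete` naming used in this article. It either reports the file, or deletes it when called with --remove.

```bash
#!/usr/bin/env bash
# Refuse to (re)start MHA while a previous failover flag file is present.
# $1 = manager_workdir, $2 = application name (e.g. app1), $3 = optional "--remove"
failover_flag_check() {
  local flag="$1/$2.failover.complete"
  if [ ! -e "$flag" ]; then
    echo "ok: no $flag"
    return 0
  fi
  if [ "${3:-}" = "--remove" ]; then
    rm -f -- "$flag" && echo "removed: $flag"
    return 0
  fi
  echo "found: $flag (delete it or pass --remove before starting MHA)" >&2
  return 1
}
```

Usage example: `failover_flag_check /data/masterha/app1 app1 && nohup masterha_manager --conf=/etc/masterha/app1.cnf > /etc/masterha/manager.log 2>&1 &`.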

Stop MHA:

masterha_stop --conf=/etc/masterha/app1.cnf

Check the MHA status:

masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:45128) is running(0:PING_OK), master:192.168.137.134

4. Simulate a failover

Stop MySQL on the master:

/etc/init.d/mysqld stop

Check the MHA log /data/masterha/app1/manager.log:

----- Failover Report -----

app1: MySQL Master failover 192.168.137.134(192.168.137.134:3306) to 192.168.137.130(192.168.137.130:3306) succeeded
Master 192.168.137.134(192.168.137.134:3306) is down!
Check MHA Manager logs at zifuji:/data/masterha/app1/manager.log for details.
Started automated(non-interactive) failover.
The latest slave 192.168.137.130(192.168.137.130:3306) has all relay logs for recovery.
Selected 192.168.137.130(192.168.137.130:3306) as a new master.
192.168.137.130(192.168.137.130:3306): OK: Applying all logs succeeded.
192.168.137.146(192.168.137.146:3306): This host has the latest relay log events.
Generating relay diff files from the latest slave succeeded.
192.168.137.146(192.168.137.146:3306): OK: Applying all logs succeeded. Slave started, replicating from 192.168.137.130(192.168.137.130:3306)
192.168.137.130(192.168.137.130:3306): Resetting slave info succeeded.
Master failover to 192.168.137.130(192.168.137.130:3306) completed successfully.

Check the slave's replication status (mysql> show slave status\G on 192.168.137.146):

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.137.130

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000003

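Routine operations on the MHA Manager side

1) Check whether the following file exists, and delete it if it does.

After a master switch, the MHA manager service stops automatically and creates the file app1.failover.complete in manager_workdir (/data/masterha/app1/app1.failover.complete). Before starting MHA again, make sure this file does not exist.

find / -name 'app1.failover.complete'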

rm -f /data/masterha/app1/app1.failover.complete

2) Check the current MHA configuration:

# masterha_check_repl --conf=/etc/masterha/app1.cnf

3) Start MHA:

#nohup masterha_manager --conf=/etc/masterha/app1.cnf&>/etc/masterha/manager.log &

When a slave node is down, MHA cannot start by default. Adding --ignore_fail_on_start lets MHA start even if a node is down, as follows:

#nohup masterha_manager --conf=/etc/masterha/app1.cnf --ignore_fail_on_start&>/etc/masterha/manager.log &

4) Stop MHA: masterha_stop --conf=/etc/masterha/app1.cnf

5) Check the status:

# masterha_check_status --conf=/etc/masterha/app1.cnf

6) Check the log:

#tail -f /etc/masterha/manager.log

7) After a master switch, follow-up work on the old master

vim /etc/my.cnf

read_only=ON

relay_log_purge = 0

mysql> reset slave all;

mysql> reset master;

/etc/init.d/mysqld restart

mysql> CHANGE MASTER TO MASTER_HOST='192.168.137.130',MASTER_USER='repl',MASTER_PASSWORD='123456';

## set up replication from the new master

mysql> start slave;

masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:45950) is running(0:PING_OK), master:192.168.137.130

Note: when everything is normal, the output shows "PING_OK". Otherwise it shows "NOT_RUNNING", which means MHA monitoring is not running.
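For monitoring, the one-line output of masterha_check_status can be parsed. The sketch below is a hypothetical helper built around the sample line shown in this article, `... is running(0:PING_OK), master:<ip>`, and the NOT_RUNNING case. Other states may exist, so the function returns success only for PING_OK.

```bash
#!/usr/bin/env bash
# Parse one line of masterha_check_status output.
# Prints "<state> <master-ip>" and returns 0 only when the state is PING_OK.
parse_mha_status() {
  local line=$1 state master
  # State is the word after "<digits>:" inside parentheses, e.g. "(0:PING_OK)".
  state=$(sed -n 's/.*([0-9]*:\([A-Z_]*\)).*/\1/p' <<<"$line")
  master=$(sed -n 's/.*master:\([0-9.]*\).*/\1/p' <<<"$line")
  if [ -z "$state" ] && [[ $line == *NOT_RUNNING* ]]; then
    state=NOT_RUNNING
  fi
  echo "${state:-UNKNOWN} ${master:--}"
  [ "$state" = PING_OK ]
}
```

For example, `parse_mha_status "$(masterha_check_status --conf=/etc/masterha/app1.cnf)"` could feed a cron-driven alert.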

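Purge relay logs periodically

The replication configuration sets relay_log_purge=0 on the slaves, so relay logs have to be purged periodically on the slave nodes. It is advisable to stagger the purge time on each slave.

crontab -e

0 5 * * * /usr/local/bin/purge_relay_logs --user=root --password=pwd123 --port=3306 --disable_relay_log_purge >>/var/log/purge_relay.log 2>&1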
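Since the purge time should be staggered on each slave, the crontab entry can be generated per node. The sketch below shifts the start time by a hypothetical 10 minutes per node index, starting at 05:00 as in the line above. The purge_relay_logs options are the ones from that crontab line, and the password pwd123 is the article's placeholder, which you should replace.

```bash
#!/usr/bin/env bash
# Emit a purge_relay_logs crontab line for slave number $1 (0, 1, 2, ...),
# starting at 05:00 and shifting each node by $2 minutes (default 10).
purge_cron_line() {
  local idx=$1 step=${2:-10}
  local total=$(( 5 * 60 + idx * step ))            # minutes after midnight
  local hour=$(( total / 60 % 24 )) minute=$(( total % 60 ))
  printf '%d %d * * * /usr/local/bin/purge_relay_logs --user=root --password=pwd123 --port=3306 --disable_relay_log_purge >>/var/log/purge_relay.log 2>&1\n' "$minute" "$hour"
}
```

For example, `purge_cron_line 1` starts with `10 5`, so the second slave purges ten minutes after the first.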

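5. Configure the VIP

The virtual IP can be managed in two ways. One is to let keepalived float the virtual IP. The other is to bring the virtual IP up with a script, which needs no keepalived, heartbeat, or similar software.

1. Managing the virtual IP with keepalived, configured as follows:

Install keepalived on the master and the Candidate.

Install the dependencies:

yum install openssl-devel libnfnetlink-devel libnfnetlink popt-devel kernel-devel -y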

wget http://www.keepalived.org/software/keepalived-1.2.20.tar.gz

ln -s /usr/src/kernels/2.6.32-642.1.1.el6.x86_64 /usr/src/linux

tar -xzf keepalived-1.2.20.tar.gz;cd keepalived-1.2.20

./configure --prefix=/usr/local/keepalived;make && make install

ln -s /usr/local/keepalived/sbin/keepalived /usr/bin/keepalived

cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/keepalived

mkdir /etc/keepalived

ln -s /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf

chmod 755 /etc/init.d/keepalived

chkconfig --add keepalived

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

service keepalived restart

echo 1 > /proc/sys/net/ipv4/ip_forward

Edit the keepalived configuration file (on the master):

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id mysql-ha1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.137.100

}

}

On the candidate master (Candidate):

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id mysql-ha2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.137.100

}

}

Start the keepalived service on the master and watch the log:

/etc/init.d/keepalived start

tail -f /var/log/messages

Aug 14 01:05:25 minion Keepalived_vrrp[39720]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.137.100

On the master:

# ip addr show dev eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:57:66:49 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.134/24 brd 192.168.137.255 scope global eth0

inet 192.168.137.100/32 scope global eth0

inet6 fe80::20c:29ff:fe57:6649/64 scope link

valid_lft forever preferred_lft forever

On the Candidate (the standby master has no virtual IP at this point):

# ip addr show dev eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a5:b4:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.130/24 brd 192.168.137.255 scope global eth0

inet6 fe80::20c:29ff:fea5:b485/64 scope link

valid_lft forever preferred_lft forever

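Note:

The keepalived instances on both servers above are set to BACKUP. keepalived can run in two arrangements, MASTER->BACKUP and BACKUP->BACKUP, and they behave very differently.

In MASTER->BACKUP mode, once the master goes down the virtual IP floats to the slave automatically. When the master is repaired and its keepalived starts again, it takes the virtual IP back, even if non-preemptive mode (nopreempt) is set.

In BACKUP->BACKUP mode, when the master goes down the virtual IP floats to the slave. When the original master recovers and keepalived starts, it does not take the virtual IP back from the new master, even if its priority is higher. This non-preemptive behaviour requires nopreempt in the vrrp_instance block of the higher-priority node; keepalived only honours nopreempt when the state is BACKUP. The configurations above do not include it, so with priority 100 versus 90 the original master would still preempt.

To reduce the number of IP moves, the repaired old master is usually turned into the new standby.

2. Bringing keepalived into MHA (MHA stops keepalived when the MySQL service process dies):

To bring keepalived under MHA, we only need to modify master_ip_failover, the script triggered during a switch. The change adds keepalived handling for the case where the master goes down.

Edit the script /scripts/master_ip_failover; after modification it looks as follows.

On the manager, create the script file: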

mkdir /scripts

vim /scripts/master_ip_failover

#!/usr/bin/env perl
use strict;
use warnings FATAL => 'all';
use Getopt::Long;

my (
    $command,          $ssh_user,        $orig_master_host, $orig_master_ip,
    $orig_master_port, $new_master_host, $new_master_ip,    $new_master_port
);

my $vip           = '192.168.137.100';
my $ssh_start_vip = "/etc/init.d/keepalived start";
my $ssh_stop_vip  = "/etc/init.d/keepalived stop";

GetOptions(
    'command=s'          => \$command,
    'ssh_user=s'         => \$ssh_user,
    'orig_master_host=s' => \$orig_master_host,
    'orig_master_ip=s'   => \$orig_master_ip,
    'orig_master_port=i' => \$orig_master_port,
    'new_master_host=s'  => \$new_master_host,
    'new_master_ip=s'    => \$new_master_ip,
    'new_master_port=i'  => \$new_master_port,
);

exit &main();

sub main {
    print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

    # Without --command, fall through to usage instead of dying on an undefined value.
    $command = '' unless defined $command;

    if ( $command eq "stop" || $command eq "stopssh" ) {
        # Called by MHA during failover: stop keepalived (and so the VIP) on the old master.
        my $exit_code = 1;
        eval {
            print "Disabling the VIP on old master: $orig_master_host \n";
            &stop_vip();
            $exit_code = 0;
        };
        if ($@) {
            warn "Got Error: $@\n";
            exit $exit_code;
        }
        exit $exit_code;
    }
    elsif ( $command eq "start" ) {
        # Called by MHA after promotion: start keepalived (and so the VIP) on the new master.
        my $exit_code = 10;
        eval {
            print "Enabling the VIP - $vip on the new master - $new_master_host \n";
            &start_vip();
            $exit_code = 0;
        };
        if ($@) {
            warn $@;
            exit $exit_code;
        }
        exit $exit_code;
    }
    elsif ( $command eq "status" ) {
        # Health check, e.g. run by masterha_check_repl.
        print "Checking the Status of the script.. OK \n";
        exit 0;
    }
    else {
        &usage();
        exit 1;
    }
}

# A simple system call that enables the VIP on the new master
sub start_vip() {
    `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;
}

# A simple system call that disables the VIP on the old master
sub stop_vip() {
    `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;
}

sub usage {
    print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";
}

The script is now in place. Make it executable (chmod +x /scripts/master_ip_failover), then have /etc/masterha/app1.cnf call it as the failover script.

Stop MHA:

masterha_stop --conf=/etc/masterha/app1.cnf

In /etc/masterha/app1.cnf, enable the following parameter (add it under [server default]):

master_ip_failover_script=/scripts/master_ip_failover

Start MHA:

#nohup masterha_manager --conf=/etc/masterha/app1.cnf &>/etc/masterha/manager.log &

Check the status:

# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:51284) is running(0:PING_OK), master:192.168.137.134

Check whether cluster replication reports any errors:

# masterha_check_repl --conf=/etc/masterha/app1.cnf

192.168.137.134(192.168.137.134:3306) (current master)

+--192.168.137.130(192.168.137.130:3306)

+--192.168.137.146(192.168.137.146:3306)

Tue May 9 14:40:57 2017 - [info] Checking replication health on 192.168.137.130..

Tue May 9 14:40:57 2017 - [info] ok.

Tue May 9 14:40:57 2017 - [info] Checking replication health on 192.168.137.146..

Tue May 9 14:40:57 2017 - [info] ok.

Tue May 9 14:40:57 2017 - [info] Checking master_ip_failover_script status:

Tue May 9 14:40:57 2017 - [info] /scripts/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.137.134 --orig_master_ip=192.168.137.134 --orig_master_port=3306

IN SCRIPT TEST====/etc/init.d/keepalived stop==/etc/init.d/keepalived start===

Checking the Status of the script.. OK

Tue May 9 14:40:57 2017 - [info] OK.

Tue May 9 14:40:57 2017 - [warning] shutdown_script is not defined.

Tue May 9 14:40:57 2017 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

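Note: the changes in /scripts/master_ip_failover work as follows. When the master database fails, MHA triggers a switch and MHA Manager stops keepalived on the master. The virtual IP then floats to the standby, which completes the switch.

Alternatively, keepalived itself can run a script that monitors whether MySQL is running normally and kills the keepalived process if it is not (see the article "MySQL high availability: keepalived + MySQL dual masters").

Test: stop MySQL on the master

# /etc/init.d/mysqld stop

Shutting down MySQL............ [ OK ]

On the slave (192.168.137.146), check the slave status:

mysql> show slave status\G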

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.137.130

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

As the output above shows, the slave now points to the new master 192.168.137.130. Before the failover it pointed to 192.168.137.134.
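Instead of reading the \G output by eye, this check can be scripted. The sketch below reads `show slave status\G` output on stdin and confirms that the slave follows the expected master with both replication threads running. It assumes the standard Slave_IO_Running and Slave_SQL_Running fields, which the truncated output above does not show. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Verify that "show slave status\G" output (stdin) points at the expected master
# and that both replication threads are running.
# Usage: mysql -e 'show slave status\G' | check_slave_master 192.168.137.130
check_slave_master() {
  local expected=$1
  awk -v want="$expected" '
    { sub(/^[[:space:]]+/, "") }                  # \G output is right-aligned
    /^Master_Host:/       { host = $2 }
    /^Slave_IO_Running:/  { io = $2 }
    /^Slave_SQL_Running:/ { sql = $2 }
    END {
      printf "master=%s io=%s sql=%s\n", host, io, sql
      exit !(host == want && io == "Yes" && sql == "Yes")
    }'
}
```

The exit status makes it usable in scripts. For example, a non-zero result after a failover means the slave still follows the old master or a replication thread has stopped.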

Check the VIP binding:

On 192.168.137.134:

# ip addr show dev eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:57:66:49 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.134/24 brd 192.168.137.255 scope global eth0

inet6 fe80::20c:29ff:fe57:6649/64 scope link

valid_lft forever preferred_lft forever

On 192.168.137.130:

# ip addr show dev eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a5:b4:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.137.130/24 brd 192.168.137.255 scope global eth0

inet 192.168.137.100/32 scope global eth0

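The output above shows that the VIP has floated to 192.168.137.130.

Follow-up after the switch: the Candidate is now the master, so the original master has to be set up again as a slave of it:

Repair it as a slave

Start keepalived

rm -f /data/masterha/app1/app1.failover.complete

Start the manager

3. Switching the VIP with a script

If the VIP is managed by a script, you first have to bind the VIP on the master server manually:

/sbin/ifconfig eth0:0 192.168.137.100/24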

vim /scripts/master_ip_failover

my $vip = '192.168.137.100/24';

my $key = '0';

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

The remaining steps are the same as in the keepalived setup above.
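The ifconfig start/stop commands above can be tried by hand before MHA uses them. Below is a bash sketch of the same logic as start_vip/stop_vip in the Perl script. VIP and KEY mirror $vip and $key. DRY_RUN is a hypothetical switch that prints the ssh command instead of running it. Newer distributions replace ifconfig with `ip addr add`/`ip addr del`; this sketch keeps ifconfig to match the article.

```bash
#!/usr/bin/env bash
# Bash equivalent of start_vip/stop_vip in master_ip_failover (ifconfig variant).
VIP=${VIP:-192.168.137.100/24}
KEY=${KEY:-0}
SSH_USER=${SSH_USER:-root}

run_remote() {
  # $1 = host, $2 = command; with DRY_RUN=1 only print what would run.
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "ssh $SSH_USER@$1 \"$2\""
  else
    ssh "$SSH_USER@$1" "$2"
  fi
}

start_vip() { run_remote "$1" "/sbin/ifconfig eth0:$KEY $VIP"; }   # VIP up on the new master
stop_vip()  { run_remote "$1" "/sbin/ifconfig eth0:$KEY down"; }    # VIP down on the old master
```

For example, `DRY_RUN=1 start_vip 192.168.137.130` shows the exact command MHA would trigger on the Candidate, without touching the network.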

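To avoid split-brain, managing the virtual IP with a script rather than keepalived is recommended for production. At this point, the basic MHA cluster is fully configured.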
