The Start of Big Data: Installing Hadoop

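To work toward full-stack skills, I am starting to study Hadoop today. In my experience, becoming an expert in any area takes three things:

- Systematic knowledge (from books or reasonably structured training)

- Fragmented knowledge (digging into specific topics of interest as they come up)

- Accumulated experience (which only comes from running into problems)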

Let's start with the installation.

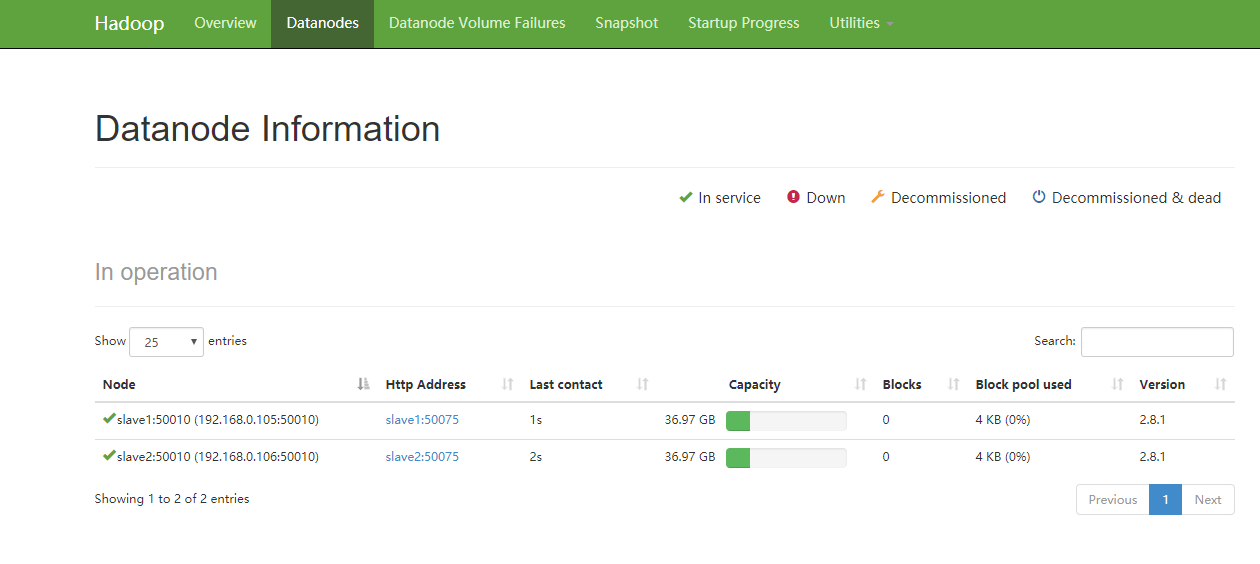

1. Find three CentOS virtual machines. Mine run CentOS 7, and the rough plan is one master and two slaves.

Edit the /etc/hosts file on all three machines:

192.168.0.104 master

192.168.0.105 slave1

192.168.0.106 slave2
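As a minimal sketch of the step above, the entries could be appended with a small shell function that skips lines already present, so it is safe to run more than once. The `HOSTS_FILE` variable and the `add_host_entry` helper are hypothetical names introduced here, not part of the original setup; the script defaults to a scratch file so it can be tried without root.

```shell
#!/bin/sh
# Sketch: add the three cluster entries to a hosts file without duplicating them.
# HOSTS_FILE defaults to a scratch file (an assumption, for safe experimentation);
# set HOSTS_FILE=/etc/hosts and run as root to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-./hosts.sample}"

# add_host_entry IP NAME: append "IP NAME" unless that exact line already exists.
add_host_entry() {
    entry="$1 $2"
    touch "$HOSTS_FILE"
    # -x matches the whole line, -F treats the entry as a fixed string
    if ! grep -qxF "$entry" "$HOSTS_FILE"; then
        printf '%s\n' "$entry" >> "$HOSTS_FILE"
    fi
}

add_host_entry 192.168.0.104 master
add_host_entry 192.168.0.105 slave1
add_host_entry 192.168.0.106 slave2
```

Running it on each of the three machines with `HOSTS_FILE=/etc/hosts` should leave all of them with the same name mapping.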

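2. Configure SSH mutual trust

Run the commands below on all three machines to generate an SSH key and an authorized_keys file. To keep things simple I did this as root; it would be more proper to do it as the user that will start Hadoop.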

ssh-keygen -t rsa

cd ~/.ssh

cp id_rsa.pub authorized_keys

Then merge the authorized_keys files from the three machines into a single file and copy it to all three machines. For example, my authorized_keys:

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCrtxZC5VB1tyjU4nGy4+Yd//LsT3Zs2gtNtpw6z4bv7VdL6BI0tzFLs8QIHS0Q82BmiXdBIG2fkLZUHZuaAJlkE+GCPHBmQSdlS+ZvUWKFr+vpbzF86RBGwJp1HHs7GtDFtirN3Z/Qh6pKgNLFuFCxIF/Ee4sL50RUAh6wFOY/TRU4XxQissNXd9rhVFrZnOkctfA3Wek4FgNRyT+xUezSW1Vl2GliGc0siI5RCQezDhKwZNHyzY4yyiifeQYL14S4D0RrlCvv+5PIUZUrKznKc1BMYIljxMVOrAs0DsvQ0fkna/Q/pA53cuPhkD4P8ehA/fJuMCTZ+1q/Z2o1WW4j [email protected]
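The merge step described above can be sketched as a small shell function. It assumes the public keys from the three machines have already been collected in one place; the `merge_keys` helper and the file names in the usage comments are hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# merge_keys OUTPUT PUBKEY...: concatenate public key files into OUTPUT,
# dropping duplicate lines, and restrict the result to the owner.
merge_keys() {
    out="$1"
    shift
    tmp="$(mktemp)"
    # sort -u removes duplicate key lines; sshd does not care about key order
    cat "$@" | sort -u > "$tmp"
    mv "$tmp" "$out"
    chmod 600 "$out"
}

# Example usage (hypothetical file names, not executed here):
#   merge_keys ~/.ssh/authorized_keys master.pub slave1.pub slave2.pub
#   scp ~/.ssh/authorized_keys root@slave1:~/.ssh/
#   scp ~/.ssh/authorized_keys root@slave2:~/.ssh/
```

Writing to a temporary file first means the output can safely be one of the inputs, and `chmod 600` keeps the permissions strict enough for sshd's default checks.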