A First Look at CAPI and Usage Notes (4)

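Given my limited ability and time, this article surely contains quite a few errors. Corrections are welcome at [email protected], and I look forward to discussion. Most of the content is original, and where I copied material I have noted the source (please point out anything I missed), so please credit the source when reposting: http://www.cnblogs.com/e-shannon/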

http://www.cnblogs.com/e-shannon/p/7495618.html

4 Open coherent acceleration interfaces

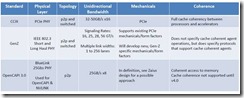

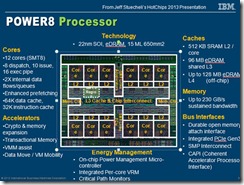

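As mentioned in Section 1, to meet acceleration demands the industry has been defining open standards for high-performance coherence interfaces to the CPU. In 2016 three such open standards appeared: OpenCAPI, Gen-Z, and CCIX.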

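The three standards have similar goals with slightly different emphases, and their memberships overlap; some members even belong to all three groups (Intel, of course, belongs to none of them). Notably, these interfaces do not only accelerate the CPU: they also provide a forward-looking high-speed interface for attaching future high-speed memory, high-speed networked storage, high-speed networks, and so on, to build efficient compute clusters.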

Their websites: www.ccixconsortium.com

http://genzconsortium.org/

www.opencapi.org

Source: CCIX,Gen-Z,OpenCAPI_Overview&Comparison.pdf

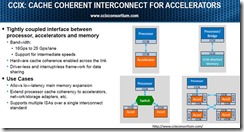

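CCIX uses PCIe 3.0 as its physical medium and provides full cache coherence between processors and accelerators. It targets low-latency memory expansion, CPU acceleration, and networked storage.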

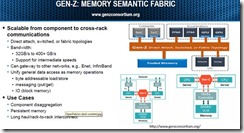

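Gen-Z, by contrast, focuses on coherent interconnect for acceleration between chassis. It supports the PCIe physical layer as well as a modified 802.3 electrical layer, and it also claims support for memory, network devices, and so on: "Gen-Z's primary focus is a routable, rack-level interconnect to give access to large pools of memory, storage, or accelerator resources."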

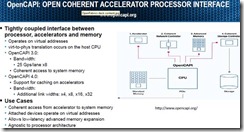

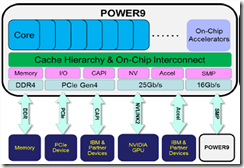

OpenCAPI has the backing of IBM's POWER9 and uses the BlueLink high-speed interface at about 25 Gb/s per lane, offering low latency and very high bandwidth that matches main-memory bandwidth. "OpenCAPI will be concerned primarily with attaching various kinds of compute to each other and to network and storage devices that have a need for coherent access to memory across a hybrid compute complex. With OpenCAPI, I/O bandwidth can be proportional to main store bandwidth, and with very low 300 nanosecond to 400 nanosecond latencies, you can put storage devices out there, or big pools of GPU or FPGA accelerators and let them have access to main store and just communicate to it seamlessly. OpenCAPI is a ground-up design that enables extreme bandwidth that is on par with main memory bandwidth."

Gen-Z material is easy to obtain; CCIX is the hardest, because it requires membership.

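Note from yxr: I have not studied the differences and relationships among the three in detail, so I simply copied the material here for reference only. Frankly, I feel the original author did not dig very deep either.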

This article focuses on OpenCAPI, because it is supported by POWER9.

4.1 OpenCAPI

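IBM's original intent was to design an open acceleration interface independent of any CPU architecture, so it spun OpenCAPI off from OpenPOWER to let other CPU vendors join (I am not sure whether Intel has joined; after all, the two once worked together on InfiniBand). The article https://www.nextplatform.com/2016/10/17/opening-server-bus-coherent-acceleration/ even calls OpenCAPI "CAPI 3.0", which left me at a loss for words.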

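Like PCIe, OpenCAPI is divided into three layers: PHY, DL (data link), and TL (transaction layer). Unlike PCIe, however, it follows the principle that "OpenCAPI is agnostic to processor architecture" and does not define the PHY layer, leaving it to the implementer. IBM's POWER9 uses BlueLink, which can be shared with NVLink (see the POWER9 figure). OpenCAPI defines only the DL and TL. The TL also uses credits for flow control, and OpenCAPI uses virtual addresses. Compared with PCIe, its biggest advantages are lower latency and a simpler design, with better power and area than PCIe.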
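The credit-based flow control mentioned above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not the OpenCAPI TL mechanism itself: the `CreditLink` name, the one-credit-per-packet rule, and the instant credit return are hypothetical simplifications. What it shows is that the sender may transmit only while it holds credits, so the receiver's buffer can never overflow.

```python
from collections import deque


class CreditLink:
    """Toy credit-based flow control between one sender and one receiver.

    Hypothetical model: one credit == one receiver buffer slot, and credits
    are returned instantly when the receiver drains a packet.
    """

    def __init__(self, rx_buffer_slots: int):
        self.credits = rx_buffer_slots  # sender's count of free receiver slots
        self.rx_queue = deque()         # receiver-side buffer

    def try_send(self, packet) -> bool:
        # Without a credit the sender must wait; this is what guarantees
        # that the receiver buffer never overflows.
        if self.credits == 0:
            return False
        self.credits -= 1
        self.rx_queue.append(packet)
        return True

    def receiver_consume(self):
        # The receiver frees one slot and hands one credit back to the sender.
        packet = self.rx_queue.popleft()
        self.credits += 1
        return packet


link = CreditLink(rx_buffer_slots=2)
print(link.try_send("a"), link.try_send("b"), link.try_send("c"))  # True True False
print(link.receiver_consume(), link.try_send("c"))                # a True
```

In a real link the returned credits have to travel back across the link, so the sender needs enough credits to cover a full round trip; that is one reason link latency and credit/buffer sizing are tied together.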

To address PCIe's limitations (high latency, bandwidth that still lags behind memory bandwidth, and lack of coherency), POWER9's OpenCAPI introduces the BlueLink physical interface, 25 Gbps x 48 lanes, which can also carry NVLink 2.0 to support NVIDIA GPU acceleration. This is also why Google and the server vendor Rackspace put OpenCAPI ports on the "Zaius[dream1]" server, and why Xilinx has announced IP support. "The PCI-Express stack is a limiter in terms of latency, bandwidth, and coherence. This is why Google and Rackspace are putting OpenCAPI ports on their co-developed Power9 system, and why Xilinx will add them to its FPGAs, Mellanox to its 200 Gb/sec InfiniBand cards, and Micron to its flash and 3D XPoint storage."
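To put the 25 Gbps x 48 lanes figure in perspective, a rough calculation helps. The sketch below assumes all 48 lanes carry data in the same direction and ignores line coding and protocol overhead; the helper names are hypothetical. It also computes the bandwidth-delay product, i.e. how many bytes must be outstanding to keep the link busy, using the 10 ns (POWER8 DMI) and ~100 ns (PCIe) round-trip figures quoted in the comparison list of this section.

```python
def aggregate_gbytes_per_s(gbps_per_lane: float, lanes: int) -> float:
    # Raw signalling bandwidth in GB/s; line coding and protocol overhead ignored.
    return gbps_per_lane * lanes / 8


def bytes_in_flight(gbytes_per_s: float, rtt_ns: float) -> float:
    # Bandwidth-delay product: 1 GB/s * 1 ns = 1e9 B/s * 1e-9 s = 1 byte.
    return gbytes_per_s * rtt_ns


bw = aggregate_gbytes_per_s(25, 48)
print(bw)                        # 150.0
print(bytes_in_flight(bw, 10))   # 1500.0  (DMI-like round trip)
print(bytes_in_flight(bw, 100))  # 15000.0 (PCIe-like round trip)
```

Under these assumptions, about 15 KB must be in flight (and covered by credits or buffers) to saturate 150 GB/s with a 100 ns round trip, versus about 1.5 KB at 10 ns, which is one way to see why lower latency also simplifies the design.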

Note from yxr: since OpenCAPI does not define the PHY layer, other CPU vendors (ARM, AMD, Intel) could also define their own PHY and run NVLink 2.0 and OpenCAPI over it.

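Below are OpenCAPI's comparative advantages. Keep in mind that PCIe round-trip latency is on the order of 100 ns or more (the slide says ~100s of ns); Gen-Z seems to target a similar figure.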

1. Server memory latency is a critical TCO factor

A differentiated solution must provide ~equivalent effective latency to DDR standards

POWER8 DMI round trip latency: 10 ns

Typical PCIe round trip latency: ~100s of ns

Why is DMI so low?

DMI designed from ground up for minimum latency due to ld/str requirements

OpenCAPI key concept

Provide DMI like latency, but with enhanced command set of CAPI

2. Virtual-address-based cache

Eliminates kernel and device driver software overhead

Improves accelerator performance

Allows device to operate directly on application memory without kernel-level data copies or pinned pages

Simplifies programming effort to integrate accelerators into applications

The Virtual-to-Physical Address Translation occurs in the host CPU
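The host-side address translation described in the list can be sketched as follows. This is a hypothetical illustration: the 4 KiB page size, the dictionary page table, and the `HostTranslator` name are assumptions, and a real host would resolve a missing page through the OS page-fault path rather than raising an exception. It shows the key idea: the accelerator presents application virtual addresses and the host CPU turns them into physical ones, so the device side needs no pinned pages or kernel copies.

```python
PAGE_SHIFT = 12  # assumption: 4 KiB pages, chosen only for illustration
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class HostTranslator:
    """Hypothetical host-side VA -> PA translation done on behalf of a device."""

    def __init__(self, page_table: dict):
        self.page_table = page_table  # virtual page number -> physical frame number

    def translate(self, va: int) -> int:
        vpn, offset = va >> PAGE_SHIFT, va & PAGE_MASK
        if vpn not in self.page_table:
            # A real host would run its page-fault handling here instead.
            raise LookupError(f"translation fault at VA {va:#x}")
        return (self.page_table[vpn] << PAGE_SHIFT) | offset


host = HostTranslator({0x10: 0x80})
print(hex(host.translate(0x10123)))  # 0x80123
```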

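Note from yxr: some sources say a drawback of OpenCAPI is that cache coherence only arrives with OpenCAPI 4.0, while the current version provides memory coherence. I don't quite understand this yet.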

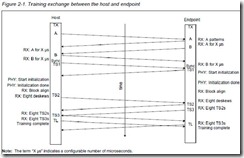

4.1.1 DL

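The OpenCAPI data link layer supports 25 Gbps of serial data per lane. The basic configuration is 8 lanes, each signalling at 25.78125 Gbps. On the host side this layer is called the DL; on the OpenCAPI device side it is called the DLX.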
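The 25.78125 Gbps and 25 Gbps figures are consistent once the 64b/66b line coding used by the DL is taken into account: 66 bits are sent on the wire for every 64 payload bits. A quick check, assuming the basic 8-lane configuration and ignoring flit and protocol overhead (the function name is hypothetical):

```python
LINE_RATE_GBPS = 25.78125  # per-lane signalling rate quoted above
LANES = 8                  # basic link width quoted above


def payload_gbps(line_rate_gbps: float, lanes: int) -> float:
    # 64b/66b: every 66 bits on the wire carry 64 bits of payload.
    return line_rate_gbps * 64 / 66 * lanes


per_lane = payload_gbps(LINE_RATE_GBPS, 1)
link = payload_gbps(LINE_RATE_GBPS, LANES)
print(per_lane, link, link / 8)  # 25.0 200.0 25.0  (the last value is GB/s)
```

So a basic x8 link carries about 200 Gbps, roughly 25 GB/s, of encoded payload in one direction before DL and TL overhead.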

Link training starts from a reset driven by the out-of-band signal OCDE. Training a link has three parts: PHY training, PHY initialization, and DL training.

Training performs speed matching, clock matching, and link synchronization, and exchanges lane information between the two ends.
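The three training phases run in a fixed order before the link can carry traffic. The sketch below models that order as a tiny state machine. The state names follow the text, but the rule that any failed phase falls back to reset and the `step`/`train` helpers are assumptions for illustration, not taken from the OpenCAPI specification.

```python
from enum import Enum, auto


class LinkState(Enum):
    RESET = auto()         # held in reset via the out-of-band OCDE signal
    PHY_TRAINING = auto()
    PHY_INIT = auto()
    DL_TRAINING = auto()
    LINK_UP = auto()


# Phase order taken from the text; the real spec has many sub-steps per phase.
NEXT_STATE = {
    LinkState.RESET: LinkState.PHY_TRAINING,
    LinkState.PHY_TRAINING: LinkState.PHY_INIT,
    LinkState.PHY_INIT: LinkState.DL_TRAINING,
    LinkState.DL_TRAINING: LinkState.LINK_UP,
}


def step(state: LinkState, phase_ok: bool) -> LinkState:
    """Advance one phase; assumption: any failed phase falls back to RESET."""
    if state is LinkState.LINK_UP:
        return state
    return NEXT_STATE[state] if phase_ok else LinkState.RESET


def train(phase_results) -> LinkState:
    # The first result stands for "reset released", the rest for each phase.
    state = LinkState.RESET
    for ok in phase_results:
        state = step(state, ok)
    return state


print(train([True, True, True, True]))  # LinkState.LINK_UP
print(train([True, True, False]))       # LinkState.RESET
```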

DL flow control is based on flits and is presumably implemented with an ACK/replay mechanism.
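Since the text only guesses at an ACK/replay scheme, here is what a generic one looks like on the sender side, as used in many serial links. This is a hypothetical sketch, not the OpenCAPI DL algorithm: the `ReplaySender` name, cumulative ACKs, and non-wrapping sequence numbers are all assumptions. The sender keeps every flit in a replay buffer until it is acknowledged and resends the unacknowledged ones when the receiver reports an error.

```python
class ReplaySender:
    """Generic sender-side ACK/replay buffer (hypothetical sketch).

    Assumptions: sequence numbers never wrap, and ACKs are cumulative.
    """

    def __init__(self):
        self.next_seq = 0
        self.replay_buffer = {}  # seq -> flit; dicts keep insertion order

    def send(self, flit):
        seq = self.next_seq
        self.replay_buffer[seq] = flit  # keep a copy until it is acknowledged
        self.next_seq += 1
        return seq, flit                # what would go on the wire

    def on_ack(self, acked_seq: int):
        # Cumulative ACK: every flit up to acked_seq arrived intact.
        for seq in [s for s in self.replay_buffer if s <= acked_seq]:
            del self.replay_buffer[seq]

    def on_replay_request(self):
        # Receiver detected a bad flit: resend all unacknowledged flits in order.
        return list(self.replay_buffer.items())


tx = ReplaySender()
for flit in ("f0", "f1", "f2"):
    tx.send(flit)
tx.on_ack(0)
print(tx.on_replay_request())  # [(1, 'f1'), (2, 'f2')]
```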

The DL uses 64b/66b encoding and LFSR-based scrambling (the exact polynomial is still to be looked up).
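Because the OpenCAPI scrambling polynomial is still to be checked, the sketch below borrows the well-known 64b/66b scheme of IEEE 802.3 Clause 49 (10GBASE-R) purely as a stand-in: a 2-bit unscrambled sync header (01 for data, 10 for control) followed by a 64-bit payload scrambled with G(x) = 1 + x^39 + x^58. Note that this Ethernet scrambler is self-synchronizing (the shift register is fed with scrambled bits), which may differ from what OpenCAPI actually uses; the function names are hypothetical.

```python
MASK58 = (1 << 58) - 1
SYNC_DATA, SYNC_CTRL = 0b01, 0b10  # 64b/66b sync headers as in IEEE 802.3 Clause 49


def scramble64(payload: int, state: int):
    """Scramble 64 bits with G(x) = 1 + x^39 + x^58, bit 0 first."""
    out = 0
    for i in range(64):
        b = (payload >> i) & 1
        s = b ^ ((state >> 38) & 1) ^ ((state >> 57) & 1)  # taps at delays 39 and 58
        state = ((state << 1) | s) & MASK58  # shift in the scrambled bit
        out |= s << i
    return out, state


def descramble64(payload: int, state: int):
    """Inverse operation; the register is fed with the received bits."""
    out = 0
    for i in range(64):
        s = (payload >> i) & 1
        b = s ^ ((state >> 38) & 1) ^ ((state >> 57) & 1)
        state = ((state << 1) | s) & MASK58  # shift in the received bit
        out |= b << i
    return out, state


def encode_block(payload: int, is_data: bool, state: int):
    """Build one 66-bit block: 2-bit sync header (unscrambled) + scrambled payload."""
    scrambled, state = scramble64(payload, state)
    header = SYNC_DATA if is_data else SYNC_CTRL
    return (header, scrambled), state


seed = 0x123456789ABCDEF & MASK58
(hdr, wire), _ = encode_block(0x0123456789ABCDEF, True, seed)
data, _ = descramble64(wire, seed)
print(hdr == SYNC_DATA, data == 0x0123456789ABCDEF)  # True True
```

The self-synchronizing design means a receiver that starts with the wrong register contents recovers after 58 received bits, so no seed has to be exchanged during training; an additive LFSR scrambler, by contrast, needs both ends to agree on the LFSR state.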

4.1.2 TL (to be continued)

This is the core part and fairly complex.

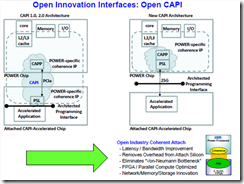

4.2 OpenCAPI compared with CAPI

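As the figure shows, OpenCAPI moves the PSL to the CPU side. The benefit is that OpenCAPI is no longer tied to the OpenPOWER CPU architecture, which makes it easier for other CPU vendors to adopt; caching and coherence are all encapsulated inside the CPU. The physical layer uses BlueLink, which avoids PCIe's latency and increases bandwidth. PCIe, of course, has the advantage of broad vendor adoption; in CAPI, placing the PSL (including its cache) on the AFU side was likewise a way to work around PCIe's limitations.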

While CAPI was governed by IBM and metered across the OpenPOWER Consortium, OpenCAPI is completely open, governed by the OpenCAPI Consortium led by the companies I listed above. The OpenCAPI consortium says they plan to make the OpenCAPI specification fully available to the public at no charge before the end of the year. Mellanox Technologies, Micron, and Xilinx were CAPI supporters, OpenPOWER members, and are now part of OpenCAPI. NVIDIA and Google were part of OpenPOWER and are now OpenCAPI members.

4.3 Questions and my own answers

These are questions I had while studying, along with my guessed answers; I share them here.

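1) Q: If OpenCAPI is so good, is there any reason to keep upgrading CAPI?

A by yxr: A guess: CAPI is led by IBM and tied to OpenPOWER, whereas OpenCAPI is independent of the CPU ISA. With CAPI, IBM can focus on the OpenPOWER architecture without too many constraints and move forward on its own. Then again, some people call OpenCAPI "CAPI 3.0", so CAPI may eventually be replaced by it.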

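2) Q: Why does CAPI put the PSL on the accelerator side, and even package it as IP? The PSL should contain a cache and, together with the CAPP, be responsible for cache coherence. So the essential difference from OpenCAPI is whether caching and address translation sit on the CPU side or the accelerator side. Why didn't CAPI take the OpenCAPI approach and put the PSL on the CPU side?

A by yxr: A guess. First, putting the PSL on the CPU side would enlarge the CPU die because of the cache. Second, if the accelerator is viewed as a peer of the CPU, the cache should sit right next to the processor that uses it, here the accelerator, for the efficiency gain to be significant (that is the whole point of a cache). If the accelerator only accesses its local cache, it also avoids the large PCIe round-trip latency. Of course, once the access latency of OpenCAPI's physical link (BlueLink) is low enough, placing the cache on the CPU side no longer hurts performance.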
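The reasoning in this answer is the classic average memory access time (AMAT) argument. A minimal sketch with purely illustrative numbers: the 5 ns hit time and 25% miss rate are assumptions, while the ~100 ns PCIe and 10 ns DMI round trips are the figures quoted earlier in section 4.1.

```python
def amat_ns(hit_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_ns + miss_rate * miss_penalty_ns


# Hypothetical numbers: 5 ns local cache hit, 25% miss rate.
print(amat_ns(5, 0.25, 100))  # 30.0  local cache, misses cross PCIe (~100 ns)
print(amat_ns(0, 1.0, 100))   # 100.0 no local cache, every access crosses PCIe
print(amat_ns(0, 1.0, 10))    # 10.0  no local cache, DMI-like 10 ns link
```

Under these assumed numbers, a local cache cuts the effective latency over PCIe from 100 ns to 30 ns, but a link as fast as DMI beats both even without a cache on the accelerator side, which is consistent with the guess above.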

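This leads to a third question, about CCIX: it uses PCIe as its physical link, and I don't know how it avoids excessive latency!

3) Q: CAPI and CCIX both use PCIe as the physical link, so latency will inevitably be high. How does CCIX overcome this? On which side does CCIX place its cache? What are the differences between the two, and what are their respective advantages?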

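4) Q: Does OpenCAPI 3.0 not implement cache coherence, only memory coherence? What is memory coherence?

A by yxr: This surprised me a lot; I had always assumed coherence meant cache coherence. What is called memory coherence here may refer to how memory is kept consistent across a cluster spanning multiple chassis (e.g. Hadoop).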

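Future plans

1) Get familiar with OpenCAPI's protocol layers, especially the TL, focusing on how cache coherency is achieved once the PSL is moved to the host side.

Focus on how its protocol interface differs from CAPI's.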

[dream1]Zaius is a dual-socket platform based on the IBM POWER9 Scale Out CPU. It supports a host of new technologies including DDR4 memory, PCIe Gen4 and the OpenCAPI interface. It's designed with a highly efficient 48V-POL power system and will be compatible with the 48V Open Rack V2.0 standard. The Zaius BMC software is being developed using OpenBMC, the framework for which we've released on GitHub. Additionally, Zaius will support a PCIe Gen4 x16 OCP 2.0 mezzanine slot NIC.
