Stock data crawling with Scrapy

阿新 • Published: 2017-09-18


Over the weekend I learned the Scrapy framework and used it to rewrite the stock crawler I had previously built with requests+bs4+re (http://www.cnblogs.com/wyfighting/p/7497985.html).

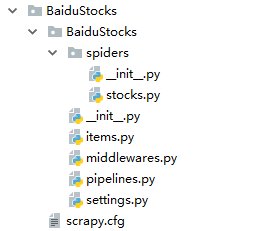

Directory structure:

Code of stocks.py:

```python
# -*- coding: utf-8 -*-
import re

import scrapy


class StocksSpider(scrapy.Spider):
    name = "stocks"
    start_urls = ['http://quote.eastmoney.com/stocklist.html']

    def parse(self, response):
        # Keep only links containing a stock code such as sh600000 or sz000001
        for href in response.css('a::attr(href)').extract():
            try:
                stock = re.findall(r"[s][hz]\d{6}", href)[0]
            except IndexError:
                continue  # link has no stock code
            url = 'https://gupiao.baidu.com/stock/' + stock + '.html'
            yield scrapy.Request(url, callback=self.parse_stock)

    def parse_stock(self, response):
        infoDict = {}
        stockInfo = response.css('.stock-bets')
        name = stockInfo.css('.bets-name').extract()[0]
        keyList = stockInfo.css('dt').extract()
        valueList = stockInfo.css('dd').extract()
        # Pair each <dt> label with the <dd> value at the same position
        for i in range(len(keyList)):
            key = re.findall(r'>.*</dt>', keyList[i])[0][1:-5]
            try:
                val = re.findall(r'\d+\.?.*</dd>', valueList[i])[0][0:-5]
            except IndexError:
                val = '--'  # no numeric value (or no matching <dd>)
            infoDict[key] = val

        # Stock name (股票名稱): the text before "(" plus the code inside the tag
        infoDict.update(
            {'股票名稱': re.findall(r'\s.*\(', name)[0].split()[0] +
                     re.findall(r'\>.*\<', name)[0][1:-1]})
        yield infoDict
```
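To check the two parsing steps without hitting the network (both sites may well have changed since 2017), the sketch below replays the spider's regular expressions on small, hypothetical inputs shaped like what `a::attr(href)`, `dt` and `dd` return. It uses only the standard library `re` module; the sample hrefs and `<dt>`/`<dd>` strings, and the helper names `extract_stock_urls` and `pair_fields`, are assumptions for illustration and not part of the original project.

```python
import re

# Hypothetical hrefs, as the stock list page might contain them
SAMPLE_HREFS = [
    "http://quote.eastmoney.com/sh600000.html",
    "http://quote.eastmoney.com/sz000001.html",
    "http://www.eastmoney.com/",  # no stock code, should be skipped
]

# Hypothetical <dt>/<dd> strings as .extract() would return them
SAMPLE_KEYS = ["<dt>Open</dt>", "<dt>Volume</dt>", "<dt>Limit up</dt>"]
SAMPLE_VALUES = ["<dd>10.52</dd>", "<dd>35.21M</dd>", '<dd class="s-up">--</dd>']


def extract_stock_urls(hrefs):
    """Same filtering as StocksSpider.parse: keep links with sh/sz + 6 digits."""
    urls = []
    for href in hrefs:
        codes = re.findall(r"[s][hz]\d{6}", href)
        if not codes:
            continue
        urls.append("https://gupiao.baidu.com/stock/" + codes[0] + ".html")
    return urls


def pair_fields(key_list, value_list):
    """Same <dt>/<dd> pairing as StocksSpider.parse_stock."""
    info = {}
    for i in range(len(key_list)):
        # Strip the leading ">" and the trailing "</dt>"
        key = re.findall(r">.*</dt>", key_list[i])[0][1:-5]
        try:
            # Value must start with a digit; strip the trailing "</dd>"
            val = re.findall(r"\d+\.?.*</dd>", value_list[i])[0][0:-5]
        except IndexError:
            val = "--"
        info[key] = val
    return info


print(extract_stock_urls(SAMPLE_HREFS))
print(pair_fields(SAMPLE_KEYS, SAMPLE_VALUES))
```

Note that a `<dd>` whose text has no digits (the third sample) falls back to `'--'`, exactly as in the spider.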

Code of pipelines.py:

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html


class BaidustocksPipeline(object):
    # Default pipeline from the project template: passes items through unchanged
    def process_item(self, item, spider):
        return item


class BaidustocksInfoPipeline(object):
    def open_spider(self, spider):
        # Called once when the spider starts; UTF-8 so Chinese text is written safely
        self.f = open('BaiduStockInfo.txt', 'w', encoding='utf-8')

    def close_spider(self, spider):
        # Called once when the spider finishes
        self.f.close()

    def process_item(self, item, spider):
        # Write each item on its own line as a dict representation
        line = str(dict(item)) + '\n'
        self.f.write(line)
        return item
```
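`str(dict(item))` writes Python reprs, which are awkward to load back later. A minimal alternative, assuming JSON Lines output is acceptable, writes one JSON object per line with `ensure_ascii=False` so that field names such as 股票名稱 stay readable. The class name `JsonLinesStockPipeline`, its `path` parameter and the sample item are hypothetical. Because Scrapy only calls the `open_spider`, `process_item` and `close_spider` hooks, the class needs no Scrapy import and can be driven by hand as below. Scrapy's built-in feed exports (for example `scrapy crawl stocks -o stocks.jl`) are another way to get the same format.

```python
import json
import os
import tempfile


class JsonLinesStockPipeline:
    """Hypothetical variant of BaidustocksInfoPipeline that writes JSON Lines."""

    def __init__(self, path="BaiduStockInfo.jl"):
        self.path = path
        self.f = None

    def open_spider(self, spider):
        # UTF-8 so non-ASCII keys and values are stored as-is
        self.f = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        self.f.close()

    def process_item(self, item, spider):
        # One JSON object per line; ensure_ascii=False keeps Chinese readable
        self.f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item


# Call the hooks in the same order Scrapy would
path = os.path.join(tempfile.mkdtemp(), "stocks.jl")
pipeline = JsonLinesStockPipeline(path)
pipeline.open_spider(spider=None)
pipeline.process_item({"股票名稱": "Sample000001", "Open": "10.52"}, spider=None)
pipeline.close_spider(spider=None)

with open(path, encoding="utf-8") as f:
    raw = f.read()
rows = [json.loads(line) for line in raw.splitlines()]
print(rows)
```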

The modified section of settings.py:

```python
# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'BaiduStocks.pipelines.BaidustocksInfoPipeline': 300,
}
```
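The integer in `ITEM_PIPELINES` is an ordering value: Scrapy passes each item through the enabled pipelines from the lowest value to the highest, and the values are conventionally kept in the 0-1000 range. The sketch below only mimics that ordering with `sorted`; enabling the template's `BaidustocksPipeline` at 200 is a hypothetical example and not part of the original settings.

```python
# Hypothetical settings with both pipelines from pipelines.py enabled
ITEM_PIPELINES = {
    'BaiduStocks.pipelines.BaidustocksInfoPipeline': 300,
    'BaiduStocks.pipelines.BaidustocksPipeline': 200,
}

# Lower value = earlier in the chain, so items pass through this order
order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
for rank, dotted_path in enumerate(order, start=1):
    print(rank, dotted_path)
```

With these values an item would reach `BaidustocksPipeline` first, and whatever it returns would then be written by `BaidustocksInfoPipeline`.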
