01 Spark源碼編譯

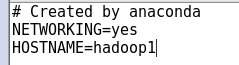

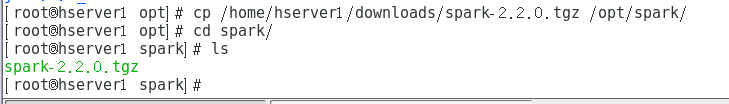

1.1設置機器名:hostname

gedit /etc/sysconfig/network

Scala

http://www.scala-lang.org/

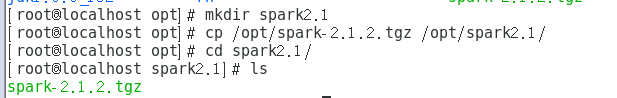

cd /opt

mkdir scala

cp /home/hserver1/desktop/scala-2.12.2.tgz /opt/scala

cd /opt/scala

tar -xvf scala-2.12.2.tgz

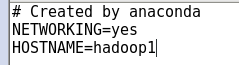

配置環境變量

gedit /etc/profile

export SCALA_HOME=/opt/scala/scala-2.12.2

source /etc/profile

驗證Scala

scala -version

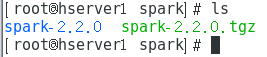

Spark源碼

* 下載http://mirror.bit.edu.cn/apache/spark/spark-2.2.0/

* tar -xvf

2、編譯代碼

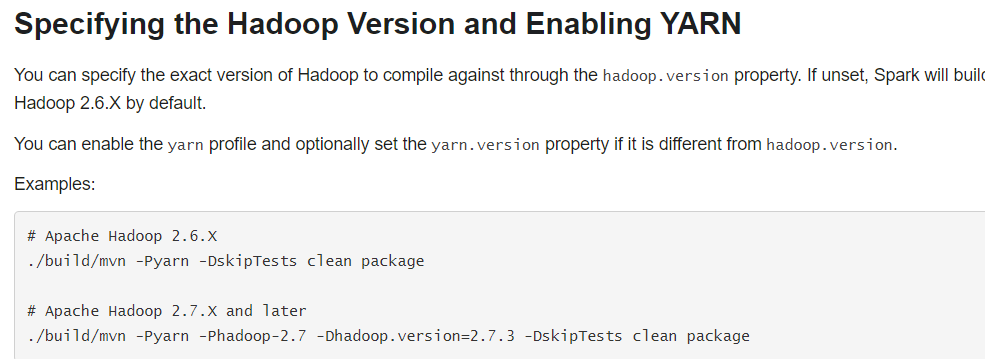

* 進入源碼根目錄,執行如下編譯命令:

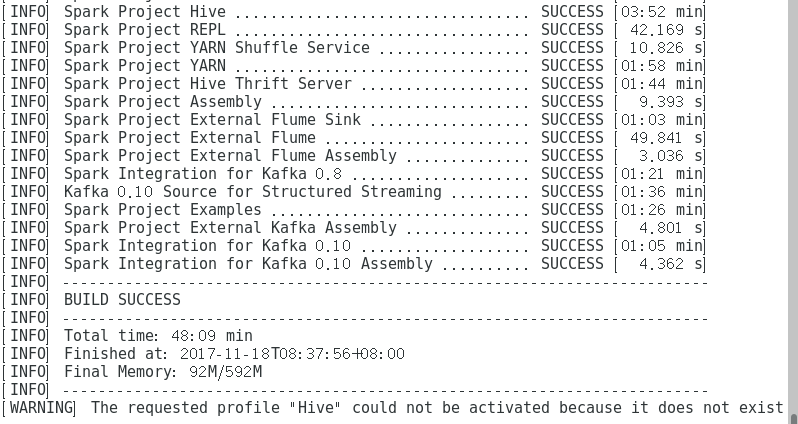

1. mvn -Pyarn -Phadoop-2.6 -DskipTests clean package

2. mvn -Pyarn -Dscala-2.10 -DskipTests clean package

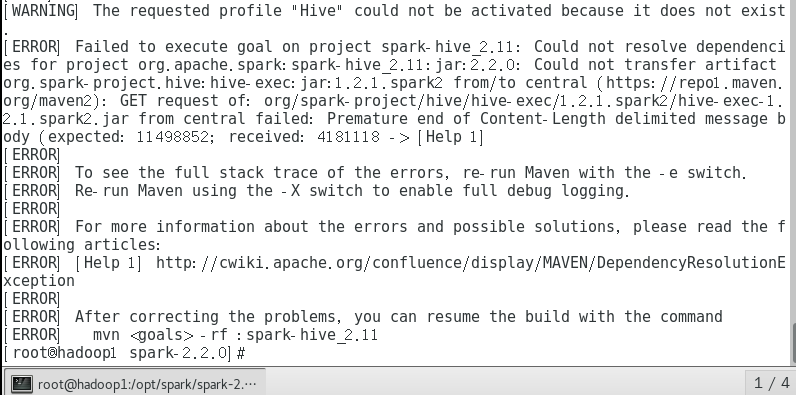

3. mvn -Pyarn -Phadoop-2.7 -PHive -Dhadoop.version=2.7.4 -DskipTests clean package 這個時間吧 大概是我從四點開始編譯到了六點半竟然還沒有編譯完。

經過修改

mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.4 -PHive -Phive-thriftserver -DskipTests clean package

mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.4 -PHive -Phive-thriftserver -DskipTests clean package

進行了很多次編譯,終於通過

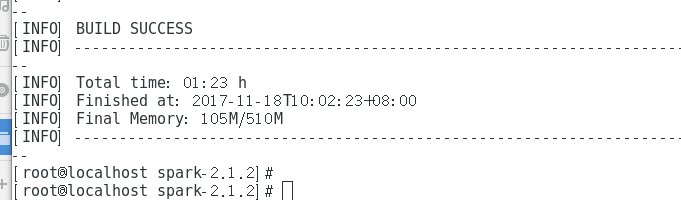

第二次編譯也成功了

3、生成部署包

./make-distribution.sh --name hadoop2.7.4 --tgz -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn

./make-distribution.sh --tgz -Phadoop-2.7 -Pyarn -DskipTests -Dhadoop.version=2.7.4 -Phive

./dev/make-distribution.sh --name hadoop2.7.4 --tgz -Psparkr -Phadoop-2.7 -Phive -Phive-thriftserver -Pmesos -Pyarn

同樣耗時略久,如無異常,會在源碼包根目錄生成安裝包

spark-2.2.0-bin-hadoop2.8.2.tgz

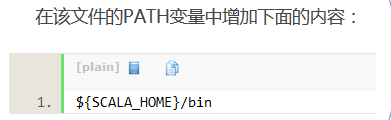

4、解壓spark安裝包至目標目錄

1. tar -xvf spark-2.2.0-bin-hadoop2.8.2.tgz -C /opt/spark/5、將spark加入到環境變量

在/etc/profile加入

gedit /etc/profile

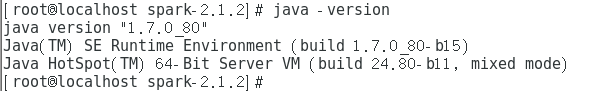

export JAVA_HOME=/opt/jdk1.8.0_152

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.4

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib"

export SCALA_HOME=/opt/scala/scala-2.12.2

export CLASS_PATH=.:${JAVA_HOME}/lib:${HIVE_HOME}/lib:$CLASS_PATH

export PATH=.:${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${SPARK_HOME}/bin:${SCALA_HOME}/bin:${MAVEN_HOME}/bin:$PATH

source /etc/profile

6、配置conf

進入conf目錄

cp slaves.template slaves

gedit slaves

添加hadoop1

cp spark-env.sh.template spark-env.sh

gedit spark-env.sh

1. export SPARK_MASTER_IP=hadoop1

2. export SPARK_LOCAL_IP=hadoop1

3. export SPARK_MASTER_PORT=7077

4. export SPARK_WORKER_CORES=1

5. export SPARK_WORKER_INSTANCES=1

6. export SPARK_WORKER_MEMORY=512M

7. export LD_LIBRARY_PATH=$JAVA_LIBRARY_PATHcp spark-defaults.conf.template spark-defaults.conf

gedit spark-defaults.conf

1. spark.master spark://master:7077

2. spark.serializer org.apache.spark.serializer.KryoSerializer

3. spark.driver.memory 512m

4. spark.executor.extraJavaOptions -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three" 更改日誌級別:

cp log4j.properties.template log4j.properties

gedit log4j.properties

19 log4j.rootCategory=WARNING, console

7、啟動hdfs

cd $HADOOP_HOME/sbin

./start-dfs.sh

8、啟動spark

cd $SPARK_HOME/sbin

./start-all.sh

瀏覽器訪問http://hadoop1:8080/就可以訪問到spark集群的主頁

註意:需要將防火墻關閉service iptables stop

驗證啟動:

jps

驗證客戶端連接:

spark-shell --master spark://hadoop1:7077 --executor-memory 500m

01 Spark源碼編譯