GlusterFS Distributed File System

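Chapter 1 What Is a Distributed File System?

In contrast to a local file system, a distributed file system (DFS), or network file system (NFS), is a file system that lets files be shared across multiple hosts over a network, so that multiple users on multiple machines can share designated files and storage space.

In such a file system, clients do not access the underlying data storage blocks directly. Instead, they talk to the server over the network using a specific communication protocol. The design of that protocol lets both the client and the server restrict access to the file system based on access control lists or authorization.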

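Chapter 2 Introduction to GlusterFS

GlusterFS is an open-source distributed file system with strong scale-out capability: by scaling out it can support several petabytes of storage and serve thousands of clients. GlusterFS relies on TCP/IP or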

Chapter 3 GlusterFS Server Deployment:

3.1 Environment preparation:

[root@lb01 ~]# cat /etc/redhat-release

CentOS Linux release 7.2.1511 (Core)

[root@lb01 ~]# systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Mar 18 00:27:08 lb01 systemd[1]: Starting firewalld - dynamic firewall daemon...

Mar 18 00:27:12 lb01 systemd[1]: Started firewalld - dynamic firewall daemon.

Mar 18 01:29:20 lb01 systemd[1]: Stopping firewalld - dynamic firewall daemon...

Mar 18 01:29:22 lb01 systemd[1]: Stopped firewalld - dynamic firewall daemon.

[root@lb01 ~]# getenforce

Disabled

[root@lb01 ~]# hostname -I

10.0.0.5 172.16.1.5

[root@lb02 ~]# cat /etc/redhat-release

CentOS Linux release 7.2.1511 (Core)

[root@lb02 ~]# systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

[root@lb02 ~]# getenforce

Disabled

[root@lb02 ~]# hostname -I

10.0.0.6 172.16.1.6

Note: configuring the hosts file is a critical step, and every machine must have these entries

[root@web03 gv0]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.1.41 backup # gluster server

172.16.1.17 web03 # gluster server

172.16.1.18 web04

172.16.1.21 cache01 # client that mounts the volume
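
Because every machine needs the same entries, a quick check can catch a missing name before the peers are probed. The following is a minimal sketch, not part of the original setup: the function name `missing_hosts` is hypothetical, and it only inspects a hosts file (it does not query DNS).

```shell
# Print every expected hostname that has no entry in the given hosts file.
# Usage: missing_hosts HOSTS_FILE NAME...
# (missing_hosts is a hypothetical helper name used for illustration.)
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, skip the address column, then look for an exact match.
        if ! awk -v want="$name" '
            { sub(/#.*/, "") }
            { for (i = 2; i <= NF; i++) if ($i == want) found = 1 }
            END { exit !found }
        ' "$hosts_file"; then
            printf '%s\n' "$name"
        fi
    done
}
```

Running `missing_hosts /etc/hosts backup web03 web04 cache01` on each machine should print nothing when the file is complete.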

3.2 Server deployment

Perform the same steps on both backup and web03:

yum install centos-release-gluster -y # adds the GlusterFS repository

yum -y install glusterfs-server

systemctl start glusterd

Note: you can switch the repository to a mirror to speed up downloads

sed -i 's#http://mirror.centos.org#https://mirrors.shuosc.org#g' /etc/yum.repos.d/CentOS-Gluster-3.12.repo

3.3 Create the trusted pool. Probing from one side is enough; this joins the servers together so that they act as a single cluster

[root@backup ~]# gluster peer probe web03

peer probe: success.

3.3.1 Note: to remove a machine from the trusted pool, use

gluster peer detach <hostname>

Check the state of the trusted pool

[root@web03 gv0]# gluster peer status

Number of Peers: 1

Hostname: backup

Uuid: 55a0673a-fc85-45de-92f4-ffe4c6918806

State: Peer in Cluster (Connected)

[root@backup ~]# gluster peer status

Number of Peers: 1

Hostname: web03

Uuid: f89a8665-f94a-4246-a626-4a9e0dc6be49

State: Peer in Cluster (Connected)
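
The same check can be scripted by counting the peers reported as connected. This is a small sketch under the assumption that the output format matches the transcripts above; `connected_peers` is a hypothetical helper name.

```shell
# Count peers reported as connected.
# Reads `gluster peer status` output on stdin and prints the count.
# (connected_peers is a hypothetical helper name used for illustration.)
connected_peers() {
    # grep -c exits non-zero when the count is 0, so mask that with "|| true".
    grep -cF 'State: Peer in Cluster (Connected)' || true
}
```

With the two-server pool shown here, `gluster peer status | connected_peers` should print 1 on each server.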

3.3.2 Create a distributed volume

Create the data directory on each server:

[root@web03 ~]# mkdir -p /data/gv0

[root@backup ~]# mkdir -p /data/gv0

Create the distributed volume with the command below. The volume is named test here, but any name will do

[root@lb01 ~]# gluster volume create test 172.16.1.5:/data/exp1/ 172.16.1.6:/data/exp1/ force

volume create: test: success: please start the volume to access data

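Note: the trailing force allows the bricks to be placed on the system disk, which GlusterFS refuses by default

View the volume information; the output should be identical on every server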

[root@lb01 ~]# gluster volume info test

Volume Name: test

Type: Distribute

Volume ID: 03f9176c-4e7d-49dc-a8f5-9f9f8cc08867

Status: Created

Snapshot Count: 0

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: 172.16.1.5:/data/exp1

Brick2: 172.16.1.6:/data/exp1

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

Start the volume

[root@lb01 ~]# gluster volume start test

volume start: test: success
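
To confirm from a script that the volume really moved from Created to Started, one field of the `gluster volume info` output can be extracted. A minimal sketch, assuming the `Key: value` layout shown above; `volume_field` is a hypothetical helper name.

```shell
# Print the value of one "Key: value" line from `gluster volume info` output.
# Reads the output on stdin; prints nothing if the key is absent.
# (volume_field is a hypothetical helper name used for illustration.)
volume_field() {
    awk -F': ' -v key="$1" '$1 == key { print $2; exit }'
}
```

For example, `gluster volume info test | volume_field Status` should print Started once the volume is running.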

Chapter 4 Client Deployment:

4.1 Install the software:

[root@cache01 ~]# yum install centos-release-gluster -y

[root@cache01 ~]# yum install -y glusterfs glusterfs-fuse

[root@cache01 ~]# mount -t glusterfs 172.16.1.17:/test /mnt/

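4.2 Special note:

When running the mount command on the client, what follows the colon after the IP or hostname is the volume name, not a directory!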

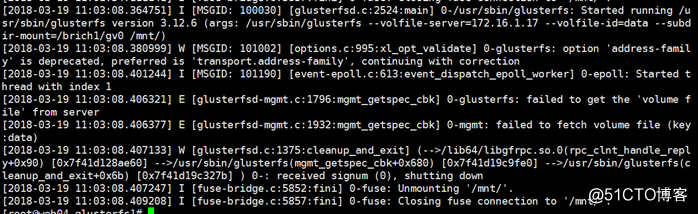

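I spent two days of trial and error, and every failure came down to the mount command. If you give a directory instead of the volume name, you get the following error: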

[root@cache01 ~]# mount.glusterfs 172.16.1.17:/data/gv0 /mnt/

Mount failed. Please check the log file for more details.
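
A simple guard can catch this mistake before mount is called. The sketch below is only a heuristic for the host:/volume form used in this article: it treats anything with more than one path component after the colon as a brick directory. `is_volume_source` is a hypothetical helper name.

```shell
# Succeed when SOURCE looks like host:/volume (or host:volume),
# i.e. a single name after the colon rather than a directory path.
# (is_volume_source is a hypothetical helper name used for illustration.)
is_volume_source() {
    case $1 in
        ?*:?*) ;;            # need a non-empty host and something after the colon
        *) return 1 ;;
    esac
    vol=${1#*:}              # drop "host:"
    vol=${vol#/}             # drop the optional leading slash
    case $vol in
        ''|*/*) return 1 ;;  # empty, or still has a slash: looks like a directory
        *) return 0 ;;
    esac
}
```

With this in place, `is_volume_source 172.16.1.17:/test` succeeds, while `is_volume_source 172.16.1.17:/data/gv0` fails.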

Checking /var/log/glusterfs/mnt.log shows the following:

4.3 Verify:

Create a directory on the client

[root@cache01 mnt]# mkdir nihao

[root@cache01 mnt]# ll

total 4

drwxr-xr-x 2 root root 4096 Mar 27 2018 nihao

4.4 Check on the servers:

[root@backup gv0]# ll # backup server looks correct

total 0

drwxr-xr-x 2 root root 6 Mar 27 03:18 nihao

[root@web03 gv0]# ll # web03 server looks correct

total 0

drwxr-xr-x 2 root root 6 Mar 18 00:43 nihao

At this point, a simple GlusterFS service is up and running
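
The test directory shows up on both servers because a distributed volume creates directories on every brick. Regular files behave differently: each file is stored on exactly one brick, chosen by hashing its name. The toy sketch below only illustrates that idea with `cksum`; it is not the hash GlusterFS actually uses, and `pick_brick` is a hypothetical name.

```shell
# Toy placement: map a file name to one of N bricks via a checksum.
# Illustration only; GlusterFS uses its own hash and per-directory hash ranges.
# (pick_brick is a hypothetical helper name.)
pick_brick() {
    name=$1
    bricks=$2
    # cksum prints "CRC SIZE"; keep only the CRC.
    sum=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    echo $(( sum % bricks ))
}
```

Calling `pick_brick somefile.txt 2` always returns the same brick index (0 or 1) for the same name, which is why a client can locate a file without a central metadata server.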

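Chapter 5 Extension: How to Create Replicated and Striped Volumes

5.1.1 Create a replicated volume (comparable to RAID 1)

Create the data directories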

[root@lb01 ~]# mkdir /data/exp3

[root@lb02 ~]# mkdir /data/exp4

Create the replicated volume with the command below; it is named repl

[root@lb01 data]# gluster volume create repl replica 2 transport tcp 172.16.1.5:/data/exp3/ 172.16.1.6:/data/exp4 force

volume create: repl: success: please start the volume to access data

View the volume information

[root@lb01 data]# gluster volume info repl

Volume Name: repl

Type: Replicate

Volume ID: 98182696-e065-4bdb-9a11-f6a086092983

Status: Created

Snapshot Count: 0

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: 172.16.1.5:/data/exp3

Brick2: 172.16.1.6:/data/exp4

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

Start the volume

[root@lb01 data]# gluster volume start repl

volume start: repl: success

5.1.2 Create a striped volume (comparable to RAID 0)

Create the data directories

[root@lb01 data]# mkdir /data/exp5

[root@lb02 data]# mkdir /data/exp6

Create the striped volume with the command below; it is named raid0

[root@lb01 data]# gluster volume create raid0 stripe 2 transport tcp 172.16.1.5:/data/exp5/ 172.16.1.6:/data/exp6 force

volume create: raid0: success: please start the volume to access data

View the volume information

[root@lb01 data]# gluster volume info raid0

Volume Name: raid0

Type: Stripe

Volume ID: f8578fab-11c7-4aaa-b58f-b1825233cfd6

Status: Created

Snapshot Count: 0

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: 172.16.1.5:/data/exp5

Brick2: 172.16.1.6:/data/exp6

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

Start the volume

[root@lb01 data]# gluster volume start raid0

volume start: raid0: success
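
The RAID comparison can be made concrete with a little arithmetic. As a rough sketch that assumes equally sized bricks and ignores file system overhead, usable space is the total brick space divided by the replica count (use 1 for distributed and striped volumes). `usable_capacity` and the 100 GB brick size below are hypothetical.

```shell
# usable_capacity BRICKS BRICK_SIZE_GB REPLICA
# Rough usable space in GB, assuming equally sized bricks.
# (usable_capacity is a hypothetical helper name used for illustration.)
usable_capacity() {
    echo $(( $1 * $2 / $3 ))
}

usable_capacity 2 100 2   # replica 2, like the repl volume: prints 100
usable_capacity 2 100 1   # no replication, like test or raid0: prints 200
```

So with two bricks, the replicated volume trades half of the raw space for a second copy of every file, while the distributed and striped volumes expose all of it with no redundancy.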
