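Implementing RAID on Linux and Basic Usage of the mdadm Command

I. RAID Overview

A disk array (Redundant Arrays of Independent Disks, RAID) combines several physical disks into one array that is used as a single logical disk. Data is stored across the member disks in segments or stripes, so reads and writes can proceed on several disks at once, which substantially raises the throughput of the storage system.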
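
To make the striping idea concrete, here is a minimal sketch that assumes a plain round-robin layout. The function name `stripe_location` is made up for illustration, and real controllers and the Linux md driver use their own layouts.

```shell
# Map a logical chunk number to (disk, stripe) under plain round-robin striping.
# Assumption: chunks and disks are both numbered from 0.
stripe_location() (
    chunk=$1      # logical chunk number
    disks=$2      # number of disks in the array
    echo "disk=$((chunk % disks)) stripe=$((chunk / disks))"
)

stripe_location 0 3   # disk=0 stripe=0
stripe_location 4 3   # disk=1 stripe=1
```

Consecutive chunks land on different disks, which is why several disks can work on one large read or write at the same time.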

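II. RAID Categories

1. External disk arrays: commonly used with large servers. They are dedicated hardware array enclosures and are expensive. Major vendors include IBM, HP, and EMC.

2. Internal disk arrays: require a hardware RAID card (array card) and technical staff to operate.

3. Software-emulated disk arrays: the operating system's own disk management (implemented in software) combines multiple disks into one logical disk. This is comparatively cheap.

III. RAID Levels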

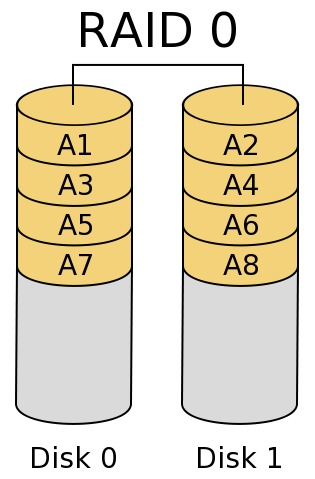

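1. RAID 0

The earliest RAID mode, based on striping. It needs at least two disks, is cheap, and improves overall disk performance and throughput. It has no fault tolerance, however: if any one disk fails, the data in the array is lost.

2. RAID 1

Disk mirroring. Every write to one disk is also written to a second disk, so the second disk always holds a mirror copy. Because each write is performed twice, the load on the disk controller is high; spreading the disks across multiple controllers can ease the read/write load.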

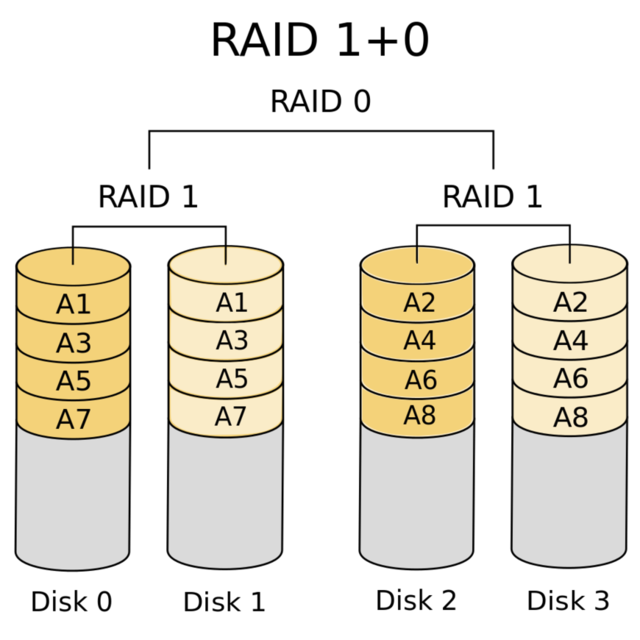

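3. RAID 1 + 0

A highly reliable and efficient layout: the lower layer consists of two RAID 1 mirrors, and a RAID 0 stripe is built on top of them. It needs at least four disks.

4. RAID 0 + 1

An efficient, high-performance layout: the lower layer consists of two RAID 0 stripes, and a RAID 1 mirror is built on top of them. It needs at least four disks.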

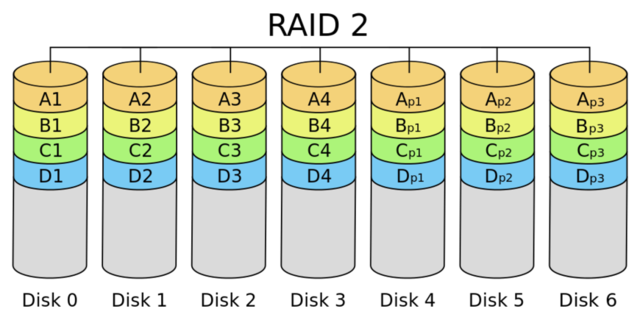

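5. RAID 2

Uses Hamming-code error correction. This coding scheme needs several disks to hold the check and recovery information, which makes RAID 2 more complex to implement, so it is rarely used commercially.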

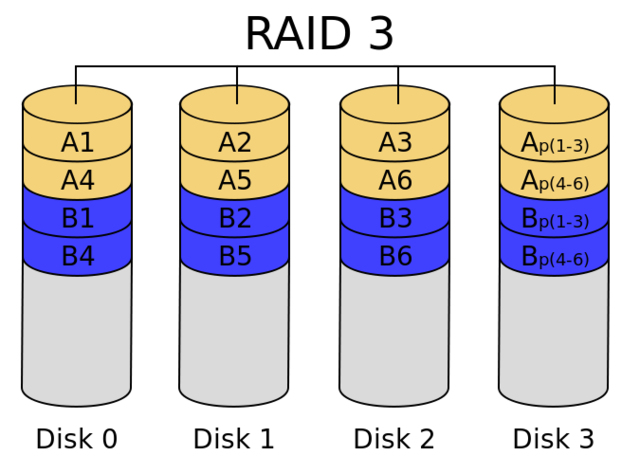

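6. RAID 3

Parallel transfer with a parity code. Parity by itself can only detect an error, not locate and correct it (when it is already known which disk has failed, that disk's data can still be rebuilt from the remaining disks and the parity).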

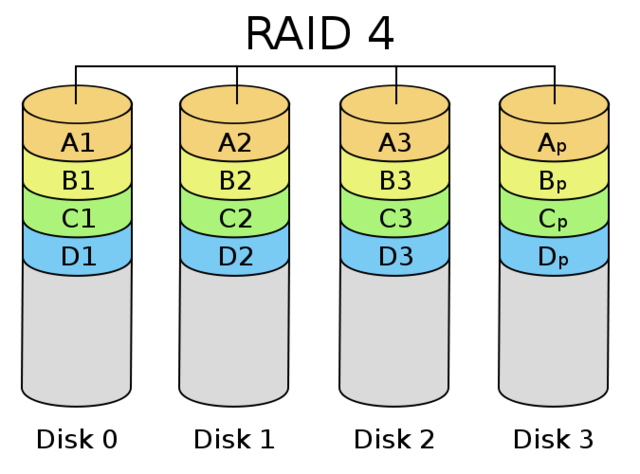

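7. RAID 4

Data is accessed block by block, that is, disk by disk, one disk at a time. In the figure, RAID 3 works on one horizontal stripe at a time, whereas RAID 4 works on one vertical column at a time.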

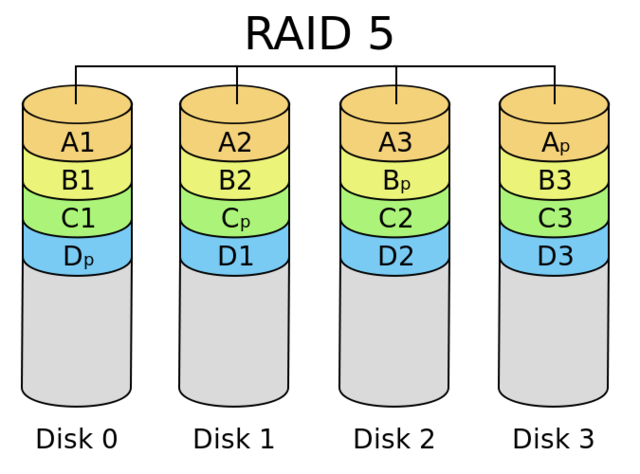

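8. RAID 5

Independent disks with distributed parity. Reliability is good: when a single disk fails, the system rebuilds its data from the stored parity. If two disks fail at the same time, the data is lost entirely.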
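
The rebuild works because parity is an XOR of the data chunks. The sketch below shows this on single bytes, assuming a stripe with two data chunks and one parity chunk (a 3-disk array); the byte values are arbitrary examples.

```shell
# RAID 5 style parity on single bytes: parity = d0 XOR d1.
# Assumption: two data chunks and one parity chunk per stripe (a 3-disk array).
d0=165            # 0xA5, byte stored on disk 0
d1=60             # 0x3C, byte stored on disk 1
parity=$((d0 ^ d1))

# Pretend disk 1 failed: rebuild its byte from the survivor and the parity.
rebuilt=$((d0 ^ parity))

echo "parity=$parity rebuilt_d1=$rebuilt"   # parity=153 rebuilt_d1=60
```

With two disks missing there is only one known value left in the equation, so nothing can be recovered, which matches the two-disk failure case described above.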

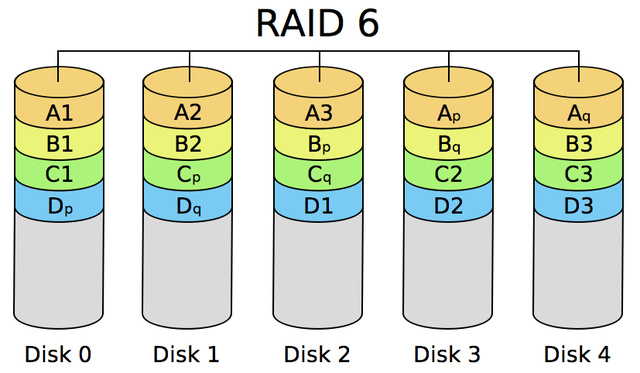

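9. RAID 6

Independent disks with two separately distributed parity codes. The extra parity is mainly for situations where data must absolutely not be lost or corrupted.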

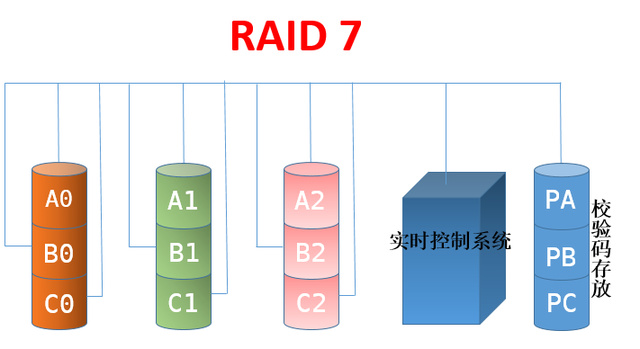

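10. RAID 7

All I/O transfers are carried out concurrently and can be controlled independently, which increases parallelism and speeds up data access. It also includes a high-speed cache. The combination of the parallel design and the cache makes data access considerably more efficient.

IV. Summary of Common RAID Levels

The material above was collected from the internet.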
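
As a quick way to compare the common levels, here is a small sketch that computes usable capacity from the number of disks and the size of each disk. The function name `raid_capacity` is made up for illustration, it assumes equally sized disks, and it treats RAID 10 as striped two-way mirrors.

```shell
# Usable capacity for common RAID levels, in the same unit as the disk size.
# Usage: raid_capacity LEVEL DISKS SIZE_PER_DISK
raid_capacity() (
    level=$1; n=$2; size=$3
    case $level in
        0)  echo $(( n * size )) ;;          # striping, no redundancy
        1)  echo "$size" ;;                  # every disk holds the same data
        5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity
        6)  echo $(( (n - 2) * size )) ;;    # two disks' worth of parity
        10) echo $(( n / 2 * size )) ;;      # striped two-way mirrors
        *)  echo "unsupported level: $level" >&2; exit 1 ;;
    esac
)

raid_capacity 5 3 2    # 4, e.g. three 2 GiB members give about 4 GiB
```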

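Now that we know the RAID levels and their strengths and weaknesses, how do we implement RAID on Linux?

The tool for this is mdadm (short for "multiple devices admin"), the standard software RAID management tool on Linux, written by Neil Brown. It can diagnose, monitor, and collect detailed information about arrays. Linux currently implements software RAID through MD (Multiple Devices) virtual block devices: several underlying block devices are combined into one new virtual device. Striping spreads data blocks evenly across the disks to improve read/write performance, and various redundancy algorithms ensure that user data is not completely lost when one block device fails and that the lost data can be restored onto a replacement device.

MD currently supports linear, multipath, raid0 (striping), raid1 (mirror), raid4, raid5, raid6, and raid10, which differ in redundancy level and layout. Arrays can also be stacked on top of one another to form types such as RAID 1+0 and RAID 5+1.

V. Creating a RAID 5 array on Linux with one hot spare (that is, when a disk in the array fails, the spare immediately takes its place)

A RAID 5 array needs at least three disks. Here we prepare four, with one as the hot spare. We use fdisk to partition /dev/sdb, and the partitions stand in for separate disks (for demonstration only; partitions on a single physical disk provide no real redundancy).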

    [root@test ~]# fdisk /dev/sdb

    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
             switch off the mode (command 'c') and change display units to
             sectors (command 'u').

    Command (m for help): m
    Command action
       a   toggle a bootable flag
       b   edit bsd disklabel
       c   toggle the dos compatibility flag
       d   delete a partition
       l   list known partition types
       m   print this menu
       n   add a new partition
       o   create a new empty DOS partition table
       p   print the partition table
       q   quit without saving changes
       s   create a new empty Sun disklabel
       t   change a partition's system id
       u   change display/entry units
       v   verify the partition table
       w   write table to disk and exit
       x   extra functionality (experts only)

    Command (m for help): p

    Disk /dev/sdb: 21.5 GB, 21474836480 bytes
    255 heads, 63 sectors/track, 2610 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xaebe4f2c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        1306    10490413+   5  Extended

    Command (m for help): n
    Command action
       l   logical (5 or over)
       p   primary partition (1-4)
    l
    First cylinder (1-1306, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-1306, default 1306): +2G

    Command (m for help): n
    Command action
       l   logical (5 or over)
       p   primary partition (1-4)
    l
    First cylinder (263-1306, default 263):
    Using default value 263
    Last cylinder, +cylinders or +size{K,M,G} (263-1306, default 1306): +2G

    Command (m for help): n
    Command action
       l   logical (5 or over)
       p   primary partition (1-4)
    l
    First cylinder (525-1306, default 525):
    Using default value 525
    Last cylinder, +cylinders or +size{K,M,G} (525-1306, default 1306): +2G

    Command (m for help): n
    Command action
       l   logical (5 or over)
       p   primary partition (1-4)
    l
    First cylinder (787-1306, default 787):
    Using default value 787
    Last cylinder, +cylinders or +size{K,M,G} (787-1306, default 1306): +2G

    Command (m for help): p

    Disk /dev/sdb: 21.5 GB, 21474836480 bytes
    255 heads, 63 sectors/track, 2610 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xaebe4f2c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        1306    10490413+   5  Extended
    /dev/sdb5               1         262     2104452   83  Linux
    /dev/sdb6             263         524     2104483+  83  Linux
    /dev/sdb7             525         786     2104483+  83  Linux
    /dev/sdb8             787        1048     2104483+  83  Linux

Note: we created four 2 GB partitions on /dev/sdb. Next, change their partition type to Linux raid autodetect (type code fd).
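
Each partition spans 262 cylinders (1-262, 263-524, and so on) rather than exactly 2 GiB. The arithmetic below is a sketch of why, assuming that this fdisk version rounds a `+2G` request up to a whole number of cylinders; the cylinder size comes from the "Units" line in the output.

```shell
# How many whole cylinders does a "+2G" request need on this disk geometry?
# Assumption: fdisk rounds the size up to a whole number of cylinders.
cyl_bytes=$((16065 * 512))                 # 8225280, from the "Units" line
want=$((2 * 1024 * 1024 * 1024))           # 2 GiB in bytes
cylinders=$(( (want + cyl_bytes - 1) / cyl_bytes ))   # ceiling division
echo "$cylinders cylinders"                # 262 cylinders
```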

    Command (m for help): m
    Command action
       a   toggle a bootable flag
       b   edit bsd disklabel
       c   toggle the dos compatibility flag
       d   delete a partition
       l   list known partition types
       m   print this menu
       n   add a new partition
       o   create a new empty DOS partition table
       p   print the partition table
       q   quit without saving changes
       s   create a new empty Sun disklabel
       t   change a partition's system id
       u   change display/entry units
       v   verify the partition table
       w   write table to disk and exit
       x   extra functionality (experts only)

    Command (m for help): t
    Partition number (1-8): 5
    Hex code (type L to list codes): L

     0  Empty           24  NEC DOS         81  Minix / old Lin bf  Solaris
     1  FAT12           39  Plan 9          82  Linux swap / So c1  DRDOS/sec (FAT-
     2  XENIX root      3c  PartitionMagic  83  Linux           c4  DRDOS/sec (FAT-
     3  XENIX usr       40  Venix 80286     84  OS/2 hidden C:  c6  DRDOS/sec (FAT-
     4  FAT16 <32M      41  PPC PReP Boot   85  Linux extended  c7  Syrinx
     5  Extended        42  SFS             86  NTFS volume set da  Non-FS data
     6  FAT16           4d  QNX4.x          87  NTFS volume set db  CP/M / CTOS / .
     7  HPFS/NTFS       4e  QNX4.x 2nd part 88  Linux plaintext de  Dell Utility
     8  AIX             4f  QNX4.x 3rd part 8e  Linux LVM       df  BootIt
     9  AIX bootable    50  OnTrack DM      93  Amoeba          e1  DOS access
     a  OS/2 Boot Manag 51  OnTrack DM6 Aux 94  Amoeba BBT      e3  DOS R/O
     b  W95 FAT32       52  CP/M            9f  BSD/OS          e4  SpeedStor
     c  W95 FAT32 (LBA) 53  OnTrack DM6 Aux a0  IBM Thinkpad hi eb  BeOS fs
     e  W95 FAT16 (LBA) 54  OnTrackDM6      a5  FreeBSD         ee  GPT
     f  W95 Ext'd (LBA) 55  EZ-Drive        a6  OpenBSD         ef  EFI (FAT-12/16/
    10  OPUS            56  Golden Bow      a7  NeXTSTEP        f0  Linux/PA-RISC b
    11  Hidden FAT12    5c  Priam Edisk     a8  Darwin UFS      f1  SpeedStor
    12  Compaq diagnost 61  SpeedStor       a9  NetBSD          f4  SpeedStor
    14  Hidden FAT16 <3 63  GNU HURD or Sys ab  Darwin boot     f2  DOS secondary
    16  Hidden FAT16    64  Novell Netware  af  HFS / HFS+      fb  VMware VMFS
    17  Hidden HPFS/NTF 65  Novell Netware  b7  BSDI fs         fc  VMware VMKCORE
    18  AST SmartSleep  70  DiskSecure Mult b8  BSDI swap       fd  Linux raid auto
    1b  Hidden W95 FAT3 75  PC/IX           bb  Boot Wizard hid fe  LANstep
    1c  Hidden W95 FAT3 80  Old Minix       be  Solaris boot    ff  BBT
    1e  Hidden W95 FAT1
    Hex code (type L to list codes): fd
    Changed system type of partition 5 to fd (Linux raid autodetect)

    Command (m for help): t
    Partition number (1-8): 6
    Hex code (type L to list codes): fd
    Changed system type of partition 6 to fd (Linux raid autodetect)

    Command (m for help): t
    Partition number (1-8): 7
    Hex code (type L to list codes): fd
    Changed system type of partition 7 to fd (Linux raid autodetect)

    Command (m for help): t
    Partition number (1-8): 8
    Hex code (type L to list codes): fd
    Changed system type of partition 8 to fd (Linux raid autodetect)

    Command (m for help): p

    Disk /dev/sdb: 21.5 GB, 21474836480 bytes
    255 heads, 63 sectors/track, 2610 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xaebe4f2c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        1306    10490413+   5  Extended
    /dev/sdb5               1         262     2104452   fd  Linux raid autodetect
    /dev/sdb6             263         524     2104483+  fd  Linux raid autodetect
    /dev/sdb7             525         786     2104483+  fd  Linux raid autodetect
    /dev/sdb8             787        1048     2104483+  fd  Linux raid autodetect

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.

Check the partition layout and tell the kernel about the new partitions

    [root@test ~]# fdisk -l

    Disk /dev/sda: 42.9 GB, 42949672960 bytes
    255 heads, 63 sectors/track, 5221 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00058c8c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1          52      409600   83  Linux
    Partition 1 does not end on cylinder boundary.
    /dev/sda2              52        4457    35388416   83  Linux
    /dev/sda3            4457        5222     6144000   82  Linux swap / Solaris

    Disk /dev/sdb: 21.5 GB, 21474836480 bytes
    255 heads, 63 sectors/track, 2610 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xaebe4f2c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        1306    10490413+   5  Extended
    /dev/sdb5               1         262     2104452   fd  Linux raid autodetect
    /dev/sdb6             263         524     2104483+  fd  Linux raid autodetect
    /dev/sdb7             525         786     2104483+  fd  Linux raid autodetect
    /dev/sdb8             787        1048     2104483+  fd  Linux raid autodetect

    Disk /dev/md0: 2152 MB, 2152857600 bytes
    2 heads, 4 sectors/track, 525600 cylinders
    Units = cylinders of 8 * 512 = 4096 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    [root@test ~]# partx -a /dev/sdb5 /dev/sdb
    [root@test ~]# partx -a /dev/sdb6 /dev/sdb
    [root@test ~]# partx -a /dev/sdb7 /dev/sdb
    [root@test ~]# partx -a /dev/sdb8 /dev/sdb

With the partitions created and their type set, we can create the RAID 5 array. In the command below, -C creates an array, -l sets the RAID level, -n the number of active devices, and -x the number of spare devices.

    [root@test ~]# mdadm -C /dev/md0 -l 5 -n 3 -x 1 /dev/sdb{5,6,7,8}
    mdadm: /dev/sdb5 appears to be part of a raid array:
        level=raid1 devices=2 ctime=Thu Nov 1 18:08:48 2018
    mdadm: /dev/sdb6 appears to be part of a raid array:
        level=raid1 devices=2 ctime=Thu Nov 1 18:08:48 2018
    mdadm: /dev/sdb7 appears to be part of a raid array:
        level=raid1 devices=2 ctime=Thu Nov 1 18:08:48 2018
    Continue creating array? y
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.
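
The device count on the command line has to equal active devices plus spares. The helper below is a hypothetical dry-run sketch (the name `md_create_cmd` is made up): it checks that count and only prints the equivalent long-option command, without running mdadm.

```shell
# Print (not run) an mdadm create command after a basic sanity check.
# Usage: md_create_cmd ARRAY LEVEL ACTIVE SPARES DEVICE...
md_create_cmd() (
    array=$1; level=$2; active=$3; spares=$4
    shift 4
    if [ "$#" -ne $((active + spares)) ]; then
        echo "expected $((active + spares)) devices, got $#" >&2
        exit 1
    fi
    echo "mdadm --create $array --level=$level --raid-devices=$active --spare-devices=$spares $*"
)

md_create_cmd /dev/md0 5 3 1 /dev/sdb5 /dev/sdb6 /dev/sdb7 /dev/sdb8
```

Printing the command first makes it easy to review before anything is written to the disks.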

Check the newly created array

    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb7[4] sdb8[3](S) sdb6[1] sdb5[0]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]
          [>....................] recovery = 3.9% (83552/2102272) finish=0.8min speed=41776K/sec

    unused devices: <none>
    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 19:27:13 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 19:27:23 2018
              State : clean, degraded, recovering
     Active Devices : 2
    Working Devices : 4
     Failed Devices : 0
      Spare Devices : 2
             Layout : left-symmetric
         Chunk Size : 512K
     Rebuild Status : 43% complete
               Name : test:0 (local to host test)
               UUID : f1c7f735:ac34fde5:8ee6bb5b:55189221
             Events : 7

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           1       8       22        1      active sync   /dev/sdb6
           4       8       23        2      spare rebuilding   /dev/sdb7
           3       8       24        -      spare   /dev/sdb8

Note: three partitions are members of the array and one is an idle spare. While the initial build is still running, mdadm -D reports the array as degraded and lists /dev/sdb7 as "spare rebuilding", which is why Spare Devices shows 2 at this point.
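
The `[3/2] [UU_]` part of the /proc/mdstat status line is the quickest health indicator: each `U` is a working member and each `_` is a missing or still-rebuilding one. Here is a minimal sketch that classifies such a line; the function name `md_health` is made up, and it expects the single status line as its argument rather than reading /proc/mdstat itself.

```shell
# Report whether an md status line such as "... [3/2] [UU_]" is degraded.
# Each "U" is a working member, each "_" a missing or rebuilding one.
md_health() (
    line=$1
    map=${line##*\[}       # text after the last "[", e.g. "UU_]"
    map=${map%%\]*}        # cut from the first "]", leaving "UU_"
    case $map in
        *_*) echo "degraded ($map)" ;;
        *)   echo "healthy ($map)" ;;
    esac
)

md_health "4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]"
md_health "4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]"
```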

That completes the creation of the RAID 5 array. To use it, we first create a file system on it (that is, choose its file system type) and then mount it into the root file system tree.

Creating the file system: the new array appears in Linux as /dev/md0, so that is the device we format. The name does not have to be md0; md1, md2, and so on work as well, depending on what you specified when creating the array. Numbering arrays in order makes them easier to remember.

    [root@test ~]# mke2fs -t ext3 /dev/md0
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=128 blocks, Stripe width=256 blocks
    262944 inodes, 1051136 blocks
    52556 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=1077936128
    33 block groups
    32768 blocks per group, 32768 fragments per group
    7968 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 34 mounts or
    180 days, whichever comes first. Use tune2fs -c or -i to override.
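
The "Stride=128 blocks, Stripe width=256 blocks" line in this output follows from the array geometry. The arithmetic below reproduces it from the 512k chunk size, the 4096-byte block size, and the two data chunks per stripe of a 3-disk RAID 5; the variable names are only illustrative.

```shell
# Reproduce the "Stride" and "Stripe width" values that mke2fs printed.
chunk_kib=512      # md chunk size, from /proc/mdstat ("512k chunk")
block_kib=4        # file system block size (4096 bytes)
data_disks=2       # 3-disk RAID 5 = 2 data chunks + 1 parity chunk per stripe

stride=$((chunk_kib / block_kib))          # file system blocks per chunk
stripe_width=$((stride * data_disks))      # file system blocks per full stripe
echo "stride=$stride stripe_width=$stripe_width"   # stride=128 stripe_width=256
```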

Mount it and check the result

    [root@test ~]# mkdir test/raid5 -p
    [root@test ~]# mount /dev/md0 test/raid5
    [root@test ~]# ll test/raid5/
    total 16
    drwx------ 2 root root 16384 Nov 1 19:35 lost+found
    [root@test ~]# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        34G  1.6G   30G   6% /
    tmpfs           1.9G     0  1.9G   0% /dev/shm
    /dev/sda1       380M   41M  320M  12% /boot
    /dev/md0        4.0G  137M  3.7G   4% /root/test/raid5

Note: the RAID 5 array is now mounted under the root file system. A mount made this way is gone after a reboot. To make it permanent, add an entry to /etc/fstab, which is read at boot. Each line of /etc/fstab has six fields: (1) the device to mount; (2) the mount point; (3) the file system type; (4) the mount options, normally defaults, with extra options such as acl separated by commas; (5) the dump frequency (how many days between full backups); (6) the file system check order (only the root file system should be 1). With that in mind, the entry for our RAID 5 array is:

/dev/md0 /root/test/raid5 ext3 defaults 0 0

Note: the device to mount can also be specified by UUID.
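
To double-check an entry before saving it, it can help to print the six fields with their names. The sketch below does that for the line above; the function name `describe_fstab_line` is made up for illustration, and it assumes the fields are separated by whitespace and contain no spaces themselves.

```shell
# Split one /etc/fstab entry into its six named fields.
describe_fstab_line() (
    # Unquoted on purpose: word splitting assigns the fields in order.
    set -- $1
    echo "device=$1"
    echo "mountpoint=$2"
    echo "fstype=$3"
    echo "options=$4"
    echo "dump=$5"
    echo "fsck_order=$6"
)

describe_fstab_line "/dev/md0 /root/test/raid5 ext3 defaults 0 0"
```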

Next we simulate the failure of one disk in the RAID 5 array and check that the hot spare takes over.

    [root@test ~]# mdadm /dev/md0 --fail /dev/sdb6
    mdadm: set /dev/sdb6 faulty in /dev/md0
    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 19:27:13 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 19:55:51 2018
              State : clean, degraded, recovering
     Active Devices : 2
    Working Devices : 3
     Failed Devices : 1
      Spare Devices : 1
             Layout : left-symmetric
         Chunk Size : 512K
     Rebuild Status : 5% complete
               Name : test:0 (local to host test)
               UUID : f1c7f735:ac34fde5:8ee6bb5b:55189221
             Events : 20

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           3       8       24        1      spare rebuilding   /dev/sdb8
           4       8       23        2      active sync   /dev/sdb7
           1       8       22        -      faulty   /dev/sdb6
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb7[4] sdb8[3] sdb6[1](F) sdb5[0]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [U_U]
          [====>................] recovery = 21.4% (450048/2102272) finish=0.4min speed=56256K/sec

    unused devices: <none>

Note: once a member is marked faulty, the hot spare automatically takes its place, and the array rebuilds the data onto it so that the array remains usable.
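
While the rebuild runs, the progress line in /proc/mdstat carries the percentage. The following sketch extracts it from such a line with sed; the function name `md_recovery_percent` is made up, and it takes the line as an argument so it can be tried without a real array.

```shell
# Pull the rebuild percentage out of a /proc/mdstat progress line.
# Usage: md_recovery_percent "LINE"; prints nothing if no rebuild is running.
md_recovery_percent() (
    printf '%s\n' "$1" | sed -n 's/.*recovery = *\([0-9.]*\)%.*/\1/p'
)

md_recovery_percent "[====>................] recovery = 21.4% (450048/2102272) finish=0.4min speed=56256K/sec"
# prints 21.4
```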

Remove the failed disk

    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 19:27:13 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 19:56:20 2018
              State : clean
     Active Devices : 3
    Working Devices : 3
     Failed Devices : 1
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 512K
               Name : test:0 (local to host test)
               UUID : f1c7f735:ac34fde5:8ee6bb5b:55189221
             Events : 37

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           3       8       24        1      active sync   /dev/sdb8
           4       8       23        2      active sync   /dev/sdb7
           1       8       22        -      faulty   /dev/sdb6
    [root@test ~]# mdadm /dev/md0 --remove /dev/sdb6
    mdadm: hot removed /dev/sdb6 from /dev/md0
    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 19:27:13 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 3
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 19:59:54 2018
              State : clean
     Active Devices : 3
    Working Devices : 3
     Failed Devices : 0
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 512K
               Name : test:0 (local to host test)
               UUID : f1c7f735:ac34fde5:8ee6bb5b:55189221
             Events : 38

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           3       8       24        1      active sync   /dev/sdb8
           4       8       23        2      active sync   /dev/sdb7

Next we add a disk back into the array (we skip repartitioning here and simply reuse /dev/sdb6). Because the array already has three active members, the added device becomes a spare.

    [root@test ~]# mdadm /dev/md0 --add /dev/sdb6
    mdadm: added /dev/sdb6
    [root@test ~]# mdadm --detail /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 19:27:13 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 20:03:12 2018
              State : clean
     Active Devices : 3
    Working Devices : 4
     Failed Devices : 0
      Spare Devices : 1
             Layout : left-symmetric
         Chunk Size : 512K
               Name : test:0 (local to host test)
               UUID : f1c7f735:ac34fde5:8ee6bb5b:55189221
             Events : 39

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           3       8       24        1      active sync   /dev/sdb8
           4       8       23        2      active sync   /dev/sdb7
           5       8       22        -      spare   /dev/sdb6

Stop the array

    [root@test ~]# mdadm -S /dev/md0
    mdadm: Cannot get exclusive access to /dev/md0:Perhaps a running process, mounted filesystem or active volume group?
    [root@test ~]# umount /dev/md0
    [root@test ~]# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        34G  1.6G   30G   6% /
    tmpfs           1.9G     0  1.9G   0% /dev/shm
    /dev/sda1       380M   41M  320M  12% /boot
    [root@test ~]# mdadm -S /dev/md0
    mdadm: stopped /dev/md0
    [root@test ~]# mdadm -D /dev/md0
    mdadm: cannot open /dev/md0: No such file or directory
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid6] [raid5] [raid4]
    unused devices: <none>
    [root@test ~]#

Note: to stop an array, unmount its file system first and then stop it. As shown above, once md0 is stopped, /dev/md0 no longer exists: stopping the array dissolves the association between its member devices, so the device file disappears too. To use the array again, we have to re-create it or assemble it. Re-creating writes fresh RAID metadata (the UUID changes in the output that follows), so assembling is the safer choice when the existing data matters. After creating an array, it is worth saving its information to the configuration file, so that a stopped array can be reassembled quickly.
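
The "Cannot get exclusive access" error above can be avoided by checking for a mount before stopping. This is a hypothetical helper (the name `md_safe_to_stop` is made up) that looks the device up in a mounts table, /proc/mounts by default, and only reports what it finds; it does not run mdadm and does not check for other users of the device such as LVM.

```shell
# Refuse to stop an array that still appears in a mounts table.
# Usage: md_safe_to_stop DEVICE [MOUNTS_FILE]   (MOUNTS_FILE defaults to /proc/mounts)
md_safe_to_stop() (
    dev=$1
    mounts=${2:-/proc/mounts}
    # awk exits 0 when the first column of some line equals the device.
    if awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"; then
        echo "$dev is still mounted; unmount it first" >&2
        exit 1
    fi
    echo "$dev is not mounted; 'mdadm --stop $dev' should be able to proceed"
)
```

Calling `md_safe_to_stop /dev/md0` before `mdadm -S /dev/md0` gives a clearer message than the generic mdadm error when the file system is still mounted.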

    [root@test ~]# fdisk -l

    Disk /dev/sda: 42.9 GB, 42949672960 bytes
    255 heads, 63 sectors/track, 5221 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00058c8c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1          52      409600   83  Linux
    Partition 1 does not end on cylinder boundary.
    /dev/sda2              52        4457    35388416   83  Linux
    /dev/sda3            4457        5222     6144000   82  Linux swap / Solaris

    Disk /dev/sdb: 21.5 GB, 21474836480 bytes
    255 heads, 63 sectors/track, 2610 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xaebe4f2c

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        1306    10490413+   5  Extended
    /dev/sdb5               1         262     2104452   fd  Linux raid autodetect
    /dev/sdb6             263         524     2104483+  fd  Linux raid autodetect
    /dev/sdb7             525         786     2104483+  fd  Linux raid autodetect
    /dev/sdb8             787        1048     2104483+  fd  Linux raid autodetect

    [root@test ~]# mdadm -C /dev/md0 -l 5 -n 3 -x 1 /dev/sdb{5,6,7,8}
    mdadm: /dev/sdb5 appears to be part of a raid array:
        level=raid5 devices=3 ctime=Thu Nov 1 19:27:13 2018
    mdadm: /dev/sdb6 appears to be part of a raid array:
        level=raid5 devices=3 ctime=Thu Nov 1 19:27:13 2018
    mdadm: /dev/sdb7 appears to be part of a raid array:
        level=raid5 devices=3 ctime=Thu Nov 1 19:27:13 2018
    mdadm: /dev/sdb8 appears to be part of a raid array:
        level=raid5 devices=3 ctime=Thu Nov 1 19:27:13 2018
    Continue creating array? y
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.
    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 20:20:44 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 20:21:03 2018
              State : clean, degraded, recovering
     Active Devices : 2
    Working Devices : 4
     Failed Devices : 0
      Spare Devices : 2
             Layout : left-symmetric
         Chunk Size : 512K
     Rebuild Status : 68% complete
               Name : test:0 (local to host test)
               UUID : 6074e103:c5bae142:7c9fa17c:8cc5495d
             Events : 11

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           1       8       22        1      active sync   /dev/sdb6
           4       8       23        2      spare rebuilding   /dev/sdb7
           3       8       24        -      spare   /dev/sdb8
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb7[4] sdb8[3](S) sdb6[1] sdb5[0]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

    unused devices: <none>
    [root@test ~]# mdadm -D --scan > /etc/mdadm.conf
    [root@test ~]# cat /etc/mdadm.conf
    ARRAY /dev/md0 metadata=1.2 spares=1 name=test:0 UUID=6074e103:c5bae142:7c9fa17c:8cc5495d

Note: the steps above re-created md0. mdadm also has an assemble mode, -A, that brings an existing array back without re-creating it.

    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb7[4] sdb8[3](S) sdb6[1] sdb5[0]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

    unused devices: <none>
    [root@test ~]# mdadm -S /dev/md0
    mdadm: stopped /dev/md0
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    unused devices: <none>
    [root@test ~]# mdadm -A /dev/md0 /dev/sdb{5,6,7,8}
    mdadm: /dev/md0 has been started with 3 drives and 1 spare.
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb5[0] sdb8[3](S) sdb7[4] sdb6[1]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

    unused devices: <none>
    [root@test ~]#

Note: when the array information has been saved to the configuration file, we can also assemble the array directly, without listing its member disks.
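
The saved ARRAY line identifies the array by UUID, which is what lets mdadm find the members on its own. As an illustration, the sketch below turns such a line into an explicit assemble command; the function name `assemble_cmd_from_conf` is made up, and the command is only printed, never executed.

```shell
# Turn one ARRAY line from mdadm.conf into an explicit assemble command.
# The command is only printed, never executed.
assemble_cmd_from_conf() (
    set -- $1                      # split the line into words
    [ "$1" = "ARRAY" ] || exit 1   # not an ARRAY line
    array=$2
    uuid=
    for word in "$@"; do
        case $word in
            UUID=*) uuid=${word#UUID=} ;;
        esac
    done
    [ -n "$uuid" ] || exit 1       # no UUID found on the line
    echo "mdadm --assemble $array --uuid=$uuid"
)

assemble_cmd_from_conf "ARRAY /dev/md0 metadata=1.2 spares=1 name=test:0 UUID=6074e103:c5bae142:7c9fa17c:8cc5495d"
```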

    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 20:20:44 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 20:21:13 2018
              State : clean
     Active Devices : 3
    Working Devices : 4
     Failed Devices : 0
      Spare Devices : 1
             Layout : left-symmetric
         Chunk Size : 512K
               Name : test:0 (local to host test)
               UUID : 6074e103:c5bae142:7c9fa17c:8cc5495d
             Events : 18

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           1       8       22        1      active sync   /dev/sdb6
           4       8       23        2      active sync   /dev/sdb7
           3       8       24        -      spare   /dev/sdb8
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb5[0] sdb8[3](S) sdb7[4] sdb6[1]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

    unused devices: <none>
    [root@test ~]# mdadm -S /dev/md0
    mdadm: stopped /dev/md0
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    unused devices: <none>
    [root@test ~]# mdadm -D /dev/md0
    mdadm: cannot open /dev/md0: No such file or directory
    [root@test ~]# mdadm -A /dev/md0
    mdadm: /dev/md0 has been started with 3 drives and 1 spare.
    [root@test ~]# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4]
    md0 : active raid5 sdb5[0] sdb8[3](S) sdb7[4] sdb6[1]
          4204544 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

    unused devices: <none>
    [root@test ~]# mdadm -D /dev/md0
    /dev/md0:
            Version : 1.2
      Creation Time : Thu Nov 1 20:20:44 2018
         Raid Level : raid5
         Array Size : 4204544 (4.01 GiB 4.31 GB)
      Used Dev Size : 2102272 (2.00 GiB 2.15 GB)
       Raid Devices : 3
      Total Devices : 4
        Persistence : Superblock is persistent
        Update Time : Thu Nov 1 20:21:13 2018
              State : clean
     Active Devices : 3
    Working Devices : 4
     Failed Devices : 0
      Spare Devices : 1
             Layout : left-symmetric
         Chunk Size : 512K
               Name : test:0 (local to host test)
               UUID : 6074e103:c5bae142:7c9fa17c:8cc5495d
             Events : 18

        Number   Major   Minor   RaidDevice State
           0       8       21        0      active sync   /dev/sdb5
           1       8       22        1      active sync   /dev/sdb6
           4       8       23        2      active sync   /dev/sdb7
           3       8       24        -      spare   /dev/sdb8
    [root@test ~]#

Note: with the array configuration saved, the array can be reassembled directly without naming its disks. That covers the most basic steps of creating and managing RAID with mdadm. Arrays of other levels can be created in the same way.

These are my own notes and opinions. If you spot any mistakes, please point them out. Thank you.