001. Hadoop and HBase Deployment

阿新 • Published: 2018-11-01

1 Environment Preparation

1.1 Software Environment

- OS: CentOS 7

  CentOS 6.x can also follow this guide; just substitute the equivalent commands.

- Hadoop package: hadoop-2.7.0.tar.gz

  Official download: http://www.apache.org/dyn/closer.cgi/hadoop/common/

- HBase package: hbase-1.0.3-bin.tar.gz

  Official download: http://www.apache.org/dyn/closer.cgi/hbase

- Java environment: jdk-8u111-linux-x64.tar.gz

  Official download: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

- Other: zookeeper-3.4.9.tar.gz

  Official download: http://www.apache.org/dyn/closer.cgi/zookeeper/

1.2 Network Environment

2 Basic Environment Configuration

2.1 Configure Hostnames

```
[root@master ~]# hostnamectl set-hostname master
[root@slave01 ~]# hostnamectl set-hostname slave01
[root@slave02 ~]# hostnamectl set-hostname slave02
```

Tip: set the hostname on each of the three hosts.

```
[root@master ~]# vi /etc/hosts
……
172.24.8.12 master
172.24.8.13 slave01
172.24.8.14 slave02
[root@master ~]# scp /etc/hosts root@slave01:/etc/hosts
[root@master ~]# scp /etc/hosts root@slave02:/etc/hosts
```

Tip: copy the hosts file directly from master to slave01 and slave02.
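You may prefer not to edit /etc/hosts by hand on each host. In that case a script can append the entries. The following is a minimal sketch that assumes bash and awk are available. The helper name add_host_entries is hypothetical. It appends only the IP/hostname pairs that are not already present, so re-running it creates no duplicates.

```shell
#!/usr/bin/env bash
# Minimal sketch: append "IP hostname" entries to a hosts-format file,
# skipping any pair that is already present, so the step can be re-run safely.
# add_host_entries is a hypothetical helper, not part of CentOS.
add_host_entries() {
  local hosts_file="$1"
  shift
  local entry ip name
  for entry in "$@"; do
    ip="${entry%% *}"    # text before the first space
    name="${entry#* }"   # text after the first space
    # awk exits 0 only if some line starts with this IP and lists this hostname.
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

For example, run `add_host_entries /etc/hosts "172.24.8.12 master" "172.24.8.13 slave01" "172.24.8.14 slave02"` on each host.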

2.2 Firewall and SELinux

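```
[root@master ~]# systemctl stop firewalld.service      # stop the firewall
[root@master ~]# systemctl disable firewalld.service   # keep the firewall from starting at boot
[root@master ~]# vi /etc/selinux/config
……
SELINUX=disabled
[root@master ~]# setenforce 0
```

Tip: configure this on all three hosts. setenforce 0 switches SELinux to permissive mode immediately, while SELINUX=disabled takes effect after the next reboot. Alternatively, leave the firewall and SELinux enabled, open the required ports, and set the proper SELinux contexts instead.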

2.3 Time Synchronization

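```
[root@master ~]# yum -y install ntpdate
[root@master ~]# ntpdate cn.ntp.org.cn
```

Tip: configure this on all three hosts. HBase is sensitive to clock differences: the HMaster rejects a RegionServer whose clock is off by more than hbase.master.maxclockskew.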

3 JDK Installation and Configuration

Note: upload all the required packages to /usr/.

3.1 Install the JDK

```
[root@master ~]# cd /usr/
[root@master usr]# tar -zxvf jdk-8u111-linux-x64.tar.gz
```

Tip: do this on all three hosts.

3.2 Add JDK Environment Variables

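```
[root@master ~]# vi .bash_profile
……
export JAVA_HOME=/usr/jdk1.8.0_111
PATH=$PATH:$HOME/bin:$JAVA_HOME/bin
export PATH
[root@master ~]# scp /root/.bash_profile root@slave01:/root/.bash_profile
[root@master ~]# scp /root/.bash_profile root@slave02:/root/.bash_profile
[root@master ~]# source /root/.bash_profile   # reload the environment variables
```

Tips:

1. Copy the profile directly to slave01 and slave02, then run source /root/.bash_profile there as well (or log in again).
2. The variables can also be added to /etc/profile instead.

Export JAVA_HOME above the PATH line. Otherwise $JAVA_HOME is still empty when PATH is built, and only /bin gets appended.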

```
[root@master ~]# java -version    # test
[root@slave01 ~]# java -version   # test
[root@slave02 ~]# java -version   # test
```
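Sometimes java -version fails with "command not found" in a freshly logged-in shell. A likely cause is a PATH line that refers to $JAVA_HOME before JAVA_HOME has been exported, because $JAVA_HOME/bin then expands to just /bin. The sketch below checks both conditions in the current shell. The helper name check_java_path is hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: confirm that JAVA_HOME is set and that $JAVA_HOME/bin is on PATH.
# check_java_path is a hypothetical helper; it uses only shell builtins.
check_java_path() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  # Surround PATH with colons so the first and last entries match too.
  case ":$PATH:" in
    *":$JAVA_HOME/bin:"*) return 0 ;;
    *) echo "$JAVA_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
}
```

Run check_java_path on each host after sourcing the profile. A non-zero exit status, together with the message, shows which of the two conditions is not met.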

4 Passwordless SSH Login

4.1 Passwordless Login Between master and slave01

```
[root@master ~]# ssh-keygen -t rsa
[root@slave01 ~]# ssh-keygen -t rsa
[root@slave02 ~]# ssh-keygen -t rsa
```

Tip: generate a key pair on all three hosts.

```
[root@slave01 ~]# scp /root/.ssh/id_rsa.pub root@master:/root/.ssh/slave01.id_rsa.pub
# Copy slave01's public key to master as slave01.id_rsa.pub.
[root@master ~]# cat /root/.ssh/id_rsa.pub >>/root/.ssh/authorized_keys
[root@master ~]# cat /root/.ssh/slave01.id_rsa.pub >>/root/.ssh/authorized_keys
[root@master ~]# scp /root/.ssh/authorized_keys slave01:/root/.ssh/
# authorized_keys on master now holds both master's and slave01's public keys; send it to slave01.
```

Tip: this enables two-way passwordless login master <----> slave01.

4.2 Passwordless Login Between master and slave02

```
[root@slave02 ~]# scp /root/.ssh/id_rsa.pub root@master:/root/.ssh/slave02.id_rsa.pub
# Copy slave02's public key to master as slave02.id_rsa.pub.
[root@master ~]# cat /root/.ssh/id_rsa.pub >/root/.ssh/authorized_keys
# Note: overwrite (>) here so that slave01's key is not sent to slave02; this keeps the slaves from logging in to each other.
[root@master ~]# cat /root/.ssh/slave02.id_rsa.pub >>/root/.ssh/authorized_keys
[root@master ~]# scp /root/.ssh/authorized_keys slave02:/root/.ssh/
# authorized_keys on master now holds master's and slave02's public keys; send it to slave02.
# This enables two-way passwordless login master <----> slave02.
[root@master .ssh]# cat slave01.id_rsa.pub >>authorized_keys
# Append slave01's key again so that master accepts logins from both slave01 and slave02.
[root@master ~]# cat /root/.ssh/authorized_keys    # contains the keys of master, slave01 and slave02
[root@slave01 ~]# cat /root/.ssh/authorized_keys   # contains the keys of master and slave01
[root@slave02 ~]# cat /root/.ssh/authorized_keys   # contains the keys of master and slave02
```
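The key layout above can also be assembled in one place before the files are distributed: master trusts all three keys, and each slave trusts master and itself. This sketch assumes the three public keys have already been collected into one directory as master.id_rsa.pub, slave01.id_rsa.pub and slave02.id_rsa.pub. That layout, the output file names and the helper build_authorized_keys are all hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch of the section 4 key layout, built offline in one directory:
#   master.authorized_keys  = master + slave01 + slave02 public keys
#   slaveNN.authorized_keys = master + that slave's own public key
# The <host>.id_rsa.pub input names and the output names are hypothetical.
build_authorized_keys() {
  local keydir="$1" host
  cat "$keydir/master.id_rsa.pub" "$keydir"/slave*.id_rsa.pub > "$keydir/master.authorized_keys"
  for host in slave01 slave02; do
    cat "$keydir/master.id_rsa.pub" "$keydir/$host.id_rsa.pub" > "$keydir/$host.authorized_keys"
  done
  # sshd refuses authorized_keys files that other users can write to.
  chmod 600 "$keydir"/*.authorized_keys
}
```

Each resulting file would then be copied to the matching host, for example `scp keys/slave01.authorized_keys slave01:/root/.ssh/authorized_keys`.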

5 Install and Configure Hadoop

5.1 Extract Hadoop

```
[root@master ~]# cd /usr/
[root@master usr]# tar -zxvf hadoop-2.7.0.tar.gz
```

5.2 Create the Directories

```
[root@master usr]# mkdir /usr/hadoop-2.7.0/tmp        # temporary cluster data
[root@master usr]# mkdir /usr/hadoop-2.7.0/logs       # cluster logs
[root@master usr]# mkdir /usr/hadoop-2.7.0/hdf        # HDFS storage
[root@master usr]# mkdir /usr/hadoop-2.7.0/hdf/data   # DataNode data
[root@master usr]# mkdir /usr/hadoop-2.7.0/hdf/name   # NameNode metadata
```

5.3 Modify the Configuration on master

5.3.1 Modify slaves

```
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/slaves
slave01
slave02
```

Remove localhost from the file and list the slave hostnames instead.

5.3.2 Modify core-site.xml

```
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/core-site.xml
……
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/hadoop-2.7.0/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>master</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>
```

Note: fs.default.name is the deprecated Hadoop 1.x name of fs.defaultFS. Hadoop 2.7 still accepts it but logs a deprecation warning.

5.3.3 Modify hdfs-site.xml

```
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/hdfs-site.xml
……
<configuration>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/hadoop-2.7.0/hdf/data</value>
    <final>true</final>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/hadoop-2.7.0/hdf/name</value>
    <final>true</final>
  </property>
</configuration>
```
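Instead of editing each *-site.xml in vi, a small function can write the same name/value pairs. This is a minimal sketch that assumes bash. write_site_xml is a hypothetical helper. It does not XML-escape values, and it omits the `<final>true</final>` flags used above.

```shell
#!/usr/bin/env bash
# Minimal sketch: write a Hadoop-style *-site.xml from name=value arguments.
# write_site_xml is hypothetical; values are not XML-escaped, so avoid <, > and &.
write_site_xml() {
  local out="$1"
  shift
  local pair
  {
    printf '<?xml version="1.0"?>\n'
    printf '<configuration>\n'
    for pair in "$@"; do
      # Split on the first "=" only, so values may themselves contain "=".
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    printf '</configuration>\n'
  } > "$out"
}
```

For example, `write_site_xml /usr/hadoop-2.7.0/etc/hadoop/hdfs-site.xml dfs.datanode.data.dir=/usr/hadoop-2.7.0/hdf/data dfs.namenode.name.dir=/usr/hadoop-2.7.0/hdf/name` overwrites the file with those two properties.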

5.3.4 Modify mapred-site.xml

```
[root@master ~]# cp /usr/hadoop-2.7.0/etc/hadoop/mapred-site.xml.template /usr/hadoop-2.7.0/etc/hadoop/mapred-site.xml
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/mapred-site.xml
……
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19888</value>
  </property>
</configuration>
```

5.3.5 Modify yarn-site.xml

```
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/yarn-site.xml
<configuration>
……
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
</configuration>
```

Note: in Hadoop 2.x the shuffle service is named mapreduce_shuffle (with an underscore) in yarn.nodemanager.aux-services, and its class property must use that same name. The handler class is org.apache.hadoop.mapred.ShuffleHandler. Without the aux-services entry, MapReduce jobs fail because the NodeManagers do not provide the shuffle service.
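The service is called mapreduce_shuffle rather than mapreduce.shuffle because the Hadoop 2.x NodeManager only accepts certain auxiliary-service names. A valid name contains only letters, digits and underscores and does not start with a digit. The check below mirrors that rule as we understand it, so treat the exact pattern as an assumption. It also prints the property name that carries the handler class. aux_service_property is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Minimal sketch: validate an aux-service name and print its class property name.
# The pattern is our reading of the NodeManager naming rule (an assumption).
# aux_service_property is a hypothetical helper.
aux_service_property() {
  local svc="$1"
  if [[ ! "$svc" =~ ^[A-Za-z_][A-Za-z0-9_]*$ ]]; then
    echo "invalid aux-service name: $svc" >&2
    return 1
  fi
  printf 'yarn.nodemanager.aux-services.%s.class\n' "$svc"
}
```

With a dotted name such as mapreduce.shuffle the function prints an error and returns 1.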

5.4 Install Hadoop on the Slave Nodes

```
[root@master ~]# scp -r /usr/hadoop-2.7.0 root@slave01:/usr/
[root@master ~]# scp -r /usr/hadoop-2.7.0 root@slave02:/usr/
```

Tip: copy master's Hadoop directory directly to slave01 and slave02.

6 System Variables and Environment

6.1 Hadoop Environment Variables

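```
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/hadoop-env.sh
export JAVA_HOME=/usr/jdk1.8.0_111
[root@master ~]# vi /usr/hadoop-2.7.0/etc/hadoop/yarn-env.sh
export JAVA_HOME=/usr/jdk1.8.0_111
```

Tip: the Hadoop directory was already copied to the slaves in 5.4, so make the same change on slave01 and slave02 (or copy these two files over). The daemons that start-all.sh launches over SSH do not load /root/.bash_profile, so they rely on the JAVA_HOME set in these files.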

6.2 System Environment Variables

```
[root@master ~]# vi /root/.bash_profile
……
export JAVA_HOME=/usr/jdk1.8.0_111
export HADOOP_HOME=/usr/hadoop-2.7.0
export HADOOP_LOG_DIR=/usr/hadoop-2.7.0/logs
export YARN_LOG_DIR=$HADOOP_LOG_DIR
PATH=$PATH:$HOME/bin:$JAVA_HOME/bin
export PATH
[root@master ~]# scp /root/.bash_profile root@slave01:/root/
[root@master ~]# scp /root/.bash_profile root@slave02:/root/
[root@master ~]# source /root/.bash_profile
[root@slave01 ~]# source /root/.bash_profile
[root@slave02 ~]# source /root/.bash_profile
```

Tip: all three hosts need this. Copy master's profile directly to slave01 and slave02. Keep the export lines above the PATH line so that $JAVA_HOME is already set when PATH is built.

7 Format the NameNode and Start the Cluster

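```
[root@master ~]# cd /usr/hadoop-2.7.0/bin/
[root@master bin]# ./hadoop namenode -format   # or: ./hdfs namenode -format
[root@master ~]# cd /usr/hadoop-2.7.0/sbin
[root@master sbin]# ./start-all.sh             # start the cluster
```

Tip: format the NameNode on master only; the other hosts do not need formatting. Likewise, start the cluster only on master. start-all.sh logs in to the slaves over SSH and starts their daemons. In Hadoop 2.x it is deprecated and simply calls start-dfs.sh and start-yarn.sh. Check the processes on the other nodes afterwards. A slave can also start its own DataNode and NodeManager, as follows: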

```
[root@slave01 ~]# cd /usr/hadoop-2.7.0/sbin
[root@slave01 sbin]# ./hadoop-daemon.sh start datanode
[root@slave01 sbin]# ./yarn-daemon.sh start nodemanager
```

8 Verify Hadoop

8.1 Verification

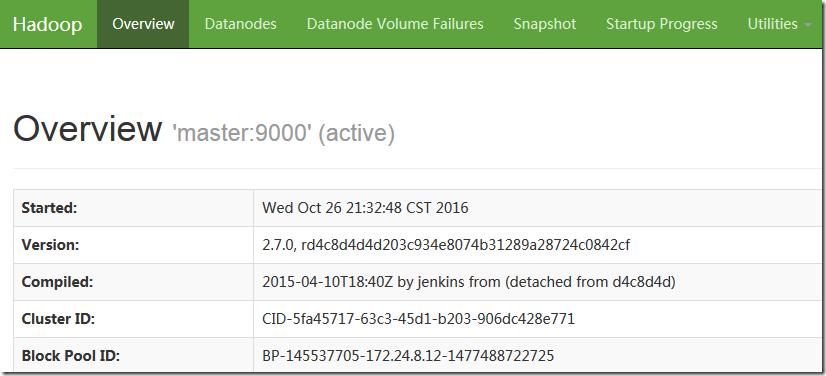

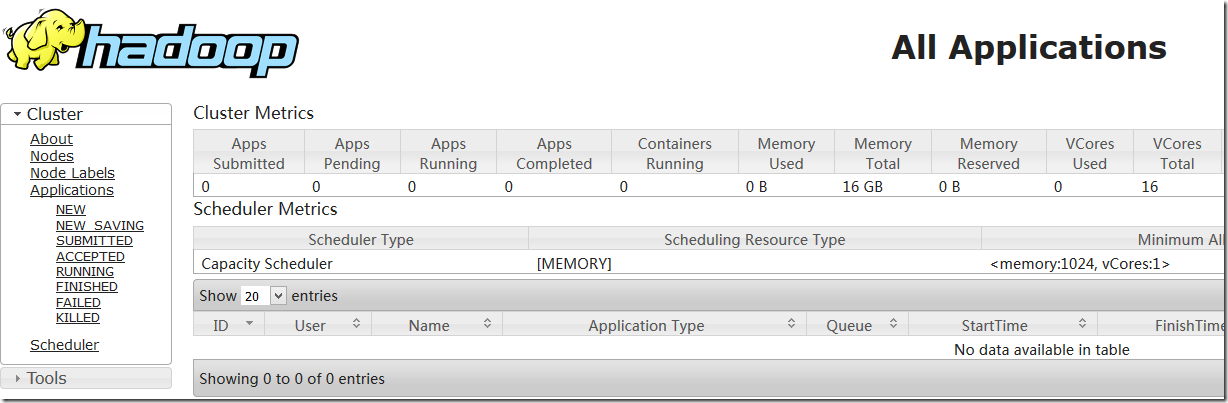

YARN ResourceManager web UI: http://172.24.8.12:8088/cluster

8.2 Check the Processes with jps

```
[root@master ~]# /usr/jdk1.8.0_111/bin/jps
21346 NameNode
21703 ResourceManager
21544 SecondaryNameNode
21977 Jps
[root@slave01 ~]# jps
16038 NodeManager
15928 DataNode
16200 Jps
15533 SecondaryNameNode
[root@slave02 ~]# jps
15507 SecondaryNameNode
16163 Jps
16013 NodeManager
15903 DataNode
```
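It is easy to miss something when comparing jps output against the expected daemons by eye on several hosts. This is a minimal sketch that assumes bash. missing_daemons is a hypothetical helper: it reads jps output on standard input and prints every expected daemon that is not running. The daemons to expect follow the roles in this article: NameNode, SecondaryNameNode and ResourceManager on master, and DataNode and NodeManager on the slaves.

```shell
#!/usr/bin/env bash
# Minimal sketch: read `jps` output on stdin and print each expected daemon
# that does not appear; return 1 if any is missing.
# missing_daemons is a hypothetical helper.
missing_daemons() {
  local running d rc=0
  running=$(awk '{print $2}')   # keep only the class-name column of jps
  for d in "$@"; do
    if ! grep -qxF "$d" <<< "$running"; then
      echo "$d"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, on a slave: `jps | missing_daemons DataNode NodeManager`.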

9 Install ZooKeeper

9.1 Install and Configure ZooKeeper

```
[root@master ~]# cd /usr/
[root@master usr]# tar -zxvf zookeeper-3.4.9.tar.gz          # extract ZooKeeper
[root@master usr]# mkdir /usr/zookeeper-3.4.9/data            # ZooKeeper data directory
[root@master usr]# mkdir /usr/zookeeper-3.4.9/logs            # ZooKeeper log directory
```

9.2 Modify the ZooKeeper Configuration

```
[root@master ~]# cp /usr/zookeeper-3.4.9/conf/zoo_sample.cfg /usr/zookeeper-3.4.9/conf/zoo.cfg
# create zoo.cfg from the sample template
[root@master ~]# vi /usr/zookeeper-3.4.9/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/usr/zookeeper-3.4.9/data
dataLogDir=/usr/zookeeper-3.4.9/logs
clientPort=2181
server.1=master:2888:3888
server.2=slave01:2888:3888
server.3=slave02:2888:3888
```
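Each server.N line gives a server's ID and two ports. Followers use the first port (2888) to connect to the leader, and the second (3888) is used for leader election. For a longer host list these lines can be generated. The sketch below uses the hypothetical helper zk_server_lines, which numbers the hosts from 1 in the order given.

```shell
#!/usr/bin/env bash
# Minimal sketch: print zoo.cfg server.N lines, numbering hosts from 1 in order.
# zk_server_lines is hypothetical; the ports match the zoo.cfg above.
zk_server_lines() {
  local i=1 host
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$i" "$host"
    i=$((i + 1))
  done
}
```

For example, `zk_server_lines master slave01 slave02 >> /usr/zookeeper-3.4.9/conf/zoo.cfg` appends the three server lines shown above.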

9.3 Create myid

```
[root@master ~]# vi /usr/zookeeper-3.4.9/data/myid
1
```

Note: the myid file contains the n from the host's server.n entry in zoo.cfg. That is, master gets 1, slave01 gets 2 and slave02 gets 3.
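myid must equal the N of the host's own server.N line, so it can be derived from zoo.cfg instead of being typed by hand on each host. This is a minimal sketch. It assumes short hostnames without dots, as in this article, and uses the hypothetical helper name zk_myid.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the N of the server.N entry in zoo.cfg that names this host.
# Splits fields on ".", "=" and ":", so it assumes hostnames contain no dots.
# zk_myid is a hypothetical helper; it returns 1 if the host is not listed.
zk_myid() {
  local cfg="$1" host="$2"
  awk -F'[.=:]' -v h="$host" '
    /^server\.[0-9]+=/ && $3 == h { print $2; found = 1 }
    END { exit !found }' "$cfg"
}
```

For example: `id=$(zk_myid /usr/zookeeper-3.4.9/conf/zoo.cfg "$(hostname)") && echo "$id" > /usr/zookeeper-3.4.9/data/myid`. This writes the file only when a matching entry was found.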

9.4 Modify the Environment Variables

```
[root@master ~]# vi /root/.bash_profile      # edit the environment variables
export JAVA_HOME=/usr/jdk1.8.0_111
export HADOOP_HOME=/usr/hadoop-2.7.0
export HADOOP_LOG_DIR=/usr/hadoop-2.7.0/logs
export YARN_LOG_DIR=$HADOOP_LOG_DIR
export ZOOKEEPER_HOME=/usr/zookeeper-3.4.9/   # add the ZooKeeper path
PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin   # add ZooKeeper's bin directory
export PATH
[root@master ~]# source /root/.bash_profile
```

9.5 Deploy ZooKeeper on the Slave Nodes

```
[root@master ~]# scp -r /usr/zookeeper-3.4.9/ root@slave01:/usr/
[root@master ~]# scp -r /usr/zookeeper-3.4.9/ root@slave02:/usr/
[root@master ~]# scp /root/.bash_profile root@slave01:/root/
[root@master ~]# scp /root/.bash_profile root@slave02:/root/
```

Tip: all three hosts need this configuration. Copy the ZooKeeper directory and the profile to slave01 and slave02 in turn, then run source /root/.bash_profile on each slave (or log in again).

9.6 Modify myid on the Slave Hosts

```
[root@slave01 ~]# vi /usr/zookeeper-3.4.9/data/myid
2
[root@slave02 ~]# vi /usr/zookeeper-3.4.9/data/myid
3
```

9.7 Start ZooKeeper

```
[root@master ~]# cd /usr/zookeeper-3.4.9/bin/
[root@master bin]# ./zkServer.sh start
[root@slave01 ~]# cd /usr/zookeeper-3.4.9/bin/
[root@slave01 bin]# ./zkServer.sh start
[root@slave02 ~]# cd /usr/zookeeper-3.4.9/bin/
[root@slave02 bin]# ./zkServer.sh start
```

Tip: start ZooKeeper on all three hosts with the same command. Once all three are up, ./zkServer.sh status should report Mode: leader on one host and Mode: follower on the other two.
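A ZooKeeper ensemble keeps serving requests only while a strict majority of its servers is up, which is why three servers are used here. The arithmetic is shown below as a small sketch. zk_quorum is a hypothetical name.

```shell
#!/usr/bin/env bash
# Minimal sketch: majority size and tolerated failures for an ensemble of n servers.
# zk_quorum is a hypothetical helper; integer division rounds down.
zk_quorum() {
  local n="$1"
  local majority=$(( n / 2 + 1 ))
  echo "servers=$n majority=$majority tolerated_failures=$(( n - majority ))"
}
```

With 3 servers the majority is 2, so one failure is tolerated. A fourth server raises the majority to 3 but still tolerates only one failure, which is why odd ensemble sizes are usual.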

10 Install and Configure HBase

10.1 Install HBase

```
[root@master ~]# cd /usr/
[root@master usr]# tar -zxvf hbase-1.0.3-bin.tar.gz    # extract HBase
[root@master ~]# mkdir /usr/hbase-1.0.3/logs          # HBase log directory
[root@master ~]# mkdir /usr/hbase-1.0.3/temp          # HBase temporary files
[root@master ~]# mkdir /usr/hbase-1.0.3/temp/pid      # HBase pid files
```

10.2 Modify the Environment Variables

```
[root@master ~]# vi /root/.bash_profile
export JAVA_HOME=/usr/jdk1.8.0_111
export HADOOP_HOME=/usr/hadoop-2.7.0
export HADOOP_LOG_DIR=/usr/hadoop-2.7.0/logs
export YARN_LOG_DIR=$HADOOP_LOG_DIR
export ZOOKEEPER_HOME=/usr/zookeeper-3.4.9/
export HBASE_HOME=/usr/hbase-1.0.3
export HBASE_LOG_DIR=$HBASE_HOME/logs
PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin
export PATH
[root@master ~]# source /root/.bash_profile
[root@master ~]# scp /root/.bash_profile slave01:/root/
[root@master ~]# scp /root/.bash_profile slave02:/root/
```

Tip: all three hosts need this configuration; copy the profile directly to slave01 and slave02.

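10.3 Modify hbase-env.sh

```
[root@master ~]# vi /usr/hbase-1.0.3/conf/hbase-env.sh
export JAVA_HOME=/usr/jdk1.8.0_111
export HBASE_MANAGES_ZK=false
export HBASE_CLASSPATH=/usr/hadoop-2.7.0/etc/hadoop/
export HBASE_PID_DIR=/usr/hbase-1.0.3/temp/pid
```

Note: a distributed HBase depends on a ZooKeeper ensemble, and every node and client must be able to reach it. By default HBase manages its own ZooKeeper ensemble (HBASE_MANAGES_ZK=true), which starts and stops together with HBase. A standalone ZooKeeper ensemble was already deployed and started in section 9. Set HBASE_MANAGES_ZK=false here, so that HBase uses that ensemble instead of trying to start a second one on the same ports.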

10.4 Modify hbase-site.xml

```
[root@master ~]# vi /usr/hbase-1.0.3/conf/hbase-site.xml
……
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>slave01,slave02</value>
  </property>
  <property>
    <name>hbase.master.maxclockskew</name>
    <value>180000</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/usr/zookeeper-3.4.9/data</value>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>/usr/hbase-1.0.3/temp</value>
  </property>
  <property>
    <name>hbase.master</name>
    <value>master:60000</value>
  </property>
  <property>
    <name>hbase.master.info.port</name>
    <value>60010</value>
  </property>
  <property>
    <name>hbase.regionserver.info.port</name>
    <value>60030</value>
  </property>
  <property>
    <name>hbase.client.scanner.caching</name>
    <value>200</value>
  </property>
  <property>
    <name>hbase.balancer.period</name>
    <value>300000</value>
  </property>
  <property>
    <name>hbase.client.write.buffer</name>
    <value>10485760</value>
  </property>
  <property>
    <name>hbase.hregion.majorcompaction</name>
    <value>7200000</value>
  </property>
  <property>
    <name>hbase.hregion.max.filesize</name>
    <value>67108864</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.flush.size</name>
    <value>1048576</value>
  </property>
  <property>
    <name>hbase.server.thread.wakefrequency</name>
    <value>30000</value>
  </property>
</configuration>
# Copy the configured HBase directory from master to the slaves.
[root@master ~]# scp -r /usr/hbase-1.0.3 root@slave01:/usr/
[root@master ~]# scp -r /usr/hbase-1.0.3 root@slave02:/usr/
```

Note: hbase.master takes a host:port pair, not an hdfs:// URI.
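hbase.rootdir must point into the same HDFS that core-site.xml declares (hdfs://master:9000 here). Otherwise HBase tries to reach a filesystem address that this cluster does not serve. The sketch below is a prefix check that assumes bash. same_hdfs is a hypothetical helper that takes the two values as arguments rather than parsing the XML files.

```shell
#!/usr/bin/env bash
# Minimal sketch: succeed if hbase.rootdir ($2) lies under the HDFS URI ($1)
# from core-site.xml. same_hdfs is hypothetical; it does not read the XML files.
same_hdfs() {
  local fs="${1%/}" rootdir="$2"   # drop a trailing slash from the HDFS URI
  case "$rootdir" in
    "$fs"/*) return 0 ;;
    *) echo "hbase.rootdir $rootdir is not under $fs" >&2; return 1 ;;
  esac
}
```

For example, `same_hdfs hdfs://master:9000 hdfs://master:9000/hbase` succeeds. A rootdir on another host or port makes it return 1.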

10.5 Configure regionservers

```
[root@master ~]# vi /usr/hbase-1.0.3/conf/regionservers
slave01
slave02
```
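Every hostname in conf/regionservers must resolve on master, because the start scripts log in to those hosts over SSH by name. The same applies to Hadoop's slaves file. The minimal sketch below lists names that have no /etc/hosts entry, assuming bash and awk. unresolved_hosts is a hypothetical helper. It ignores DNS and checks only the hosts file.

```shell
#!/usr/bin/env bash
# Minimal sketch: print hostnames from a slaves/regionservers file that do not
# appear in a hosts-format file; return 1 if any are missing.
# unresolved_hosts is hypothetical and does not consult DNS.
unresolved_hosts() {
  local list="$1" hosts="$2" name rc=0
  while IFS= read -r name || [ -n "$name" ]; do
    name="${name%%#*}"             # drop comments
    name="${name//[[:space:]]/}"   # drop whitespace
    [ -z "$name" ] && continue
    # Look for the name among the hostname columns of non-comment lines.
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts"; then
      echo "$name"
      rc=1
    fi
  done < "$list"
  return "$rc"
}
```

For example, `unresolved_hosts /usr/hbase-1.0.3/conf/regionservers /etc/hosts` prints nothing and returns 0 when slave01 and slave02 are both listed in /etc/hosts.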

11 Start HBase

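```
[root@master ~]# cd /usr/hbase-1.0.3/bin/
[root@master bin]# ./start-hbase.sh
[root@slave01 ~]# cd /usr/hbase-1.0.3/bin/
[root@slave01 bin]# ./hbase-daemon.sh start regionserver
[root@slave02 ~]# cd /usr/hbase-1.0.3/bin/
[root@slave02 bin]# ./hbase-daemon.sh start regionserver
```

Tip: start HDFS and ZooKeeper before HBase. start-hbase.sh on master also starts the RegionServers listed in conf/regionservers over SSH. Running hbase-daemon.sh start regionserver on a slave is only needed for a RegionServer that is not already running.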

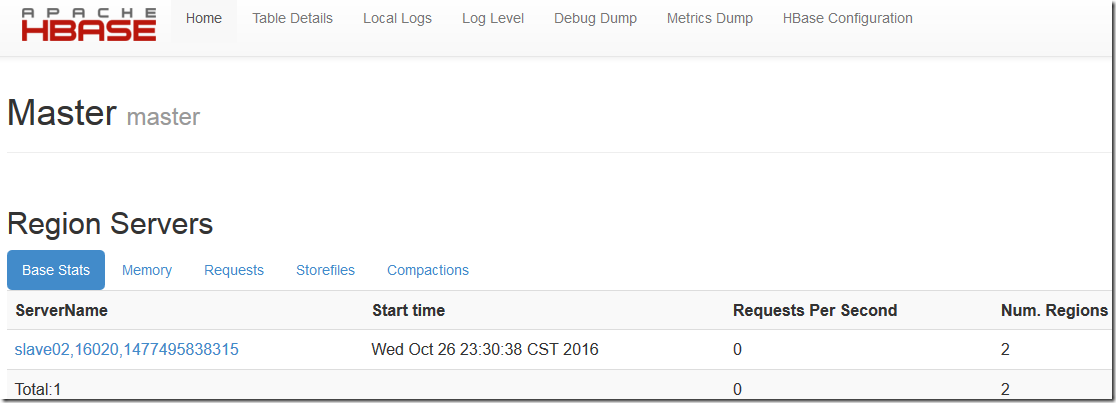

12 Testing

12.1 Browser Check

Open in a browser: http://172.24.8.12:60010/master-status

12.2 Check the Processes with jps

```
[root@master ~]# /usr/jdk1.8.0_111/bin/jps
21346 NameNode
23301 Jps
21703 ResourceManager
21544 SecondaryNameNode
22361 QuorumPeerMain
23087 HMaster
[root@slave01 ~]# jps
17377 HRegionServer
17457 Jps
16038 NodeManager
15928 DataNode
16488 QuorumPeerMain
15533 SecondaryNameNode
[root@slave02 ~]# jps
16400 QuorumPeerMain
15507 SecondaryNameNode
16811 HRegionServer
17164 Jps
16013 NodeManager
15903 DataNode
```