Building a proxy pool with Scrapy

阿新 • Published: 2018-11-05

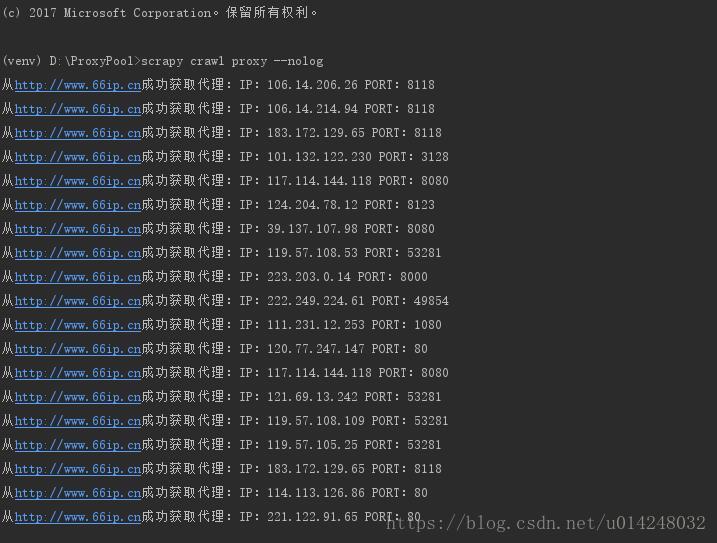

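At first I crawled xicidaili, but I requested pages too quickly and my IP got banned, so I could only continue crawling from home. Tomorrow I will store the crawled IPs in Redis for persistence, and automatically test and rate each proxy after crawling.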
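The testing and rating step planned above is not implemented in this post. The following is only a minimal sketch of how it might look, assuming a simple scoring scheme: the constants, the `ProxyScores` class, `check_proxy`, `rate_all`, and the test URL are all hypothetical names chosen for illustration. The in-memory dict stands in for the Redis store; in Redis the same proxy-to-score mapping could be kept in a sorted set.

```python
import http.client
import urllib.request

# Assumed scoring constants; tune them to taste.
INITIAL_SCORE = 10
MAX_SCORE = 100
MIN_SCORE = 0


def check_proxy(proxy, test_url="http://httpbin.org/ip", timeout=5):
    """Return True if test_url can be fetched through proxy ("ip:port")."""
    handler = urllib.request.ProxyHandler({"http": "http://" + proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # Connection errors, timeouts and malformed responses all count as failures.
        return False


class ProxyScores:
    """In-memory stand-in for the Redis store: maps "ip:port" to a score."""

    def __init__(self):
        self.scores = {}

    def add(self, proxy):
        # New proxies start at INITIAL_SCORE; known ones keep their score.
        self.scores.setdefault(proxy, INITIAL_SCORE)

    def update(self, proxy, ok):
        if ok:
            # A working proxy jumps straight to the top score.
            self.scores[proxy] = MAX_SCORE
            return
        # A failing proxy loses one point and is dropped at MIN_SCORE.
        score = self.scores[proxy] - 1
        if score <= MIN_SCORE:
            del self.scores[proxy]
        else:
            self.scores[proxy] = score

    def best(self, n):
        """Return the n highest-rated proxies."""
        return sorted(self.scores, key=self.scores.get, reverse=True)[:n]


def rate_all(store, checker=check_proxy):
    """Test every stored proxy once and update its score."""
    for proxy in list(store.scores):
        store.update(proxy, checker(proxy))
```

Passing the checker as a parameter keeps the scoring logic independent of the network, so it can be exercised with a fake checker before pointing it at real proxies.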

proxypool.py

    # -*- coding: utf-8 -*-
    from scrapy import Request, Spider
    from pyquery import PyQuery

    from ..items import ProxyItem


    class ProxySpider(Spider):
        name = 'proxy'
        # Domains of the proxy list sites crawled below
        allowed_domains = ['kuaidaili.com', '66ip.cn', 'ip3366.net']
        page = 1
        xicidail_url = "http://www.xicidaili.com/nn/{page}"
        kuaidaili_url = "https://www.kuaidaili.com/free/inha/{page}/"
        _66daili_url = "http://www.66ip.cn/areaindex_{page}/1.html"
        ip3366_url = "http://www.ip3366.net/?stype=1&page={page}"

        def start_requests(self):
            # One starting request per site; each callback follows its own pagination
            yield Request(url=self.kuaidaili_url.format(page=self.page), callback=self.kuaidaili_parse)
            yield Request(url=self._66daili_url.format(page=self.page), callback=self._66_daili_parse)
            yield Request(url=self.ip3366_url.format(page=self.page), callback=self.ip3366_parse)
            # yield Request(url=self.xicidail_url.format(page=1), callback=self.xicidaili_parse)

        def kuaidaili_parse(self, response):
            pq = PyQuery(response.text)
            proxies = pq.find("#list .table-bordered tbody").find("tr")
            for proxy in proxies.items():
                ip = proxy.find("td").eq(0).text()
                port = proxy.find("td").eq(1).text()
                # One item per table row, using the fields declared in items.py
                item = ProxyItem()
                item["ip"] = ip
                item["port"] = port
                print("Got proxy from %s: IP:%s PORT:%s" % ("www.kuaidaili.com", ip, port))
                yield item
            # The page number is the last path segment before the trailing slash
            now_page = int(response.url.split("/")[-2])
            next_page = now_page + 1
            if next_page <= 10:
                yield Request(url=self.kuaidaili_url.format(page=str(next_page)),
                              callback=self.kuaidaili_parse, dont_filter=True)

        def _66_daili_parse(self, response):
            pq = PyQuery(response.text)
            # tr:gt(0) skips the header row
            proxies = pq.find("#footer table tr:gt(0)")
            for proxy in proxies.items():
                ip = proxy.find("td").eq(0).text()
                port = proxy.find("td").eq(1).text()
                item = ProxyItem()
                item["ip"] = ip
                item["port"] = port
                print("Got proxy from %s: IP:%s PORT:%s" % ("http://www.66ip.cn", ip, port))
                yield item
            # The page number follows the underscore in "areaindex_{page}"
            now_page = int(response.url.split("/")[-2].split("_")[1])
            next_page = now_page + 1
            if next_page <= 34:
                yield Request(url=self._66daili_url.format(page=str(next_page)),
                              callback=self._66_daili_parse, dont_filter=True)

        def ip3366_parse(self, response):
            pq = PyQuery(response.text)
            proxies = pq.find("#list table tbody tr:gt(0)")
            for proxy in proxies.items():
                ip = proxy.find("td").eq(0).text()
                port = proxy.find("td").eq(1).text()
                item = ProxyItem()
                item["ip"] = ip
                item["port"] = port
                print("Got proxy from %s: IP:%s PORT:%s" % ("www.ip3366.net", ip, port))
                yield item
            # The page number is the value of the second query parameter
            now_page = int(response.url.split("=")[2])
            next_page = now_page + 1
            if next_page <= 10:
                yield Request(url=self.ip3366_url.format(page=str(next_page)),
                              callback=self.ip3366_parse, dont_filter=True)

        # def xicidaili_parse(self, response):
        #     print(response)
        #     page = int(response.url.split("/")[-1])
        #     pq = PyQuery(response.text)
        #     item = XicidailiItem()
        #     proxies = pq.find("#ip_list").find("tr")
        #     total_page = int(pq.find("#body .pagination a").eq(-2).text())
        #     self.page += 1
        #     for proxy in proxies.items():
        #         ip = proxy.find("td").eq(1).text()
        #         port = proxy.find("td").eq(2).text()
        #         item["proxy_info"] = ip + ":" + port
        #         # print(item["proxy_info"])
        #         yield item
        #     page += 1
        #     if page <= 10:
        #         yield Request(url=self.xicidail_url.format(page=str(page)), callback=self.xicidaili_parse, dont_filter=True)
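Each callback above recovers the current page number by splitting `response.url`, and the split differs per site. As a quick check of that logic outside Scrapy, the sketch below applies the same three expressions to URLs built from the spider's templates. The helper names (`kuaidaili_page`, `daili66_page`, `ip3366_page`, `next_url`) are made up for this illustration and do not exist in the project.

```python
# URL templates copied from the spider
KUAIDAILI_URL = "https://www.kuaidaili.com/free/inha/{page}/"
DAILI66_URL = "http://www.66ip.cn/areaindex_{page}/1.html"
IP3366_URL = "http://www.ip3366.net/?stype=1&page={page}"


def kuaidaili_page(url):
    # ".../free/inha/3/" -> [..., "inha", "3", ""] -> "3"
    return int(url.split("/")[-2])


def daili66_page(url):
    # ".../areaindex_3/1.html" -> "areaindex_3" -> "3"
    return int(url.split("/")[-2].split("_")[1])


def ip3366_page(url):
    # "...?stype=1&page=3" -> ["...?stype", "1&page", "3"] -> "3"
    return int(url.split("=")[2])


def next_url(template, extract, url, last_page):
    """Return the URL of the following page, or None past last_page."""
    page = extract(url) + 1
    return template.format(page=page) if page <= last_page else None


if __name__ == "__main__":
    print(next_url(KUAIDAILI_URL, kuaidaili_page, KUAIDAILI_URL.format(page=1), 10))
    print(next_url(DAILI66_URL, daili66_page, DAILI66_URL.format(page=34), 34))
```

These splits depend on the exact URL shape, so a change in any site's URL scheme (an extra query parameter, for example) would break the page extraction.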

items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    from scrapy import Item, Field


    class ProxyItem(Item):
        ip = Field()
        port = Field()

settings.py

    # -*- coding: utf-8 -*-

    # Scrapy settings for ProxyPool project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'ProxyPool'

    SPIDER_MODULES = ['ProxyPool.spiders']
    NEWSPIDER_MODULE = 'ProxyPool.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'ProxyPool (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    CONCURRENT_REQUESTS = 4

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
    }

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'ProxyPool.middlewares.ProxypoolSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'ProxyPool.middlewares.ProxypoolDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    # ITEM_PIPELINES = {
    #    'ProxyPool.pipelines.ProxyPipeline': 300,
    # }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    AUTOTHROTTLE_START_DELAY = 30
    # The maximum download delay to be set in case of high latencies
    AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
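The AutoThrottle values above (start delay 30, max delay 60, target concurrency 1.0) are what slow the crawl down after the IP ban. To get a feel for how the delay evolves, here is a simplified model of the adjustment rule as described in the Scrapy AutoThrottle documentation. It is a sketch under that reading of the docs, not Scrapy's actual code, and `next_delay` is a hypothetical helper name.

```python
def next_delay(current_delay, latency, target_concurrency=1.0,
               min_delay=0.0, max_delay=60.0, status=200):
    """Simplified model of one AutoThrottle adjustment step.

    min_delay plays the role of DOWNLOAD_DELAY (0 here, since it is
    commented out in settings.py) and max_delay of AUTOTHROTTLE_MAX_DELAY.
    """
    # Delay that would give target_concurrency parallel requests at this latency
    target_delay = latency / target_concurrency
    # Move halfway from the current delay towards the target
    new_delay = (current_delay + target_delay) / 2.0
    # Non-200 responses are not allowed to decrease the delay
    if status != 200 and new_delay <= current_delay:
        return current_delay
    # Keep the delay within [min_delay, max_delay]
    return max(min_delay, min(max_delay, new_delay))


# Start from AUTOTHROTTLE_START_DELAY = 30 and assume a steady 0.5 s latency
delay = 30.0
history = []
for latency in (0.5, 0.5, 0.5, 0.5):
    delay = next_delay(delay, latency)
    history.append(delay)

if __name__ == "__main__":
    print(history)
```

Under this model the delay halves towards the observed latency with each response, so a 30 second start delay mostly protects the first few requests. If a site bans aggressively, setting `DOWNLOAD_DELAY` as a floor may be the safer option.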