Hadoop CDH Installation

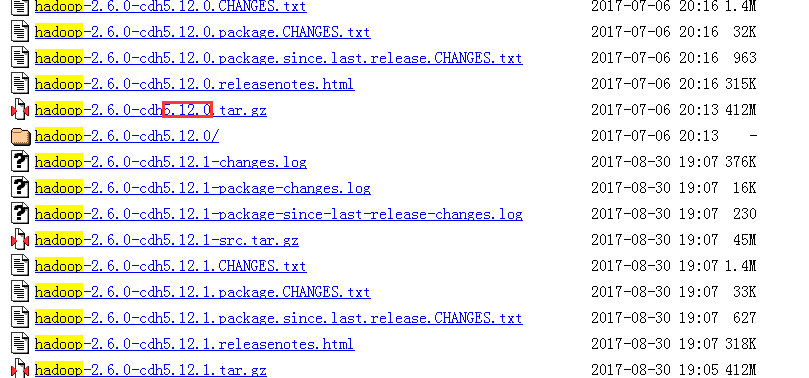

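1. Download the components

First, download the Hadoop package from the CDH archive.

Address: http://archive.cloudera.com/cdh5/cdh/5/

Make sure its CDH version matches the CDH version of all the other components you install.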
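One way to check the version rule before installing is to compare the CDH suffix embedded in each downloaded tarball's name. This is a minimal sketch that assumes every CDH tarball follows the `<component>-<version>-<cdhX.Y.Z>.tar.gz` pattern seen in hadoop-2.6.0-cdh5.12.0.tar.gz; `cdh_version` and `cdh_versions_match` are hypothetical helpers, not part of any CDH tooling.

```bash
# Sketch: compare the CDH suffix of several CDH tarball names.
# Assumption: names look like <component>-<version>-<cdhX.Y.Z>.tar.gz.

# Print the CDH suffix of one tarball name, e.g. "cdh5.12.0".
cdh_version() {
  local name=${1##*/}   # drop any leading directory
  name=${name%.tar.gz}  # drop the extension
  echo "${name##*-}"    # keep the text after the last '-'
}

# Return 0 if all given tarballs share the same CDH suffix, 1 otherwise.
cdh_versions_match() {
  local first="" v f
  for f in "$@"; do
    v=$(cdh_version "$f")
    if [ -z "$first" ]; then
      first=$v
    elif [ "$v" != "$first" ]; then
      echo "mismatch: $f is $v, expected $first" >&2
      return 1
    fi
  done
  return 0
}
```

For example, `cdh_versions_match hadoop-2.6.0-cdh5.12.0.tar.gz hive-1.1.0-cdh5.12.0.tar.gz` succeeds silently, while a tarball from another CDH release makes it print the mismatch and return 1 (the Hive file name is only an illustration).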

2. Environment setup

Set the hostname and username

Configure a static IP

Configure passwordless SSH login

Configure the JDK
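For the passwordless-SSH step, here is a minimal sketch that prints the commands instead of running them, so they can be reviewed first. It assumes the `hadoop` user and the node1 to node3 host names used later in this guide, and that OpenSSH's `ssh-keygen` and `ssh-copy-id` are installed; the master is in the list because the start scripts also log into it over SSH. `ssh_setup_plan` is a hypothetical helper.

```bash
# Sketch: print (do not run) the commands that set up passwordless SSH from
# the current host to every listed host, the current host included.
# Assumes OpenSSH's ssh-keygen and ssh-copy-id are installed.
ssh_setup_plan() {
  local user=$1; shift
  local host
  # Create a key pair without a passphrase, unless one already exists.
  echo "[ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa"
  # Install the public key on each host (asks for that host's password once).
  for host in "$@"; do
    echo "ssh-copy-id ${user}@${host}"
  done
}
```

Run it as the hadoop user on node1, check the output, then execute it, for example with `bash <(ssh_setup_plan hadoop node1.sunny.cn node2.sunny.cn node3.sunny.cn)` so that password prompts still reach the terminal.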

3.配置HADOOP

1. Create a `hadoop` user and, as root, give it permissions on the directories it will use under /opt. Run this on all nodes:

```bash
useradd -m hadoop -s /bin/bash
passwd hadoop
chown -R hadoop /opt/module/hadoop
chown -R hadoop /usr/sunny
```

Give the hadoop user administrator (sudo) rights:

```bash
visudo
```

```
## Next comes the main part: which users can run what software on
## which machines (the sudoers file can be shared between multiple
## systems).
## Syntax:
##
##      user    MACHINE=COMMANDS
##
## The COMMANDS section may have other options added to it.
##
## Allow root to run any commands anywhere
root    ALL=(ALL)       ALL
hadoop  ALL=(ALL)       ALL
```
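Instead of editing /etc/sudoers in place, the same rule can go into a drop-in file, which keeps the change separate and easy to validate. This sketch assumes /etc/sudoers contains `#includedir /etc/sudoers.d` (the CentOS 7 default); `write_sudoers_dropin` is a hypothetical helper.

```bash
# Sketch: grant <user> full sudo rights through a drop-in file.
# Assumes /etc/sudoers includes /etc/sudoers.d ("#includedir /etc/sudoers.d").
write_sudoers_dropin() {
  local user=$1 file=$2
  # Same rule as the "hadoop ALL=(ALL) ALL" line added with visudo.
  printf '%s ALL=(ALL) ALL\n' "$user" > "$file"
  # 0440 (read-only) is the usual mode for sudoers files.
  chmod 0440 "$file"
}
```

As root: `write_sudoers_dropin hadoop /etc/sudoers.d/hadoop`, then check the syntax with `visudo -cf /etc/sudoers.d/hadoop` before closing the root session.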

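2. Installing Hadoop under /home/hadoop is not recommended; install it under /opt instead. Switch to the hadoop user, extract the archive, move it to /opt/module, and rename the directory to hadoop:

```bash
tar -zxvf hadoop-2.6.0-cdh5.12.0.tar.gz
mv hadoop-2.6.0-cdh5.12.0 /opt/module/hadoop
```

Change the ownership of the hadoop directory:

```bash
sudo chown -R hadoop:hadoop /opt/module/hadoop
```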

3. Configure environment variables

```bash
vim ~/.bash_profile
```

Add the following lines:

```bash
export HADOOP_HOME=/opt/module/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```

Reload the profile:

```bash
source ~/.bash_profile
```
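To confirm the profile took effect, a small check can verify that HADOOP_HOME points to a directory and that its bin and sbin directories are on PATH. `check_hadoop_env` is a hypothetical helper; it only inspects the variables and does not run Hadoop.

```bash
# Sketch: sanity-check the variables exported in ~/.bash_profile.
check_hadoop_env() {
  if [ -z "${HADOOP_HOME:-}" ] || [ ! -d "$HADOOP_HOME" ]; then
    echo "HADOOP_HOME is unset or not a directory" >&2
    return 1
  fi
  # Wrap PATH in ':' so the first and last entries match too.
  case ":$PATH:" in
    *":$HADOOP_HOME/bin:"*) ;;
    *) echo "\$HADOOP_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
  case ":$PATH:" in
    *":$HADOOP_HOME/sbin:"*) ;;
    *) echo "\$HADOOP_HOME/sbin is not on PATH" >&2; return 1 ;;
  esac
  echo "ok: HADOOP_HOME=$HADOOP_HOME"
}
```

After `source ~/.bash_profile`, run `check_hadoop_env`; `hadoop version` is a quick end-to-end check afterwards.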

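4. Edit the configuration files

The configuration files are in /opt/module/hadoop/etc/hadoop (the etc/hadoop directory of the extracted hadoop-2.6.0-cdh5.12.0 package). The main files are: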

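| File | Format | Description |
|---|---|---|
| hadoop-env.sh | Bash script | Environment variables for running Hadoop |
| core-site.xml | XML | Core Hadoop settings, such as I/O settings common to HDFS and MapReduce |
| hdfs-site.xml | XML | Settings for the HDFS daemons: NameNode, SecondaryNameNode, DataNode, JournalNode (JN), etc. |
| yarn-env.sh | Bash script | Environment variables for running YARN |
| yarn-site.xml | XML | Settings for the YARN daemons: ResourceManager, NodeManager, etc. |
| mapred-site.xml | XML | Settings for the MapReduce framework |
| capacity-scheduler.xml | XML | YARN scheduling properties |
| container-executor.cfg | cfg | YARN container settings |
| mapred-queues.xml | XML | MapReduce queue settings |
| hadoop-metrics.properties | Java properties | Properties controlling how metrics are published in Hadoop |
| hadoop-metrics2.properties | Java properties | Properties controlling how metrics are published in Hadoop |
| slaves | Plain text | Machines that run a DataNode and NodeManager, one per line |
| exclude | Plain text | DataNodes to be removed (decommissioned) |
| log4j.properties | Java properties | Properties for system log files, the NameNode audit log, and the task logs of child processes |
| configuration.xsl | XSL | Stylesheet referenced by the configuration XML files |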

(1) Edit hadoop-env.sh and append the following environment variables at the end of the file (HADOOP_HOME is the install directory from step 2):

```bash
#--------------------Java Env------------------------------
export JAVA_HOME=/opt/module/jdk1.8.0_144
#--------------------Hadoop Env----------------------------
export HADOOP_HOME=/opt/module/hadoop
#--------------------Hadoop Daemon Options-----------------
# export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"
# export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"
#--------------------Hadoop Logs---------------------------
#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER
#--------------------SSH PORT-------------------------------
#export HADOOP_SSH_OPTS="-p 6000"  # If you changed the SSH login port, you must set this option.
```
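JAVA_HOME goes into hadoop-env.sh (not only ~/.bash_profile) because the start scripts launch daemons on the other nodes over non-interactive SSH, where the login profile may not be read. A sketch of an idempotent way to add the line, so the step can be repeated on every node without duplicating it; `set_hadoop_env_java` is a hypothetical helper.

```bash
# Sketch: append "export JAVA_HOME=<dir>" to hadoop-env.sh unless that exact
# line is already present.
set_hadoop_env_java() {
  local env_file=$1 java_home=$2
  local line="export JAVA_HOME=$java_home"
  # -x: match the whole line, -F: treat it as a fixed string, -q: no output.
  grep -qxF "$line" "$env_file" || printf '%s\n' "$line" >> "$env_file"
}
```

Usage: `set_hadoop_env_java /opt/module/hadoop/etc/hadoop/hadoop-env.sh /opt/module/jdk1.8.0_144`.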

(2) Edit core-site.xml

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node1.sunny.cn:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/sunny/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
</configuration>
```

This sets the host name and port that serve HDFS: HDFS is served on port 9000 of the master, node1.sunny.cn. It also determines which node runs the NameNode, i.e. the master node.
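Since every node needs an identical core-site.xml, one option is to render it from the two values that matter here (the NameNode host and the temp directory) and copy the result to each node. The sketch assumes the same values as above; `render_core_site` is a hypothetical helper that writes to standard output.

```bash
# Sketch: print a core-site.xml for the given NameNode host and temp directory.
render_core_site() {
  local namenode_host=$1 tmp_dir=$2
  cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${namenode_host}:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:${tmp_dir}</value>
  </property>
</configuration>
EOF
}
```

For example: `render_core_site node1.sunny.cn /usr/sunny/hadoop/tmp > /opt/module/hadoop/etc/hadoop/core-site.xml`, then copy the file to node2 and node3 with `scp`.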

(3) Edit hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node1.sunny.cn:50090</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/sunny/hadoop/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/sunny/hadoop/hdfs/data</value>
  </property>
</configuration>
```

The following is a configuration found online, for reference only (an HA setup):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- Enable WebHDFS -->
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <!-- Local directory for the NameNode name table -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/sunny/hadoop/hdfs/name</value>
  </property>
  <!-- Local directory where the NameNode stores the transaction file (edits) -->
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>${dfs.namenode.name.dir}</value>
  </property>
  <!-- Local directory where the DataNode stores blocks -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoopuser/hadoop-2.6.0-cdh5.6.0/data/dfs/data</value>
  </property>
  <!-- Number of replicas per file; the default is 3 -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Block size 256 MB (the Hadoop 2 default is 128 MB) -->
  <property>
    <name>dfs.blocksize</name>
    <value>268435456</value>
  </property>
  <!--======================================================================= -->
  <!-- HDFS high-availability configuration -->
  <!-- Logical name of the nameservice -->
  <property>
    <name>dfs.nameservices</name>
    <value>hadoop-cluster</value>
  </property>
  <!-- NameNode IDs; version 5.6 supports only 2 NameNodes -->
  <property>
    <name>dfs.ha.namenodes.hadoop-cluster</name>
    <value>namenode1,namenode2</value>
  </property>
  <!-- HDFS HA: dfs.namenode.rpc-address.[nameservice ID].[NameNode ID], RPC address -->
  <property>
    <name>dfs.namenode.rpc-address.hadoop-cluster.namenode1</name>
    <value>namenode1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.hadoop-cluster.namenode2</name>
    <value>namenode2:8020</value>
  </property>
  <!-- HDFS HA: dfs.namenode.http-address.[nameservice ID].[NameNode ID], HTTP address -->
  <property>
    <name>dfs.namenode.http-address.hadoop-cluster.namenode1</name>
    <value>namenode1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.hadoop-cluster.namenode2</name>
    <value>namenode2:50070</value>
  </property>
  <!--================== NameNode edit log synchronization ============================================ -->
  <!-- Ensures data can be recovered -->
  <property>
    <name>dfs.journalnode.http-address</name>
    <value>0.0.0.0:8480</value>
  </property>
  <property>
    <name>dfs.journalnode.rpc-address</name>
    <value>0.0.0.0:8485</value>
  </property>
  <!-- JournalNode addresses; the QuorumJournalManager stores the edit log on them -->
  <!-- Format: qjournal://<host1:port1>;<host2:port2>;<host3:port3>/<journalId>, port same as dfs.journalnode.rpc-address -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://namenode1:8485;namenode2:8485;namenode3:8485/hadoop-cluster</value>
  </property>
  <!-- Local directory where JournalNodes store their data -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/usr/sunny/hadoop/hdfs/journal</value>
  </property>
  <!--================== Client failover ============================================ -->
  <property>
    <!-- Strategy clients use to find the active NameNode -->
    <name>dfs.client.failover.proxy.provider.hadoop-cluster</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!--================== NameNode fencing =============================================== -->
  <!-- After a failover, prevent the stopped NameNode from coming back and leaving two active NameNodes -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoopuser/.ssh/id_rsa</value>
  </property>
  <property>
    <!-- Milliseconds after which SSH fencing is considered failed -->
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
  <!--================== NameNode automatic failover based on ZKFC and ZooKeeper ====================== -->
  <!-- Enable automatic failover through ZooKeeper and the ZKFC process, which detects whether a NameNode has died -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <!--<value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>-->
    <value>Hadoop-DN-01:2181,Hadoop-DN-02:2181,Hadoop-DN-03:2181</value>
  </property>
  <property>
    <!-- ZooKeeper session timeout, in milliseconds -->
    <name>ha.zookeeper.session-timeout.ms</name>
    <value>2000</value>
  </property>
</configuration>
```
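The `dfs.namenode.shared.edits.dir` value follows the qjournal format given in the comment of that configuration. A minimal sketch that builds it from a host list and warns when the number of JournalNodes is even (the edit log needs a majority of JournalNodes, so an odd count such as 3 is the usual choice); `qjournal_uri` is a hypothetical helper.

```bash
# Sketch: build a qjournal:// URI for dfs.namenode.shared.edits.dir.
# Usage: qjournal_uri <journalId> <port> <host>...
qjournal_uri() {
  local journal_id=$1 port=$2
  shift 2
  # A majority quorum of an even number tolerates no more failures than one node fewer.
  if [ $(( $# % 2 )) -eq 0 ]; then
    echo "warning: $# JournalNodes; an odd number such as 3 is recommended" >&2
  fi
  local hosts="" h
  for h in "$@"; do
    # Join host:port pairs with ';' as the qjournal format requires.
    hosts="${hosts:+$hosts;}${h}:${port}"
  done
  echo "qjournal://${hosts}/${journal_id}"
}
```

Usage: `qjournal_uri hadoop-cluster 8485 namenode1 namenode2 namenode3`.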

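dfs.replication sets how many copies of each block HDFS keeps; HDFS maintains the redundant copies automatically. A value of 3 means each block is stored 3 times (2 extra copies). The configuration above uses 2, matching the two DataNodes (node2 and node3).

dfs.namenode.name.dir (older name: dfs.name.dir) sets the local file-system path where the NameNode stores its metadata.

dfs.datanode.data.dir (older name: dfs.data.dir) sets the local file-system path where the DataNode stores its data.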
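dfs.replication cannot usefully exceed the number of DataNodes: with only two DataNodes, a value of 3 leaves every block under-replicated. A sketch that compares the setting with the hosts listed in the slaves file, assuming one host per line with blank lines and `#` comments ignored; `check_replication` is a hypothetical helper.

```bash
# Sketch: fail if dfs.replication is larger than the number of DataNodes
# listed in the slaves file.
check_replication() {
  local replication=$1 slaves_file=$2
  local datanodes
  # Count lines that are neither blank nor comments; grep prints 0 on no match.
  datanodes=$(grep -cEv '^[[:space:]]*(#|$)' "$slaves_file" || true)
  if [ "$replication" -gt "$datanodes" ]; then
    echo "dfs.replication=$replication exceeds $datanodes DataNode(s)" >&2
    return 1
  fi
  echo "ok: $replication replica(s) on $datanodes DataNode(s)"
}
```

Usage: `check_replication 2 /opt/module/hadoop/etc/hadoop/slaves`.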

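(4) Edit mapred-site.xml

The directory only contains mapred-site.xml.template, so first copy it to mapred-site.xml (`cp mapred-site.xml.template mapred-site.xml`).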

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>node1.sunny.cn:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>node1.sunny.cn:19888</value>
  </property>
</configuration>
```
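Copying the template is only needed once. A re-runnable version of that step, which leaves an existing (already edited) mapred-site.xml alone, might look like this; `ensure_mapred_site` is a hypothetical helper that takes the configuration directory.

```bash
# Sketch: create mapred-site.xml from the shipped template only if it is missing.
ensure_mapred_site() {
  local conf_dir=$1
  if [ -f "$conf_dir/mapred-site.xml" ]; then
    echo "exists: $conf_dir/mapred-site.xml"
  else
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
    echo "created: $conf_dir/mapred-site.xml"
  fi
}
```

Usage: `ensure_mapred_site /opt/module/hadoop/etc/hadoop`, then edit the file as shown.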

The following is a configuration found online, for reference only:

```xml
<configuration>
  <!-- JVM size for task JVMs -->
  <property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx1000m</value>
    <final>true</final>
    <description>final=true prevents users from changing the JVM size</description>
  </property>
  <!-- Configure MapReduce applications to run on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- JobHistory Server ============================================================== -->
  <!-- MapReduce JobHistory Server address, default port 10020 -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>0.0.0.0:10020</value>
  </property>
  <!-- MapReduce JobHistory Server web UI address, default port 19888 -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>0.0.0.0:19888</value>
  </property>
</configuration>
```

(5) Edit yarn-site.xml

```xml
<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>node1.sunny.cn</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```

The following is a configuration found online, for reference only (a ResourceManager HA setup):

```xml
<configuration>
  <!-- NodeManager configuration ================================================= -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <description>Address where the localizer IPC is.</description>
    <name>yarn.nodemanager.localizer.address</name>
    <value>0.0.0.0:23344</value>
  </property>
  <property>
    <description>NM Webapp address.</description>
    <name>yarn.nodemanager.webapp.address</name>
    <value>0.0.0.0:23999</value>
  </property>
  <!-- HA configuration =============================================================== -->
  <!-- Resource Manager Configs -->
  <property>
    <name>yarn.resourcemanager.connect.retry-interval.ms</name>
    <value>2000</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Enable embedded automatic failover; in an HA setup it works with ZKRMStateStore to handle fencing -->
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.embedded</name>
    <value>true</value>
  </property>
  <!-- Cluster name; makes sure the HA election applies to the right cluster -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yarn-cluster</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <!-- The ID of the active/standby RM has to be set separately on each RM node (optional):
  <property>
    <name>yarn.resourcemanager.ha.id</name>
    <value>rm2</value>
  </property>
  -->
  <property>
    <name>yarn.resourcemanager.scheduler.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>
    <value>5000</value>
  </property>
  <!-- ZKRMStateStore configuration -->
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <!--<value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>-->
    <value>Hadoop-DN-01:2181,Hadoop-DN-02:2181,Hadoop-DN-03:2181</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk.state-store.address</name>
    <!--<value>Zookeeper-01:2181,Zookeeper-02:2181,Zookeeper-03:2181</value>-->
    <value>Hadoop-DN-01:2181,Hadoop-DN-02:2181,Hadoop-DN-03:2181</value>
  </property>
  <!-- RPC address clients use to reach the RM (applications manager interface) -->
  <property>
    <name>yarn.resourcemanager.address.rm1</name>
    <value>Hadoop-NN-01:23140</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address.rm2</name>
    <value>Hadoop-NN-02:23140</value>
  </property>
  <!-- RPC address ApplicationMasters use to reach the RM (scheduler interface) -->
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm1</name>
    <value>Hadoop-NN-01:23130</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm2</name>
    <value>Hadoop-NN-02:23130</value>
  </property>
  <!-- RM admin interface -->
  <property>
    <name>yarn.resourcemanager.admin.address.rm1</name>
    <value>Hadoop-NN-01:23141</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address.rm2</name>
    <value>Hadoop-NN-02:23141</value>
  </property>
  <!-- RPC port NodeManagers use to reach the RM -->
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm1</name>
    <value>Hadoop-NN-01:23125</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm2</name>
    <value>Hadoop-NN-02:23125</value>
  </property>
  <!-- RM web application address -->
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>Hadoop-NN-01:23188</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>Hadoop-NN-02:23188</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.https.address.rm1</name>
    <value>Hadoop-NN-01:23189</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.https.address.rm2</name>
    <value>Hadoop-NN-02:23189</value>
  </property>
</configuration>
```

(6) Edit the slaves file

It lists the DataNode hosts (which also run a NodeManager), one per line:

```
node2.sunny.cn
node3.sunny.cn
```
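The start scripts read this file one host per line, so each host must be on its own line. A sketch that writes the file from a host list and drops accidental duplicates while keeping the order; `write_slaves` is a hypothetical helper.

```bash
# Sketch: write one host per line to the slaves file, skipping duplicates.
write_slaves() {
  local file=$1; shift
  # awk prints a line only the first time it is seen.
  printf '%s\n' "$@" | awk '!seen[$0]++' > "$file"
}
```

Usage: `write_slaves /opt/module/hadoop/etc/hadoop/slaves node2.sunny.cn node3.sunny.cn`.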

5. Format the NameNode (once, on node1)

```bash
hdfs namenode -format
```

6. Start Hadoop

```bash
start-dfs.sh
start-yarn.sh        # start-all.sh can replace the two commands above
mr-jobhistory-daemon.sh start historyserver
```

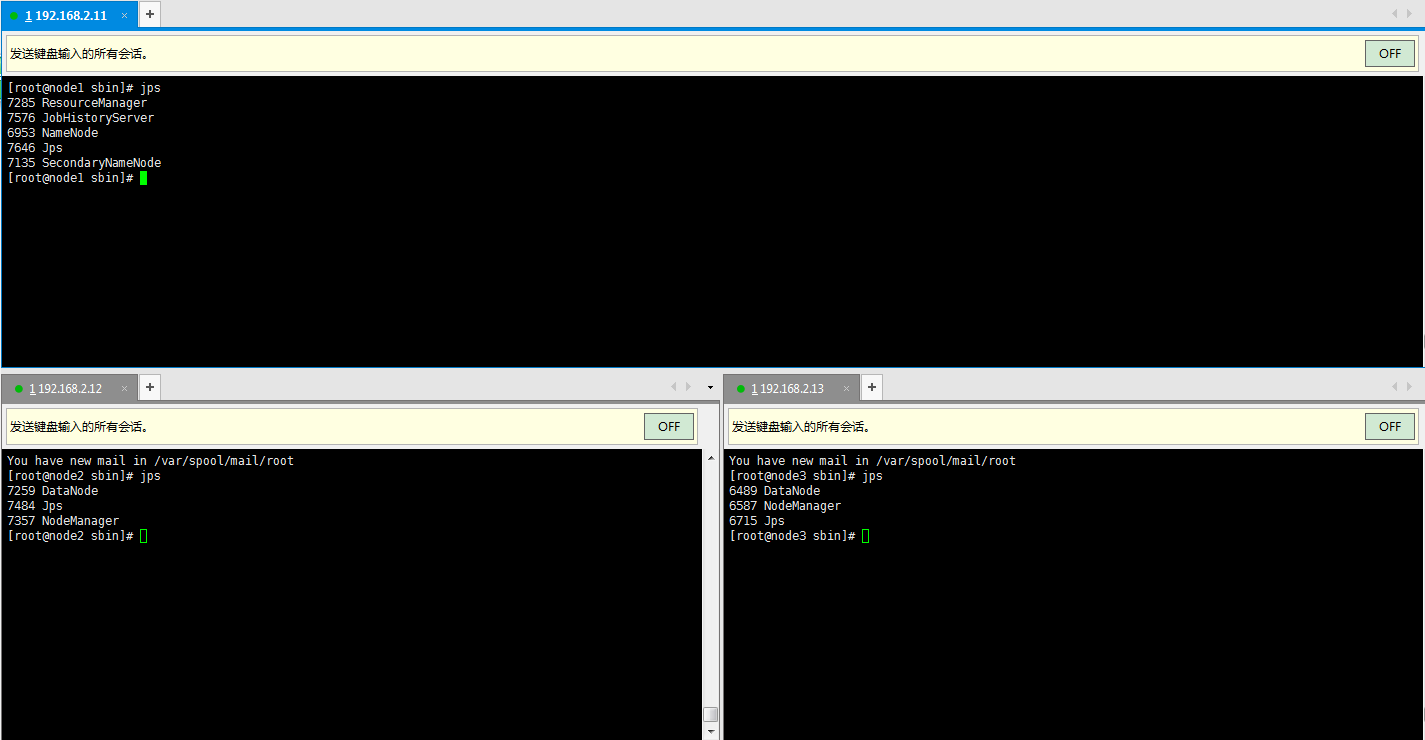

The processes running after startup.
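With this layout, `jps` on node1 should list NameNode, SecondaryNameNode, ResourceManager, and JobHistoryServer, and on node2/node3 it should list DataNode and NodeManager. A sketch that checks a `jps` listing for the expected daemon names; `missing_daemons` is a hypothetical helper, and the per-node names are derived from the configuration above.

```bash
# Sketch: report which expected daemons are absent from `jps` output.
# Usage: missing_daemons "<jps output>" <DaemonName>...
missing_daemons() {
  local jps_output=$1; shift
  local d missing=()
  for d in "$@"; do
    # jps prints "<pid> <MainClassName>"; compare the second column exactly.
    printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$d" || missing+=("$d")
  done
  if [ ${#missing[@]} -gt 0 ]; then
    echo "missing: ${missing[*]}"
    return 1
  fi
  echo "all running"
}
```

On node1: `missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager JobHistoryServer`; on node2 and node3: `missing_daemons "$(jps)" DataNode NodeManager`.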

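If a DataNode fails to start, delete the tmp folder under hadoop on all nodes and format the NameNode again. This usually happens after the NameNode has been formatted more than once: the DataNodes still hold the old clusterID and the NameNode rejects them.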
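Before wiping the directories, it can help to confirm the cause: the NameNode and each DataNode record a clusterID in the `current/VERSION` file of their storage directories, and the two must match. The paths are the ones from hdfs-site.xml (`/usr/sunny/hadoop/hdfs/name` on node1, `/usr/sunny/hadoop/hdfs/data` on the DataNodes); `cluster_id` and `cluster_ids_match` are hypothetical helpers. Because the directories live on different machines here, you can also run `cluster_id` on each node and compare the printed values.

```bash
# Sketch: compare the clusterID recorded by a NameNode and a DataNode.

# Print the clusterID= value from <storage dir>/current/VERSION.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1/current/VERSION"
}

# Usage: cluster_ids_match <namenode name dir> <datanode data dir>
cluster_ids_match() {
  local nn_id dn_id
  nn_id=$(cluster_id "$1" 2>/dev/null || true)
  dn_id=$(cluster_id "$2" 2>/dev/null || true)
  if [ -n "$nn_id" ] && [ "$nn_id" = "$dn_id" ]; then
    echo "match: $nn_id"
  else
    echo "mismatch: NameNode=${nn_id:-?} DataNode=${dn_id:-?}" >&2
    return 1
  fi
}
```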

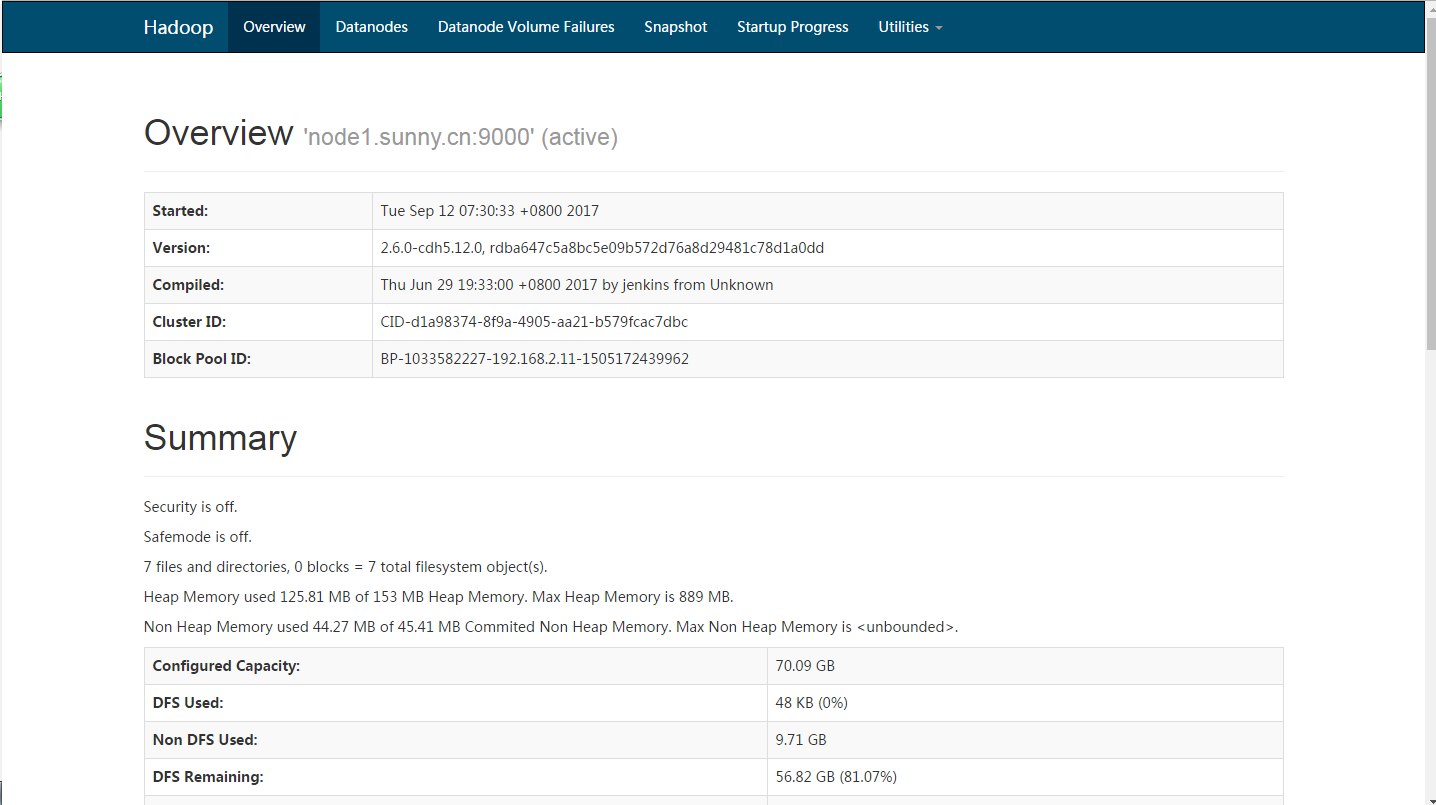

NameNode web UI: http://192.168.2.11:50070

7. Run a distributed example

(1) Create the user directory in HDFS

```bash
hdfs dfs -mkdir -p /user/hadoop
```

==============================Tricky section ahead, skim it first====================================================

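The following warning may appear during this step:

```
WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
```

It means the native library in hadoop/lib/native could not be loaded. To fix it, download the source package hadoop-2.6.0-cdh5.12.0-src.tar.gz and build it yourself.

Upload the package to the server, extract it, and run the following in the extracted source directory:

```bash
mvn package -Dmaven.javadoc.skip=true -Pdist,native -DskipTests -Dtar
```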

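The build failed with:

```
Detected JDK Version: 1.8.0-144 is not in the allowed range [1.7.0,1.7.1000}
```

This means building the source requires JDK 1.7, but JDK 1.8 was installed, so downgrade the JDK.

After `source ~/.bash_profile` the JDK was still 1.8, and closing all processes did not change it either; a reboot finally fixed it.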
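A quick way to confirm which JDK the current shell actually uses is to parse the output of `java -version` (printed on stderr). If it still reports 1.8 after sourcing the profile, an earlier PATH entry or a system-wide profile may still point at the old JDK; `command -v java` shows which binary is picked up. `java_version_of` and `is_jdk7` are hypothetical helpers; the 1.7 requirement comes from the error above.

```bash
# Sketch: extract the version string from `java -version` output.
# Input: text such as  java version "1.8.0_144"   Output: 1.8.0_144
java_version_of() {
  printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only for 1.7.x, the range the CDH source build accepts.
is_jdk7() {
  case "$(java_version_of "$1")" in
    1.7.*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `is_jdk7 "$(java -version 2>&1)" && echo "JDK 7 in use"`.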

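Continue building the source. It takes anywhere from 20 to more than 70 minutes; switching Maven to a mirror inside China makes it faster.

...

Well, the build failed again at hadoop-common:

```
[ERROR] Failed to execute goal org.apache.hadoop:hadoop-maven-plugins:2.6.0-cdh5.12.0:protoc (compile-protoc) on project hadoop-common: org.apache.maven.plugin.MojoExecutionException: 'protoc --version' did not return a version -> [Help 1]
```

After some research:

protobuf (Protocol Buffers) is Google's mechanism for serializing structured data. Most of Google's RPC protocols are built on protocol buffers, and Hadoop's RPC between master and slave nodes is implemented with it as well, so it has to be installed.

protoc from protobuf-2.5.0 is required, but the official Google download link no longer works. A download link shared by a kind soul: http://pan.baidu.com/s/1pJlZubT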

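Upload it to the server, extract it, and get ready to build.

Before building protoc, install gcc, gcc-c++, and make; otherwise you get another pile of errors:

```bash
yum install gcc
yum install gcc-c++
yum install make
tar -xvf protobuf-2.5.0.tar.bz2
cd protobuf-2.5.0
./configure --prefix=/opt/module/protoc/
make && make install
```

After the build, add protoc to the environment variables (for example in ~/.bash_profile):

```bash
PROTOC_HOME="/opt/module/protoc"
export PATH=$PATH:$PROTOC_HOME/bin
```

Then source the file.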
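The build error above comes from the plugin running `protoc --version`, and Hadoop 2.x expects exactly protoc 2.5.0. A sketch that checks the protoc now on PATH before restarting the Maven build; `check_protoc` is a hypothetical helper whose expected version defaults to 2.5.0.

```bash
# Sketch: verify that `protoc --version` prints "libprotoc <expected>".
check_protoc() {
  local expected=${1:-2.5.0} out
  if ! out=$(protoc --version 2>/dev/null); then
    echo "protoc not found on PATH (or it failed to run)" >&2
    return 1
  fi
  if [ "$out" != "libprotoc $expected" ]; then
    echo "found '$out', expected 'libprotoc $expected'" >&2
    return 1
  fi
  echo "ok: $out"
}
```

Run `check_protoc` in the same shell you will build from; it should print `ok: libprotoc 2.5.0`.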

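Now the Hadoop source build can continue.

OK

The build finally succeeded, after what felt like forever.

The built package is in the source directory under hadoop-dist/target/ (hadoop-2.6.0-cdh5.12.0.tar.gz for this source).

Extract this package and copy the files from its lib/native directory into the local /opt/module/hadoop/lib/native directory.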

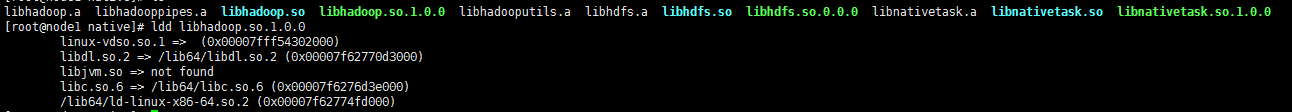

Check the shared-library dependencies of the native library (run inside lib/native):

```bash
ldd libhadoop.so.1.0.0
```

Then distribute these files to the other nodes:

```bash
# Run once per other node, replacing <user>@<host> with that node's login.
scp * <user>@<host>:/opt/module/hadoop/lib/native/
```

Then add the following to ~/.bash_profile:

```bash
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
```

Then source the file.

Verified: OK.

==============================End of the tricky section====================================================

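Continuing:

(2) Copy the configuration files in /opt/module/hadoop/etc/hadoop into HDFS as input files and run the grep example (relative paths such as input resolve to /user/hadoop, the directory created in step (1)):

```bash
hdfs dfs -mkdir input
hdfs dfs -put /opt/module/hadoop/etc/hadoop/*.xml input
hadoop jar /opt/module/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar grep input output 'dfs[a-z.]+'
```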

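Monitor progress at http://192.168.2.11:8088/cluster

View the output:

```bash
hdfs dfs -cat output/*
```

To run the job again, delete output first, because the job fails if the output directory already exists:

```bash
hdfs dfs -rm -r output
```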

Notes:

Shutting down the Hadoop cluster

Run on node1:

```bash
stop-yarn.sh
stop-dfs.sh          # stop-all.sh can replace the two commands above
mr-jobhistory-daemon.sh stop historyserver
```

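Details:

Basic Hadoop operations

| Command | Equivalent | Purpose |
|---|---|---|
| `hadoop fs -ls <path>` | `hdfs dfs -ls <path>` | List a directory |
| `hadoop fs -mkdir <path>` | `hdfs dfs -mkdir <path>` | Create a directory |
| `hadoop fs -rm -r <path>` | `hdfs dfs -rm -r <path>` | Delete a directory |
| `hadoop fs -put <src> <dst>` | `hdfs dfs -put <src> <dst>` | Upload a file to HDFS |
| `hadoop fs -get <src> <dst>` | `hdfs dfs -get <src> <dst>` | Download a file |
| `hadoop fs -cp <src> <dst>` | `hdfs dfs -cp <src> <dst>` | Copy a file |
| `hadoop fs -cat <path>` | `hdfs dfs -cat <path>` | Display a file |
| `hadoop fs -touchz <path>` | `hdfs dfs -touchz <path>` | Create an empty file |

List all commands supported by the Hadoop shell:

```bash
hadoop fs -help
```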

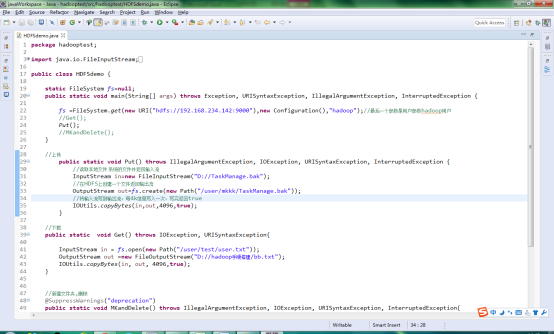

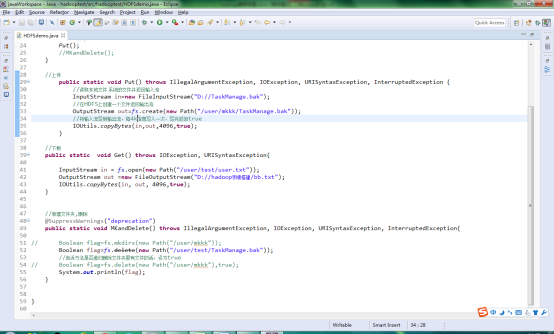

Java example:

Working with HDFS from a Java program

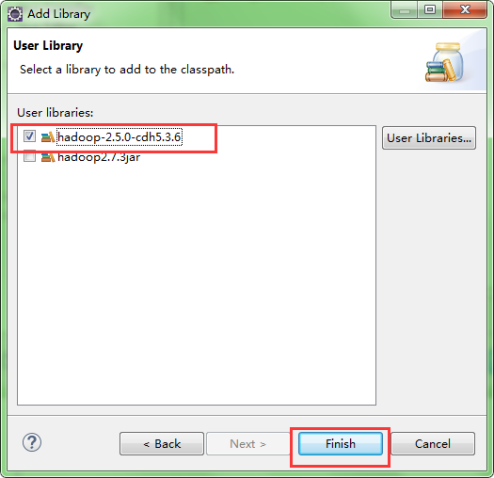

First, import the Hadoop jars.

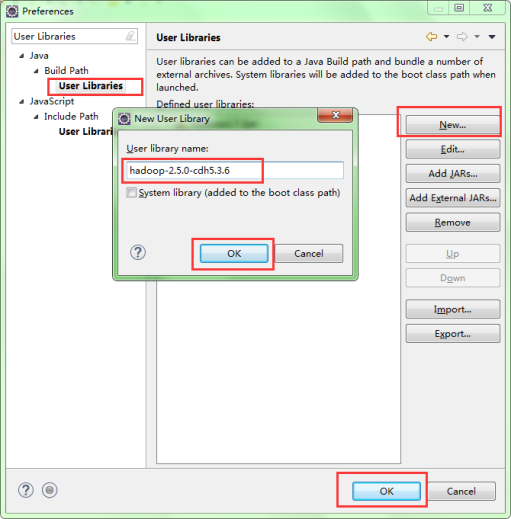

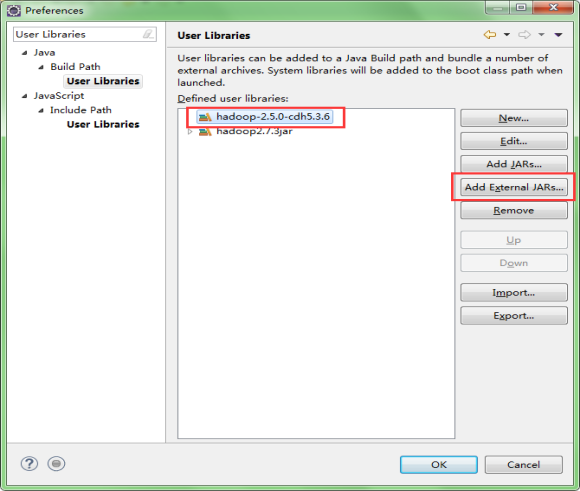

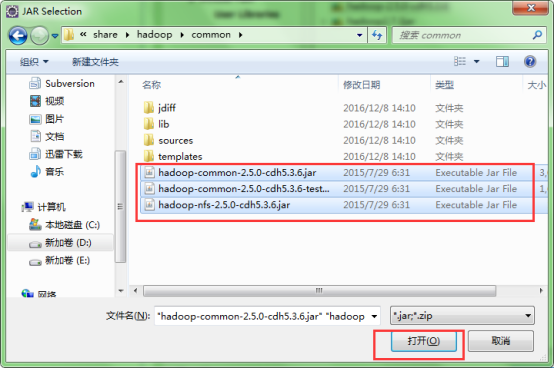

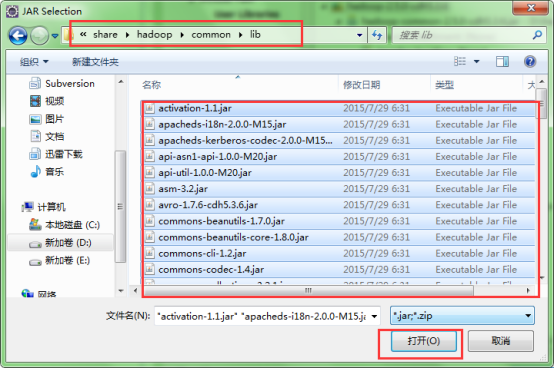

Create a new User Library.

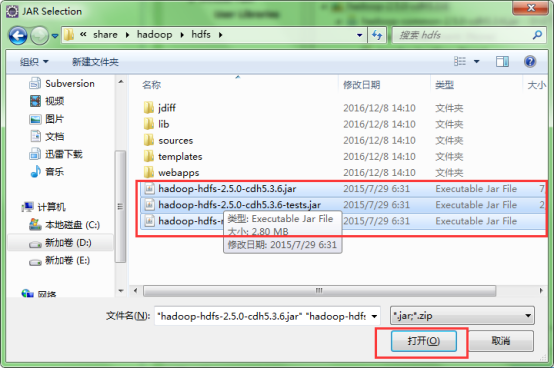

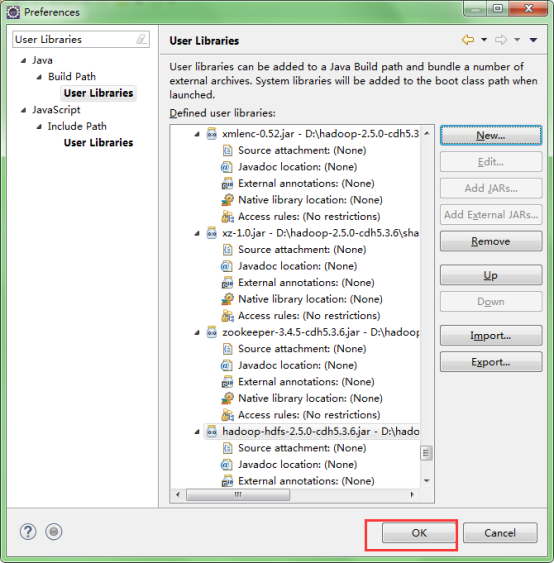

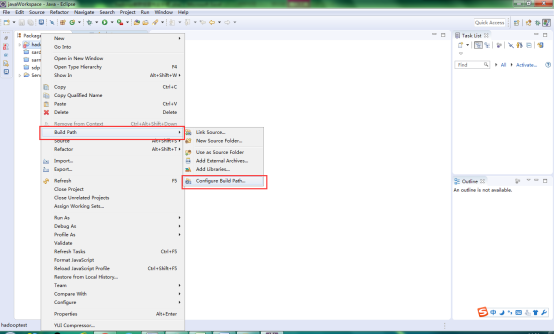

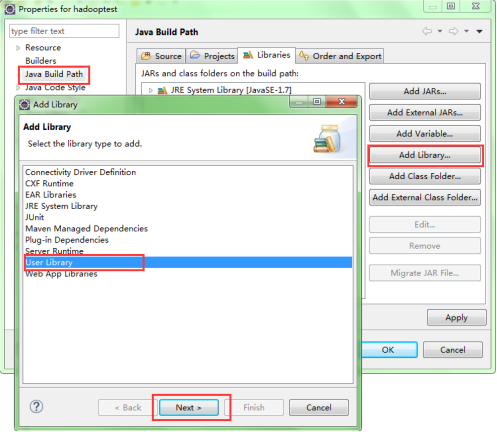

Click Window --> Preferences and type User Libraries in the search box.
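To know which jars to add to the User Library, you can list them from the Hadoop installation. This sketch assumes the standard `$HADOOP_HOME/share/hadoop` layout and that an HDFS client needs the common and hdfs jars plus their lib directories (add mapreduce and yarn for MapReduce programs); `hdfs_client_jars` is a hypothetical helper. The real `hadoop classpath` command prints the full classpath, with wildcards, if you prefer that.

```bash
# Sketch: list jars typically needed by an HDFS client program.
# Usage: hdfs_client_jars <HADOOP_HOME>
hdfs_client_jars() {
  local home=$1 dir
  for dir in common common/lib hdfs hdfs/lib; do
    # Skip directories that do not exist in this installation.
    [ -d "$home/share/hadoop/$dir" ] || continue
    # -maxdepth 1 avoids sub-directories such as sources/ or jdiff/.
    find "$home/share/hadoop/$dir" -maxdepth 1 -name '*.jar' | sort
  done
}
```

Usage: `hdfs_client_jars /opt/module/hadoop`, then add the listed files in the User Library dialog.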