How the Linux Buddy System Works: Memory Allocation and Freeing

This article analyzes the Linux buddy system algorithm, focusing on how memory is allocated and freed.

1. Introduction to the buddy system

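An important job of Linux kernel memory management is to avoid fragmentation when memory is allocated and freed frequently.

Linux uses the buddy system to address external fragmentation and the slab allocator to address internal fragmentation.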

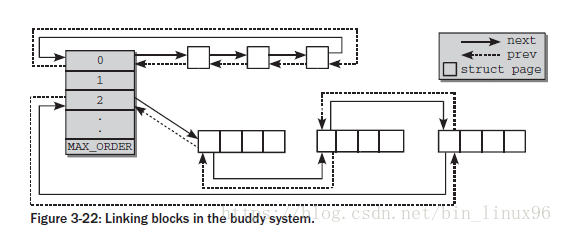

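The buddy algorithm (buddy system) groups all free page frames into 11 block lists, and each list holds blocks of one specific size of physically contiguous page frames. List 0 holds blocks of 2^0 = 1 page frame, each element of list 1 is a block of 2 contiguous page frames, and so on, up to list 10, where each element is a block of 2^10 = 1024 page frames, that is, 4 MB of contiguous address space with 4 KB pages. The number of elements in each list is set at system initialization and changes dynamically at run time. The buddy algorithm can only hand out a power-of-two number of pages per request, for example 1, 2, 4, 8, ..., 1024 (2^10) pages. A page is typically 4 KB, so the largest single allocation is 4 MB.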
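The size arithmetic above can be sketched in a few lines of user-space C. This is only an illustration: the names SKETCH_PAGE_SIZE, SKETCH_MAX_ORDER, block_pages, block_bytes and order_for_pages are made up for this sketch and are not kernel APIs, and the 4 KB page size is an assumption taken from the text.

```c
#include <assert.h>

#define SKETCH_PAGE_SIZE 4096UL   /* assumed 4 KB page, as in the text */
#define SKETCH_MAX_ORDER 11       /* orders 0..10, as in the text */

/* Number of pages in one block of the given order: 2^order. */
static unsigned long block_pages(unsigned int order)
{
    assert(order < SKETCH_MAX_ORDER);
    return 1UL << order;
}

/* Size in bytes of one block of the given order. */
static unsigned long block_bytes(unsigned int order)
{
    return block_pages(order) * SKETCH_PAGE_SIZE;
}

/*
 * Smallest order whose block covers nr_pages pages, or -1 if the
 * request is larger than the biggest block (2^10 pages).
 */
static int order_for_pages(unsigned long nr_pages)
{
    unsigned int order;

    for (order = 0; order < SKETCH_MAX_ORDER; order++)
        if (block_pages(order) >= nr_pages)
            return (int)order;
    return -1;
}
```

For example, a request for 3 pages has to be rounded up to order 2 (4 pages), and block_bytes(10) evaluates to 4 MB, which matches the upper limit described above.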

1.1.1 Key data structures

struct zone {

struct free_area free_area[MAX_ORDER];

};

struct free_area {

struct list_head free_list[MIGRATE_TYPES];

unsigned long nr_free;

};

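free_area has MAX_ORDER elements, and the element at index order tracks the free blocks of 2^order pages. These free blocks are organized as doubly linked lists in free_list. Blocks of the same size but of different migrate types are kept on different free_list entries, and nr_free records the total number of free blocks in that free_area. MAX_ORDER defaults to 11, which means the largest block is 2^10 = 1024 page frames. For blocks of the same size, the first page frame of each block acts as the list node, and these nodes are linked through the lru field of struct page.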

struct page {

struct list_head lru; /* Pageout list, eg. active_list

* protected by zone->lru_lock !

*/

};
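To show how a list node embedded in a page descriptor leads back to the descriptor itself, here is a minimal user-space sketch. The struct sketch_page type and the helpers list_init, list_add_head, list_unlink, list_is_empty and pop_first_block are hypothetical stand-ins written for this example. The list_entry macro mimics the kernel macro of the same name using offsetof, but it is a simplified re-implementation, not the kernel's definition.

```c
#include <assert.h>
#include <stddef.h>

/* User-space stand-in for the kernel's struct list_head. */
struct list_head {
    struct list_head *next, *prev;
};

/* Recover the enclosing structure from a pointer to its embedded member. */
#define list_entry(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical, stripped-down page descriptor for this sketch. */
struct sketch_page {
    unsigned long pfn;
    struct list_head lru;   /* links the first page of a free block */
};

static void list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

/* Insert new_node right after head. */
static void list_add_head(struct list_head *new_node, struct list_head *head)
{
    new_node->next = head->next;
    new_node->prev = head;
    head->next->prev = new_node;
    head->next = new_node;
}

static void list_unlink(struct list_head *node)
{
    node->prev->next = node->next;
    node->next->prev = node->prev;
    node->next = node->prev = node;
}

static int list_is_empty(const struct list_head *head)
{
    return head->next == head;
}

/* Take the first free block off a free list, or NULL if the list is empty. */
static struct sketch_page *pop_first_block(struct list_head *free_list)
{
    struct sketch_page *page;

    if (list_is_empty(free_list))
        return NULL;
    page = list_entry(free_list->next, struct sketch_page, lru);
    list_unlink(&page->lru);
    return page;
}
```

Because the node lives inside the descriptor, the free list needs no separate allocation per block; adding two pages at the head and popping twice returns them in last-in, first-out order.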

1.1.2 Migrate types

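Unmovable pages (non-movable pages): these pages have a fixed position in memory and cannot be moved. Most memory allocated by the core kernel is of this type.

Reclaimable pages: these pages cannot be moved directly, but they can be discarded because their contents can be regenerated from another source. Data mapped from files belongs to this type, and the kernel has dedicated page reclaim handling for such pages.

Movable pages: these pages can be moved freely. Pages used by user-space applications belong to this category. They are mapped through page tables, so when they are copied to a new location the page table entries are updated accordingly and the application notices no change.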

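When the list for the requested migrate type has no free block, the allocator searches the lists of the other migrate types in the order defined below (this version of the array comes from kernels that still define MIGRATE_RESERVE):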

static int fallbacks[MIGRATE_TYPES][MIGRATE_TYPES-1] = {

[MIGRATE_UNMOVABLE] = { MIGRATE_RECLAIMABLE, MIGRATE_MOVABLE, MIGRATE_RESERVE },

[MIGRATE_RECLAIMABLE] = { MIGRATE_UNMOVABLE, MIGRATE_MOVABLE, MIGRATE_RESERVE },

[MIGRATE_MOVABLE] = { MIGRATE_RECLAIMABLE, MIGRATE_UNMOVABLE, MIGRATE_RESERVE },

[MIGRATE_RESERVE] = { MIGRATE_RESERVE, MIGRATE_RESERVE, MIGRATE_RESERVE }, /* Never used */

};

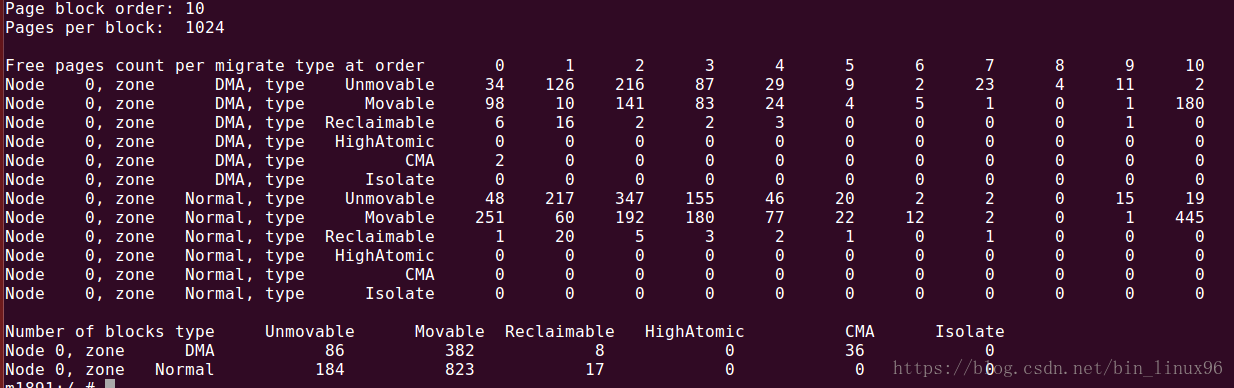

Running cat /proc/pagetypeinfo shows how free blocks are distributed across migrate types and orders.

1.2 Memory allocation

The allocation function is alloc_pages, which eventually calls __alloc_pages_nodemask.

struct page *

__alloc_pages_nodemask(gfp_t gfp_mask, unsigned int order,

struct zonelist *zonelist, nodemask_t *nodemask)

{

struct zoneref *preferred_zoneref;

struct page *page = NULL;

unsigned int cpuset_mems_cookie;

int alloc_flags = ALLOC_WMARK_LOW|ALLOC_CPUSET|ALLOC_FAIR;

gfp_t alloc_mask; /* The gfp_t that was actually used for allocation */

struct alloc_context ac = {

/* Derive the zone index and the migrate type from gfp_mask */

.high_zoneidx = gfp_zone(gfp_mask),

.nodemask = nodemask,

.migratetype = gfpflags_to_migratetype(gfp_mask),

};

retry_cpuset:

cpuset_mems_cookie = read_mems_allowed_begin();

/* We set it here, as __alloc_pages_slowpath might have changed it */

ac.zonelist = zonelist;

/* Dirty zone balancing only done in the fast path */

ac.spread_dirty_pages = (gfp_mask & __GFP_WRITE);

/* Use the allocation mask to decide which zone to try first */

/* The preferred zone is used for statistics later */

preferred_zoneref = first_zones_zonelist(ac.zonelist, ac.high_zoneidx,

ac.nodemask ? : &cpuset_current_mems_allowed,

&ac.preferred_zone);

if (!ac.preferred_zone)

goto out;

ac.classzone_idx = zonelist_zone_idx(preferred_zoneref);

/* First allocation attempt */

alloc_mask = gfp_mask|__GFP_HARDWALL;

/* Fast path */

page = get_page_from_freelist(alloc_mask, order, alloc_flags, &ac);

if (unlikely(!page)) {

/* Slow path; it involves memory reclaim and is not analyzed here */

page = __alloc_pages_slowpath(alloc_mask, order, &ac);

}

out:

if (unlikely(!page && read_mems_allowed_retry(cpuset_mems_cookie)))

goto retry_cpuset;

return page;

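}

1.2.1 Watermark control

Each zone has three watermarks that indicate how much free memory remains. They are stored in the watermark[NR_WMARK] array.

The three entries are WMARK_MIN, WMARK_LOW and WMARK_HIGH. When free memory falls below the watermark being checked, zone_reclaim() is called to reclaim memory.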

mark = zone->watermark[alloc_flags & ALLOC_WMARK_MASK];

if (!zone_watermark_ok(zone, order, mark, /* is the watermark still satisfied? */

ac->classzone_idx, alloc_flags)) {

ret = zone_reclaim(zone, gfp_mask, order);

}
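The idea behind the check can be illustrated with a deliberately simplified model. It is not the kernel's zone_watermark_ok(), which also takes lowmem reserves and other factors into account; the names sketch_zone, sketch_watermark_ok and sketch_needs_reclaim, and the rule "the pages left after the request must not drop below the mark", are assumptions made for this sketch.

```c
#include <assert.h>
#include <stdbool.h>

enum sketch_wmark { SK_WMARK_MIN, SK_WMARK_LOW, SK_WMARK_HIGH, SK_NR_WMARK };

/* Hypothetical zone with only the fields this sketch needs. */
struct sketch_zone {
    unsigned long free_pages;
    unsigned long watermark[SK_NR_WMARK];
};

/*
 * Simplified watermark test: after taking 2^order pages out of
 * free_pages, is the remainder still at or above the mark?
 */
static bool sketch_watermark_ok(unsigned long free_pages, unsigned int order,
                                unsigned long mark)
{
    unsigned long request = 1UL << order;

    if (free_pages < request)
        return false;
    return free_pages - request >= mark;
}

/* True if an allocation of this order should trigger reclaim first. */
static bool sketch_needs_reclaim(const struct sketch_zone *z,
                                 unsigned int order, enum sketch_wmark which)
{
    assert(which < SK_NR_WMARK);
    return !sketch_watermark_ok(z->free_pages, order, z->watermark[which]);
}
```

With 100 free pages and watermarks of 20, 40 and 60 pages, an order-6 request (64 pages) leaves 36 pages: that passes the MIN check but fails the LOW check, so in this model the fast path, which uses the LOW watermark, would attempt reclaim.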

1.2.2 Allocating a single page

get_page_from_freelist->buffered_rmqueue:

When order is 0, the single page is taken directly from the per-CPU free list, which is the most efficient path.

if (likely(order == 0)) {

struct per_cpu_pages *pcp;

struct list_head *list;

local_irq_save(flags);

pcp = &this_cpu_ptr(zone->pageset)->pcp;

list = &pcp->lists[migratetype];

/* If the list is empty, refill it from the buddy system */

if (list_empty(list)) {

pcp->count += rmqueue_bulk(zone, 0,

pcp->batch, list,

migratetype, gfp_flags);

if (unlikely(list_empty(list)))

goto failed;

}

/* Allocate one page */

if ((gfp_flags & __GFP_COLD) != 0)

page = list_entry(list->prev, struct page, lru);

else

page = list_entry(list->next, struct page, lru);

if (!(gfp_flags & __GFP_CMA) &&

is_migrate_cma(get_pcppage_migratetype(page))) {

page = NULL;

local_irq_restore(flags);

} else {

list_del(&page->lru);

pcp->count--;

}

}

1.2.3 Allocating multiple pages (order >= 1)

get_page_from_freelist->buffered_rmqueue->__rmqueue:

static struct page *__rmqueue(struct zone *zone, unsigned int order,

int migratetype, gfp_t gfp_flags)

{

struct page *page = NULL;

/* CMA allocation */

if ((migratetype == MIGRATE_MOVABLE) && (gfp_flags & __GFP_CMA))

page = __rmqueue_cma_fallback(zone, order);

if (!page) /* allocate from the free list that matches order and migrate type */

page = __rmqueue_smallest(zone, order, migratetype);

if (unlikely(!page)) /* this migrate type cannot satisfy the order: fall back to other types, following the rules in the fallbacks array */

page = __rmqueue_fallback(zone, order, migratetype);

return page;

}

The code below covers the case where the requested migrate type can satisfy the allocation.

static inline

struct page *__rmqueue_smallest(struct zone *zone, unsigned int order,

int migratetype)

{

unsigned int current_order;

struct free_area *area;

struct page *page;

/* Starting at order, search upward through the larger orders */

for (current_order = order; current_order < MAX_ORDER; ++current_order) {

area = &(zone->free_area[current_order]);

if (list_empty(&area->free_list[migratetype]))

continue;

page = list_entry(area->free_list[migratetype].next,

struct page, lru);

list_del(&page->lru);

rmv_page_order(page);

area->nr_free--;

/* Cut the cake: if current_order is larger than the requested order, hang the leftover pieces on the free lists of the matching lower orders. */

expand(zone, page, order, current_order, area, migratetype);

set_pcppage_migratetype(page, migratetype);

return page;

}

return NULL;

}
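The search-then-split behaviour of the loop above can be tried out with a toy user-space model. Everything here is hypothetical scaffolding for illustration: the free lists are small per-order stacks of start PFNs instead of linked struct page entries, migrate types are left out, and sk_init, sk_push and sk_alloc are invented names.

```c
#include <assert.h>
#include <string.h>

#define SK_MAX_ORDER  11
#define SK_MAX_BLOCKS 64

/* Toy free lists: for each order, a small stack of block start PFNs. */
struct sk_free_lists {
    unsigned long pfn[SK_MAX_ORDER][SK_MAX_BLOCKS];
    unsigned int nr_free[SK_MAX_ORDER];
};

static void sk_init(struct sk_free_lists *fl)
{
    memset(fl, 0, sizeof(*fl));
}

/* Put a free block of the given order on its list. */
static void sk_push(struct sk_free_lists *fl, unsigned int order,
                    unsigned long pfn)
{
    assert(order < SK_MAX_ORDER);
    assert(fl->nr_free[order] < SK_MAX_BLOCKS);
    fl->pfn[order][fl->nr_free[order]++] = pfn;
}

/*
 * Allocate a block of 2^order pages.
 * Returns the start PFN of the block, or -1 if nothing large enough is free.
 */
static long sk_alloc(struct sk_free_lists *fl, unsigned int order)
{
    unsigned int cur;

    for (cur = order; cur < SK_MAX_ORDER; cur++) {
        unsigned long pfn, size;

        if (fl->nr_free[cur] == 0)
            continue;               /* nothing here, try the next order up */

        pfn = fl->pfn[cur][--fl->nr_free[cur]];
        size = 1UL << cur;

        /* Split: keep the lower half, return the upper half one order down. */
        while (cur > order) {
            cur--;
            size >>= 1;
            sk_push(fl, cur, pfn + size);
        }
        return (long)pfn;
    }
    return -1;
}
```

Starting with a single free order-3 block at PFN 0, a request for one page returns PFN 0 and leaves free blocks at PFN 4 (order 2), PFN 2 (order 1) and PFN 1 (order 0), which is the same halving pattern the kernel's expand() performs.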

static inline void expand(struct zone *zone, struct page *page,

int low, int high, struct free_area *area,

int migratetype)

{

unsigned long size = 1 << high; /* block size in pages; halved on each pass of the loop */

while (high > low) {

area--; /* step down to the free_area of the next lower order */

high--;

size >>= 1; /* halve the size */

/* Add the upper half to the free list of the lower order */

list_add(&page[size].lru, &area->free_list[migratetype]);

area->nr_free++;

set_page_order(&page[size], high);

}

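}

1.2.4 Fallback allocation

When the requested migrate type cannot satisfy the allocation, memory is taken from the free lists of other migrate types.

The rules are defined as follows; MIGRATE_TYPES marks the end of each row: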

static int fallbacks[MIGRATE_TYPES][4] = {

[MIGRATE_UNMOVABLE] = { MIGRATE_RECLAIMABLE, MIGRATE_MOVABLE, MIGRATE_TYPES },

[MIGRATE_RECLAIMABLE] = { MIGRATE_UNMOVABLE, MIGRATE_MOVABLE, MIGRATE_TYPES },

[MIGRATE_MOVABLE] = { MIGRATE_RECLAIMABLE, MIGRATE_UNMOVABLE, MIGRATE_TYPES },

#ifdef CONFIG_CMA

[MIGRATE_CMA] = { MIGRATE_TYPES }, /* Never used */

#endif

#ifdef CONFIG_MEMORY_ISOLATION

[MIGRATE_ISOLATE] = { MIGRATE_TYPES }, /* Never used */

#endif

};

static inline struct page *

__rmqueue_fallback(struct zone *zone, unsigned int order, int start_migratetype)

{

struct free_area *area;

unsigned int current_order;

struct page *page;

int fallback_mt;

bool can_steal;

/* Start from the largest order and look for a suitable block */

for (current_order = MAX_ORDER-1;

current_order >= order && current_order <= MAX_ORDER-1;

--current_order) {

area = &(zone->free_area[current_order]);

/* Pick a suitable migrate type list according to the fallbacks array */

fallback_mt = find_suitable_fallback(area, current_order,

start_migratetype, false, &can_steal);

if (fallback_mt == -1)

continue;

page = list_entry(area->free_list[fallback_mt].next,

struct page, lru);

if (can_steal && /* change the migrate type of the pageblock */

get_pageblock_migratetype(page) != MIGRATE_HIGHATOMIC)

steal_suitable_fallback(zone, page, start_migratetype);

/* Remove the page from the freelists */

area->nr_free--;

list_del(&page->lru);

rmv_page_order(page);

/* Cut the cake: split off the part that is not needed */

expand(zone, page, order, current_order, area,

start_migratetype);

set_pcppage_migratetype(page, start_migratetype);

trace_mm_page_alloc_extfrag(page, order, current_order,

start_migratetype, fallback_mt);

return page;

}

return NULL;

}

For example, when the UNMOVABLE list runs out of memory, the allocator tries the RECLAIMABLE list first and then the MOVABLE list.
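The lookup order can be sketched as a small table walk. This is a simplified model that only counts free blocks per migrate type; it leaves out CMA, isolation and the stealing logic. The sk_ names are hypothetical, and the table merely mirrors the three main rows of the fallbacks array shown earlier.

```c
#include <assert.h>

#define SK_ORDERS 11

enum sk_migratetype {
    SK_UNMOVABLE,
    SK_MOVABLE,
    SK_RECLAIMABLE,
    SK_TYPES        /* number of types; also the end-of-row marker */
};

static const int sk_fallbacks[SK_TYPES][3] = {
    [SK_UNMOVABLE]   = { SK_RECLAIMABLE, SK_MOVABLE,   SK_TYPES },
    [SK_RECLAIMABLE] = { SK_UNMOVABLE,   SK_MOVABLE,   SK_TYPES },
    [SK_MOVABLE]     = { SK_RECLAIMABLE, SK_UNMOVABLE, SK_TYPES },
};

/*
 * nr_free[t] is the number of free blocks of type t at one given order.
 * Returns the first fallback type that has a free block, or -1 if none.
 */
static int sk_find_fallback(const unsigned int nr_free[SK_TYPES],
                            int start_type)
{
    int i;

    assert(start_type >= 0 && start_type < SK_TYPES);
    for (i = 0; sk_fallbacks[start_type][i] != SK_TYPES; i++) {
        int fallback = sk_fallbacks[start_type][i];

        if (nr_free[fallback] > 0)
            return fallback;
    }
    return -1;
}

/*
 * Search from the largest order down to the requested one, as the loop in
 * __rmqueue_fallback does. Returns the order where a fallback block was
 * found (storing its type in *fallback_type), or -1 if there is none.
 */
static int sk_fallback_search(unsigned int nr_free[SK_ORDERS][SK_TYPES],
                              unsigned int order, int start_type,
                              int *fallback_type)
{
    int cur;

    for (cur = SK_ORDERS - 1; cur >= (int)order; cur--) {
        int mt = sk_find_fallback(nr_free[cur], start_type);

        if (mt == -1)
            continue;
        *fallback_type = mt;
        return cur;
    }
    return -1;
}
```

Searching from the top means the fallback takes the largest available foreign block rather than the smallest one, so one large block is split instead of many small blocks of another type being scattered across pageblocks.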

1.3 Freeing memory

Page freeing eventually reaches the __free_one_page function.

For two blocks to be buddies, the following conditions must hold:

1. The buddy is not in a hole; it must be backed by real physical pages.

2. The buddy is in the buddy system, meaning it is free and has not been allocated.

3. The page and its buddy have the same order.

4. The page and its buddy are in the same zone.

5. The two blocks are physically adjacent, and their starting pfns differ by exactly 2^order, which gives the formula B2 = B1 ^ (1 << O).

static inline unsigned long

__find_buddy_index(unsigned long page_idx, unsigned int order)

{

return page_idx ^ (1 << order); /* index of the buddy page */

}
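A short worked example makes the XOR formula easier to follow. The helper names sk_buddy_index and sk_combined_index are invented for this sketch; the arithmetic is the same XOR and AND used in the kernel code in this section.

```c
#include <assert.h>

/* Index of the buddy of the block that starts at page_idx. */
static unsigned long sk_buddy_index(unsigned long page_idx, unsigned int order)
{
    assert((page_idx & ((1UL << order) - 1)) == 0);  /* block must be aligned */
    return page_idx ^ (1UL << order);
}

/* Start index of the merged block: the lower of the two buddies. */
static unsigned long sk_combined_index(unsigned long page_idx,
                                       unsigned int order)
{
    return page_idx & sk_buddy_index(page_idx, order);
}
```

For an order-2 block at index 8, flipping bit 2 gives the buddy at index 12, and the buddy of 12 is 8 again. The merged order-3 block starts at 8 & 12 = 8, and its own buddy at order 3 is 8 ^ 8 = 0.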

static inline void __free_one_page(struct page *page,

unsigned long pfn,

struct zone *zone, unsigned int order,

int migratetype)

{

unsigned long page_idx;

unsigned long combined_idx;

unsigned long uninitialized_var(buddy_idx);

struct page *buddy;

unsigned int max_order;

max_order = min_t(unsigned int, MAX_ORDER, pageblock_order + 1);

/* Compute page_idx: the low MAX_ORDER bits of the pfn */

page_idx = pfn & ((1 << MAX_ORDER) - 1);

VM_BUG_ON_PAGE(page_idx & ((1 << order) - 1), page);

VM_BUG_ON_PAGE(bad_range(zone, page), page);

continue_merging:

while (order < max_order - 1) {

/* Find the buddy index */

buddy_idx = __find_buddy_index(page_idx, order);

buddy = page + (buddy_idx - page_idx);

/* Check whether it really is a buddy block */

if (!page_is_buddy(page, buddy, order))

goto done_merging;

/*

* Our buddy is free or it is CONFIG_DEBUG_PAGEALLOC guard page,

* merge with it and move up one order.

*/

if (page_is_guard(buddy)) {

clear_page_guard(zone, buddy, order, migratetype);

} else {

list_del(&buddy->lru);

zone->free_area[order].nr_free--;

rmv_page_order(buddy);

}

/* Keep merging upward */

combined_idx = buddy_idx & page_idx;

page = page + (combined_idx - page_idx);

page_idx = combined_idx;

order++;

}

done_merging:

set_page_order(page, order);

/*

* If this is not the largest possible page, check if the buddy

* of the next-highest order is free. If it is, it's possible

* that pages are being freed that will coalesce soon. In case,

* that is happening, add the free page to the tail of the list

* so it's less likely to be used soon and more likely to be merged

* as a higher order page

*/

if ((order < MAX_ORDER-2) && pfn_valid_within(page_to_pfn(buddy))) {

struct page *higher_page, *higher_buddy;

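/* Check whether the next order up also has a free buddy. If it does, add the page to the tail of the list so that it is less likely to be allocated soon and more likely to be merged into a larger block. */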

combined_idx = buddy_idx & page_idx;

higher_page = page + (combined_idx - page_idx);

buddy_idx = __find_buddy_index(combined_idx, order + 1);

higher_buddy = higher_page + (buddy_idx - combined_idx);

if (page_is_buddy(higher_page, higher_buddy, order + 1)) {

list_add_tail(&page->lru,

&zone->free_area[order].free_list[migratetype]);

goto out;

}

}

/* Link the block into the matching free list */

list_add(&page->lru, &zone->free_area[order].free_list[migratetype]);

out:

zone->free_area[order].nr_free++;

}
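The merge loop can be exercised with a toy model of a 16-page zone. This is a sketch under simplifying assumptions: a flat array records which pages start a free block and at what order, in place of struct page flags and linked lists, and there are no migrate types, guard pages or tail-placement heuristics. The names sk_zone, sk_zone_init and sk_free_block are hypothetical.

```c
#include <assert.h>

#define SK_NR_PAGES  16
#define SK_TOP_ORDER 4    /* 2^4 = 16 pages: the whole toy zone */

/*
 * free_order[i] is the order of the free block that starts at page i,
 * or -1 if no free block starts there.
 */
struct sk_zone {
    int free_order[SK_NR_PAGES];
};

static void sk_zone_init(struct sk_zone *z)
{
    int i;

    for (i = 0; i < SK_NR_PAGES; i++)
        z->free_order[i] = -1;
}

/*
 * Free the block of 2^order pages starting at page_idx and merge it with
 * free buddies for as long as possible. Returns the order of the final block.
 */
static unsigned int sk_free_block(struct sk_zone *z, unsigned long page_idx,
                                  unsigned int order)
{
    assert(page_idx < SK_NR_PAGES);
    assert((page_idx & ((1UL << order) - 1)) == 0);  /* must be aligned */

    while (order < SK_TOP_ORDER) {
        unsigned long buddy_idx = page_idx ^ (1UL << order);

        /* The buddy must be free and of the same order. */
        if (z->free_order[buddy_idx] != (int)order)
            break;

        z->free_order[buddy_idx] = -1;  /* take the buddy off its list */
        page_idx &= buddy_idx;          /* merged block starts at the lower index */
        order++;
    }
    z->free_order[page_idx] = (int)order;
    return order;
}
```

Freeing page 0 and then page 1 produces one order-1 block at index 0. Freeing the order-1 block at index 2 afterwards merges again into an order-2 block at index 0, and so on until the whole 16-page zone is a single order-4 block.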