Face Recognition from a Camera with Python 3 and Dlib 19.7

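0. Introduction

This project is written in Python and uses the Dlib library to capture faces from a camera, extract their features, and compare them with stored face features by Euclidean distance, which is how a face gets recognized.

Face images can be cropped from the camera stream automatically and saved locally, then used to build the preset face features.

From several cropped or existing face images of the same person, one 128D feature vector is extracted per image, and the mean of those 128D vectors is computed for that person.

The features extracted from a face captured live by the camera are then compared with this mean by Euclidean distance to decide whether it is the same face.

A note on face recognition:

Wikipedia describes a face recognition system as follows: they work by comparing selected facial features from given image with faces within a database.

In this project the comparison is between the features of the preset face and the features of the face captured live by the camera.

The core is to extract the 128D face features and then compute the Euclidean distance between the camera face's features and the preset face's features.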
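To make the comparison step concrete, here is a minimal sketch of the distance check using only the standard library. The function names and the toy 3-D vectors are illustrative assumptions; the 0.4 default is the threshold this post uses later, and real descriptors have 128 components.

```python
import math

def euclidean_distance(feature_1, feature_2):
    """Euclidean (L2) distance between two equal-length feature vectors."""
    if len(feature_1) != len(feature_2):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(feature_1, feature_2)))

def is_same_person(feature_1, feature_2, threshold=0.4):
    """True when the distance does not exceed the threshold."""
    return euclidean_distance(feature_1, feature_2) <= threshold

# Toy 3-D vectors stand in for real 128-D descriptors.
print(euclidean_distance([0.0, 0.0, 0.0], [0.3, 0.4, 0.0]))  # approximately 0.5
print(is_same_person([0.0, 0.0, 0.0], [0.3, 0.4, 0.0]))      # False, since 0.5 > 0.4
```

The smaller the distance, the more alike the two faces are considered to be; the threshold turns that number into a same / diff decision.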

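The result looks like this (the camera recognizes me as default_person, the preset face; another person, who is not the preset face, is shown as diff):

Figure 1: camera face recognition result (gif)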

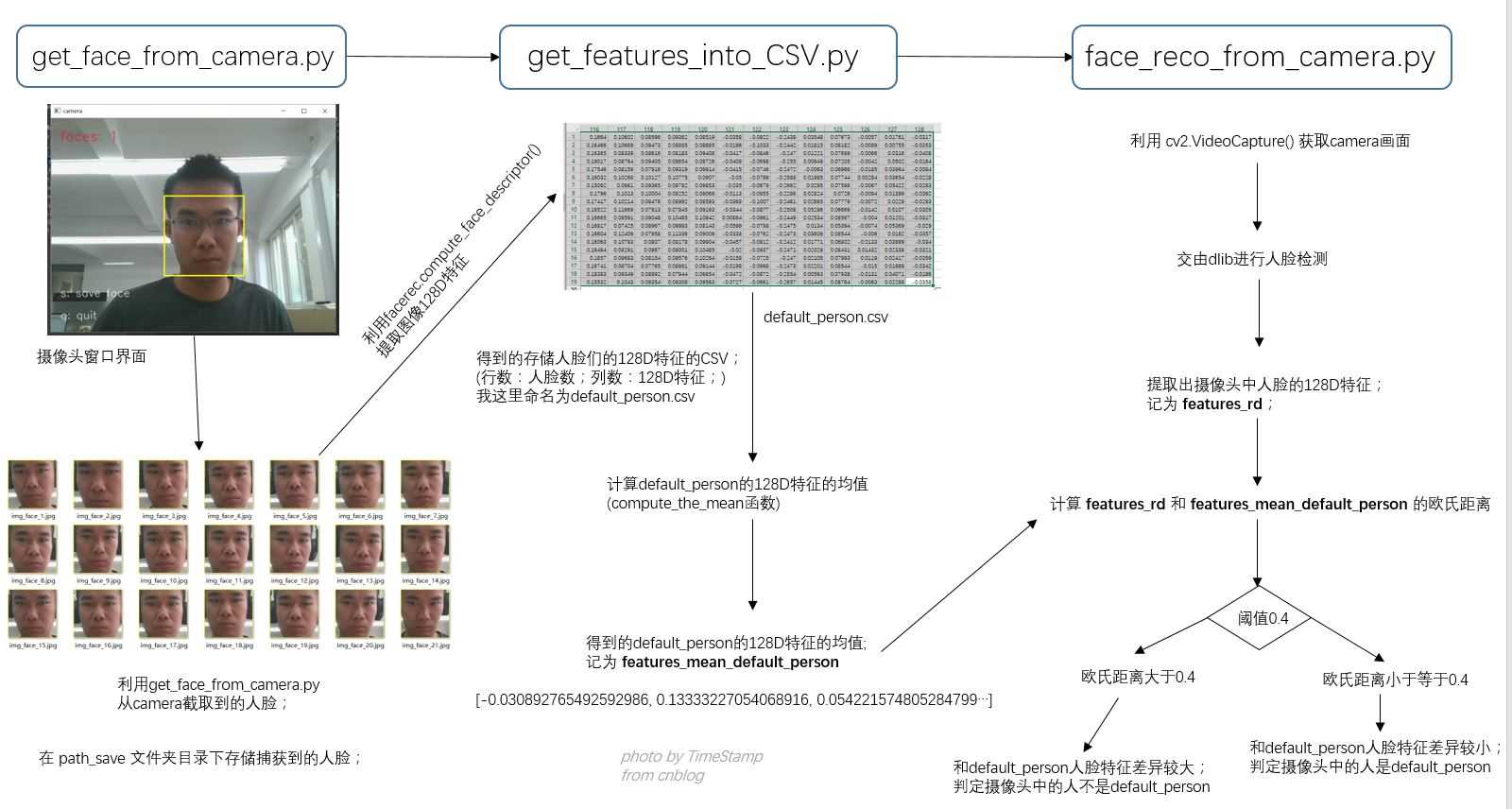

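1. Overall flow

First, a word on face detection versus face recognition. Detection only has to find the faces in a scene. Recognition must additionally compare each detected face with existing face data to decide whether it is in the database, or to label its identity. The two are sometimes confused.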
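The "is it in the database" part of recognition can be sketched as a nearest-neighbour lookup. This post itself only compares against a single preset person, so the `identify` function, the dictionary layout and the toy 2-D vectors below are assumptions made for illustration (`math.dist` needs Python 3.8 or later).

```python
import math

def identify(features, database, threshold=0.4):
    """Return the name of the closest known person, or "unknown".

    database maps a name to that person's mean feature vector.
    """
    best_name, best_dist = "unknown", float("inf")
    for name, known in database.items():
        dist = math.dist(features, known)
        if dist < best_dist:
            best_name, best_dist = name, dist
    # Even the closest entry is rejected when it is too far away.
    return best_name if best_dist <= threshold else "unknown"

# Toy 2-D vectors stand in for 128-D mean descriptors.
database = {"alice": [0.0, 0.0], "bob": [1.0, 1.0]}
print(identify([0.1, 0.0], database))  # alice
print(identify([5.0, 5.0], database))  # unknown
```

Detection alone would stop at "there is a face here"; the lookup is what turns it into recognition.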

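My earlier projects used dlib for face detection. This project implements face recognition, building on the face_recognition.py example from the dlib website (link:http://dlib.net/face_recognition.py.html).

The core is to use the model “dlib_face_recognition_resnet_model_v1.dat” to extract the 128D features of a face image, then compare the 128D features of different face images: the Euclidean distance between them is computed and checked against a threshold to decide whether they show the same face.

    # face recognition model, the object maps human faces into 128D vectors
    facerec = dlib.face_recognition_model_v1("dlib_face_recognition_resnet_model_v1.dat")

    # excerpt: img is the input image, dets the detector output,
    # predictor the landmark predictor
    shape = predictor(img, dets[0])
    face_descriptor = facerec.compute_face_descriptor(img, shape)

Figure 2: overall design flow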

2. Source code

The project consists of three .py files:

- get_face_from_camera.py
- get_features_into_CSV.py
- face_reco_from_camera.py

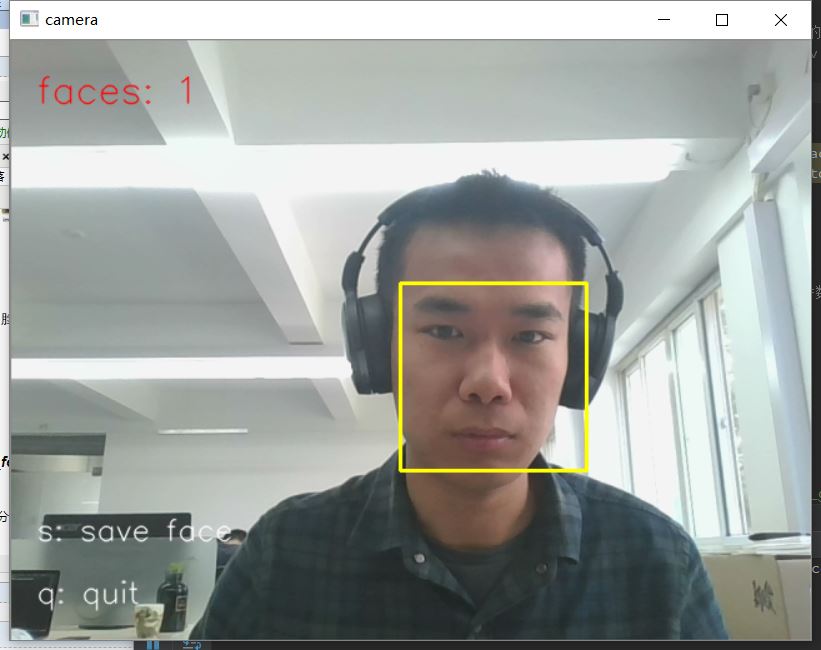

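2.1 get_face_from_camera.py / collecting face data for XXX

Face recognition compares extracted image data with existing image data, so this .py file collects and builds the face data of XXX.

The program opens a window showing the live image from the camera (for how to access the camera, see my other blog post: //www.jb51.net/article/135512.htm).

Press s to save the face in the current video frame; the save path is set by path_save = “xxxx/get_from_camera/”.

Press q to close the window.

The camera is opened with OpenCV's cv2.VideoCapture(0). The argument 0 selects the default camera, here the laptop's built-in one; you can also pass the path of an existing video file instead.

Figure 3: the get_face_from_camera.py window

You then end up with a set of captured faces in the directory given by path_save.

Figure 4: a set of captured faces

The source code is as follows: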

    # 2018-5-11
    # By TimeStamp
    # cnblogs: http://www.cnblogs.com/AdaminXie

    import dlib  # face detection and recognition library
    import cv2   # OpenCV, image processing

    # dlib face detector and landmark predictor
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')

    # create the cv2 camera object
    cap = cv2.VideoCapture(0)

    # cap.set(propId, value) sets a capture property;
    # propId 3 is the frame width (cv2.CAP_PROP_FRAME_WIDTH)
    cap.set(3, 480)

    # counter for saved face crops
    cnt_p = 0

    # directory the crops are saved to (it must already exist)
    path_save = "F:/code/python/P_dlib_face_reco/data/get_from_camera/"

    # font for the on-screen text
    font = cv2.FONT_HERSHEY_SIMPLEX

    # cap.isOpened() returns True/False: whether the capture was initialized
    while cap.isOpened():
        # cap.read() returns two values:
        # a boolean telling whether a frame was read (False at the end of a video)
        # and the frame itself as a 3-D array
        flag, im_rd = cap.read()
        if not flag:
            break

        # wait 1 ms per frame; a delay of 0 would block on a still frame
        kk = cv2.waitKey(1)

        # convert to grayscale (OpenCV frames are in BGR order)
        img_gray = cv2.cvtColor(im_rd, cv2.COLOR_BGR2GRAY)

        # detected faces
        rects = detector(img_gray, 0)

        if len(rects) != 0:
            # faces detected
            for k, d in enumerate(rects):
                # save the face before the rectangle is drawn over it
                if kk == ord('s'):
                    # slicing replaces the per-pixel copy loop;
                    # max(..., 0) keeps the crop inside the frame
                    top, left = max(d.top(), 0), max(d.left(), 0)
                    im_face = im_rd[top:d.bottom(), left:d.right()].copy()
                    if im_face.size > 0:
                        cnt_p += 1
                        print(path_save + "img_face_" + str(cnt_p) + ".jpg")
                        cv2.imwrite(path_save + "img_face_" + str(cnt_p) + ".jpg", im_face)

                # draw the rectangle around the face
                im_rd = cv2.rectangle(im_rd, (d.left(), d.top()), (d.right(), d.bottom()), (0, 255, 255), 2)

            cv2.putText(im_rd, "faces: " + str(len(rects)), (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)
        else:
            # no face detected
            cv2.putText(im_rd, "no face", (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)

        # on-screen instructions
        im_rd = cv2.putText(im_rd, "s: save face", (20, 400), font, 0.8, (255, 255, 255), 1, cv2.LINE_AA)
        im_rd = cv2.putText(im_rd, "q: quit", (20, 450), font, 0.8, (255, 255, 255), 1, cv2.LINE_AA)

        # press q to quit
        if kk == ord('q'):
            break

        # show the window
        cv2.imshow("camera", im_rd)

    # release the camera
    cap.release()

    # destroy the created windows
    cv2.destroyAllWindows()
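A detection near the border of the frame may yield a rectangle that lies partly outside the image, which makes a naive crop fail or come out wrong. The following is a minimal sketch of clipping the rectangle before cropping. The helper names are hypothetical, and a list of rows stands in for the camera frame so the example runs without OpenCV.

```python
def clamp_rect(left, top, right, bottom, frame_width, frame_height):
    """Clip a rectangle to the frame; return None if nothing is left."""
    left, top = max(left, 0), max(top, 0)
    right, bottom = min(right, frame_width), min(bottom, frame_height)
    if right <= left or bottom <= top:
        return None
    return left, top, right, bottom

def crop(frame, rect):
    """Crop a frame stored as a list of rows (each row a list of pixels)."""
    left, top, right, bottom = rect
    return [row[left:right] for row in frame[top:bottom]]

# 4x4 "frame" whose pixel values encode their position: row * 10 + column
frame = [[r * 10 + c for c in range(4)] for r in range(4)]
rect = clamp_rect(-1, 2, 3, 6, frame_width=4, frame_height=4)
print(rect)               # (0, 2, 3, 4)
print(crop(frame, rect))  # [[20, 21, 22], [30, 31, 32]]
```

With a NumPy frame the same crop is the slice `frame[top:bottom, left:right]`.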

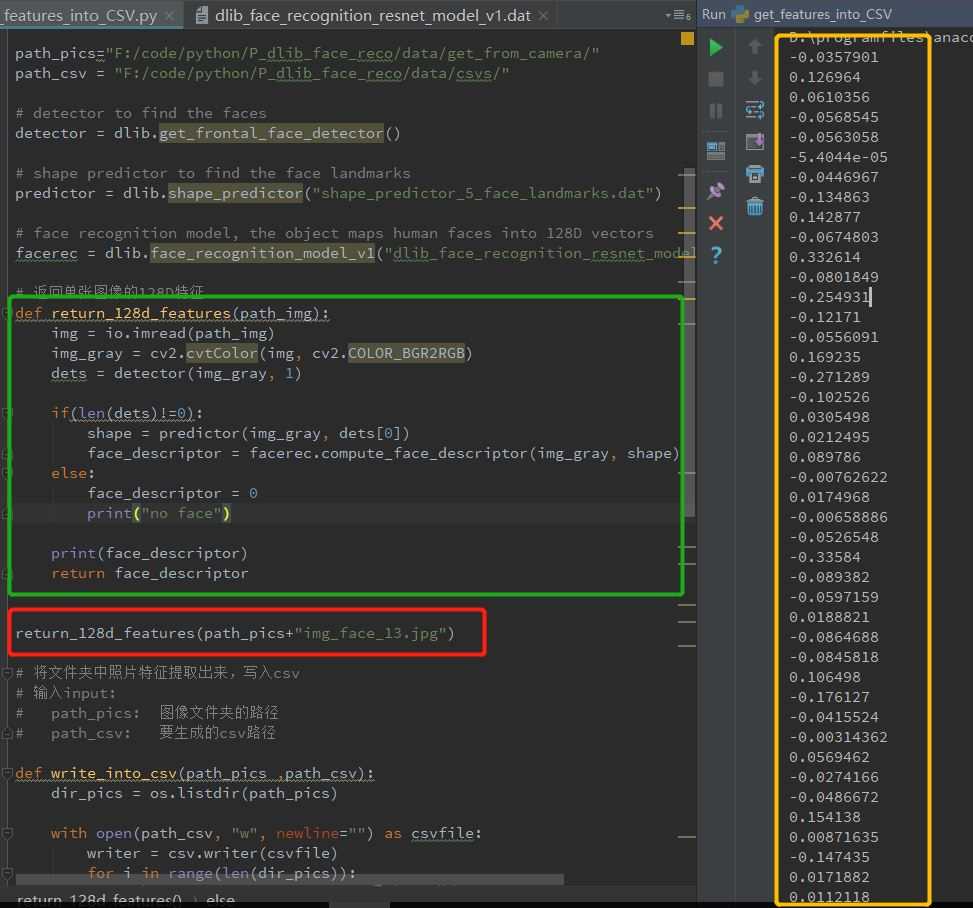

2.2 get_features_into_CSV.py / extracting features into a CSV

We now have a set of face images of XXX and need to extract his facial features from them.

This uses dlib's face recognition model.

    # face recognition model, the object maps human faces into 128D vectors
    facerec = dlib.face_recognition_model_v1("dlib_face_recognition_resnet_model_v1.dat")

    # detector to find the faces
    detector = dlib.get_frontal_face_detector()

    # shape predictor to find the face landmarks
    predictor = dlib.shape_predictor("shape_predictor_5_face_landmarks.dat")

    # read the image (skimage returns it in RGB order)
    img = io.imread(path_img)

    # swap the channels to BGR, the order in which the camera script passes
    # its OpenCV frames to dlib, so both scripts treat images the same way
    img_bgr = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

    dets = detector(img_bgr, 1)
    shape = predictor(img_bgr, dets[0])
    face_descriptor = facerec.compute_face_descriptor(img_bgr, shape)

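Let us look at the face_descriptor output for one image:

The green box marks our function that returns the 128D features.

The red box marks the call that computes them for img_face_13.jpg.

The yellow box shows the output, a 128D vector.

Figure 5: the 128D features computed for a single image

So we can compute the features for all images in path_save in one batch and write them to a CSV (with the write_into_csv function); I named the CSV default_person.csv.

The result is a feature CSV with one row per face and 128 columns.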
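The shape of that CSV can be illustrated without dlib. This is a minimal sketch in which two toy 4-D vectors stand in for the 128-D descriptors and an in-memory buffer stands in for default_person.csv; `write_features` and `read_features` are hypothetical helper names.

```python
import csv
import io

def write_features(rows, csvfile):
    """Write one feature vector per CSV row."""
    writer = csv.writer(csvfile)
    for features in rows:
        writer.writerow(features)

def read_features(csvfile):
    """Read the rows back as lists of floats, skipping empty lines."""
    return [[float(x) for x in row] for row in csv.reader(csvfile) if row]

# Two toy 4-D vectors stand in for 128-D descriptors.
buffer = io.StringIO(newline="")
write_features([[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]], buffer)
buffer.seek(0)
table = read_features(buffer)
print(len(table), len(table[0]))  # 2 4  (faces x feature columns)
```

With a real file, open it with `newline=""` as the script in this section does, so the csv module controls the line endings.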

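The CSV holds the face features of one person. Next, the mean of the 128D features is computed (with the compute_the_mean function).

The output of the run, a 128D feature vector, is the feature of default_person.

This is our built-in / preset face; faces captured by the camera are later compared against this feature vector to perform the recognition.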

[-0.030892765492592986, 0.13333227054068916, 0.054221574805284799, -0.050820438289328626, -0.056331159841073189, 0.0039378538311116004, -0.044465327145237675, -0.13096490031794497, 0.14215188983239627, -0.084465635842398593, 0.34389359700052363, -0.062936659118062566, -0.24372901571424385, -0.13270603316394905, -0.0472818422866495, 0.15475224742763921, -0.24415240554433121, -0.11213862150907516, 0.032288033417180964, 0.023676671577911628, 0.098508275653186594, -0.010117797634417289, 0.0048202000815715448, -0.014808513420192819, -0.060100053486071135, -0.34934839135722112, -0.095795629448012301, -0.050788544706608117, 0.032316677762489567, -0.099673464894294739, -0.080181991975558434, 0.096361607705291952, -0.1823408101734362, -0.045472671817007815, -0.0066827326326778062, 0.047393877549391041, -0.038414973079373964, -0.039067085930391363, 0.15961966781239761, 0.0092458106136243598, -0.16182226570029007, 0.026322136191945327, -0.0039144184832510193, 0.2492692768573761, 0.19180528427425184, 0.022950534855848866, -0.019220497949342979, -0.15331173021542399, 0.047744840089427795, -0.17038608616904208, 0.026140184680882254, 0.19366614363695445, 0.066497623724372762, 0.07038829416820877, -0.0549700813073861, -0.11961311768544347, -0.032121153940495695, 0.083507449611237169, -0.14934051350543373, 0.011458799806668571, 0.10686114273573223, -0.10744074888919529, -0.04377919611962218, -0.11030520381111848, 0.20804878441910996, 0.093076545941202266, -0.11621182490336268, -0.1991656830436305, 0.10751579348978244, -0.11251544991606161, -0.12237925866716787, 0.058218707869711672, -0.15829276019021085, -0.17670038891466042, -0.2718416170070046, 0.034569320955166689, 0.30443575821424784, 0.061833358712886512, -0.19622498672259481, 0.011373612000361868, -0.050225612756453063, -0.036157087079788507, 0.12961127491373764, 0.13962576616751521, -0.0074232793168017737, 0.020964263007044792, -0.11185114399382942, 0.012502493042694894, 0.17834208513561048, 
-0.072658227462517586, -0.041312719401168194, 0.25095899873658228, -0.056628625839948654, 0.10285118379090961, 0.046701753217923012, 0.042323612264896691, 0.0036216247826814651, 0.066720707440062574, -0.16388990533979317, -0.0193739396421925, 0.027835704435251261, -0.086023958105789985, -0.05472404568603164, 0.14802298341926776, -0.10644183582381199, 0.098863413851512108, 0.00061285014778963834, 0.062096107555063146, 0.051960245755157973, -0.099548895108072383, -0.058173993112225285, -0.065454461562790375, 0.14721672511414477, -0.25363486848379435, 0.20384312381869868, 0.16890435312923632, 0.097537552447695477, 0.087824966562421697, 0.091438713434495431, 0.093809676797766431, -0.034379941362299417, -0.085149037210564868, -0.24900743130006289, 0.021165960517368819, 0.076710369830068792, -0.0061752907196549996, 0.028413473285342519, -0.029983982541843465]
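The averaging that produces such a vector is a column-wise mean over all rows of the CSV. Here is a minimal standard-library sketch (Python 3.8 or later for `statistics.fmean`); `mean_features` is a hypothetical name and the 3-D rows are toy data.

```python
from statistics import fmean

def mean_features(rows):
    """Column-wise mean of equally long feature vectors."""
    if not rows:
        raise ValueError("need at least one feature vector")
    # zip(*rows) turns the list of rows into a sequence of columns.
    return [fmean(column) for column in zip(*rows)]

# Two toy 3-D vectors stand in for the rows of the feature CSV.
rows = [[0.0, 1.0, 2.0], [2.0, 3.0, 4.0]]
print(mean_features(rows))  # [1.0, 2.0, 3.0]
```

Averaging several images of the same person smooths out differences in pose and lighting between the individual shots.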

Source code:

    # 2018-5-11
    # By TimeStamp
    # cnblogs: http://www.cnblogs.com/AdaminXie

    # return_128d_features(): get the 128D features of one image
    # write_into_csv(): extract the features of every image in a folder and write them to a CSV
    # compute_the_mean(): read the 128D features from the CSV and compute their mean

    import cv2
    import os
    import dlib
    from skimage import io
    import csv
    import numpy as np
    import pandas as pd

    path_pics = "F:/code/python/P_dlib_face_reco/data/get_from_camera/"
    path_csv = "F:/code/python/P_dlib_face_reco/data/csvs/"

    # detector to find the faces
    detector = dlib.get_frontal_face_detector()

    # shape predictor to find the face landmarks
    predictor = dlib.shape_predictor("shape_predictor_5_face_landmarks.dat")

    # face recognition model, the object maps human faces into 128D vectors
    facerec = dlib.face_recognition_model_v1("dlib_face_recognition_resnet_model_v1.dat")

    # return the 128D features of a single image, or None if no face is found
    def return_128d_features(path_img):
        # skimage returns the image in RGB order
        img = io.imread(path_img)
        # swap the channels to BGR, the order in which the camera script passes
        # its OpenCV frames to dlib, so both scripts treat images the same way
        img_bgr = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
        dets = detector(img_bgr, 1)

        if len(dets) != 0:
            shape = predictor(img_bgr, dets[0])
            face_descriptor = facerec.compute_face_descriptor(img_bgr, shape)
        else:
            face_descriptor = None
            print("no face")

        # print(face_descriptor)
        return face_descriptor

    # return_128d_features(path_pics + "img_face_13.jpg")

    # extract the features of the photos in a folder and write them to a CSV
    # input:
    #   path_pics: path of the image folder
    #   path_csv: path of the CSV to create
    def write_into_csv(path_pics, path_csv):
        with open(path_csv, "w", newline="") as csvfile:
            writer = csv.writer(csvfile)
            for name in os.listdir(path_pics):
                # call return_128d_features() to get the 128D features
                print(os.path.join(path_pics, name))
                features_128d = return_128d_features(os.path.join(path_pics, name))
                # print(features_128d)
                # skip images in which no face was detected
                if features_128d is None:
                    continue
                writer.writerow(features_128d)

    # write_into_csv(path_pics, path_csv + "default_person.csv")

    path_csv_rd = "F:/code/python/P_dlib_face_reco/data/csvs/default_person.csv"

    # read the data from the CSV and compute the mean of the 128D features
    def compute_the_mean(path_csv_rd):
        column_names = ["features_" + str(i + 1) for i in range(128)]
        rd = pd.read_csv(path_csv_rd, names=column_names)

        # mean of each of the 128 feature columns
        feature_mean = [np.mean(np.array(rd[name])) for name in column_names]

        print(feature_mean)
        return feature_mean

    compute_the_mean(path_csv_rd)

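2.3 face_reco_from_camera.py / real-time face recognition and comparison

This script opens the camera and captures faces from it. When a face is detected, its 128D features are extracted and the Euclidean distance to the preset 128D features of default_person is computed. If the distance is small, the two are judged to be the same person; otherwise they are not.

The threshold is set where dist is compared inside the return_euclidean_distance function.

My program uses 0.4; adjust the threshold to your situation or to the results you measure.

Source code: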

    # 2018-5-11
    # By TimeStamp
    # cnblogs: http://www.cnblogs.com/AdaminXie

    import dlib         # face detection and recognition library
    import numpy as np  # array handling
    import cv2          # OpenCV, image processing

    # face recognition model, the object maps human faces into 128D vectors
    facerec = dlib.face_recognition_model_v1("dlib_face_recognition_resnet_model_v1.dat")

    # compute the Euclidean distance between two vectors and compare it with the threshold
    def return_euclidean_distance(feature_1, feature_2):
        feature_1 = np.array(feature_1)
        feature_2 = np.array(feature_2)
        dist = np.sqrt(np.sum(np.square(feature_1 - feature_2)))
        print(dist)

        if dist > 0.4:
            return "diff"
        else:
            return "same"

    features_mean_default_person = [-0.030892765492592986, 0.13333227054068916, 0.054221574805284799, -0.050820438289328626, -0.056331159841073189, 0.0039378538311116004, -0.044465327145237675, -0.13096490031794497, 0.14215188983239627, -0.084465635842398593, 0.34389359700052363, -0.062936659118062566, -0.24372901571424385, -0.13270603316394905, -0.0472818422866495, 0.15475224742763921, -0.24415240554433121, -0.11213862150907516, 0.032288033417180964, 0.023676671577911628, 0.098508275653186594, -0.010117797634417289, 0.0048202000815715448, -0.014808513420192819, -0.060100053486071135, -0.34934839135722112, -0.095795629448012301, -0.050788544706608117, 0.032316677762489567, -0.099673464894294739, -0.080181991975558434, 0.096361607705291952, -0.1823408101734362, -0.045472671817007815, -0.0066827326326778062, 0.047393877549391041, -0.038414973079373964, -0.039067085930391363, 0.15961966781239761, 0.0092458106136243598, -0.16182226570029007, 0.026322136191945327, -0.0039144184832510193, 0.2492692768573761, 0.19180528427425184, 0.022950534855848866, -0.019220497949342979, -0.15331173021542399, 0.047744840089427795, -0.17038608616904208, 0.026140184680882254, 0.19366614363695445, 0.066497623724372762, 0.07038829416820877, -0.0549700813073861, -0.11961311768544347, -0.032121153940495695, 0.083507449611237169, -0.14934051350543373, 0.011458799806668571, 0.10686114273573223, -0.10744074888919529, -0.04377919611962218, -0.11030520381111848, 0.20804878441910996, 0.093076545941202266, -0.11621182490336268, -0.1991656830436305, 0.10751579348978244, -0.11251544991606161, -0.12237925866716787, 0.058218707869711672, -0.15829276019021085, -0.17670038891466042, -0.2718416170070046, 0.034569320955166689, 0.30443575821424784, 0.061833358712886512, -0.19622498672259481, 0.011373612000361868, -0.050225612756453063, -0.036157087079788507, 0.12961127491373764, 0.13962576616751521, -0.0074232793168017737, 0.020964263007044792, -0.11185114399382942, 0.012502493042694894, 0.17834208513561048, 
    -0.072658227462517586, -0.041312719401168194, 0.25095899873658228, -0.056628625839948654, 0.10285118379090961, 0.046701753217923012, 0.042323612264896691, 0.0036216247826814651, 0.066720707440062574, -0.16388990533979317, -0.0193739396421925, 0.027835704435251261, -0.086023958105789985, -0.05472404568603164, 0.14802298341926776, -0.10644183582381199, 0.098863413851512108, 0.00061285014778963834, 0.062096107555063146, 0.051960245755157973, -0.099548895108072383, -0.058173993112225285, -0.065454461562790375, 0.14721672511414477, -0.25363486848379435, 0.20384312381869868, 0.16890435312923632, 0.097537552447695477, 0.087824966562421697, 0.091438713434495431, 0.093809676797766431, -0.034379941362299417, -0.085149037210564868, -0.24900743130006289, 0.021165960517368819, 0.076710369830068792, -0.0061752907196549996, 0.028413473285342519, -0.029983982541843465]

    # dlib face detector and landmark predictor
    # (get_features_into_CSV.py loads the 5-point landmark model instead; using the
    # same landmark model in both scripts may keep the descriptors more comparable)
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')

    # create the cv2 camera object
    cap = cv2.VideoCapture(0)

    # cap.set(propId, value) sets a capture property;
    # propId 3 is the frame width (cv2.CAP_PROP_FRAME_WIDTH)
    cap.set(3, 480)

    # return the 128D features of the first face in img, or None if no face is found
    def get_128d_features(img):
        dets = detector(img, 1)
        if len(dets) != 0:
            shape = predictor(img, dets[0])
            face_descriptor = facerec.compute_face_descriptor(img, shape)
        else:
            face_descriptor = None
        return face_descriptor

    # font for the on-screen text
    font = cv2.FONT_HERSHEY_SIMPLEX

    # cap.isOpened() returns True/False: whether the capture was initialized
    while cap.isOpened():
        # cap.read() returns two values:
        # a boolean telling whether a frame was read (False at the end of a video)
        # and the frame itself as a 3-D array
        flag, im_rd = cap.read()
        if not flag:
            break

        # wait 1 ms per frame; a delay of 0 would block on a still frame
        kk = cv2.waitKey(1)

        # convert to grayscale (OpenCV frames are in BGR order)
        img_gray = cv2.cvtColor(im_rd, cv2.COLOR_BGR2GRAY)

        # detected faces
        rects = detector(img_gray, 0)

        cv2.putText(im_rd, "q: quit", (20, 400), font, 0.8, (0, 255, 255), 1, cv2.LINE_AA)

        if len(rects) != 0:
            # faces detected: extract the features of the captured face
            # and compare them with the built-in features
            features_rd = get_128d_features(im_rd)
            if features_rd is not None:
                compare = return_euclidean_distance(features_rd, features_mean_default_person)
                im_rd = cv2.putText(im_rd, compare.replace("same", "default_person"), (20, 350), font, 0.8, (0, 255, 255), 1, cv2.LINE_AA)

            # draw a rectangle around every face
            for k, d in enumerate(rects):
                im_rd = cv2.rectangle(im_rd, (d.left(), d.top()), (d.right(), d.bottom()), (0, 255, 255), 2)

            cv2.putText(im_rd, "faces: " + str(len(rects)), (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)
        else:
            # no face detected
            cv2.putText(im_rd, "no face", (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)

        # press q to quit
        if kk == ord('q'):
            break

        # show the window
        cv2.imshow("camera", im_rd)

    # release the camera
    cap.release()

    # destroy the created windows
    cv2.destroyAllWindows()

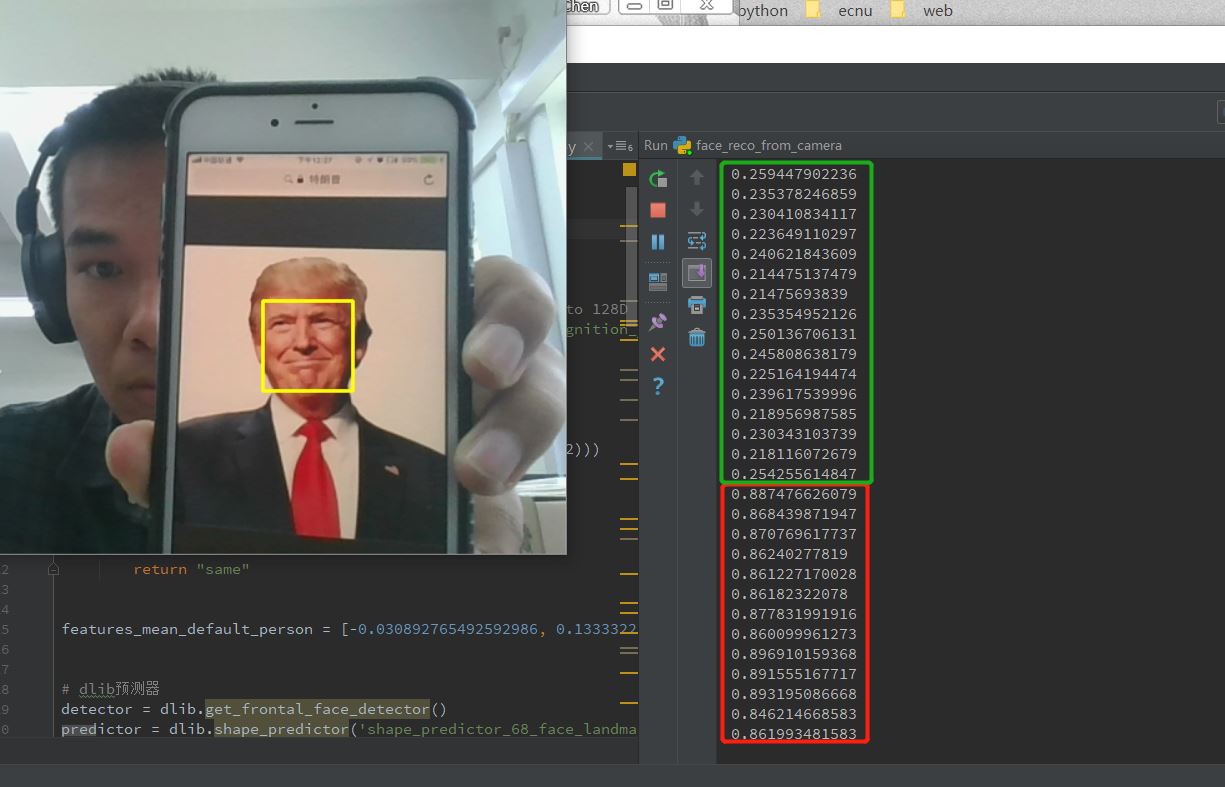

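Real-time output:

Figure 6: Euclidean distances printed in real time

The real-time output makes the difference clear.

Green part of the output: when the face is mine (the default_person whose features were extracted earlier), the computed Euclidean distance stays around 0.2.

Red part of the output: when another picture is shown, for example one of Trump, the Euclidean distance reaches 0.8, so that face can be judged not to be our preset face.

The comparison threshold mentioned earlier can be chosen from these values; this project uses 0.4.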
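One simple way to derive a threshold from such measurements is to take the midpoint between the largest same-person distance and the smallest different-person distance. This is only a sketch of that idea, not how the 0.4 in this post was chosen; the function name and the sample distances are made up around the roughly 0.2 and 0.8 values reported above.

```python
def midpoint_threshold(same_distances, diff_distances):
    """Midpoint between the worst same-person and the best different-person distance."""
    worst_same = max(same_distances)
    best_diff = min(diff_distances)
    if worst_same >= best_diff:
        raise ValueError("distance ranges overlap; no clean threshold exists")
    return (worst_same + best_diff) / 2

# Illustrative measurements, not taken from the post's actual output.
same = [0.18, 0.20, 0.22]
diff = [0.78, 0.80, 0.83]
print(round(midpoint_threshold(same, diff), 2))  # 0.5
```

A threshold below the midpoint, such as 0.4, makes false matches less likely at the cost of rejecting the preset person more often when the image is poor.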

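3. Summary

This post continues the earlier one on camera face detection, though it took me until now to update it, and it is fairly rough. Adapt it to your own needs and use cases; if you have questions, leave a comment or mail me directly.

The core is extracting the face features and comparing them with the preset face's features by Euclidean distance.

Because the comparison runs on live camera faces, the features of the camera face and then the Euclidean distance have to be computed for every frame, so the computational load is fairly high and the camera video may stutter.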
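One common way to lighten that load is to run the expensive descriptor extraction only on every n-th frame and reuse the last result in between. The following is a sketch of that idea and is not part of the original project; `EveryNth` and `fake_descriptor` are hypothetical names, and a counter stands in for camera frames.

```python
class EveryNth:
    """Recompute an expensive result only on every n-th call and reuse it in between."""

    def __init__(self, compute, n):
        if n < 1:
            raise ValueError("n must be at least 1")
        self.compute = compute
        self.n = n
        self.calls = 0
        self.cached = None

    def __call__(self, frame):
        if self.calls % self.n == 0:
            self.cached = self.compute(frame)
        self.calls += 1
        return self.cached

# Stand-in for the descriptor extraction; it records which frames it ran on.
processed = []

def fake_descriptor(frame):
    processed.append(frame)
    return "descriptor of frame %d" % frame

recognise = EveryNth(fake_descriptor, n=3)
results = [recognise(frame) for frame in range(7)]
print(processed)   # [0, 3, 6]
print(results[4])  # descriptor of frame 3
```

The label on screen then lags by at most n - 1 frames, which is usually hard to notice for small n.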

# The code is on my GitHub; a star is welcome if it helps you: https://github.com/coneypo/Dlib_face_recognition_from_camera
