python Scrapy網路爬蟲實戰(存Json檔案以及存到mysql資料庫)

阿新 • • 發佈:2018-11-15

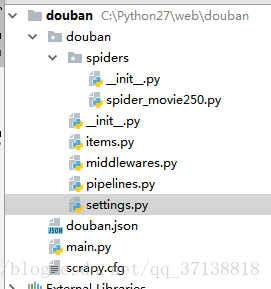

1-Scrapy建立新工程

在開始爬取之前,您必須建立一個新的 Scrapy 專案。 進入您打算儲存程式碼的目錄中【工作目錄】,執行下列命令,如下是我建立的一個爬取豆瓣的工程douban【儲存路徑為:C:\python27\web】:

命令: scrapy startproject douban

2-目錄 如下

3--items的編寫

首先,檔案中有items.py,這個裡面這要是用來封裝爬蟲所要爬的欄位,如爬豆瓣電影,需要爬電影的ID,url,電影名稱等。

# -*- coding:utf-8 -*- import scrapy class MovieItem(scrapy.Item): rank = scrapy.Field() title = scrapy.Field() link = scrapy.Field() rate = scrapy.Field() quote = scrapy.Field()

4-spider_movie250.py 的編寫

# -*- coding:utf-8 -*- import scrapy from douban.items import MovieItem class Movie250Spider(scrapy.Spider): # 定義爬蟲的名稱,主要main方法使用 name = 'doubanmovie' allowed_domains = ["douban.com"] start_urls = [ "http://movie.douban.com/top250/" ] # 解析資料 def parse(self, response): items = [] for info in response.xpath('//div[@class="item"]'): item = MovieItem() item['rank'] = info.xpath('div[@class="pic"]/em/text()').extract() item['title'] = info.xpath('div[@class="pic"]/a/img/@alt').extract() item['link'] = info.xpath('div[@class="pic"]/a/@href').extract() item['rate'] = info.xpath('div[@class="info"]/div[@class="bd"]/div[@class="star"]/span/text()').extract() item['quote'] = info.xpath('div[@class="info"]/div[@class="bd"]/p[@class="quote"]/span/text()').extract() items.append(item) yield item # 翻頁 next_page = response.xpath('//span[@class="next"]/a/@href') if next_page: url = response.urljoin(next_page[0].extract()) #爬每一頁 yield scrapy.Request(url, self.parse)

5-編寫pipelines

# -*- coding: utf-8 -*- import json import codecs #以Json的形式儲存 class JsonWithEncodingCnblogsPipeline(object): def __init__(self): self.file = codecs.open('douban.json', 'w', encoding='utf-8') def process_item(self, item, spider): line = json.dumps(dict(item), ensure_ascii=False) + "\n" self.file.write(line) return item def spider_closed(self, spider): self.file.close() #將資料儲存到mysql資料庫 from twisted.enterprise import adbapi import MySQLdb import MySQLdb.cursors class MySQLStorePipeline(object): #資料庫引數 def __init__(self): dbargs = dict( host = '127.0.0.1', db = '資料庫名', user = 'root', passwd = 'root', cursorclass = MySQLdb.cursors.DictCursor, charset = 'utf8', use_unicode = True ) self.dbpool = adbapi.ConnectionPool('MySQLdb',**dbargs) ''' The default pipeline invoke function ''' def process_item(self, item,spider): res = self.dbpool.runInteraction(self.insert_into_table,item) return item #插入的表,此表需要事先建好 def insert_into_table(self,conn,item): conn.execute('insert into douban(rank,title,rate,qute,link) values(%s,%s,%s,%s,%s)', ( item['rank'][0], item['title'][0], item['rate'][0], item['quote'][0], item['link'][0]) )

6-settings的編寫

USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1) XXXXXXX) Chrome/70.0.3538.67 Safari/537.36'

# start MySQL database configure setting

MYSQL_HOST = '127.0.0.1'

MYSQL_DBNAME = '資料庫名'

MYSQL_USER = 'root'

MYSQL_PASSWD = 'root'

# end of MySQL database configure setting

ITEM_PIPELINES = {

'douban.pipelines.JsonWithEncodingCnblogsPipeline': 300,

'douban.pipelines.MySQLStorePipeline': 300,

}7-main 的編寫

from scrapy import cmdline

cmdline.execute("scrapy crawl doubanmovie".split())