ORB-SLAM2原始碼解讀(1):系統入口System

阿新 • • 發佈:2018-11-15

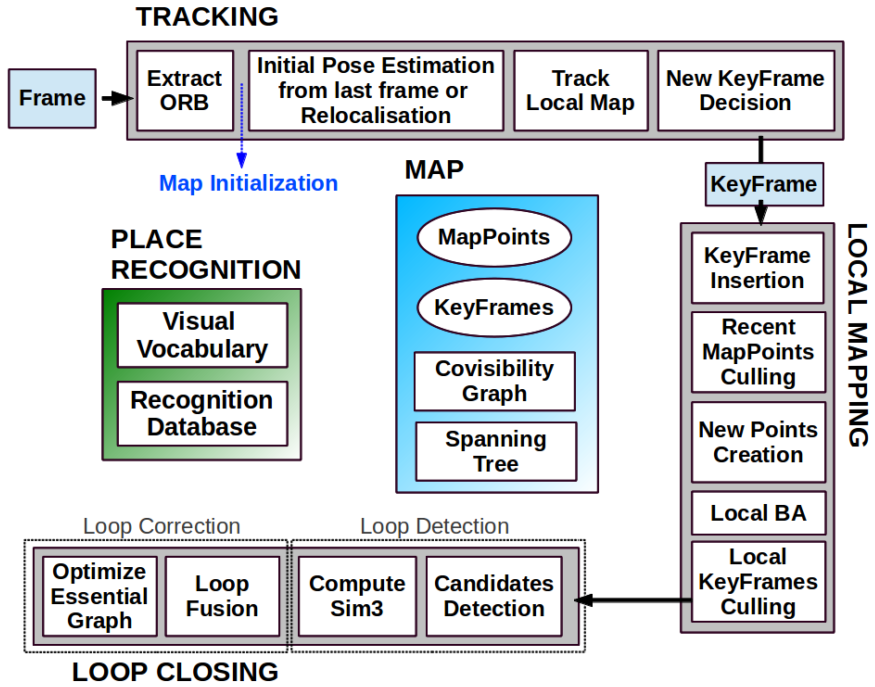

先要拿大名鼎鼎的ORB-SLAM系統框圖鎮樓,看著這張圖能夠完美的串起來整個流程。

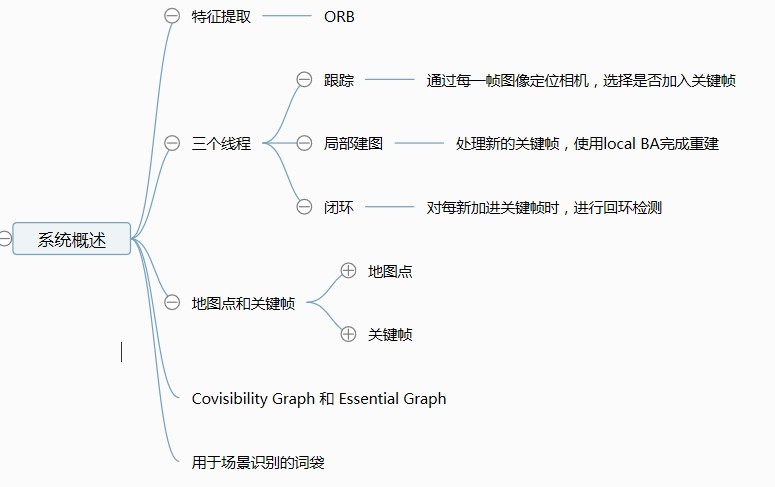

ORB-SLAM分三個執行緒,分別是Tracking、LocalMapping和LoopClosing。

(1)Tracking:在主執行緒上,輸入視訊流,輸出相機位姿並跟蹤區域性地圖。提取ORB特徵子,根據上一幀進行位姿估計或全域性重定位,然後跟蹤區域性地圖優化位姿,確定新的關鍵幀。

(2)LocalMapping:維護優化區域性地圖。輸入關鍵幀,輸出修正的關鍵幀和地圖點。插入關鍵幀,生成新的地圖點,使用BA優化,去除冗餘的關鍵幀

(3)LoopClosing:閉環檢測和閉環矯正。BOW檢測,再用Sim3計算相似變換;閉環融合與優化Essential Graph的圖優化。

#ifndef SYSTEM_H #define SYSTEM_H #include<string> #include<thread> #include<opencv2/core/core.hpp> #include "Tracking.h" #include "FrameDrawer.h" #include "MapDrawer.h" #include "Map.h" #include "LocalMapping.h" #include "LoopClosing.h" #include "KeyFrameDatabase.h" #include "ORBVocabulary.h" #include "Viewer.h" namespace ORB_SLAM2 { class Viewer; class FrameDrawer; class Map; class Tracking; class LocalMapping; class LoopClosing; class System { public: // 三種感測器選擇 enum eSensor{ MONOCULAR=0, STEREO=1, RGBD=2 }; //成員函式 public: // 宣告 System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer = true); // Tracking函式:輸出相機位姿 cv::Mat TrackStereo(const cv::Mat &imLeft, const cv::Mat &imRight, const double ×tamp); cv::Mat TrackRGBD(const cv::Mat &im, const cv::Mat &depthmap, const double ×tamp); cv::Mat TrackMonocular(const cv::Mat &im, const double ×tamp); // 啟用定位模組;停止Local Mapping,只相機追蹤??? void ActivateLocalizationMode(); // 失效定位模組;恢復Local Mapping,再次執行SLAM??? void DeactivateLocalizationMode(); // 清空地圖,重啟系統 void Reset(); // 儲存軌跡之前執行 void Shutdown(); // 儲存相機軌跡 Only stereo and RGB-D. // Call first Shutdown() // See format details at: http://vision.in.tum.de/data/datasets/rgbd-dataset void SaveTrajectoryTUM(const string &filename); // See format details at: http://www.cvlibs.net/datasets/kitti/eval_odometry.php void SaveTrajectoryKITTI(const string &filename); // 儲存關鍵幀位姿 // Call first Shutdown() // See format details at: http://vision.in.tum.de/data/datasets/rgbd-dataset void SaveKeyFrameTrajectoryTUM(const string &filename); //成員變數 private: // Input sensor eSensor mSensor; // ORB詞彙表用於場景識別和特徵匹配 ORBVocabulary* mpVocabulary; // 關鍵幀資料庫用於位置識別 (重定位和迴環檢測). KeyFrameDatabase* mpKeyFrameDatabase; // 儲存關鍵幀和地圖特徵子 Map* mpMap; // Tracker. 接受幀計算相機位姿 // 決定何時插入新的關鍵幀,建立新的地圖特徵子 // 重定位 Tracking* mpTracker; // Local Mapper.管理本地地圖,執行BA LocalMapping* mpLocalMapper; // Loop Closer. 搜尋每個新的關鍵幀的迴圈 LoopClosing* mpLoopCloser; // Pangolin.繪製地圖和當前相機位姿 Viewer* mpViewer; // 畫圖用的 FrameDrawer* mpFrameDrawer; MapDrawer* mpMapDrawer; // 3個執行緒: Local Mapping, Loop Closing, Viewer. // Tracking執行緒在System主程式執行緒中 std::thread* mptLocalMapping; std::thread* mptLoopClosing; std::thread* mptViewer; // 重置Flag??? std::mutex mMutexReset; bool mbReset; //更改模型flags std::mutex mMutexMode; bool mbActivateLocalizationMode; bool mbDeactivateLocalizationMode; }; }// namespace ORB_SLAM #endif // SYSTEM_H

#include "System.h" #include "Converter.h" #include <thread> #include <pangolin/pangolin.h> #include <iostream> // std::cout, std::fixed #include <iomanip> // std::setprecision bool has_suffix(const std::string &str, const std::string &suffix) { std::size_t index = str.find(suffix, str.size() - suffix.size()); return (index != std::string::npos); } namespace ORB_SLAM2 { //列表初始化建構函式:詞袋檔案、引數檔案、感測器型別、是否用Viewer System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer):mSensor(sensor),mbReset(false),mbActivateLocalizationMode(false), mbDeactivateLocalizationMode(false) { // Output welcome message cout << endl << "ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl << "This program comes with ABSOLUTELY NO WARRANTY;" << endl << "This is free software, and you are welcome to redistribute it" << endl << "under certain conditions. See LICENSE.txt." << endl << endl; cout << "Input sensor was set to: "; if(mSensor==MONOCULAR) cout << "Monocular" << endl; else if(mSensor==STEREO) cout << "Stereo" << endl; else if(mSensor==RGBD) cout << "RGB-D" << endl; //1.讀取引數檔案,內參、幀率、基線、深度, XXX.yaml cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ); if(!fsSettings.isOpened()) { cerr << "Failed to open settings file at: " << strSettingsFile << endl; exit(-1); } //2.下載ORB詞袋 .txt cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl; mpVocabulary = new ORBVocabulary();//這個詞袋類在哪裡定義的??? bool bVocLoad = false; // 基於副檔名載入 if (has_suffix(strVocFile, ".txt")) bVocLoad = mpVocabulary->loadFromTextFile(strVocFile); else if(has_suffix(strVocFile, ".bin")) bVocLoad = mpVocabulary->loadFromBinaryFile(strVocFile); else bVocLoad = false; if(!bVocLoad) { cerr << "Wrong path to vocabulary. " << endl; cerr << "Failed to open at: " << strVocFile << endl; exit(-1); } cout << "Vocabulary loaded!" << endl << endl; //3.建立關鍵幀資料庫 mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary); //4.建立地圖 mpMap = new Map(); //Create Drawers. mpFrameDrawer = new FrameDrawer(mpMap); mpMapDrawer = new MapDrawer(mpMap, strSettingsFile); //5.1初始化 Tracking //(it will live in the main thread of execution, the one that called this constructor) mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer, mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor); //5.2初始化併發布 Local Mapping 執行緒 mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR); mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper); //5.3初始化併發布 Loop Closing 執行緒 mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR); mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser); //5.4初始化併發布 Viewer 執行緒 mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile); if(bUseViewer) mptViewer = new thread(&Viewer::Run, mpViewer); mpTracker->SetViewer(mpViewer); //5.5執行緒之間相互設定指標??? mpTracker->SetLocalMapper(mpLocalMapper); mpTracker->SetLoopClosing(mpLoopCloser); mpLocalMapper->SetTracker(mpTracker); mpLocalMapper->SetLoopCloser(mpLoopCloser); mpLoopCloser->SetTracker(mpTracker); mpLoopCloser->SetLocalMapper(mpLocalMapper); } cv::Mat System::TrackStereo(const cv::Mat &imLeft, const cv::Mat &imRight, const double ×tamp) { if(mSensor!=STEREO) { cerr << "ERROR: you called TrackStereo but input sensor was not set to STEREO." << endl; exit(-1); } // Check mode change { unique_lock<mutex> lock(mMutexMode); if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位時,只跟蹤 mbActivateLocalizationMode = false; } if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false; } } // Check reset { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } return mpTracker->GrabImageStereo(imLeft,imRight,timestamp); } cv::Mat System::TrackRGBD(const cv::Mat &im, const cv::Mat &depthmap, const double ×tamp) { if(mSensor!=RGBD) { cerr << "ERROR: you called TrackRGBD but input sensor was not set to RGBD." << endl; exit(-1); } // Check mode change { unique_lock<mutex> lock(mMutexMode); if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位時,只跟蹤 mbActivateLocalizationMode = false; } if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false; } } // Check reset { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } return mpTracker->GrabImageRGBD(im,depthmap,timestamp); } //定義跟蹤單目函式 cv::Mat System::TrackMonocular(const cv::Mat &im, const double ×tamp) { if(mSensor!=MONOCULAR) { cerr << "ERROR: you called TrackMonocular but input sensor was not set to Monocular." << endl; exit(-1); } // 檢查模型 { unique_lock<mutex> lock(mMutexMode); //如果啟用定位模組,休眠1000ms直到停止建圖 if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位時,只跟蹤 mbActivateLocalizationMode = false;// 防止重複執行 } //如果定位模組失效,重啟建圖 if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false;// 防止重複執行 } } // 檢查重啟 { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } //這個Grab函式哪裡來的??? return mpTracker->GrabImageMonocular(im,timestamp); } //啟用定位模組 void System::ActivateLocalizationMode() { unique_lock<mutex> lock(mMutexMode); mbActivateLocalizationMode = true; } //失效定位模組 void System::DeactivateLocalizationMode() { //加鎖 unique_lock<mutex> lock(mMutexMode); mbDeactivateLocalizationMode = true; } //重置系統 void System::Reset() { unique_lock<mutex> lock(mMutexReset); mbReset = true; } //關閉整個系統 void System::Shutdown() { mpLocalMapper->RequestFinish(); mpLoopCloser->RequestFinish(); if(mpViewer) { mpViewer->RequestFinish(); while(!mpViewer->isFinished()) std::this_thread::sleep_for(std::chrono::milliseconds(5)); } // Wait until all thread have effectively stopped while(!mpLocalMapper->isFinished() || !mpLoopCloser->isFinished() || mpLoopCloser->isRunningGBA()) { //usleep(5000); std::this_thread::sleep_for(std::chrono::milliseconds(5)); } if(mpViewer) pangolin::BindToContext("ORB-SLAM2: Map Viewer"); } //儲存軌跡 void System::SaveTrajectoryTUM(const string &filename) { cout << endl << "Saving camera trajectory to " << filename << " ..." << endl; if(mSensor==MONOCULAR) { cerr << "ERROR: SaveTrajectoryTUM cannot be used for monocular." << endl; return; } //得到所有關鍵幀,重新排序第一幀位於原點 vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; // 先獲得關鍵幀,當前幀相對於關鍵幀 //每一幀都有引用關鍵幀、時間戳和標誌 list<ORB_SLAM2::KeyFrame*>::iterator lRit = mpTracker->mlpReferences.begin(); list<double>::iterator lT = mpTracker->mlFrameTimes.begin(); list<bool>::iterator lbL = mpTracker->mlbLost.begin(); for(list<cv::Mat>::iterator lit=mpTracker->mlRelativeFramePoses.begin(), lend=mpTracker->mlRelativeFramePoses.end();lit!=lend;lit++, lRit++, lT++, lbL++) { if(*lbL) continue; KeyFrame* pKF = *lRit; cv::Mat Trw = cv::Mat::eye(4,4,CV_32F); // 如果參考關鍵幀被剔除,遍歷生成樹獲得合適關鍵幀 while(pKF->isBad()) { Trw = Trw*pKF->mTcp; pKF = pKF->GetParent(); } Trw = Trw*pKF->GetPose()*Two; cv::Mat Tcw = (*lit)*Trw; cv::Mat Rwc = Tcw.rowRange(0,3).colRange(0,3).t(); cv::Mat twc = -Rwc*Tcw.rowRange(0,3).col(3); vector<float> q = Converter::toQuaternion(Rwc); f << setprecision(6) << *lT << " " << setprecision(9) << twc.at<float>(0) << " " << twc.at<float>(1) << " " << twc.at<float>(2) << " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } void System::SaveKeyFrameTrajectoryTUM(const string &filename) { cout << endl << "Saving keyframe trajectory to " << filename << " ..." << endl; vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); // Transform all keyframes so that the first keyframe is at the origin. // After a loop closure the first keyframe might not be at the origin. //cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; for(size_t i=0; i<vpKFs.size(); i++) { KeyFrame* pKF = vpKFs[i]; // pKF->SetPose(pKF->GetPose()*Two); if(pKF->isBad()) continue; cv::Mat R = pKF->GetRotation().t(); vector<float> q = Converter::toQuaternion(R); cv::Mat t = pKF->GetCameraCenter(); f << setprecision(6) << pKF->mTimeStamp << setprecision(7) << " " << t.at<float>(0) << " " << t.at<float>(1) << " " << t.at<float>(2) << " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } void System::SaveTrajectoryKITTI(const string &filename) { cout << endl << "Saving camera trajectory to " << filename << " ..." << endl; if(mSensor==MONOCULAR) { cerr << "ERROR: SaveTrajectoryKITTI cannot be used for monocular." << endl; return; } vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); // Transform all keyframes so that the first keyframe is at the origin. // After a loop closure the first keyframe might not be at the origin. cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; // Frame pose is stored relative to its reference keyframe (which is optimized by BA and pose graph). // We need to get first the keyframe pose and then concatenate the relative transformation. // Frames not localized (tracking failure) are not saved. // For each frame we have a reference keyframe (lRit), the timestamp (lT) and a flag // which is true when tracking failed (lbL). list<ORB_SLAM2::KeyFrame*>::iterator lRit = mpTracker->mlpReferences.begin(); list<double>::iterator lT = mpTracker->mlFrameTimes.begin(); for(list<cv::Mat>::iterator lit=mpTracker->mlRelativeFramePoses.begin(), lend=mpTracker->mlRelativeFramePoses.end();lit!=lend;lit++, lRit++, lT++) { ORB_SLAM2::KeyFrame* pKF = *lRit; cv::Mat Trw = cv::Mat::eye(4,4,CV_32F); while(pKF->isBad()) { // cout << "bad parent" << endl; Trw = Trw*pKF->mTcp; pKF = pKF->GetParent(); } Trw = Trw*pKF->GetPose()*Two; cv::Mat Tcw = (*lit)*Trw; cv::Mat Rwc = Tcw.rowRange(0,3).colRange(0,3).t(); cv::Mat twc = -Rwc*Tcw.rowRange(0,3).col(3); f << setprecision(9) << Rwc.at<float>(0,0) << " " << Rwc.at<float>(0,1) << " " << Rwc.at<float>(0,2) << " " << twc.at<float>(0) << " " << Rwc.at<float>(1,0) << " " << Rwc.at<float>(1,1) << " " << Rwc.at<float>(1,2) << " " << twc.at<float>(1) << " " << Rwc.at<float>(2,0) << " " << Rwc.at<float>(2,1) << " " << Rwc.at<float>(2,2) << " " << twc.at<float>(2) << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } } //namespace ORB_SLAM

總結:

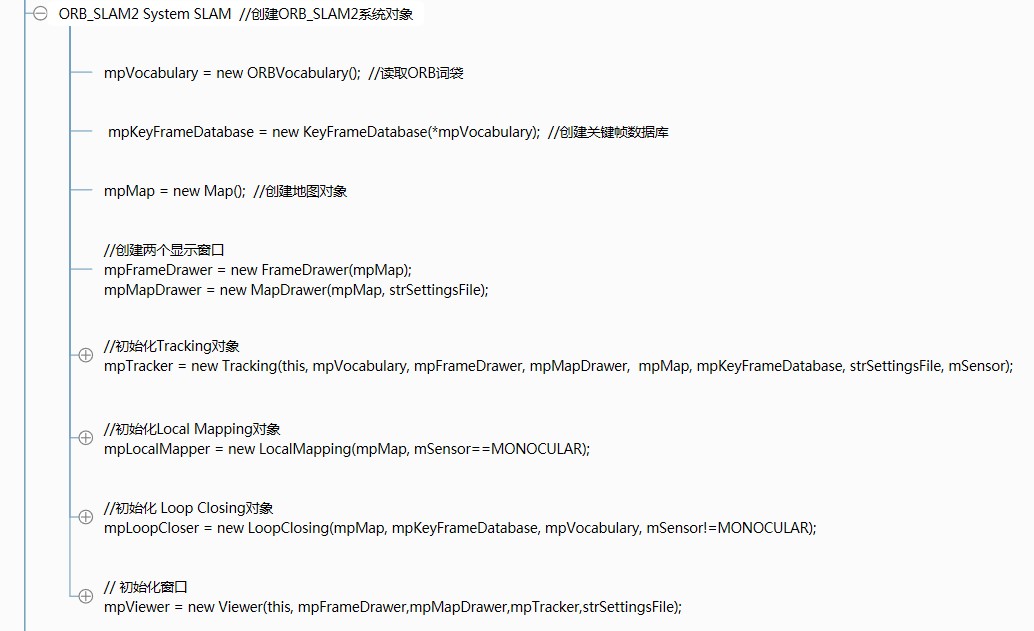

我們可以看到在這個物件的例項化過程中,我們建立了以下物件:

(1)建立了ORB詞袋的物件

(2)建立了關鍵幀的資料庫

(3)建立地圖物件

(4)建立兩個顯示視窗

(5)初始化Tracking物件

(6)初始化Local Mapping物件,並開啟執行緒執行

(7)初始化Loop Closing物件,並開啟執行緒執行

(8)初始化視窗,開啟執行緒顯示影象和地圖點