Uploading and Querying Files in HDFS with the Java API

阿新 • Published: 2018-11-16

Setting up a Hadoop 2.7.3 cluster on Ubuntu: https://blog.csdn.net/qq_38038143/article/details/83050840

Configuring an Eclipse + Hadoop environment on Ubuntu: https://blog.csdn.net/qq_38038143/article/details/83412196

Environment: a Hadoop cluster with 4 DataNodes.

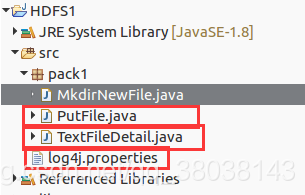

1. Create the project

Note: the steps below are performed in Eclipse on Ubuntu.

Project layout:

PutFile.java: uploads a local file to HDFS

Code:

```java
package pack1;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * @author: Gu Yongtao
 * @Description: HDFS
 * @date: 2018-10-24
 * FileName: PutFile.java
 */
public class PutFile {
    public static void main(String[] args) throws IOException, URISyntaxException {
        Configuration conf = new Configuration();
        URI uri = new URI("hdfs://master:9000");
        FileSystem fs = FileSystem.get(uri, conf);

        // Local source path
        Path src = new Path("/home/hadoop/file");
        // Destination in HDFS
        Path dst = new Path("/");
        fs.copyFromLocalFile(src, dst);
        // fs.getUri() reports the file system actually connected to,
        // even when no core-site.xml is on the classpath
        System.out.println("Upload to " + fs.getUri());

        // Equivalent to: hdfs dfs -ls /
        FileStatus[] files = fs.listStatus(dst);
        for (FileStatus file : files) {
            System.out.println(file.getPath());
        }
    }
}
```
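The `hdfs://master:9000` string passed to `FileSystem.get` is an ordinary URI: the scheme selects the file system implementation, and the host and port identify the NameNode. As a minimal sketch that uses only `java.net.URI` and needs no cluster, the parts can be inspected as follows. The class name `HdfsUriParts` and the method `describe` are illustrative and not part of the original project.

```java
import java.net.URI;

final class HdfsUriParts {
    /** Returns "scheme host port" for a URI such as hdfs://master:9000. */
    static String describe(String text) {
        URI uri = URI.create(text);
        return uri.getScheme() + " " + uri.getHost() + " " + uri.getPort();
    }

    public static void main(String[] args) {
        // Same address as in PutFile.java
        System.out.println(describe("hdfs://master:9000"));
    }
}
```

If the host or port printed here does not match the cluster's NameNode address, `FileSystem.get` will not reach the intended cluster.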

TextFileDetail.java: prints detailed information about a file

Code:

```java
package pack1;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.text.SimpleDateFormat;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * @author: Gu Yongtao
 * @Description:
 * @date: 2018-10-26 19:20:08
 * @Filename: TextFileDetail.java
 */
public class TextFileDetail {
    public static void main(String[] args) throws IOException, URISyntaxException {
        FileSystem fileSystem = FileSystem.get(new URI("hdfs://master:9000"), new Configuration());
        Path fpPath = new Path("/file/english.txt");
        FileStatus fileStatus = fileSystem.getFileStatus(fpPath);

        /*
         * Locate the file's blocks in the HDFS cluster:
         * FileSystem.getFileBlockLocations(FileStatus file, long start, long len)
         * returns the locations of the blocks covering the byte range
         * [start, start + len) of the file; 0 and getLen() cover the whole file.
         */
        BlockLocation[] blockLocations =
                fileSystem.getFileBlockLocations(fileStatus, 0, fileStatus.getLen());

        // Print the hosts that store each block
        for (int i = 0; i < blockLocations.length; i++) {
            String[] hosts = blockLocations[i].getHosts();
            if (hosts.length >= 2) { // the block has replicas
                System.out.println("--------" + "block_" + i + "_location's replications:" + "---------");
                for (int j = 0; j < hosts.length; j++) {
                    System.out.println("replication" + (j + 1) + ": " + hosts[j]);
                }
                System.out.println("------------------------------");
            } else if (hosts.length == 1) { // the block is stored on a single host
                System.out.println("block_" + i + "_location: " + hosts[0]);
            }
        }

        // Date format for the timestamps below
        SimpleDateFormat formatter = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        // Access time, in milliseconds since the epoch
        long accessTime = fileStatus.getAccessTime();
        System.out.println("access: " + formatter.format(accessTime));
        // Modification time, in milliseconds since the epoch
        long modificationTime = fileStatus.getModificationTime();
        System.out.println("modification: " + formatter.format(modificationTime));
        // Block size, in bytes
        long blockSize = fileStatus.getBlockSize();
        System.out.println("blockSize: " + blockSize);
        // File length, in bytes
        long len = fileStatus.getLen();
        System.out.println("length: " + len);
        // Group that owns the file
        String group = fileStatus.getGroup();
        System.out.println("group: " + group);
        // Owner of the file
        String owner = fileStatus.getOwner();
        System.out.println("owner: " + owner);
        // Replication factor of the file
        short replication = fileStatus.getReplication();
        System.out.println("replication: " + replication);
    }
}
```
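`getAccessTime()` and `getModificationTime()` return milliseconds since 1970-01-01 UTC, and `SimpleDateFormat` renders them in the JVM's default time zone, so the same file can show different clock times on machines in different zones. The sketch below, which needs no cluster, pins the time zone explicitly to make the output repeatable. The class name `EpochMillisFormat` and the zone IDs are assumptions chosen for illustration.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

final class EpochMillisFormat {
    /** Formats epoch milliseconds as yyyy-MM-dd HH:mm:ss in the given time zone. */
    static String format(long epochMillis, String zoneId) {
        SimpleDateFormat formatter = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss", Locale.ROOT);
        formatter.setTimeZone(TimeZone.getTimeZone(zoneId));
        return formatter.format(new Date(epochMillis));
    }

    public static void main(String[] args) {
        // The same instant rendered in two zones
        System.out.println(format(0L, "UTC"));       // 1970-01-01 00:00:00
        System.out.println(format(0L, "GMT+08:00")); // 1970-01-01 08:00:00
    }
}
```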

log4j.properties: Hadoop logging configuration (controls warning, debug, and other output)

Code:

```properties
# Configure logging for testing: optionally with log file
# Available levels, from most to least verbose: debug > info > error
#   debug: shows debug, info, and error messages
#   info:  shows info and error messages
#   error: shows error messages only
#log4j.rootLogger=debug,appender1
#log4j.rootLogger=info,appender1
log4j.rootLogger=error,appender1
# Write to the console
log4j.appender.appender1=org.apache.log4j.ConsoleAppender
# Use the TTCCLayout format
log4j.appender.appender1.layout=org.apache.log4j.TTCCLayout
```
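The level rule described in the comments of log4j.properties can be read as a threshold: a message is shown when its level is at least as severe as the root logger's level. The following is a toy model of that rule in plain Java, not log4j's actual implementation. It covers only the three levels mentioned in the file, and the names `LevelThreshold` and `isShown` are hypothetical.

```java
final class LevelThreshold {
    /** Levels in increasing order of severity (only the three used in the config). */
    enum Level { DEBUG, INFO, ERROR }

    /** A message is shown when its level is at least as severe as the root level. */
    static boolean isShown(Level rootLevel, Level messageLevel) {
        return messageLevel.compareTo(rootLevel) >= 0;
    }

    public static void main(String[] args) {
        // Print which message levels pass for each possible root level
        for (Level root : Level.values()) {
            StringBuilder shown = new StringBuilder();
            for (Level message : Level.values()) {
                if (isShown(root, message)) {
                    shown.append(message).append(' ');
                }
            }
            System.out.println("root=" + root + " shows: " + shown.toString().trim());
        }
    }
}
```

With the root level set to `error`, as in the configuration above, only error messages pass, which keeps Hadoop's informational output out of the console.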

2. Run

Check HDFS first: there is no file directory yet.

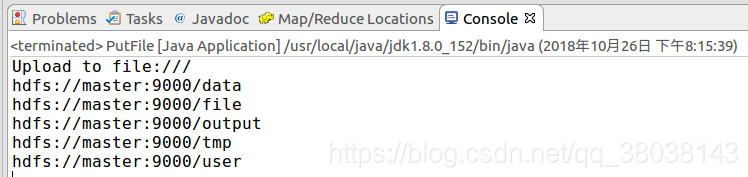

Run PutFile.java:

Result:

Run TextFileDetail.java:

Result:

```text
--------block_0_location's replications:---------
replication1: slave4
replication2: slave6
replication3: slave5
------------------------------
--------block_1_location's replications:---------
replication1: slave6
replication2: slave5
replication3: slave
------------------------------
--------block_2_location's replications:---------
replication1: slave4
replication2: slave5
replication3: slave
------------------------------
--------block_3_location's replications:---------
replication1: slave6
replication2: slave5
replication3: slave4
------------------------------
--------block_4_location's replications:---------
replication1: slave4
replication2: slave
replication3: slave5
------------------------------
access: 2018-10-26 20:15:41
modification: 2018-10-26 20:18:27
blockSize: 134217728
length: 664734060
group: supergroup
owner: hadoop
replication: 3
```
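The five blocks in the output (block_0 through block_4) follow directly from the reported length and block size: 664734060 bytes split into blocks of 134217728 bytes (128 MiB) gives four full blocks and one partial block. A short sketch of that arithmetic, using the two values printed above; the class and method names are illustrative only.

```java
final class BlockLayout {
    /** Number of blocks needed for a file: ceiling of fileLength / blockSize. */
    static long blockCount(long fileLength, long blockSize) {
        return (fileLength + blockSize - 1) / blockSize;
    }

    /** Length in bytes of the final block (a full block if the file divides evenly). */
    static long lastBlockLength(long fileLength, long blockSize) {
        long remainder = fileLength % blockSize;
        return (remainder == 0 && fileLength > 0) ? blockSize : remainder;
    }

    public static void main(String[] args) {
        long length = 664734060L;    // "length" from the output
        long blockSize = 134217728L; // "blockSize" from the output (128 MiB)
        System.out.println("blocks: " + blockCount(length, blockSize));               // blocks: 5
        System.out.println("last block bytes: " + lastBlockLength(length, blockSize)); // last block bytes: 127863148
    }
}
```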

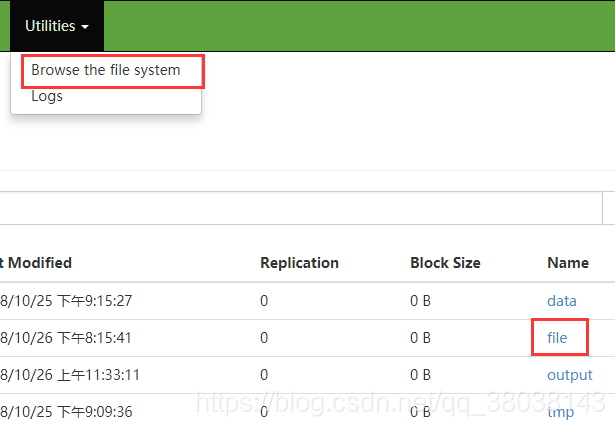

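Verify in the browser:

Address (NameNode web UI, port 50070): http://master:50070

Click english.txt.

The figure above shows that the replicas of Block 0 are stored on slave4, slave5, and slave6.

This matches the output of TextFileDetail.java: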

```text
--------block_0_location's replications:---------
replication1: slave4
replication2: slave6
replication3: slave5
------------------------------
```
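The program lists the Block 0 hosts as slave4, slave6, slave5, while the web page lists them as slave4, slave5, slave6. The two agree because only the set of hosts matters for this check, not the order. A minimal sketch of an order-insensitive comparison, with the host names copied from the two listings; `ReplicaHostCheck` and `sameHosts` are hypothetical names.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

final class ReplicaHostCheck {
    /** Returns true when both arrays contain the same hosts, regardless of order. */
    static boolean sameHosts(String[] first, String[] second) {
        Set<String> a = new HashSet<>(Arrays.asList(first));
        Set<String> b = new HashSet<>(Arrays.asList(second));
        return a.equals(b);
    }

    public static void main(String[] args) {
        String[] fromProgram = {"slave4", "slave6", "slave5"}; // TextFileDetail.java output
        String[] fromWebUi = {"slave4", "slave5", "slave6"};   // as listed in the browser
        System.out.println("same hosts: " + sameHosts(fromProgram, fromWebUi)); // same hosts: true
    }
}
```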