Experiment 4: Four adversarial image crafting algorithms implemented in TensorFlow (README)

Source: GitHub

Four adversarial image crafting algorithms are implemented in TensorFlow. They can be found in the attacks folder. The implementation adheres to the tensor-in, tensor-out principle: each attack returns a TensorFlow operation that can be run through sess.run(...).

Fast Gradient Sign Method (FGSM), basic/iterative

fgsm(model, x, eps=0.01, epochs=1, clip_min=0.0, clip_max=1.0)

Paper 5: Explaining and Harnessing Adversarial Examples: https://arxiv.org/abs/1412.6572/

Paper 4: Adversarial examples in the physical world: https://arxiv.org/abs/1607.02533
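The gradient-sign iteration behind the fgsm signature can be sketched without TensorFlow. The snippet below is a minimal illustration in plain Python, not the repository's code: it assumes a hypothetical logistic-regression model with a hand-written gradient, and the names predict and fgsm_sketch as well as the weights are made up. Because the signature above takes no label, the sketch also assumes that the model's own prediction on the clean input serves as the label.

```python
import math

def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_sketch(w, b, x, eps=0.01, epochs=1, clip_min=0.0, clip_max=1.0):
    """Signed-gradient ascent on the cross-entropy loss of the toy model."""
    # Assumption: use the prediction on the clean input as the label.
    y = 1.0 if predict(w, b, x) >= 0.5 else 0.0
    x_adv = list(x)
    for _ in range(epochs):
        p = predict(w, b, x_adv)
        # For sigmoid + cross-entropy, d(loss)/dx_i = (p - y) * w_i.
        grad = [(p - y) * wi for wi in w]
        # Step by eps in the direction of the gradient sign, then clip.
        x_adv = [
            min(clip_max, max(clip_min, xi + eps * ((g > 0) - (g < 0))))
            for xi, g in zip(x_adv, grad)
        ]
    return x_adv

w, b = [2.0, -3.0, 1.0], 0.1   # hypothetical weights
x = [0.5, 0.2, 0.9]            # hypothetical "image" with three pixels
x_adv = fgsm_sketch(w, b, x, eps=0.1, epochs=3)
print(predict(w, b, x), predict(w, b, x_adv))
```

With epochs=1 this corresponds to the basic variant; larger values of epochs give the iterative variant, with clipping applied after every step.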

Target class Gradient Sign Method (TGSM)

tgsm(model, x, y=None, eps=0.01, epochs=1, clip_min=0.0, clip_max=1.0)

- When y=None, this implements the least-likely class method.
- If y is an integer or a list of integers, the source image is modified towards label y.

Paper 4: Adversarial examples in the physical world: https://arxiv.org/abs/1607.02533
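The two cases for y can be illustrated with a small sketch in plain Python. It assumes a hypothetical linear softmax model (the weight matrix W, and the helpers predict, resolve_target and tgsm_step are all made up for illustration) and handles only the single-integer form of y; the repository's implementation operates on TensorFlow tensors.

```python
import math

def predict(W, x):
    """Class probabilities of a toy linear softmax model (one row of W per class)."""
    logits = [sum(wj * xj for wj, xj in zip(row, x)) for row in W]
    m = max(logits)
    e = [math.exp(v - m) for v in logits]
    s = sum(e)
    return [v / s for v in e]

def resolve_target(probs, y=None):
    """Return y if given, otherwise the least-likely class."""
    if y is None:
        return min(range(len(probs)), key=probs.__getitem__)
    return y

def tgsm_step(W, x, target, eps=0.01, clip_min=0.0, clip_max=1.0):
    """One signed-gradient step that lowers the cross-entropy towards `target`."""
    p = predict(W, x)
    # d(loss)/dx_j = sum_k (p_k - [k == target]) * W[k][j]
    grad = [
        sum((p[k] - float(k == target)) * W[k][j] for k in range(len(W)))
        for j in range(len(x))
    ]
    # Descend (note the minus sign), in contrast to the untargeted FGSM ascent.
    return [
        min(clip_max, max(clip_min, xj - eps * ((g > 0) - (g < 0))))
        for xj, g in zip(x, grad)
    ]

W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]   # hypothetical weights, 3 classes
x = [0.6, 0.3]
p_clean = predict(W, x)
target = resolve_target(p_clean)              # y=None: least-likely class
x_adv = tgsm_step(W, x, target, eps=0.1)
p_adv = predict(W, x_adv)
print(target, p_clean[target], p_adv[target])
```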

Jacobian-based Saliency Map Approach (JSMA)

jsma(model, x, y, epochs=1.0, eps=1., clip_min=0.0, clip_max=1.0, pair=False, min_proba=0.0)

y is the target label and can be an integer or a list. When epochs is a floating-point number in the range [0, 1], it denotes the maximum percentage of distortion allowed, and the number of epochs is deduced automatically. min_proba denotes the minimum confidence of the target image. If pair=True, two pixels are modified at a time.

Paper 21: The Limitations of Deep Learning in Adversarial Settings: https://arxiv.org/abs/1511.07528
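How the iteration count is deduced from a fractional epochs is not spelled out here. One plausible reading, sketched below purely as an assumption, is that the fraction is taken of the number of input features and that each iteration changes one feature (two when pair=True). The helper deduce_epochs is hypothetical and not part of the repository's API.

```python
import math

def deduce_epochs(epochs, n_features, pair=False):
    """Turn `epochs` into an iteration count (assumed behaviour, see above)."""
    if isinstance(epochs, float):
        if not 0.0 <= epochs <= 1.0:
            raise ValueError("a float epochs must lie in [0, 1]")
        # Assumption: each iteration modifies one feature, or two in pair mode.
        per_step = 2 if pair else 1
        return int(math.floor(epochs * n_features / per_step))
    # An integer is taken as the iteration count itself.
    return int(epochs)

# A 28x28 MNIST image has 784 pixels.
print(deduce_epochs(0.1, 784))
print(deduce_epochs(0.1, 784, pair=True))
print(deduce_epochs(50, 784))
```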

Saliency map difference approach (SMDA)

smda(model, x, y, epochs=1.0, eps=1., clip_min=0.0, clip_max=1.0, min_proba=0.0)

The interface is the same as jsma. This algorithm differs from JSMA in how the saliency score is calculated. In JSMA, the saliency score is dt/dx * (-do/dx), while in SMDA it is dt/dx - do/dx, hence the name "saliency map difference".
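The two score formulas can be compared on made-up numbers. The sketch below assumes that dt/dx and do/dx are per-pixel gradients; the text does not define the symbols, and reading t as the target-class output and o as the output of the other classes follows the JSMA paper and is an assumption here. The function names and gradient values are hypothetical.

```python
def jsma_score(dt_dx, do_dx):
    """Per-pixel JSMA score as stated above: dt/dx * (-do/dx)."""
    return [t * (-o) for t, o in zip(dt_dx, do_dx)]

def smda_score(dt_dx, do_dx):
    """Per-pixel SMDA score as stated above: dt/dx - do/dx."""
    return [t - o for t, o in zip(dt_dx, do_dx)]

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

# Made-up gradients for three pixels.
dt_dx = [0.5, 2.0, -0.5]
do_dx = [-0.5, 0.25, 0.25]

jsma = jsma_score(dt_dx, do_dx)
smda = smda_score(dt_dx, do_dx)
print(jsma, argmax(jsma))
print(smda, argmax(smda))
```

On these numbers the two scores rank the pixels differently: the product penalizes the second pixel because both gradients are positive, while the difference still rewards its large dt/dx.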

The model

Notice that every method takes model as its first parameter. The model is a wrapper function and should have the following signature:

    def model(x, logits=False):
        # x is the input to the network, usually a TensorFlow placeholder
        y = your_model(x)
        logits_ = ...  # get the logits before softmax
        if logits:
            return y, logits_
        return y

We need the logits because some algorithms (FGSM and TGSM) rely on them to compute the loss.
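As an illustration of this contract, here is a minimal wrapper in plain Python. It is only a sketch: the fixed linear layer your_model_logits is a hypothetical stand-in for a real network, and an actual wrapper would take and return TensorFlow tensors rather than lists.

```python
import math

def your_model_logits(x):
    """Hypothetical stand-in for a network: a fixed linear layer with 3 classes."""
    W = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def model(x, logits=False):
    """Return class probabilities, plus the pre-softmax logits on request."""
    logits_ = your_model_logits(x)
    y = softmax(logits_)
    if logits:
        return y, logits_
    return y

x = [0.2, 0.8]
y = model(x)
y2, logits_out = model(x, logits=True)
print(y, logits_out)
```

Keeping the flag named logits and the returned value named logits_ avoids returning the boolean flag by mistake.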

How to Use

The implementation of each attack method is self-contained and depends only on TensorFlow. Copy the attack method file to the same folder as your source code and import it.

The implementation should work with any framework that is compatible with TensorFlow. I provide example code for TensorFlow and Keras in the folders tf_example and keras_example, respectively. Each code example is also self-contained.

https://github.com/gongzhitaao/tensorflow-adversarial

Local download path: C:\Users\Josie\AppData\Local\Programs\Python\Python35\Scripts\1\tensorflow-adversarial-master

Example code with the same file name implements the same function. For example, tf_example/ex_00.py and keras_example/ex_00.py implement exactly the same function; the only difference is that the former uses TensorFlow while the latter uses Keras.

https://github.com/gongzhitaao/tensorflow-adversarial/blob/master/tf_example/ex_00.py

https://github.com/gongzhitaao/tensorflow-adversarial/blob/master/keras_example/ex_00.py

Results


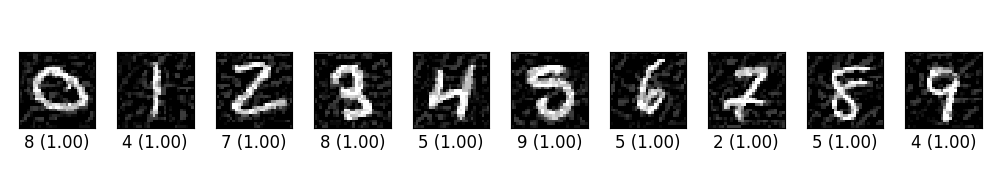

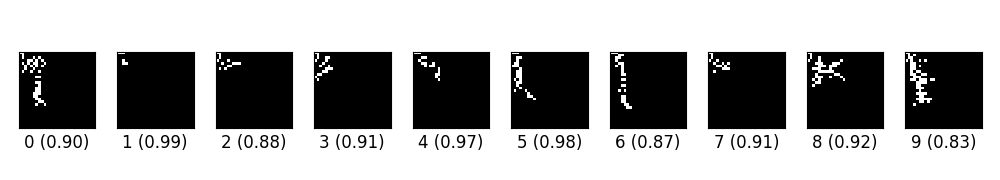

ex_00.py trains a simple CNN on MNIST, then crafts adversarial samples from the test data via FGSM. The original labels of the digits are 0 through 9, and the predicted label with its probability is shown below each digit.

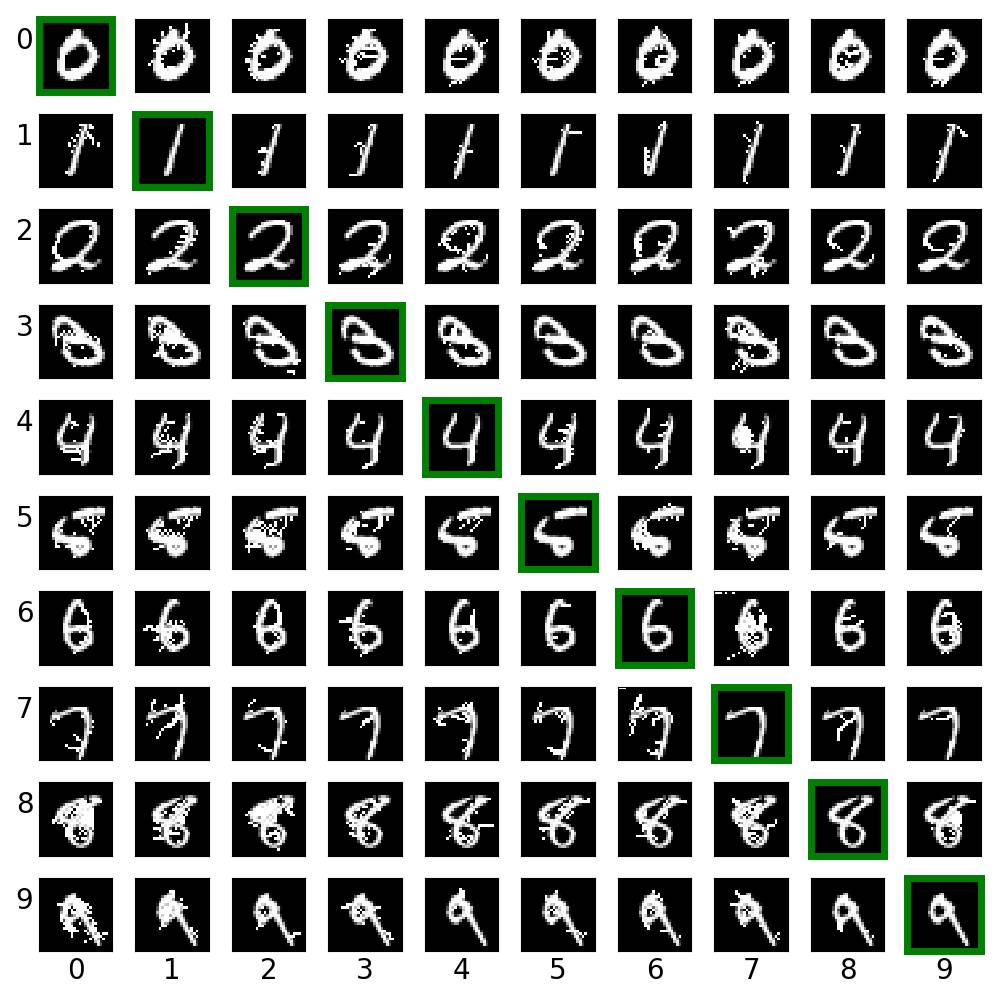

ex_01.py creates cross-label adversarial images via the saliency map approach (JSMA). In each row, the digit in the green box is the clean image; the other images in the same row are created from it.

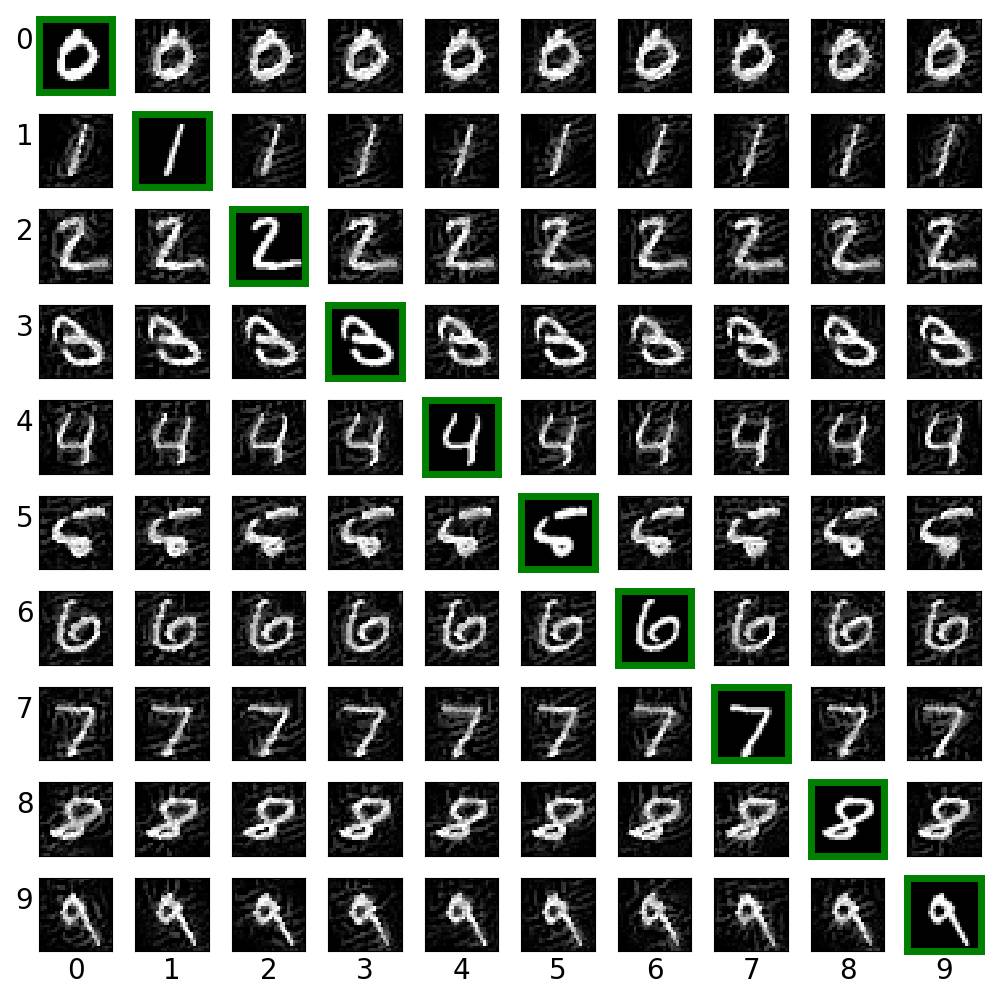

ex_02.py creates cross-label adversarial images via the target class gradient sign method (TGSM).

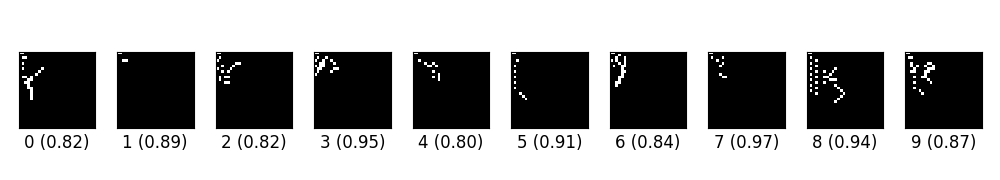

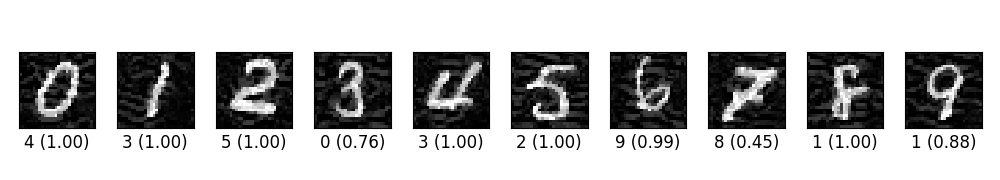

ex_03.py creates digits from blank images via the saliency map difference algorithm (SMDA).

These images look weird, and I have no idea why I could not reproduce the result in the original paper. My guess is that

- either my model is too simple to catch the features of the dataset, or
- there is a flaw in my implementation.

However, various experiments seem to suggest that my implementation works properly. I have to try more examples to figure out what is going wrong here.

ex_04.py creates digits from blank images via the paired saliency map algorithm, i.e., it modifies two pixels at a time (refer to the original paper for the rationale: http://arxiv.org/abs/1511.07528, Paper 21: The Limitations of Deep Learning in Adversarial Settings).

ex_05.py trains a simple CNN on MNIST and then crafts adversarial samples via LLCM (the least-likely class method). The original labels of the digits are 0 through 9, and the predicted label with its probability is shown below each digit.

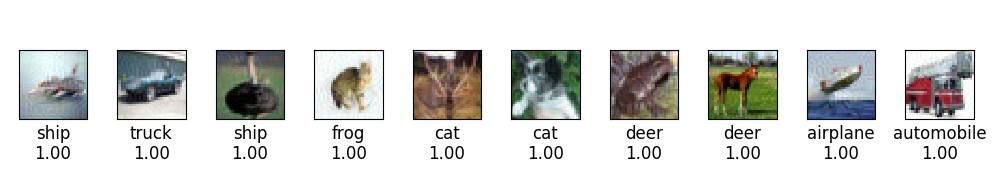

ex_06.py trains a CNN on CIFAR10 and then crafts adversarial images via FGSM.

Related Work


openai/cleverhans

https://github.com/openai/cleverhans
