IoT Architecture Growth Path (27): Docker Practice, Installing ZooKeeper

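0. Preface

I wanted to learn about message queues (MQ). After comparing several open-source middleware options, I chose Kafka as the message queue for future use. I won't go into the use cases for message queues or how Kafka compares with the alternatives here; other blogs cover that. Kafka depends on ZooKeeper, however, so this post first practices building ZooKeeper with Docker.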

1. Installing ZooKeeper

ZooKeeper must be installed before Kafka can be used. Using Docker, which I have been learning recently, I build a ZooKeeper image for later use.

    FROM openjdk:8-jdk-alpine

    # zkServer.sh is a bash script, so bash has to be installed on Alpine
    RUN apk add --no-cache bash && rm -rf /var/cache/apk/*
    # Download and unpack ZooKeeper, drop files not needed at runtime,
    # and create zoo.cfg with dataDir pointing at /data
    RUN wget http://mirrors.aliyun.com/apache/zookeeper/zookeeper-3.4.13/zookeeper-3.4.13.tar.gz && \
        tar -zxvf zookeeper-3.4.13.tar.gz && \
        rm -rf zookeeper-3.4.13.tar.gz && \
        mv zookeeper-3.4.13 zookeeper && \
        cd /zookeeper && rm -rf contrib dist-maven docs recipes src *.txt *.md *.xml && \
        cp /zookeeper/conf/zoo_sample.cfg /zookeeper/conf/zoo.cfg && \
        sed -i "s#dataDir=/tmp/zookeeper#dataDir=/data#g" /zookeeper/conf/zoo.cfg
    ENV PATH=/zookeeper/bin:$PATH

    EXPOSE 2181

    # Run in the foreground so the container stays alive
    CMD ["zkServer.sh", "start-foreground"]

Build and run

    docker build -t zookeeper:3.4.13 .
    docker run -d -p 2181:2181 zookeeper:3.4.13

To keep the data outside the container, add a mount to docker run: -v /my_data:/data

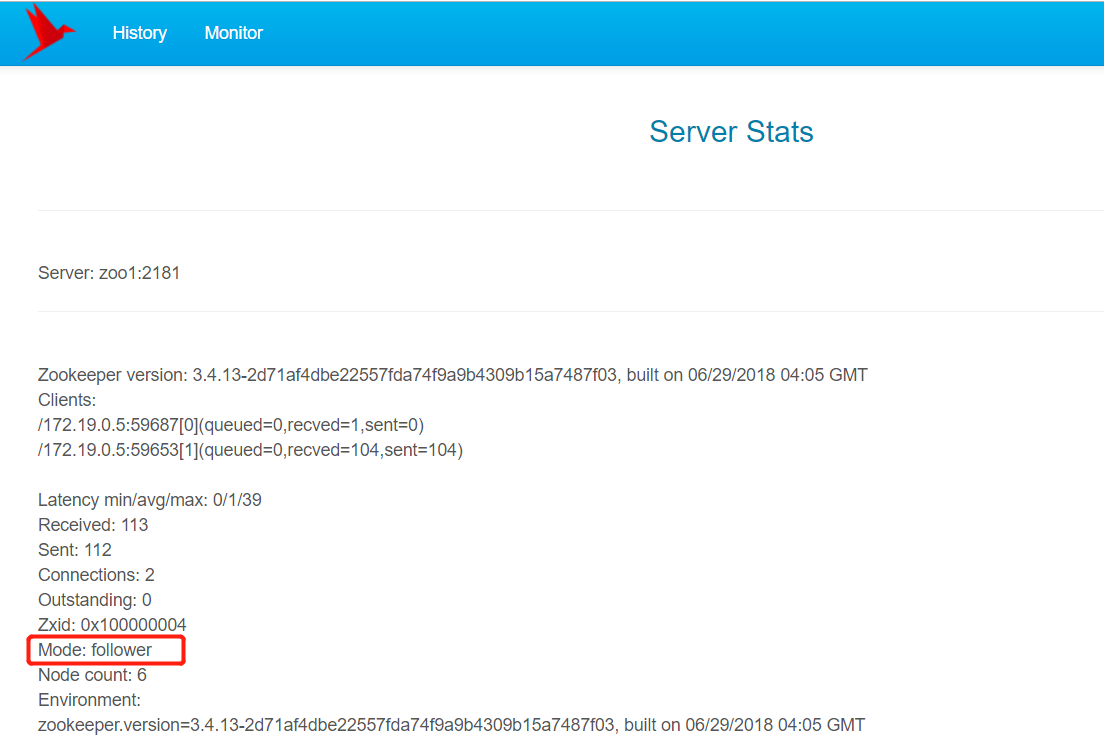
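Once the container is up, it can help to confirm that the server actually answers before anything else is pointed at it. The sketch below polls ZooKeeper's `ruok` four-letter command until the reply is `imok`. It assumes `nc` is available on the host and that four-letter commands are enabled on the server (newer ZooKeeper releases restrict them through `4lw.commands.whitelist`). The function name `wait_for_zk` and the default retry count are my own choices, not part of ZooKeeper.

```shell
#!/bin/sh
# Hypothetical helper: wait until a ZooKeeper server answers "imok" to "ruok".
# Arguments: host, port, number of attempts (default 30, one second apart).
wait_for_zk() {
    host=$1
    port=$2
    tries=${3:-30}
    while [ "$tries" -gt 0 ]; do
        # ZooKeeper closes the connection after answering a four-letter command
        if [ "$(echo ruok | nc "$host" "$port" 2>/dev/null)" = "imok" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

With the port mapping used above, a call such as `wait_for_zk 127.0.0.1 2181 && echo "ZooKeeper is ready"` would be one way to use it.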

2. ZooKeeper Cluster Mode

For details on cluster mode, see my earlier post: https://www.cnblogs.com/wunaozai/p/8249657.html

Here I test a ZooKeeper ensemble of 3 nodes.

zoo.cfg

    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data
    clientPort=2181
    server.1=zoo1:2888:3888
    server.2=zoo2:2888:3888
    server.3=zoo3:2888:3888

docker-compose.yml

    version: '3'
    services:
      zoo1:
        image: zookeeper:3.4.13
        volumes:
          - /root/workspace/docker/kafka/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /root/workspace/docker/kafka/zookeeper/myid1:/data/myid
      zoo2:
        image: zookeeper:3.4.13
        volumes:
          - /root/workspace/docker/kafka/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /root/workspace/docker/kafka/zookeeper/myid2:/data/myid
      zoo3:
        image: zookeeper:3.4.13
        volumes:
          - /root/workspace/docker/kafka/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /root/workspace/docker/kafka/zookeeper/myid3:/data/myid

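We are going to run 3 ZooKeeper nodes. First prepare a zoo.cfg file in the current directory and add the server.id=host:port:port entries at the end of it. Then prepare a myid file for each ZooKeeper node, containing that node's id.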

    echo "1" > myid1
    echo "2" > myid2
    echo "3" > myid3
    docker-compose up

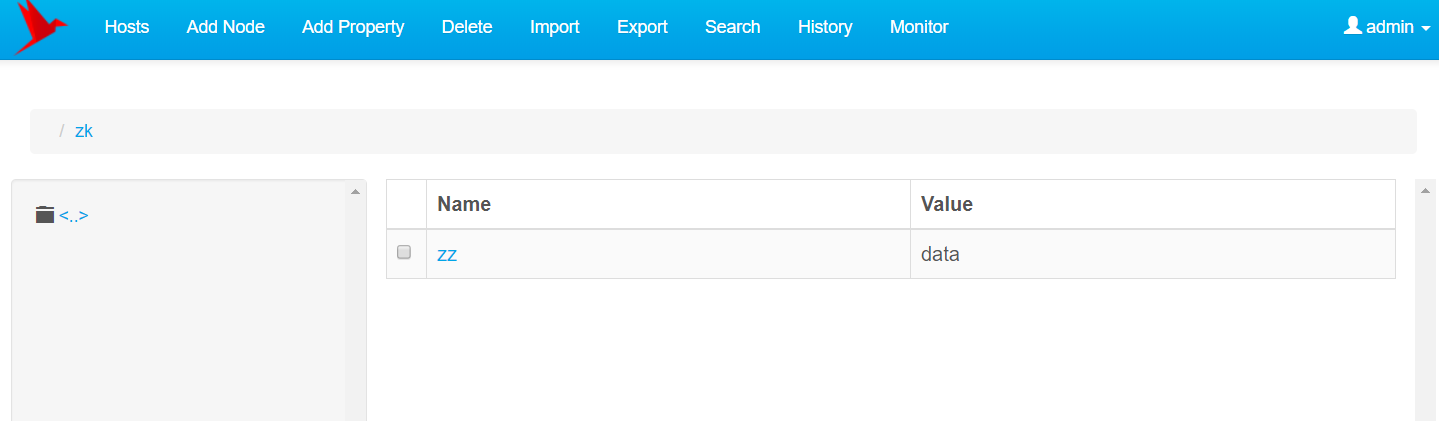
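For ensembles with more than three nodes, writing the server entries and myid files by hand gets tedious. The following is a minimal sketch that generates both, assuming the same naming scheme as above (hosts `zoo1`, `zoo2`, ... and ports 2888/3888). The function names and the output directory argument are hypothetical.

```shell
#!/bin/sh
# Print the server.N lines to append to zoo.cfg for an ensemble of N nodes.
gen_server_lines() {
    count=$1
    i=1
    while [ "$i" -le "$count" ]; do
        echo "server.$i=zoo$i:2888:3888"
        i=$((i + 1))
    done
}

# Write myid1..myidN into the given directory; each file holds the node's id.
gen_myid_files() {
    count=$1
    dir=$2
    i=1
    while [ "$i" -le "$count" ]; do
        echo "$i" > "$dir/myid$i"
        i=$((i + 1))
    done
}
```

For example, `gen_server_lines 3 >> zoo.cfg` followed by `gen_myid_files 3 .` would reproduce the files prepared manually above.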

After startup, connect to any one of the nodes and create a path with some data; the data is then replicated to every node.
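One way to confirm that the three nodes really formed an ensemble is to ask each one for its role. The sketch below extracts the `Mode:` line (leader, follower or standalone) from the output of the `stat` four-letter command. It assumes four-letter commands are enabled and that `nc` exists inside the container (Alpine's busybox normally provides it); the helper name `zk_mode` is hypothetical.

```shell
#!/bin/sh
# Read `stat` output on stdin and print only the server's mode.
# tr strips carriage returns in case the output passed through a TTY.
zk_mode() {
    sed -n 's/^Mode: *//p' | tr -d '\r'
}

# Usage sketch (not executed here, needs the running compose project):
#   docker-compose exec -T zoo1 sh -c 'echo stat | nc localhost 2181' | zk_mode
```

In a healthy 3-node ensemble, one node should report `leader` and the other two `follower`.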

3. zkui: A Visual Web Client for ZooKeeper

I use this visual ZooKeeper client: https://github.com/DeemOpen/zkui. Build the jar from its source code:

    mvn package -Dmaven.test.skip=true

Then build the zkui image with a Dockerfile.

Dockerfile

    FROM openjdk:8-jdk-alpine

    MAINTAINER wunaozai <[email protected]>

    WORKDIR /var/app
    ADD zkui-*.jar /var/app/zkui.jar
    ADD config.cfg /var/app/config.cfg
    ADD bootstrap.sh /var/app/bootstrap.sh

    EXPOSE 9090

    ENTRYPOINT ["/var/app/bootstrap.sh"]

bootstrap.sh

    #!/bin/sh

    # Defaults, overridable through container environment variables
    ZK_SERVER=${ZK_SERVER:-"localhost:2181"}

    USER_SET=${USER_SET:-"{\"users\": [{ \"username\":\"admin\" , \"password\":\"manager\",\"role\": \"ADMIN\" \},{ \"username\":\"appconfig\" , \"password\":\"appconfig\",\"role\": \"USER\" \}]\}"}
    LOGIN_MESSAGE=${LOGIN_MESSAGE:-"Please login using admin/manager or appconfig/appconfig."}

    # Write the values into config.cfg. "|" is used as the sed delimiter
    # because the default login message contains "/".
    sed -i "s|^zkServer=.*$|zkServer=$ZK_SERVER|" /var/app/config.cfg

    sed -i "s|^userSet = .*$|userSet = $USER_SET|" /var/app/config.cfg
    sed -i "s|^loginMessage=.*$|loginMessage=$LOGIN_MESSAGE|" /var/app/config.cfg

    echo "Starting zkui with server $ZK_SERVER"

    exec java -jar /var/app/zkui.jar

config.cfg

    #Server Port
    serverPort=9090
    #Comma separated list of all the zookeeper servers
    zkServer=localhost:2181,localhost:2181
    #Http path of the repository. Ignore if you don't intend to upload files from repository.
    scmRepo=http://myserver.com/@rev1=
    #Path appended to the repo url. Ignore if you don't intend to upload files from repository.
    scmRepoPath=//appconfig.txt
    #if set to true then userSet is used for authentication, else ldap authentication is used.
    ldapAuth=false
    ldapDomain=mycompany,mydomain
    #ldap authentication url. Ignore if using file based authentication.
    ldapUrl=ldap://<ldap_host>:<ldap_port>/dc=mycom,dc=com
    #Specific roles for ldap authenticated users. Ignore if using file based authentication.
    ldapRoleSet={"users": [{ "username":"domain\\user1" , "role": "ADMIN" }]}
    userSet = {"users": [{ "username":"admin" , "password":"manager","role": "ADMIN" },{ "username":"appconfig" , "password":"appconfig","role": "USER" }]}
    #Set to prod in production and dev in local. Setting to dev will clear history each time.
    env=prod
    jdbcClass=org.h2.Driver
    jdbcUrl=jdbc:h2:zkui
    jdbcUser=root
    jdbcPwd=manager
    #If you want to use mysql db to store history then comment the h2 db section.
    #jdbcClass=com.mysql.jdbc.Driver
    #jdbcUrl=jdbc:mysql://localhost:3306/zkui
    #jdbcUser=root
    #jdbcPwd=manager
    loginMessage=Please login using admin/manager or appconfig/appconfig.
    #session timeout 5 mins/300 secs.
    sessionTimeout=300
    #Default 5 seconds to keep short lived zk sessions. If you have large data then the read will take more than 30 seconds so increase this accordingly.
    #A bigger zkSessionTimeout means the connection will be held longer and resource consumption will be high.
    zkSessionTimeout=5
    #Block PWD exposure over rest call.
    blockPwdOverRest=false
    #ignore rest of the props below if https=false.
    https=false
    keystoreFile=/home/user/keystore.jks
    keystorePwd=password
    keystoreManagerPwd=password
    # The default ACL to use for all creation of nodes. If left blank, then all nodes will be universally accessible
    # Permissions are based on single character flags: c (Create), r (read), w (write), d (delete), a (admin), * (all)
    # For example defaultAcl={"acls": [{"scheme":"ip", "id":"192.168.1.192", "perms":"*"}, {"scheme":"ip", "id":"192.168.1.0/24", "perms":"r"}]}
    defaultAcl=
    # Set X-Forwarded-For to true if zkui is behind a proxy
    X-Forwarded-For=false

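4. Combining ZooKeeper and zkui

ZooKeeper's bundled zkCli.sh -server 127.0.0.1 is enough to create, read, update and delete ZooKeeper data. For testing, though, a web client is often more convenient, and it is friendlier during early debugging and development.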
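As a rough illustration of the command-line route, the sketch below wraps zkCli.sh and runs one create, read, update and delete against a single path. It assumes zkCli.sh accepts a command after the connection options, as the 3.4.x client does. The wrapper `zk`, the function `zk_crud_demo` and the variables `ZKCLI` and `ZK_ADDR` are names invented for this example.

```shell
#!/bin/sh
# Client binary and server address; both can be overridden from the environment.
ZKCLI=${ZKCLI:-zkCli.sh}
ZK_ADDR=${ZK_ADDR:-127.0.0.1:2181}

# Run a single zkCli command against the configured server.
zk() {
    "$ZKCLI" -server "$ZK_ADDR" "$@"
}

# Create, read, update and delete one znode.
zk_crud_demo() {
    path=$1
    zk create "$path" "v1"   # create the znode with initial data
    zk get "$path"           # read it back
    zk set "$path" "v2"      # update the data
    zk delete "$path"        # remove the znode
}
```

Calling `zk_crud_demo /demo` inside the ZooKeeper container would exercise all four operations; setting `ZKCLI=echo` first prints the commands instead of running them.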

docker-compose.yml (single ZooKeeper node with zkui)

    version: '3'
    services:
      zoo:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zookeeper
        ports:
          - "2181:2181"
      zkui:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zkui
        environment:
          - ZK_SERVER=zoo:2181
        ports:
          - "8080:9090"
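The ZK_SERVER variable set in the compose file reaches zkui through bootstrap.sh, which rewrites the zkServer line of config.cfg with sed before starting the jar. The sketch below applies a substitution of the same kind to a file of your choice and prints the result instead of editing in place, which is handy for checking a value before starting the container. The function name `render_zk_server` is hypothetical.

```shell
#!/bin/sh
# Print the given config file with its zkServer line replaced by $2.
# The file itself is left unchanged (no -i).
render_zk_server() {
    cfg=$1
    servers=$2
    sed "s|^zkServer=.*$|zkServer=$servers|" "$cfg"
}
```

For instance, `render_zk_server config.cfg zoo:2181 | grep '^zkServer='` shows the line zkui would end up reading.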

docker-compose.yml (three-node cluster with zkui)

    version: '3'
    services:
      zoo1:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zookeeper
        volumes:
          - /home/lmx/workspace/docker/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /home/lmx/workspace/docker/zookeeper/myid1:/data/myid
      zoo2:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zookeeper
        volumes:
          - /home/lmx/workspace/docker/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /home/lmx/workspace/docker/zookeeper/myid2:/data/myid
      zoo3:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zookeeper
        volumes:
          - /home/lmx/workspace/docker/zookeeper/zoo.cfg:/zookeeper/conf/zoo.cfg
          - /home/lmx/workspace/docker/zookeeper/myid3:/data/myid
      zkui:
        image: registry.cn-shenzhen.aliyuncs.com/wunaozai/zkui
        environment:
          - ZK_SERVER=zoo1:2181,zoo2:2181,zoo3:2181
        ports:
          - "8080:9090"

References:

https://www.cnblogs.com/wunaozai/p/8249657.html

http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_singleAndDevSetup

https://hub.docker.com/r/library/openjdk/

https://hub.docker.com/r/wurstmeister/zookeeper