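Garbled Text When HttpClient Parses a Server Response

Source:

[Problem Solved] Garbled text when HttpClient parses a server response

Problem scenario

While building a web crawler with HttpClient recently, I ran into a modest but annoying problem: after sending a request to a URL with HttpGet, the response could not be parsed correctly and came out as garbled text such as 口口??. Chinese character encoding had already been taken into account. The code was as follows: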

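    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.InputStreamReader;
    import java.util.Map;
    import org.apache.http.HttpResponse;
    import org.apache.http.client.HttpClient;
    import org.apache.http.client.methods.HttpGet;

    // Processing logic
    HttpResponse response = HttpUtils.doGet(baseUrl + title + postUrl, headers);
    InputStream in = getInputStreamFromResponse(response);
    responseText = Utils.getStringFromStream(in);
    result = EncodeUtils.unicdoeToGB2312(responseText);

    // Helper functions used above
    public static HttpResponse doGet(String url, Map<String, String> headers) throws IOException {
        HttpClient client = createHttpClient();
        HttpGet getMethod = new HttpGet(url);
        // Apply the caller's request headers, if any
        if (headers != null) {
            for (Map.Entry<String, String> header : headers.entrySet()) {
                getMethod.setHeader(header.getKey(), header.getValue());
            }
        }
        return client.execute(getMethod);
    }

    public static String getStringFromStream(InputStream in) throws IOException {
        StringBuilder buffer = new StringBuilder();
        // try-with-resources closes the reader even if readLine() throws
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, "UTF-8"))) {
            String line;
            while ((line = reader.readLine()) != null) {
                buffer.append(line).append('\n');
            }
        }
        return buffer.toString();
    }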

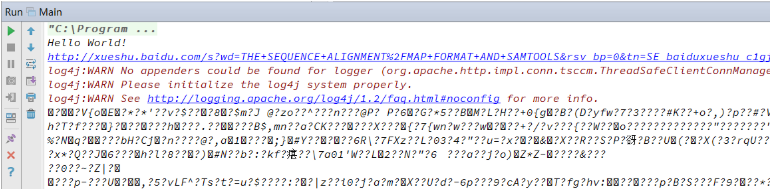

解析到的結果如下圖所示,一團亂麻:

Solution

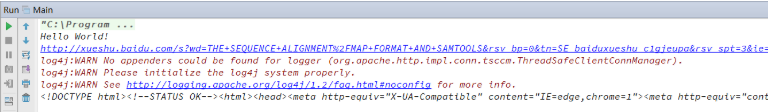

上面的程式碼基本邏輯是沒有問題的,也考慮到了中文的編碼,但是卻有一個很隱祕的陷阱在裡面,一般的網站都先將網頁壓縮後再傳回給瀏覽器,減少傳輸時間,如下圖所示:

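The processing logic above does not account for the fact that the response's InputStream is compressed and has to be read through the matching stream class. The content-encoding in the figure is gzip, so the stream must be wrapped in a GZIPInputStream. It is enough to improve on the function public static String getStringFromStream(InputStream in) from above, as follows: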

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.zip.GZIPInputStream;
    import org.apache.http.Header;
    import org.apache.http.HttpResponse;

    public static String getStringFromResponse(HttpResponse response) throws IOException {
        if (response == null) {
            return null;
        }
        InputStream in = getInputStreamFromResponse(response);
        // If the server declares a gzip-compressed body, decompress before decoding
        for (Header h : response.getHeaders("Content-Encoding")) {
            if (h.getValue().contains("gzip")) {
                in = new GZIPInputStream(in);
                break;
            }
        }
        // Read the (possibly decompressed) stream exactly once, as UTF-8 text
        return Utils.getStringFromStream(in);
    }

With this change, the final result is something a human can read.
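The effect can be reproduced without any network call. The following is a minimal sketch using only the JDK; the class name `GzipRoundTrip` and its helper methods are made up for illustration and are not part of the original code. It compresses a small page the way a server sending gzip would, then decodes the bytes once without decompression and once through GZIPInputStream.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical demo class, not part of the original crawler code.
public class GzipRoundTrip {

    /** Compresses text the way a server does when it sends Content-Encoding: gzip. */
    public static byte[] gzip(String text) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (GZIPOutputStream out = new GZIPOutputStream(bytes)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        }
        return bytes.toByteArray();
    }

    /** Reads a stream to the end and decodes the bytes as UTF-8. */
    public static String readUtf8(InputStream in) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        int n;
        while ((n = in.read(chunk)) != -1) {
            buffer.write(chunk, 0, n);
        }
        return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // "hello, world" in Chinese, written with Unicode escapes
        String page = "<html><body>\u4f60\u597d\uff0c\u4e16\u754c</body></html>";
        byte[] body = gzip(page);

        // Wrong: compressed bytes are decoded as if they were text
        String garbled = readUtf8(new ByteArrayInputStream(body));
        // Right: decompress first, then decode
        String decoded = readUtf8(new GZIPInputStream(new ByteArrayInputStream(body)));

        System.out.println("without gunzip matches original: " + garbled.equals(page));
        System.out.println("with gunzip matches original:    " + decoded.equals(page));
    }
}
```

The character set passed to the reader is irrelevant in the first case: no choice of encoding turns compressed bytes back into the page, which is why fixing the charset alone did not help.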

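Root cause

When analyzing the server's response, I only noticed that content-type was text/html, which says the body can be read as text; if content-type indicated an Excel file, the body could be opened with Excel. content-type describes the format in which the content is organized. What I overlooked was the content-encoding field, which states what additional encoding, such as compression, the server applied to the content for transfer. If content-encoding is gzip, the server compressed the data with gzip, while the data itself may be text, video, or anything else. The two must be treated separately. Note, however, that some servers return a content-encoding of gzip without actually gzip-encoding the content, so gzip decoding can fail.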
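To make the separation concrete, here is a small sketch that reads each header for its own purpose: the character set comes from Content-Type, the decompression decision comes from Content-Encoding. The class `HeaderRoles` and both method names are hypothetical, and falling back to UTF-8 when no charset is given is an assumption of this sketch, not something the headers guarantee.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical helper class, not part of the original code.
public class HeaderRoles {

    /**
     * Returns the charset named in a Content-Type value such as
     * "text/html; charset=ISO-8859-1". Falls back to UTF-8 (an assumption)
     * when no charset parameter is present.
     */
    public static Charset charsetOf(String contentType) {
        if (contentType != null) {
            for (String part : contentType.split(";")) {
                String p = part.trim();
                if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                    String name = p.substring("charset=".length()).replace("\"", "").trim();
                    // Throws an unchecked exception if the name is unknown to the JVM
                    return Charset.forName(name);
                }
            }
        }
        return StandardCharsets.UTF_8;
    }

    /** Returns true when a Content-Encoding value lists gzip (or its alias x-gzip). */
    public static boolean isGzip(String contentEncoding) {
        if (contentEncoding == null) {
            return false;
        }
        for (String coding : contentEncoding.split(",")) {
            String c = coding.trim().toLowerCase(Locale.ROOT);
            if (c.equals("gzip") || c.equals("x-gzip")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(charsetOf("text/html; charset=ISO-8859-1"));
        System.out.println(isGzip("gzip"));
    }
}
```

A reader built on these two answers would first unwrap the stream if `isGzip` is true and only then decode the bytes with the charset from `charsetOf`.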

(Official explanation, quoted in the original English)

RFC 2616 for HTTP 1.1 specifies how web servers must indicate encoding transformations using the Content-Encoding header. Although on the surface, Content-Encoding (e.g., gzip, deflate, compress) and Content-Type(e.g., x-application/x-gzip) sound similar, they are, in fact, two distinct pieces of information. Whereas servers use Content-Type to specify the data type of the entity body, which can be useful for client applications that want to open the content with the appropriate application, Content-Encoding is used solely to specify any additional encoding done by the server before the content was transmitted to the client. Although the HTTP RFC outlines these rules pretty clearly, some web sites respond with “gzip” as the Content-Encoding even though the server has not gzipped the content.

Our testing has shown this problem to be limited to some sites that serve Unix/Linux style “tarball” files. Tarballs are gzip compressed archives files. By setting the Content-Encoding header to “gzip” on a tarball, the server is specifying that it has additionally gzipped the gzipped file. This, of course, is unlikely but not impossible or non-compliant.

Therein lies the problem. A server responding with content-encoding, such as “gzip,” is specifying the necessary mechanism that the client needs in order to decompress the content. If the server did not actually encode the content as specified, then the client’s decompression would fail.
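One defensive option against a server that declares gzip but sends an uncompressed body is to peek at the first two bytes before decompressing. The sketch below assumes only the JDK; `LenientGzip` and `maybeGunzip` are hypothetical names. It has a clear limit: a tarball served with a gzip Content-Encoding still begins with the gzip magic bytes, so it would be decompressed once and come out as a tar archive. The check only catches bodies that are not gzip at all.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.PushbackInputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;

// Hypothetical helper class, not part of the original code.
public class LenientGzip {

    /**
     * Wraps the stream in a GZIPInputStream only if it really starts with the
     * gzip magic bytes 0x1f 0x8b; otherwise returns the bytes unchanged.
     */
    public static InputStream maybeGunzip(InputStream raw) throws IOException {
        PushbackInputStream in = new PushbackInputStream(raw, 2);
        byte[] head = new byte[2];
        int read = 0;
        // A single read() may return fewer bytes than requested, so loop
        while (read < 2) {
            int n = in.read(head, read, 2 - read);
            if (n < 0) {
                break;
            }
            read += n;
        }
        if (read > 0) {
            in.unread(head, 0, read); // put the peeked bytes back
        }
        boolean looksGzipped = read == 2
                && (head[0] & 0xff) == 0x1f
                && (head[1] & 0xff) == 0x8b;
        return looksGzipped ? new GZIPInputStream(in) : in;
    }

    /** Reads a stream to the end and decodes the bytes as UTF-8. */
    public static String readUtf8(InputStream in) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        int n;
        while ((n = in.read(chunk)) != -1) {
            buffer.write(chunk, 0, n);
        }
        return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // A body that was labeled gzip but is actually plain text
        byte[] mislabeled = "plain body".getBytes(StandardCharsets.UTF_8);
        System.out.println(readUtf8(maybeGunzip(new ByteArrayInputStream(mislabeled))));
    }
}
```

In the response-reading function this check would be applied only when the Content-Encoding header names gzip, replacing the unconditional GZIPInputStream wrapper.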