Android Camera Framework Study Notes (5): takePicture (STILL_TAKEPICTURE) Flow Analysis

Note: as before, this post is a set of study notes written while reading the Android 5.1 source code.

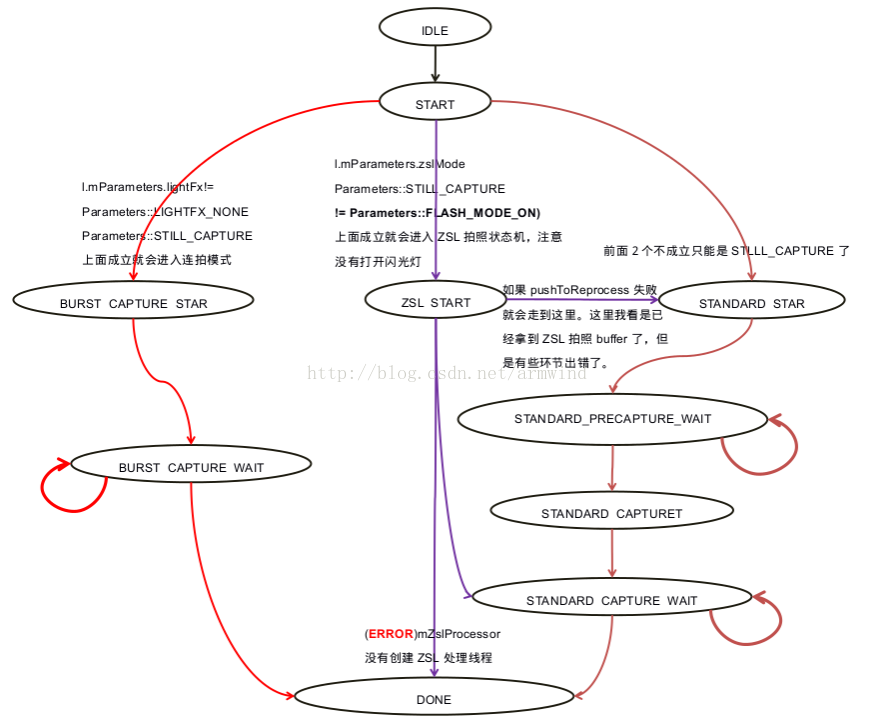

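The earlier posts already covered the overall API1 flow, so this one goes straight to the point. Four threads are closely involved in takePicture: CaptureSequencer, JpegProcessor, Camera3Device::RequestThread, and FrameProcessorBase. As the code below shows, three of them are already running once the Camera2Client object has been initialized; the fourth, RequestThread, is created when Camera3Device is initialized. The four threads cooperate closely and synchronize with one another through Condition variables; the diagram sketches roughly how they work together.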

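Some points to note about the diagram (the capture state machine here covers only STILL_TAKEPICTURE):

- 1. Every event originates from HAL3 returning a frame. When HAL3 returns a frame, mResultSignal is signaled, and mCaptureAvailableSignal is signaled in onFrameAvailable. If no frame comes back, all the capture threads stay blocked, waiting.

- 2. In the standardStart state, the STILL_TAKEPICTURE state machine registers a frame listener with the FrameProcessor thread, as shown in the diagram.

- 3. Before capturing the still frame, the state machine first checks whether AE has converged. If it has not, the state machine waits for AE to converge before moving to the capture state.

- 4. After the capture request is issued, the state machine blocks until the picture data comes back, which is the point stressed in item 1. On a timeout it keeps looping (in units of 100 ms; after 3.5 s of waiting the application reports an error).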

I. Preparing for takePicture

1) Creating the capture-related threads

status_t Camera2Client::initialize(camera_module_t *module)

{

//----- N lines omitted here

mFrameProcessor = new FrameProcessor(mDevice, this);

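threadName = String8::format("C2-%d-FrameProc"

In initialize(), the current Camera2Client object is passed in when the CaptureSequencer object is created. The reason is that the state-machine methods later need the Camera2Client object to read parameters and perform other operations. We will not go deeper here; the details come up when the state-machine methods are analyzed.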

When the JpegProcessor object is created, it receives both the current Camera2Client object and the CaptureSequencer object created just before it, so its constructor is worth a look.

JpegProcessor::JpegProcessor(

sp<Camera2Client> client,

wp<CaptureSequencer> sequencer):

Thread(false),

mDevice(client->getCameraDevice()),

mSequencer(sequencer),

mId(client->getCameraId()),

mCaptureAvailable(false),

mCaptureStreamId(NO_STREAM) {

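}

The constructor stores the passed-in CaptureSequencer directly (as a weak pointer), whereas the Camera2Client object is only used to obtain the Camera3Device object and the current camera ID.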

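2) Creating the JPEG stream

Below is the method that creates and updates the JPEG stream. When the preview stream was created earlier, the system had already created a default JPEG stream at the default size. If the application then selects a different picture-size, the previous stream object is deleted at capture time and a new JPEG stream is created.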

status_t JpegProcessor::updateStream(const Parameters &params) {

ATRACE_CALL();

ALOGV("%s", __FUNCTION__);

status_t res;

Mutex::Autolock l(mInputMutex);

sp<CameraDeviceBase> device = mDevice.promote();

// Find out buffer size for JPEG

ssize_t maxJpegSize = device->getJpegBufferSize(params.pictureWidth, params.pictureHeight);

if (mCaptureConsumer == 0) {

// Create CPU buffer queue endpoint

sp<IGraphicBufferProducer> producer;

sp<IGraphicBufferConsumer> consumer;

BufferQueue::createBufferQueue(&producer, &consumer);

// Note that the buffer count below is 1

mCaptureConsumer = new CpuConsumer(consumer, 1);

mCaptureConsumer->setFrameAvailableListener(this);

mCaptureConsumer->setName(String8("Camera2Client::CaptureConsumer"));

mCaptureWindow = new Surface(producer);

}

// Since ashmem heaps are rounded up to page size, don't reallocate if

// the capture heap isn't exactly the same size as the required JPEG buffer

const size_t HEAP_SLACK_FACTOR = 2;

if (mCaptureHeap == 0 ||

(mCaptureHeap->getSize() < static_cast<size_t>(maxJpegSize)) ||

(mCaptureHeap->getSize() >

static_cast<size_t>(maxJpegSize) * HEAP_SLACK_FACTOR) ) {

// Create memory for API consumption

mCaptureHeap.clear();

mCaptureHeap =

new MemoryHeapBase(maxJpegSize, 0, "Camera2Client::CaptureHeap");

}

ALOGV("%s: Camera %d: JPEG capture heap now %d bytes; requested %d bytes",

__FUNCTION__, mId, mCaptureHeap->getSize(), maxJpegSize);

if (mCaptureStreamId != NO_STREAM) {

// Check if stream parameters have to change

uint32_t currentWidth, currentHeight;

res = device->getStreamInfo(mCaptureStreamId,

&currentWidth, &currentHeight, 0);

if (res != OK) {

ALOGE("%s: Camera %d: Error querying capture output stream info: "

"%s (%d)", __FUNCTION__,

mId, strerror(-res), res);

return res;

}

// If the stream size has changed, delete the previous JPEG stream and create a new one.

// This typically happens after the app selects a different picture-size.

if (currentWidth != (uint32_t)params.pictureWidth ||

currentHeight != (uint32_t)params.pictureHeight) {

ALOGV("%s: Camera %d: Deleting stream %d since the buffer dimensions changed",

__FUNCTION__, mId, mCaptureStreamId);

res = device->deleteStream(mCaptureStreamId);

// Error checking omitted

mCaptureStreamId = NO_STREAM;

}

}

// This branch runs when the JPEG stream is created for the first time for a capture request (or was just deleted above).

if (mCaptureStreamId == NO_STREAM) {

// Create stream for HAL production

res = device->createStream(mCaptureWindow,

params.pictureWidth, params.pictureHeight,

HAL_PIXEL_FORMAT_BLOB, &mCaptureStreamId);

if (res != OK) {

return res;

}

}

return OK;

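}

Note that the first time the JPEG stream is created, a BufferQueue is created as well. In mCaptureConsumer = new CpuConsumer(consumer, 1), the second argument (the maximum number of buffers the consumer may hold locked at once) is 1, since a STILL_CAPTURE produces only a single image.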
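The heap reuse rule in updateStream() can be isolated as a small predicate. The following is a minimal standalone sketch, not the framework code: `needsNewHeap` and `kHeapSlackFactor` are hypothetical names, and a current size of 0 stands in for "no heap allocated yet".

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical constant mirroring HEAP_SLACK_FACTOR in the listing above.
constexpr std::size_t kHeapSlackFactor = 2;

// Returns true when a new capture heap must be allocated:
// - no heap exists yet (currentSize == 0 stands in for mCaptureHeap == 0),
// - the existing heap is too small for the required JPEG buffer, or
// - the existing heap is more than kHeapSlackFactor times larger than needed.
bool needsNewHeap(std::size_t currentSize, std::size_t requiredSize) {
    if (currentSize == 0) return true;
    if (currentSize < requiredSize) return true;
    return currentSize > requiredSize * kHeapSlackFactor;
}
```

With this rule, a heap between one and two times the required size is kept, which avoids reallocating on every small change of picture-size while still releasing a heap that has become far too large.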

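II. Capture: STILL_CAPTURE

When the user presses the shutter button in the app, the call arrives here through the ICamera proxy object. As the code shows, the method ends by starting the capture state machine.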

status_t Camera2Client::takePicture(int msgType) {

ATRACE_CALL();

Mutex::Autolock icl(mBinderSerializationLock);

status_t res;

if ( (res = checkPid(__FUNCTION__) ) != OK) return res;

//------------------------------------------------------

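// Code omitted here: it re-checks whether picture-size matches the size of

// the current JPEG stream. If not, the old JPEG stream object is deleted

// and a new one is created for the new picture-size. Once that is done,

// the capture state machine is started.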

res = mCaptureSequencer->startCapture(msgType);

return res;

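}

Calling startCapture() sends a signal that wakes the CaptureSequencer thread.

- 1. After the CaptureSequencer thread starts running, it is in the IDLE state by default and waits inside the IDLE state handler.

- 2. Once startCapture() is called, the CaptureSequencer thread wakes up and the state machine switches to the START state, which is handled by the method below.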

1. Capture state machine: manageStart()

CaptureSequencer::CaptureState CaptureSequencer::manageStart(

sp<Camera2Client> &client) {

ALOGV("%s", __FUNCTION__);

status_t res;

ATRACE_CALL();

SharedParameters::Lock l(client->getParameters());

CaptureState nextState = DONE;

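// The call below builds the metadata for the capture request.

// If needed, the state machine obtains a metadata object of type CAMERA2_TEMPLATE_STILL_CAPTURE from the HAL and updates its thumbnail, flash state, JPEG quality and related fields from the parameters. Its main purpose is to make sure the state machine has a usable metadata packet.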

res = updateCaptureRequest(l.mParameters, client);

// Burst capture state machine

if(l.mParameters.lightFx != Parameters::LIGHTFX_NONE &&

l.mParameters.state == Parameters::STILL_CAPTURE) {

nextState = BURST_CAPTURE_START;

}

else if (l.mParameters.zslMode &&

l.mParameters.state == Parameters::STILL_CAPTURE &&

l.mParameters.flashMode != Parameters::FLASH_MODE_ON) {// ZSL capture mode; note that ZSL is not used when the flash is forced on.

nextState = ZSL_START;

} else { // Normal mode: standard still capture

nextState = STANDARD_START;

}

mShutterNotified = false;

return nextState;

}

We look at the STANDARD_START path first.
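The branch order in manageStart() matters: burst is checked first, then ZSL, and the standard path is the fallback. As a rough standalone sketch (the `StartParams` struct and `chooseStartState` function are hypothetical simplifications of the Parameters fields read above, not framework types):

```cpp
#include <cassert>

enum class CaptureState { StandardStart, ZslStart, BurstCaptureStart };
enum class FlashMode { Off, Auto, On };

// Hypothetical, simplified stand-in for the Parameters fields used by manageStart().
struct StartParams {
    bool lightFxEnabled;   // lightFx != LIGHTFX_NONE
    bool zslMode;          // zslMode flag
    bool stillCapture;     // state == STILL_CAPTURE
    FlashMode flashMode;
};

// Same priority order as the listing: burst first, then ZSL, else standard.
CaptureState chooseStartState(const StartParams& p) {
    if (p.lightFxEnabled && p.stillCapture) {
        return CaptureState::BurstCaptureStart;
    }
    if (p.zslMode && p.stillCapture && p.flashMode != FlashMode::On) {
        return CaptureState::ZslStart;
    }
    return CaptureState::StandardStart;
}
```

Note that forcing the flash on sends a ZSL-enabled capture down the standard path, because the standard path is the one that can run precapture metering with the flash.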

2. Capture state machine: manageStandardStart()

CaptureSequencer::CaptureState CaptureSequencer::manageStandardStart(

sp<Camera2Client> &client) {

ATRACE_CALL();

bool isAeConverged = false;

// Get the onFrameAvailable callback when the requestID == mCaptureId

// We don't want to get partial results for normal capture, as we need

// Get ANDROID_SENSOR_TIMESTAMP from the capture result, but partial

// result doesn't have to have this metadata available.

// TODO: Update to use the HALv3 shutter notification for remove the

// need for this listener and make it faster. see bug 12530628.

client->registerFrameListener(mCaptureId, mCaptureId + 1,

this,

/*sendPartials*/false);

{

Mutex::Autolock l(mInputMutex);

isAeConverged = (mAEState == ANDROID_CONTROL_AE_STATE_CONVERGED);

}

{

SharedParameters::Lock l(client->getParameters());

// Skip AE precapture when it is already converged and not in force flash mode.

if (l.mParameters.flashMode != Parameters::FLASH_MODE_ON && isAeConverged) {

return STANDARD_CAPTURE;

}

mTriggerId = l.mParameters.precaptureTriggerCounter++;

}

client->getCameraDevice()->triggerPrecaptureMetering(mTriggerId);

mAeInPrecapture = false;

mTimeoutCount = kMaxTimeoutsForPrecaptureStart;

return STANDARD_PRECAPTURE_WAIT;

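}

The method above does the following:

- 1. Registers a listener that is notified when the capture result is available.

- 2. Checks whether AE has already converged. If it has (and the flash is not forced on), the next state is STANDARD_CAPTURE. If AE has not converged, a precapture metering trigger is sent to the HAL and the next state is STANDARD_PRECAPTURE_WAIT, where the state machine waits for the HAL to finish AE convergence.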

1) Code snippet 1: registering the frame-available listener

status_t Camera2Client::registerFrameListener(int32_t minId, int32_t maxId,

wp<camera2::FrameProcessor::FilteredListener> listener, bool sendPartials) {

return mFrameProcessor->registerListener(minId, maxId, listener, sendPartials);

}

//----------------------------------------------------------

struct FilteredListener: virtual public RefBase {

virtual void onResultAvailable(const CaptureResult &result) = 0;

};

The parameter types show that the listener is a FilteredListener, which must implement onResultAvailable(). The CaptureSequencer class implements this method as follows.

void CaptureSequencer::onResultAvailable(const CaptureResult &result) {

ATRACE_CALL();

ALOGV("%s: New result available.", __FUNCTION__);

Mutex::Autolock l(mInputMutex);

mNewFrameId = result.mResultExtras.requestId;

mNewFrame = result.mMetadata;

if (!mNewFrameReceived) {

mNewFrameReceived = true;

mNewFrameSignal.signal();

}

}

onResultAvailable() sets the mNewFrameReceived flag to true and signals mNewFrameSignal, waking whichever method is waiting on that condition.

// FrameProcessor inherits from FrameProcessorBase, so registerListener()

// cannot be found directly in FrameProcessor.

status_t FrameProcessorBase::registerListener(int32_t minId,

int32_t maxId, wp<FilteredListener> listener, bool sendPartials) {

Mutex::Autolock l(mInputMutex);

// Check whether this listener has already been registered; if it has,

// do not register it again.

List<RangeListener>::iterator item = mRangeListeners.begin();

while (item != mRangeListeners.end()) {

if (item->minId == minId && item->maxId == maxId && item->listener == listener) {

return OK;

}

item++;

}

// Add the current listener to the list.

RangeListener rListener = { minId, maxId, listener, sendPartials };

mRangeListeners.push_back(rListener);

return OK;

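}

This is how the capture frame listener gets registered. Note that the listener (here the capture state machine, CaptureSequencer) is stored in the mRangeListeners list together with its request ID range. Why would more than one frame-available listener be registered?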
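The registration and lookup logic can be modeled without any Android types. The sketch below is an illustration only: `Listener`, `ListenerRegistry` and `RecordingListener` are hypothetical names, `std::weak_ptr` stands in for `wp<>`, and the ID range is assumed to be half-open, `[minId, maxId)`, which is consistent with the `registerFrameListener(mCaptureId, mCaptureId + 1, ...)` call shown earlier.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for FilteredListener.
struct Listener {
    virtual ~Listener() = default;
    virtual void onResultAvailable(int32_t requestId) = 0;
};

class ListenerRegistry {
public:
    // Registers a listener for request IDs in [minId, maxId); duplicates are ignored.
    void registerListener(int32_t minId, int32_t maxId, std::weak_ptr<Listener> l) {
        for (const Entry& e : entries_) {
            if (e.minId == minId && e.maxId == maxId && sameObject(e.listener, l)) return;
        }
        entries_.push_back({minId, maxId, std::move(l)});
    }

    // Notifies every live listener whose range contains requestId.
    // Returns the number of listeners that were called.
    int dispatch(int32_t requestId) {
        int called = 0;
        for (const Entry& e : entries_) {
            if (requestId < e.minId || requestId >= e.maxId) continue;
            if (std::shared_ptr<Listener> sp = e.listener.lock()) {  // like wp<>::promote()
                sp->onResultAvailable(requestId);
                ++called;
            }
        }
        return called;
    }

    std::size_t size() const { return entries_.size(); }

private:
    struct Entry {
        int32_t minId;
        int32_t maxId;
        std::weak_ptr<Listener> listener;
    };

    // Two weak_ptrs share an owner if neither orders before the other.
    static bool sameObject(const std::weak_ptr<Listener>& a,
                           const std::weak_ptr<Listener>& b) {
        return !a.owner_before(b) && !b.owner_before(a);
    }

    std::vector<Entry> entries_;
};

// Example listener that records the last request ID it saw.
struct RecordingListener : Listener {
    int32_t lastId = -1;
    void onResultAvailable(int32_t requestId) override { lastId = requestId; }
};
```

Keying listeners by ID range lets several consumers share one result stream while each sees only the results of the requests it cares about; holding them weakly means a destroyed listener is simply skipped.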

3. Capture state machine: manageStandardCapture()

CaptureSequencer::CaptureState CaptureSequencer::manageStandardCapture(

sp<Camera2Client> &client) {

status_t res;

ATRACE_CALL();

SharedParameters::Lock l(client->getParameters());

Vector<int32_t> outputStreams;

uint8_t captureIntent = static_cast<uint8_t>(ANDROID_CONTROL_CAPTURE_INTENT_STILL_CAPTURE);

/**

* Set up output streams in the request

* - preview

* - capture/jpeg

* - callback (if preview callbacks enabled)

* - recording (if recording enabled)

*/

// By default the preview and capture stream IDs are stored in the temporary vector outputStreams.

outputStreams.push(client->getPreviewStreamId());

outputStreams.push(client->getCaptureStreamId());

if (l.mParameters.previewCallbackFlags &

CAMERA_FRAME_CALLBACK_FLAG_ENABLE_MASK) {

outputStreams.push(client->getCallbackStreamId());

}

// In video snapshot mode, the capture intent is changed to ANDROID_CONTROL_CAPTURE_INTENT_VIDEO_SNAPSHOT.

if (l.mParameters.state == Parameters::VIDEO_SNAPSHOT) {

outputStreams.push(client->getRecordingStreamId());

captureIntent = static_cast<uint8_t>(ANDROID_CONTROL_CAPTURE_INTENT_VIDEO_SNAPSHOT);

}

// Store the required stream IDs in the metadata so that Camera3Device can look up the matching stream objects.

res = mCaptureRequest.update(ANDROID_REQUEST_OUTPUT_STREAMS,

outputStreams);

if (res == OK) {// Store the request ID

res = mCaptureRequest.update(ANDROID_REQUEST_ID,

&mCaptureId, 1);

}

if (res == OK) {// Store the capture intent in the metadata.

res = mCaptureRequest.update(ANDROID_CONTROL_CAPTURE_INTENT,

&captureIntent, 1);

}

if (res == OK) {

res = mCaptureRequest.sort();

}

if (res != OK) {// On failure the capture ends; this path is not normally reached.

ALOGE("%s: Camera %d: Unable to set up still capture request: %s (%d)",

__FUNCTION__, client->getCameraId(), strerror(-res), res);

return DONE;

}

// Create a capture copy since CameraDeviceBase#capture takes ownership

CameraMetadata captureCopy = mCaptureRequest;

// Some metadata checks are omitted here; they do not affect the analysis.

/**

* Clear the streaming request for still-capture pictures

* (as opposed to i.e. video snapshots)

*/

if (l.mParameters.state == Parameters::STILL_CAPTURE) {

// API definition of takePicture() - stop preview before taking pic

res = client->stopStream();// For a still capture (non-ZSL mode), the preview stream is stopped.

if (res != OK) {

ALOGE("%s: Camera %d: Unable to stop preview for still capture: "

"%s (%d)",

__FUNCTION__, client->getCameraId(), strerror(-res), res);

return DONE;

}

}

// TODO: Capture should be atomic with setStreamingRequest here

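// The capture is started here, using the copied metadata. Note that the Google TODO above says this should be atomic with setStreamingRequest, which implies that it is not atomic yet.

// Camera3Device builds a request from the data in the metadata and sends that request to the HAL.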

res = client->getCameraDevice()->capture(captureCopy);

if (res != OK) {

ALOGE("%s: Camera %d: Unable to submit still image capture request: "

"%s (%d)",

__FUNCTION__, client->getCameraId(), strerror(-res), res);

return DONE;

}

mTimeoutCount = kMaxTimeoutsForCaptureEnd;

return STANDARD_CAPTURE_WAIT;

}

4. Capture state machine: manageStandardCaptureWait()

CaptureSequencer::CaptureState CaptureSequencer::manageStandardCaptureWait(

sp<Camera2Client> &client) {

status_t res;

ATRACE_CALL();

Mutex::Autolock l(mInputMutex);

// Wait for new metadata result (mNewFrame)

// mNewFrameReceived stays false until the capture result is ready. Once it is, FrameProcessor calls the onResultAvailable() callback registered earlier, which sets mNewFrameReceived to true and signals mNewFrameSignal, so the wait below can proceed.

while (!mNewFrameReceived) {

// Wait up to 100 ms here

res = mNewFrameSignal.waitRelative(mInputMutex, kWaitDuration);

if (res == TIMED_OUT) {

mTimeoutCount--;

break;

}

}

// Approximation of the shutter being closed

// - TODO: use the hal3 exposure callback in Camera3Device instead

// Notify the shutter event: play the shutter sound and send CAMERA_MSG_SHUTTER and CAMERA_MSG_RAW_IMAGE_NOTIFY up to the application layer.

if (mNewFrameReceived && !mShutterNotified) {

SharedParameters::Lock l(client->getParameters());

/* warning: this also locks a SharedCameraCallbacks */

shutterNotifyLocked(l.mParameters, client, mMsgType);

mShutterNotified = true;// The shutter event has now been delivered.

}

// Wait until jpeg was captured by JpegProcessor

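// mNewCaptureSignal is signaled when the JPEG image data is enqueued into the BufferQueue. To summarize:

// two Condition objects are used here, mNewFrameSignal and mNewCaptureSignal. When the HAL returns the JPEG frame,

// it returns the buffer first, so mNewCaptureSignal fires before mNewFrameSignal. In that case mNewCaptureReceived

// is already true by this point and the loop below does not need to wait.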

while (mNewFrameReceived && !mNewCaptureReceived) {

res = mNewCaptureSignal.waitRelative(mInputMutex, kWaitDuration);

if (res == TIMED_OUT) {

mTimeoutCount--;

break;

}

}

if (mTimeoutCount <= 0) {

ALOGW("Timed out waiting for capture to complete");

return DONE;

}

// If mNewFrameReceived && mNewCaptureReceived is true, the JPEG frame really has arrived.

if (mNewFrameReceived && mNewCaptureReceived) {

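// Two main checks happen here (code omitted):

// 1. The capture ID must match; otherwise an error is reported.

// 2. The timestamp must be correct; this normally does not fail.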

client->removeFrameListener(mCaptureId, mCaptureId + 1, this);

mNewFrameReceived = false;

mNewCaptureReceived = false;

return DONE;// Move the state machine to DONE.

}

// Not ready yet: keep waiting. This usually happens after a 100 ms wait times out.

return STANDARD_CAPTURE_WAIT;

}
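The two-condition wait with a timeout budget can be modeled with the standard library. This is a minimal sketch under stated assumptions, not the framework implementation: `CaptureWaiter` and `WaitResult` are hypothetical names, `std::condition_variable` stands in for Android's `Condition`, and the timeout budget and wait unit are constructor parameters rather than the framework constants.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

enum class WaitResult { KeepWaiting, Done, TimedOut };

// Hypothetical, simplified model of the STANDARD_CAPTURE_WAIT step.
class CaptureWaiter {
public:
    CaptureWaiter(int maxTimeouts, std::chrono::milliseconds unit)
        : timeoutsLeft_(maxTimeouts), unit_(unit) {}

    // Called from the frame-processor side when result metadata arrives.
    void onResultAvailable() {
        std::lock_guard<std::mutex> lock(mutex_);
        frameReceived_ = true;
        frameSignal_.notify_one();
    }

    // Called from the JPEG-processor side when the image buffer arrives.
    void onCaptureAvailable() {
        std::lock_guard<std::mutex> lock(mutex_);
        captureReceived_ = true;
        captureSignal_.notify_one();
    }

    // One pass of the state handler: wait at most one unit per condition.
    WaitResult step() {
        std::unique_lock<std::mutex> lock(mutex_);
        // wait_for with a predicate returns false only if the wait timed out
        // while the predicate was still false.
        if (!frameSignal_.wait_for(lock, unit_, [this] { return frameReceived_; })) {
            --timeoutsLeft_;
        }
        if (frameReceived_ &&
            !captureSignal_.wait_for(lock, unit_, [this] { return captureReceived_; })) {
            --timeoutsLeft_;
        }
        if (timeoutsLeft_ <= 0) return WaitResult::TimedOut;
        if (frameReceived_ && captureReceived_) {
            frameReceived_ = false;      // reset both flags for the next capture
            captureReceived_ = false;
            return WaitResult::Done;
        }
        return WaitResult::KeepWaiting;
    }

private:
    std::mutex mutex_;
    std::condition_variable frameSignal_;
    std::condition_variable captureSignal_;
    bool frameReceived_ = false;
    bool captureReceived_ = false;
    int timeoutsLeft_;
    std::chrono::milliseconds unit_;
};
```

Returning KeepWaiting after a single timed-out unit, instead of blocking for the whole budget in one call, keeps each pass of the state handler short, so the thread loop regains control roughly once per unit.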

5. Capture state machine: manageDone()

CaptureSequencer::CaptureState CaptureSequencer::manageDone(sp<Camera2Client> &client) {

status_t res = OK;

ATRACE_CALL();

mCaptureId++;

if (mCaptureId >= Camera2Client::kCaptureRequestIdEnd) {

mCaptureId = Camera2Client::kCaptureRequestIdStart;

}

{

Mutex::Autolock l(mInputMutex);

mBusy = false;

}

int takePictureCounter = 0;

{

SharedParameters::Lock l(client->getParameters());

switch (l.mParameters.state) {

case Parameters::DISCONNECTED:

ALOGW("%s: Camera %d: Discarding image data during shutdown ",

__FUNCTION__, client->getCameraId());

res = INVALID_OPERATION;

break;

case Parameters::STILL_CAPTURE:

res = client->getCameraDevice()->waitUntilDrained();

if (res != OK) {

ALOGE("%s: Camera %d: Can't idle after still capture: "

"%s (%d)", __FUNCTION__, client->getCameraId(),

strerror(-res), res);

}

l.mParameters.state = Parameters::STOPPED;

break;

case Parameters::VIDEO_SNAPSHOT:

l.mParameters.state = Parameters::RECORD;

break;

default:

ALOGE("%s: Camera %d: Still image produced unexpectedly "

"in state %s!",

__FUNCTION__, client->getCameraId(),

Parameters::getStateName(l.mParameters.state));

res = INVALID_OPERATION;

}

takePictureCounter = l.mParameters.takePictureCounter;

}

sp<ZslProcessorInterface> processor = mZslProcessor.promote();

if (processor != 0) {

ALOGV("%s: Memory optimization, clearing ZSL queue",

__FUNCTION__);

processor->clearZslQueue();

}

/**

* Fire the jpegCallback in Camera#takePicture(..., jpegCallback)

*/

if (mCaptureBuffer != 0 && res == OK) {

ATRACE_ASYNC_END(Camera2Client::kTakepictureLabel, takePictureCounter);

Camera2Client::SharedCameraCallbacks::Lock

l(client->mSharedCameraCallbacks);

ALOGV("%s: Sending still image to client", __FUNCTION__);

if (l.mRemoteCallback != 0) {// Deliver the image data to the upper layer, which makes its own copy.

l.mRemoteCallback->dataCallback(CAMERA_MSG_COMPRESSED_IMAGE,

mCaptureBuffer, NULL);

} else {

ALOGV("%s: No client!", __FUNCTION__);

}

}

mCaptureBuffer.clear();

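return IDLE;// The capture state machine returns to IDLE.

}

6. Capture state machine handler table:

The table also contains the ZSL state handlers; the ZSL state machine itself is left for the next post.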

const CaptureSequencer::StateManager

CaptureSequencer::kStateManagers[CaptureSequencer::NUM_CAPTURE_STATES-1] = {

&CaptureSequencer::manageIdle,

&CaptureSequencer::manageStart,

&CaptureSequencer::manageZslStart,

&CaptureSequencer::manageZslWaiting,

&CaptureSequencer::manageZslReprocessing,

&CaptureSequencer::manageStandardStart,

&CaptureSequencer::manageStandardPrecaptureWait,

&CaptureSequencer::manageStandardCapture,

&CaptureSequencer::manageStandardCaptureWait,

&CaptureSequencer::manageBurstCaptureStart,

&CaptureSequencer::manageBurstCaptureWait,

&CaptureSequencer::manageDone,

};

The capture state machine is as follows:
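The STILL_CAPTURE path through such a handler table can be traced with a toy model. The sketch below is an assumption-laden simplification: `MiniSequencer` is a hypothetical class, its handlers only return the next state, the ZSL and burst states are left out, and the precapture wait is assumed to always end with AE converged.

```cpp
#include <cassert>
#include <vector>

enum State { IDLE, START, STANDARD_START, STANDARD_PRECAPTURE_WAIT,
             STANDARD_CAPTURE, STANDARD_CAPTURE_WAIT, DONE, NUM_STATES };

// Hypothetical, heavily simplified sequencer: each handler only picks the next state.
class MiniSequencer {
public:
    explicit MiniSequencer(bool aeConverged) : aeConverged_(aeConverged) {}

    // Runs one capture from START until the machine is back in IDLE
    // and returns the sequence of states visited.
    std::vector<State> runOneCapture() {
        std::vector<State> trace;
        State s = START;
        while (s != IDLE) {
            trace.push_back(s);
            s = (this->*kHandlers[s])();   // dispatch through the handler table
        }
        trace.push_back(IDLE);
        return trace;
    }

private:
    typedef State (MiniSequencer::*Handler)();
    static const Handler kHandlers[NUM_STATES];

    State manageIdle() { return IDLE; }
    State manageStart() { return STANDARD_START; }
    State manageStandardStart() {
        return aeConverged_ ? STANDARD_CAPTURE : STANDARD_PRECAPTURE_WAIT;
    }
    // Assumption: the wait always ends with AE converged.
    State managePrecaptureWait() { aeConverged_ = true; return STANDARD_CAPTURE; }
    State manageStandardCapture() { return STANDARD_CAPTURE_WAIT; }
    State manageStandardCaptureWait() { return DONE; }
    State manageDone() { return IDLE; }

    bool aeConverged_;
};

// One handler per state, in the same order as the enum.
const MiniSequencer::Handler MiniSequencer::kHandlers[NUM_STATES] = {
    &MiniSequencer::manageIdle,
    &MiniSequencer::manageStart,
    &MiniSequencer::manageStandardStart,
    &MiniSequencer::managePrecaptureWait,
    &MiniSequencer::manageStandardCapture,
    &MiniSequencer::manageStandardCaptureWait,
    &MiniSequencer::manageDone,
};
```

With AE already converged the trace is START, STANDARD_START, STANDARD_CAPTURE, STANDARD_CAPTURE_WAIT, DONE, IDLE; otherwise STANDARD_PRECAPTURE_WAIT is visited between STANDARD_START and STANDARD_CAPTURE.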

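III. When the frame-available listeners are called

1. When the result-available notification onResultAvailable() is called

We trace the call chain backwards, starting from the callback itself.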

void CaptureSequencer::onResultAvailable(const CaptureResult &result) {

ATRACE_CALL();

ALOGV("%s: New result available.", __FUNCTION__);

Mutex::Autolock l(mInputMutex);

mNewFrameId = result.mResultExtras.requestId;

mNewFrame = result.mMetadata;

if (!mNewFrameReceived) {

mNewFrameReceived = true;

mNewFrameSignal.signal();

}

}

As shown below, processListeners() finds the matching frame listeners and then invokes their onResultAvailable() callbacks.

status_t FrameProcessorBase::processListeners(const CaptureResult &result,

const sp<CameraDeviceBase> &device) {

//------------

entry = result.mMetadata.find(ANDROID_REQUEST_ID);

int32_t requestId = entry.data.i32[0];

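// Read the requestId from the data returned by the HAL and use it to find the listeners for the current frame.

// Each listener found then has its onResultAvailable() method called, as below.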

List<sp<FilteredListener> >::iterator item = listeners.begin();

for (; item != listeners.end(); item++) {

(*item)->onResultAvailable(result);

}

processSingleFrame() calls processListeners() directly.

bool FrameProcessorBase::processSingleFrame(CaptureResult &result,

const sp<CameraDeviceBase> &device) {

ALOGV("%s: Camera %d: Process single frame (is empty? %d)",

__FUNCTION__, device->getId(), result.mMetadata.isEmpty());

return processListeners(result, device) == OK;

}

// which in turn is called from processNewFrames():

void FrameProcessorBase::processNewFrames(const sp<CameraDeviceBase> &device) {

status_t res;

ATRACE_CALL();

CaptureResult result;

// Fetch the result object from the device, i.e. the frame data returned by the HAL.

while ( (res = device->getNextResult(&result)) == OK) {

// TODO: instead of getting frame number from metadata, we should read

// this from result.mResultExtras when CameraDeviceBase interface is fixed.

camera_metadata_entry_t entry;

entry = result.mMetadata.find(ANDROID_REQUEST_FRAME_COUNT);

// Some error checks are omitted here; they do not affect the analysis.

if (!processSingleFrame(result, device)) {

break;

}

}

return;

}

The two snippets above merely show the call flow.

bool FrameProcessorBase::threadLoop() {

status_t res;

sp<CameraDeviceBase> device;

{

device = mDevice.promote();

if (device == 0) return false;

}

res = device->waitForNextFrame(kWaitDuration);

if (res == OK) {

processNewFrames(device);

} else if (res != TIMED_OUT) {

ALOGE("FrameProcessorBase: Error waiting for new "

"frames: %s (%d)", strerror(-res), res);

}

return true;

}

The thread loop calls Camera3Device's waitForNextFrame() directly to wait for a frame result.

status_t Camera3Device::waitForNextFrame(nsecs_t timeout) {

status_t res;

Mutex::Autolock l(mOutputLock);

while (mResultQueue.empty()) {

res = mResultSignal.waitRelative(mOutputLock, timeout);

if (res == TIMED_OUT) {

return res;

} else if (res != OK) {

ALOGW("%s: Camera %d: No frame in %" PRId64 " ns: %s (%d)",

__FUNCTION__, mId, timeout, strerror(-res), res);

return res;

}

}

return OK;

}

This method waits on the mResultSignal condition. So when is it signaled? By the two functions below, both of which run when the HAL calls back with data.

bool Camera3Device::processPartial3AResult(

uint32_t frameNumber,

const CameraMetadata& partial, const CaptureResultExtras& resultExtras) {

//------------- N lines of code omitted -------------

// We only send the aggregated partial when all 3A related metadata are available

// For both API1 and API2.

// TODO: we probably should pass through all partials to API2 unconditionally.

mResultSignal.signal();

}

void Camera3Device::sendCaptureResult(CameraMetadata &pendingMetadata,

CaptureResultExtras &resultExtras,

CameraMetadata &collectedPartialResult,

uint32_t frameNumber) {

//------------- N lines of code omitted -------------

mResultSignal.signal();

}

So whenever HAL3 delivers a frame, the onResultAvailable() callback ends up being called.
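The relationship between the result queue and its signal is a classic producer/consumer hand-off. A minimal sketch follows, assuming a plain `int` frame number in place of CaptureResult; `ResultQueue` and its method names are hypothetical and only loosely mirror waitForNextFrame() and getNextResult().

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <deque>
#include <mutex>

// Hypothetical model of the mResultQueue / mResultSignal pair.
class ResultQueue {
public:
    // Producer side (HAL callback path): enqueue a result and wake a waiter.
    void push(int frameNumber) {
        std::lock_guard<std::mutex> lock(mutex_);
        queue_.push_back(frameNumber);
        signal_.notify_one();
    }

    // Consumer side: returns true if a result is available within the timeout.
    bool waitForNext(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        return signal_.wait_for(lock, timeout, [this] { return !queue_.empty(); });
    }

    // Pops the oldest result into *out; returns false if the queue is empty.
    bool getNext(int* out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (queue_.empty()) return false;
        *out = queue_.front();
        queue_.pop_front();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable signal_;
    std::deque<int> queue_;
};
```

A consumer thread would call waitForNext() in its loop and, on success, drain the queue with getNext() until it returns false, which is the same shape as threadLoop() followed by processNewFrames().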

2. When the capture-available notification onCaptureAvailable() is called

void CaptureSequencer::onCaptureAvailable(nsecs_t timestamp,

sp<MemoryBase> captureBuffer) {

ATRACE_CALL();

ALOGV("%s", __FUNCTION__);

Mutex::Autolock l(mInputMutex);

mCaptureTimestamp = timestamp;

mCaptureBuffer = captureBuffer;

if (!mNewCaptureReceived) {

mNewCaptureReceived = true;

mNewCaptureSignal.signal();

}

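}

First, what does the capture-available notification do? It does four things, all visible in the code above:

- 1. Records the timestamp of the current frame.

- 2. Stores the buffer of the current frame.

- 3. Sets the mNewCaptureReceived flag to true.

- 4. Signals mNewCaptureSignal, the condition the capture state machine has been waiting on.

This method is called from the JpegProcessor thread.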

status_t JpegProcessor::processNewCapture() {

ATRACE_CALL();

status_t res;

sp<Camera2Heap> captureHeap;

sp<MemoryBase> captureBuffer;

CpuConsumer::LockedBuffer imgBuffer;

// Because the BufferQueue was set up to allow only one buffer,

// the buffer acquired below must be the JPEG image buffer.

res = mCaptureConsumer->lockNextBuffer(&imgBuffer);

// Some code is omitted here; it does not affect the analysis.

// mCaptureHeap below is the anonymous shared memory (ashmem) created when the JPEG stream was updated.

// TODO: Optimize this to avoid memcopy

captureBuffer = new MemoryBase(mCaptureHeap, 0, jpegSize);

void* captureMemory = mCaptureHeap->getBase();

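// Copy the image data into the anonymous shared memory. It is not clear to the author why this step is needed,

// since the ION buffer could be used directly.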

memcpy(captureMemory, imgBuffer.data, jpegSize);

// Release the buffer, returning it to the BufferQueue.

mCaptureConsumer->unlockBuffer(imgBuffer);

sp<CaptureSequencer> sequencer = mSequencer.promote();

if (sequencer != 0) {

// This is where onCaptureAvailable() is called, with the buffer's

// timestamp and the buffer itself passed in.

sequencer->onCaptureAvailable(imgBuffer.timestamp, captureBuffer);

}

return OK;

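}

The details are in the code comments: the method just copies the JPEG buffer and passes it up to the CaptureSequencer. Next, where is processNewCapture() called? As shown below, it is called in a loop from the JpegProcessor thread function.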
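The copy-then-notify pattern of processNewCapture() can be shown in isolation. This is a sketch only: `CaptureSink` and `deliverCapture` are hypothetical, a `std::vector` stands in for the shared-memory heap, `std::weak_ptr::lock()` plays the role of `wp<>::promote()`, and the sink copies the bytes again purely to keep the example simple.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <memory>
#include <vector>

// Hypothetical stand-in for the CaptureSequencer side of the callback.
struct CaptureSink {
    int64_t timestamp = 0;
    std::vector<uint8_t> jpeg;
    void onCaptureAvailable(int64_t ts, const uint8_t* data, std::size_t size) {
        timestamp = ts;
        jpeg.assign(data, data + size);  // copied here only for simplicity
    }
};

// Copies a JPEG out of a short-lived source buffer into a longer-lived heap,
// then notifies the sink if it is still alive.
// Returns false if the size is invalid for the heap or the sink is gone.
bool deliverCapture(const uint8_t* src, std::size_t jpegSize, int64_t timestamp,
                    std::vector<uint8_t>& heap,
                    const std::weak_ptr<CaptureSink>& sink) {
    if (jpegSize == 0 || jpegSize > heap.size()) return false;
    std::memcpy(heap.data(), src, jpegSize);       // the copy step
    // At this point the source buffer could be handed back to its queue.
    std::shared_ptr<CaptureSink> s = sink.lock();  // like wp<>::promote()
    if (!s) return false;
    s->onCaptureAvailable(timestamp, heap.data(), jpegSize);
    return true;
}
```

Copying before releasing the source buffer is what allows the single-buffer queue to be refilled immediately, at the cost of one memcpy per picture.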

bool JpegProcessor::threadLoop() {

status_t res;

{

Mutex::Autolock l(mInputMutex);

while (!mCaptureAvailable) {

// Wait up to 100 ms for the mCaptureAvailableSignal condition

res = mCaptureAvailableSignal.waitRelative(mInputMutex,

kWaitDuration);

if (res == TIMED_OUT) return true;

}

mCaptureAvailable = false;

}

do {// Captures are processed in this loop

res = processNewCapture();

} while (res == OK);

return true;

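}

The JpegProcessor thread keeps waiting for mCaptureAvailableSignal. So where is it signaled?

JpegProcessor created a BufferQueue when it updated the JPEG stream and registered itself as the frame-available listener, so onFrameAvailable() is called every time a buffer is enqueued. An enqueue means the frame data has been queued into the BufferQueue and the consumer can now acquire it.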

void JpegProcessor::onFrameAvailable(const BufferItem& /*item*/) {

Mutex::Autolock l(mInputMutex);

if (!mCaptureAvailable) {

mCaptureAvailable = true;

mCaptureAvailableSignal.signal();// mCaptureAvailableSignal is signaled here.

}

}

Summary:

- 1. When the HAL returns the JPEG data to the framework, onCaptureAvailable() is called; it updates some state and signals the relevant Condition variables.