Setting up an NFS service on ECS Linux to share a disk

At present, a cloud disk cannot be attached to several servers at the same time; each disk can be attached to, and used by, only one server.

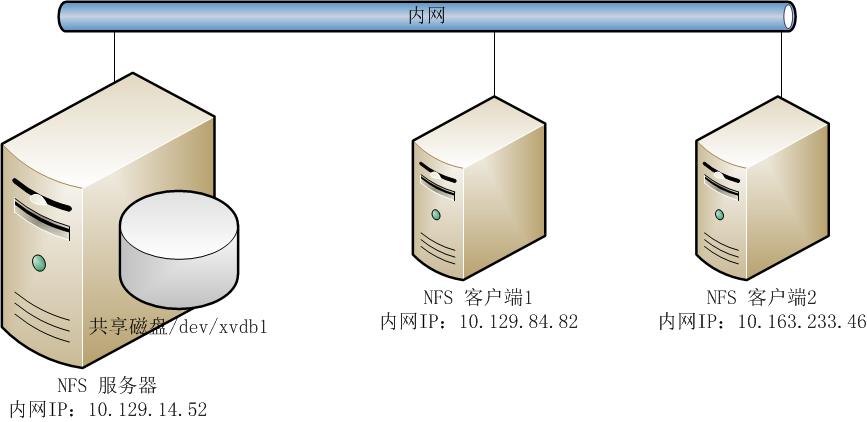

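However, by setting up an NFS service you can share one disk with several servers over the intranet (by default, servers under the same account and in the same region can reach each other over the intranet).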

The environment used for this NFS setup is as follows:

System environment:

Both the server and the clients run 64-bit CentOS 6.5.

[[email protected] ~]# cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

[[email protected] ~]# uname -a

Linux iZ28c6rit3oZ 2.6.32-431.23.3.el6.x86_64 #1 SMP Thu Jul 31 17:20:51 UTC 2014 x86_64 x86_64 x86_64 GNU/Linux

Suppose the /dev/xvdb1 disk on server 10.129.14.52 needs to be shared with the two servers 10.129.84.82 and 10.163.233.46.

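NFS installation:

The NFS server and the clients install the same packages, so only the server-side installation is shown here.

ECS Linux servers created from the default public images do not have the components that NFS needs. For images from the image marketplace, check first whether they are already installed.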

rpm -qa | grep "rpcbind" # Check whether the rpcbind package is installed.

yum -y install rpcbind # Install rpcbind manually.

# Output when the installation completes:

Installed:

rpcbind.x86_64 0:0.2.0-11.el6

Dependency Installed:

libgssglue.x86_64 0:0.1-11.el6 libtirpc.x86_64 0:0.2.1-10.el6

Complete!

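rpm -qa | grep "rpcbind" # Confirm that rpcbind was installed.

rpcbind-0.2.0-11.el6.x86_64 # rpcbind is now installed.

rpm -qa | grep "nfs" # Check whether the nfs packages are installed; a default CentOS 6.5 system does not include them.

yum -y install nfs-utils # Install the nfs packages manually.

# Output when the installation succeeds: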

Installed:

nfs-utils.x86_64 1:1.2.3-64.el6

Dependency Installed:

keyutils.x86_64 0:1.4-5.el6 libevent.x86_64 0:1.4.13-4.el6

nfs-utils-lib.x86_64 0:1.1.5-11.el6 python-argparse.noarch 0:1.2.1-2.1.el6

Dependency Updated:

keyutils-libs.x86_64 0:1.4-5.el6

Complete!

rpm -qa | grep "nfs" # Confirm that the nfs packages were installed.

nfs-utils-lib-1.1.5-11.el6.x86_64

nfs-utils-1.2.3-64.el6.x86_64
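
The check-then-install steps above can be wrapped in a small script so that they run the same way on the server and on each client. The following is a minimal sketch rather than part of the original procedure: `ensure_pkg` is a hypothetical helper name, and it assumes a yum-based system such as the CentOS 6.5 hosts used here. It relies on `rpm -q` returning a non-zero status when a package is not installed.

```shell
#!/bin/sh
# Sketch: install a package only when rpm reports it as missing.
# ensure_pkg is a hypothetical helper name; rpm and yum are the tools
# already used in the steps above.
ensure_pkg() {
    pkg="$1"
    if rpm -q "$pkg" >/dev/null 2>&1; then
        # rpm -q exits with status 0 when the package is installed
        echo "$pkg already installed"
    else
        yum -y install "$pkg"
    fi
}
```

Running `ensure_pkg rpcbind` and then `ensure_pkg nfs-utils` as root should leave both packages installed; a second run only prints the "already installed" messages.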

Disk layout and mounting:

[[email protected] ~]# fdisk -l

Disk /dev/xvda: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00078f9c

Device Boot Start End Blocks Id System

/dev/xvda1 * 1 2611 20970496 83 Linux

Disk /dev/xvdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

[[email protected] ~]# fdisk /dev/xvdb

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xb0112161.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): p

Disk /dev/xvdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xb0112161

Device Boot Start End Blocks Id System

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-652, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-652, default 652):

Using default value 652

Command (m for help): p

Disk /dev/xvdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xb0112161

Device Boot Start End Blocks Id System

/dev/xvdb1 1 652 5237158+ 83 Linux

Command (m for help): wq

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[[email protected] ~]# mkfs.ext4 /dev/xvdb1

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

327680 inodes, 1309289 blocks

65464 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=1342177280

40 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 38 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[[email protected] ~]# cp -a /etc/fstab /etc/fstab.bak

[[email protected] ~]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Aug 14 21:16:42 2014

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=94e4e384-0ace-437f-bc96-057dd64f42ee / ext4 defaults,barrier=0 1 1

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

/dev/xvdb1 /mnt ext4 defaults 0 0

[[email protected] ~]# mount -a

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

/dev/xvdb1 5.0G 138M 4.6G 3% /mnt

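The output shows that the disk has been partitioned and mounted on /mnt.

For questions about disk partitioning, see the disk and partition topics in the Help Center.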
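
Device names such as /dev/xvdb1 can change when disks are attached in a different order, so some administrators identify the filesystem by UUID in /etc/fstab, as the root filesystem entry above already does. The following is a minimal sketch of that variation, not the method used in this article: `fstab_line` is a hypothetical helper, and the commented usage relies on `blkid -s UUID -o value`, which prints only the UUID of a device.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab entry that identifies the filesystem by UUID.
# fstab_line is a hypothetical helper; arguments: UUID, mount point, fs type.
fstab_line() {
    printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

# Intended use as root, assuming /dev/xvdb1 was formatted as shown above:
#   uuid=$(blkid -s UUID -o value /dev/xvdb1)
#   fstab_line "$uuid" /mnt ext4 >> /etc/fstab
#   mount -a
```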

Creating the shared directory on the NFS server:

[[email protected] ~]# mkdir /mnt/share # Create the shared directory /mnt/share

[[email protected] ~]# vim /etc/exports # Edit /etc/exports to export the shared directory

/mnt/share *(rw,sync,no_root_squash)

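Notes:

Format of each line in /etc/exports:

<export directory> [client1(access options,user mapping,other)] [client2(access options,user mapping,other)]

a. Export directory:

The export directory is the directory on the NFS server that is shared with clients.

b. Client:

A client is a computer on the network that is allowed to access this NFS export.

Common ways to specify clients:

A host by IP address: 192.168.0.200

All hosts in a subnet: 192.168.0.0/24 or 192.168.0.0/255.255.255.0

A host by domain name: david.bsmart.cn

All hosts in a domain: *.bsmart.cn

All hosts: *

c. Options:

Options set the access permissions, user mapping, and other behavior of the export.

NFS options fall into three main groups:

Access options

Export the directory read-only: ro

Export the directory read-write: rw

User-mapping options

anongid=xxx: use the local group with GID xxx as the anonymous group account that remote groups are mapped to;

all_squash: map all remote users and their groups to the anonymous user and group (nfsnobody);

anonuid=xxx: use the local user with UID xxx as the anonymous user that remote users are mapped to;

no_root_squash: the opposite of root_squash;

no_all_squash: the opposite of all_squash (default);

root_squash: map the remote root user and its group to the anonymous user and group (default);

Other options

async: keep data in a memory buffer first and write it to disk only when necessary;

insecure: allow clients to connect to the server from TCP/IP ports 1024 and above;

no_subtree_check: even if the export is a subdirectory, the NFS server does not check the permissions of its parent directories, which improves efficiency (the default since nfs-utils 1.1.0);

no_wdelay: perform write operations immediately; intended for use together with sync;

secure: require clients to connect to the NFS server from TCP/IP ports below 1024 (default);

subtree_check: if the export is a subdirectory, the NFS server checks the permissions of its parent directories (the default in nfs-utils releases before 1.1.0);

sync: write data to the memory buffer and to disk synchronously; slower, but it keeps the data consistent;

wdelay: check whether related writes are pending and, if so, perform them together, which improves efficiency (default);

Each client must be followed immediately, with no space in between, by parentheses that define its access properties, such as access permissions.

For example:

172.16.0.0/16(ro,async) 192.168.0.0/24(rw,sync)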
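
The export used in this article names the client as `*`, which lets any host that can reach the server mount the share. As an illustration of the client syntax described above, the following minimal sketch builds an /etc/exports line that lists only the two client addresses from this scenario. `exports_line` is a hypothetical helper, not part of nfs-utils; `exportfs -ra`, which is part of nfs-utils, re-reads /etc/exports after it changes.

```shell
#!/bin/sh
# Sketch: build one /etc/exports line from a directory, an option string,
# and a list of clients. exports_line is a hypothetical helper name.
exports_line() {
    dir="$1"
    opts="$2"
    shift 2
    line="$dir"
    for client in "$@"; do
        # No space between the client and its parenthesized options
        line="$line $client($opts)"
    done
    printf '%s\n' "$line"
}

# Intended use as root on the NFS server:
#   exports_line /mnt/share rw,sync,no_root_squash 10.129.84.82 10.163.233.46
#   (put the printed line in /etc/exports in place of the "*" entry)
#   exportfs -ra    # re-export everything listed in /etc/exports
```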

[[email protected] ~]# /etc/init.d/rpcbind start # Start the rpcbind service

Starting rpcbind: [ OK ]

[[email protected] ~]# /etc/init.d/nfs start # Start the nfs service

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

[ OK ]

Starting NFS daemon: rpc.nfsd: address family inet6 not supported by protocol TCP

[ OK ]

Starting RPC idmapd: [ OK ]

[[email protected] ~]# chkconfig rpcbind on # Start rpcbind automatically at boot

[[email protected] ~]# chkconfig nfs on # Start nfs automatically at boot
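
After the services start, `rpcinfo -p` lists the RPC programs registered with rpcbind, which is one way to confirm that nfs and mountd are actually available. The following is a minimal sketch: `rpc_registered` is a hypothetical helper, and it assumes that the service name is the last column of each row of the `rpcinfo -p` listing.

```shell
#!/bin/sh
# Sketch: succeed if a named RPC service appears in `rpcinfo -p` output.
# rpc_registered is a hypothetical helper; it reads the listing on stdin
# and assumes the service name is the last column of each row.
rpc_registered() {
    awk -v svc="$1" '$NF == svc { found = 1 } END { exit !found }'
}

# Intended use on the NFS server:
#   rpcinfo -p localhost | rpc_registered nfs    && echo "nfs is registered"
#   rpcinfo -p localhost | rpc_registered mountd && echo "mountd is registered"
```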

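Mounting the server's shared directory on the NFS clients:

Client 1 mounts the NFS share from the server:

The steps are the same as on the server, so they are not explained again here; if anything is unclear, see the NFS server installation notes above.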

[[email protected] ~]# yum -y install rpcbind

[[email protected] ~]# rpm -qa | grep rpcbind

rpcbind-0.2.0-11.el6.x86_64

[[email protected] ~]# rpm -qa | grep nfs

[[email protected] ~]# yum -y install nfs-utils

[[email protected] ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[[email protected] ~]# /etc/init.d/nfs start

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

rpc.mountd: svc_tli_create: could not open connection for udp6

rpc.mountd: svc_tli_create: could not open connection for tcp6

[ OK ]

Starting NFS daemon: rpc.nfsd: address family inet6 not supported by protocol TCP

[ OK ]

Starting RPC idmapd: [ OK ]

[[email protected] ~]# chkconfig rpcbind on

[[email protected] ~]# chkconfig nfs on

[[email protected] ~]# showmount -e 10.129.14.52 # List the directories exported by the NFS server

Export list for 10.129.14.52:

/mnt/share *

[[email protected] ~]# mount -t nfs 10.129.14.52:/mnt/share /mnt # Mount the directory exported by the NFS server

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

10.129.14.52:/mnt/share

5.0G 138M 4.6G 3% /mnt

The output shows that the directory shared by the server has been mounted successfully.
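
Instead of reading the `df -h` output by eye, a script can check the mount table directly. The following is a minimal sketch: `nfs_mounted_at` is a hypothetical helper that scans /proc/mounts (or a file in the same format, passed as an optional second argument) for an NFS filesystem mounted at a given mount point.

```shell
#!/bin/sh
# Sketch: succeed if an NFS filesystem is mounted at the given mount point.
# nfs_mounted_at is a hypothetical helper. The optional second argument names
# a file in /proc/mounts format and defaults to /proc/mounts itself.
nfs_mounted_at() {
    awk -v mp="$1" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}

# Intended use as root on a client:
#   nfs_mounted_at /mnt || mount -t nfs 10.129.14.52:/mnt/share /mnt
```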

Client 2 mounts the NFS share from the server:

The steps are the same as for client 1; if anything is unclear, see the notes for client 1.

[[email protected] ~]# cat /etc/issue

CentOS release 6.5 (Final)

Kernel \r on an \m

[[email protected] ~]# uname -a

Linux iZ28q9kp4g6Z 2.6.32-431.23.3.el6.x86_64 #1 SMP Thu Jul 31 17:20:51 UTC 2014 x86_64 x86_64 x86_64 GNU/Linux

[[email protected] ~]# ifconfig | grep inet

inet addr:10.163.233.46 Bcast:10.163.239.255 Mask:255.255.240.0

inet addr:121.42.27.129 Bcast:121.42.27.255 Mask:255.255.252.0

inet addr:127.0.0.1 Mask:255.0.0.0

[[email protected] ~]# showmount -e 10.129.14.52

Export list for 10.129.14.52:

/mnt/share *

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

[[email protected] ~]# mount -t nfs 10.129.14.52:/mnt/share /mnt

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

10.129.14.52:/mnt/share

5.0G 138M 4.6G 3% /mnt

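The output shows that the directory shared by the NFS server has been mounted successfully.

Next, create a file in the shared directory on the NFS server and check whether it can be accessed from the directories mounted on the clients.

NFS server: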

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

/dev/xvdb1 5.0G 138M 4.6G 3% /mnt

[[email protected] ~]# cd /mnt

[[email protected] mnt]# echo "This is a test file" >test.txt

[[email protected] mnt]# cat test.txt

This is a test file

[[email protected] mnt]# mv test.txt share/

[[email protected] mnt]# cd share/

[[email protected] share]# ls # The shared directory now contains a file named test.txt

test.txt

NFS clients:

NFS client 1:

[[email protected] ~]# ifconfig | grep inet

inet addr:10.129.84.82 Bcast:10.129.95.255 Mask:255.255.240.0

inet addr:42.96.202.186 Bcast:42.96.203.255 Mask:255.255.252.0

inet addr:127.0.0.1 Mask:255.0.0.0

[[email protected] ~]# cd /mnt

[[email protected] mnt]# ls

test.txt

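[[email protected] mnt]# cat test.txt # The file shared by the NFS server is readable on the NFS client

This is a test file

[[email protected] mnt]# vim test.txt # The NFS client can also modify files in the shared directory, because the server export grants clients write (rw) permission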

This is a test file

Client1 add test file.

Access from NFS client 2:

[[email protected] mnt]# cat test.txt

This is a test file

Client1 add test file.

[[email protected] ~]# cd /mnt/

[[email protected] mnt]# ls

test.txt

[[email protected] mnt]# cat test.txt

This is a test file

Client1 add test file.

[[email protected] mnt]# vim test.txt

This is a test file

Client1 add test file.

Client2 add test file too.
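
The manual edit with vim above can also be done non-interactively, which is convenient when several clients need to be checked. The following is a minimal sketch: `write_probe` and the file name `probe.txt` are hypothetical. It appends one marker line to a file in the given directory and confirms that the line can be read back.

```shell
#!/bin/sh
# Sketch: append a marker line to a probe file and confirm it reads back.
# write_probe and the file name probe.txt are hypothetical.
write_probe() {
    dir="$1"
    marker="probe from $(uname -n) at $(date +%s)"
    echo "$marker" >> "$dir/probe.txt" || return 1
    grep -qF "$marker" "$dir/probe.txt"
}

# Intended use on each client after mounting the share on /mnt:
#   write_probe /mnt && echo "share is writable"
#   cat /mnt/probe.txt    # lines written by other clients should appear here too
```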

Mounting the directory shared by the NFS server automatically at boot:

NFS client 1:

[[email protected] mnt]# ifconfig | grep inet

inet addr:10.129.84.82 Bcast:10.129.95.255 Mask:255.255.240.0

inet addr:42.96.202.186 Bcast:42.96.203.255 Mask:255.255.252.0

inet addr:127.0.0.1 Mask:255.0.0.0

[[email protected] mnt]# cp -a /etc/fstab /etc/fstab.bak

[[email protected] mnt]# vim /etc/fstab # Configure the directory shared by the NFS server to be mounted automatically at boot

#

# /etc/fstab

# Created by anaconda on Thu Aug 14 21:16:42 2014

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=94e4e384-0ace-437f-bc96-057dd64f42ee / ext4 defaults,barrier=0 1 1

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

10.129.14.52:/mnt/share /mnt nfs defaults 0 0

[[email protected] mnt]# mount -a

[[email protected] mnt]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

10.129.14.52:/mnt/share

5.0G 138M 4.6G 3% /mnt

[[email protected] ~]# cp -a /etc/rc.d/rc.local /etc/rc.d/rc.local.bak

[[email protected] ~]# vim /etc/rc.d/rc.local

#!/bin/sh

#

# This script will be executed *after* all the other init scripts.

# You can put your own initialization stuff in here if you don't

# want to do the full Sys V style init stuff.

touch /var/lock/subsys/local

mount -a

Reboot and check whether the share is mounted automatically:

init 6

[[email protected] ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/xvda1 20G 1.5G 18G 8% /

tmpfs 938M 0 938M 0% /dev/shm

10.129.14.52:/mnt/share

5.0G 138M 4.6G 3% /mnt

The output shows that the share was mounted automatically at boot.
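
An alternative to calling `mount -a` from rc.local is to mark the NFS entry in /etc/fstab with the `_netdev` mount option, which tells the boot scripts that the filesystem needs the network. On CentOS 6 the `netfs` init script normally mounts such entries once networking is up; that it is present and enabled on a given image is an assumption worth checking. The following is a minimal sketch of that variation, and `nfs_fstab_line` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab entry for an NFS share with the _netdev option.
# nfs_fstab_line is a hypothetical helper; arguments: server, export, mount point.
nfs_fstab_line() {
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}

# Intended use as root on a client (instead of the plain "defaults" entry):
#   nfs_fstab_line 10.129.14.52 /mnt/share /mnt >> /etc/fstab
#   chkconfig netfs on    # assumption: the netfs init script exists on this image
```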