Spark SQL job fails with a GC error

阿新 • Published: 2018-11-30

java.lang.OutOfMemoryError: GC overhead limit exceeded
    at org.apache.spark.unsafe.types.UTF8String.fromAddress(UTF8String.java:102)
    at org.apache.spark.sql.catalyst.expressions.UnsafeRow.getUTF8String(UnsafeRow.java:419)
    at org.apache.spark.sql.catalyst.expressions.JoinedRow.getUTF8String(JoinedRow.scala:102)
    at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificPredicate.eval(Unknown Source)
    at org.apache.spark.sql.execution.joins.SortMergeJoinExec$$anonfun$doExecute$1$$anonfun$1$$anonfun$apply$1.apply(SortMergeJoinExec.scala:114)
    at org.apache.spark.sql.execution.joins.SortMergeJoinExec$$anonfun$doExecute$1$$anonfun$1$$anonfun$apply$1.apply(SortMergeJoinExec.scala:114)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceBufferUntilBoundConditionSatisfied(SortMergeJoinExec.scala:874)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceStream(SortMergeJoinExec.scala:855)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceNext(SortMergeJoinExec.scala:881)
    at org.apache.spark.sql.execution.RowIteratorToScala.hasNext(RowIterator.scala:68)
    at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:408)
    at scala.collection.Iterator$class.foreach(Iterator.scala:893)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1336)
    at org.apache.spark.sql.hive.SparkHiveWriterContainer.writeToFile(hiveWriterContainers.scala:184)
    at org.apache.spark.sql.hive.execution.InsertIntoHiveTable$$anonfun$saveAsHiveFile$3.apply(InsertIntoHiveTable.scala:210)
    at org.apache.spark.sql.hive.execution.InsertIntoHiveTable$$anonfun$saveAsHiveFile$3.apply(InsertIntoHiveTable.scala:210)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
    at org.apache.spark.scheduler.Task.run(Task.scala:99)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:325)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)
18/11/26 17:24:37 INFO executor.Executor: Not reporting error to driver during JVM shutdown.
18/11/26 17:24:37 ERROR util.SparkUncaughtExceptionHandler: [Container in shutdown] Uncaught exception in thread Thread[Executor task launch worker for task 451,5,main]
java.lang.OutOfMemoryError: GC overhead limit exceeded
    at org.apache.spark.unsafe.types.UTF8String.fromAddress(UTF8String.java:102)
    at org.apache.spark.sql.catalyst.expressions.UnsafeRow.getUTF8String(UnsafeRow.java:419)
    at org.apache.spark.sql.catalyst.expressions.JoinedRow.getUTF8String(JoinedRow.scala:102)
    at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificPredicate.eval(Unknown Source)
    at org.apache.spark.sql.execution.joins.SortMergeJoinExec$$anonfun$doExecute$1$$anonfun$1$$anonfun$apply$1.apply(SortMergeJoinExec.scala:114)
    at org.apache.spark.sql.execution.joins.SortMergeJoinExec$$anonfun$doExecute$1$$anonfun$1$$anonfun$apply$1.apply(SortMergeJoinExec.scala:114)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceBufferUntilBoundConditionSatisfied(SortMergeJoinExec.scala:874)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceStream(SortMergeJoinExec.scala:855)
    at org.apache.spark.sql.execution.joins.OneSideOuterIterator.advanceNext(SortMergeJoinExec.scala:881)
    at org.apache.spark.sql.execution.RowIteratorToScala.hasNext(RowIterator.scala:68)
    at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:408)
    at scala.collection.Iterator$class.foreach(Iterator.scala:893)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1336)
    at org.apache.spark.sql.hive.SparkHiveWriterContainer.writeToFile(hiveWriterContainers.scala:184)
    at org.apache.spark.sql.hive.execution.InsertIntoHiveTable$$anonfun$saveAsHiveFile$3.apply(InsertIntoHiveTable.scala:210)
    at org.apache.spark.sql.hive.execution.InsertIntoHiveTable$$anonfun$saveAsHiveFile$3.apply(InsertIntoHiveTable.scala:210)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
    at org.apache.spark.scheduler.Task.run(Task.scala:99)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:325)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)

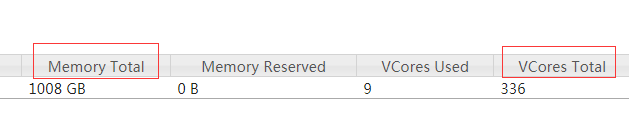

Cluster nodes: 20

Available memory: 1024 GB

Cores per node: 48

The job uses Spark SQL to read data and write it into a Hive table.

spark2-submit \
  --class com.lhx.dac.test \
  --master yarn \
  dac-test.jar

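Run with the default settings, the job used 800 GB of cluster memory and failed with the OOM error shown above.

Checking the Hive configuration in CM: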

--conf spark.yarn.driver.memoryOverhead=2048m \
--conf spark.yarn.executor.memoryOverhead=2048m \
--conf spark.dynamicAllocation.enabled=false \

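Checking the ratio of memory to cores gives 3:1.

The fix is to set parameters that give each executor more heap, so that garbage collection can keep up.

spark.executor.memory: raise this value to enlarge the executor heap (the default is given here as 512M and was raised to 2G; stock Spark 2.x documents a default of 1g).

The Spark command after the change: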

spark2-submit \
  --class com.lhx.dac.test \
  --master yarn \
  --deploy-mode cluster \
  --driver-memory 6g \
  --executor-memory 6g \
  --executor-cores 2 \
  --conf spark.yarn.driver.memoryOverhead=2048m \
  --conf spark.yarn.executor.memoryOverhead=2048m \
  dac-test.jar
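As a rough check on what these flags request, the sketch below adds the executor heap to the memory overhead to get the per-executor YARN container size, and divides the heap by the executor cores. The function names are illustrative and not part of Spark. The result is only the raw request, since YARN may round it up to its own allocation increment. With 6g and 2 cores the heap-to-core ratio works out to 3 GB per core, which is one possible reading of the 3:1 ratio mentioned earlier.

```shell
# Sketch: per-executor sizes implied by the submit flags (all values in MB).
# Function names are hypothetical helpers, not Spark or YARN commands.

# Container request = heap (--executor-memory) + off-heap overhead (memoryOverhead).
container_mb() {
  echo $(( $1 + $2 ))
}

# Heap available per core (concurrent task slot) inside one executor.
heap_per_core_mb() {
  echo $(( $1 / $2 ))
}

executor_heap_mb=$(( 6 * 1024 ))   # --executor-memory 6g
overhead_mb=2048                   # spark.yarn.executor.memoryOverhead=2048m
executor_cores=2                   # --executor-cores 2

echo "container size: $(container_mb "$executor_heap_mb" "$overhead_mb") MB"
echo "heap per core:  $(heap_per_core_mb "$executor_heap_mb" "$executor_cores") MB"
```

Fewer cores per executor means fewer tasks sharing the same heap at once, which is often as helpful against GC pressure as the larger heap itself.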

The cluster used a little over 900 GB of memory and the program ran successfully.
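To confirm that GC pressure really was the bottleneck, and that the new settings relieve it, GC logging can be switched on for the executors. The fragment below is a sketch that assumes a Java 8 or earlier HotSpot JVM (newer JVMs use `-Xlog:gc*` instead). It passes standard GC logging flags through `spark.executor.extraJavaOptions`. The rest of the command mirrors the one used in this post.

```shell
# Sketch: same job with executor GC logging enabled (Java 8 or earlier flags assumed).
# GC details are written to each executor's stdout log.
spark2-submit \
  --class com.lhx.dac.test \
  --master yarn \
  --deploy-mode cluster \
  --driver-memory 6g \
  --executor-memory 6g \
  --executor-cores 2 \
  --conf spark.yarn.driver.memoryOverhead=2048m \
  --conf spark.yarn.executor.memoryOverhead=2048m \
  --conf "spark.executor.extraJavaOptions=-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps" \
  dac-test.jar
```

Frequent full collections that reclaim very little heap are the pattern behind "GC overhead limit exceeded".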

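Summary: cluster memory is handed out dynamically as executors are requested, but each executor's JVM heap size is fixed when it starts, so it has to be set large enough up front.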