loss function之用Dice-coefficient loss function or cross-entropy

答案出處:https://stats.stackexchange.com/questions/321460/dice-coefficient-loss-function-vs-cross-entropy

cross entropy 是普遍使用的loss function,但是做分割的時候很多使用Dice, 二者的區別如下;

One compelling reason for using cross-entropy over dice-coefficient or the similar IoU metric is that the gradients are nicer.

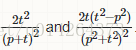

The gradients of cross-entropy wrt the logits is something like p−t, where p is the softmax outputs and t is the target. Meanwhile, if we try to write the dice coefficient in a differentiable form:

It's easy to imagine a case where both p and t are small, and the gradient blows up to some huge value. In general, it seems likely that training will become more unstable.

It's easy to imagine a case where both p and t are small, and the gradient blows up to some huge value. In general, it seems likely that training will become more unstable.

The main reason that people try to use dice coefficient or IoU directly is that the actual goal is maximization of those metrics

However, class imbalance is typically taken care of simply by assigning loss multipliers to each class, such that the network is highly disincentivized to simply ignore a class which appears infrequently, so it's unclear that Dice coefficient is really necessary in these cases.

I would start with cross-entropy loss, which seems to be the standard loss for training segmentation networks, unless there was a really compelling reason to use Dice coefficient.

簡單來說,就是DICE並沒有真正使結果更優秀,而是結果讓人看起來更優秀。