Kubernetes Autoscaling Across All Scenarios (Part 3): HPA in Practice

Preface

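The previous article introduced and analyzed how HPA is implemented, along with the thinking behind its evolution. This article explains how to use HPA and covers some details that deserve attention.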

autoscaling/v1 in practice

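The v1 template is probably the one you see most often, and it is also the simplest. The v1 HPA supports only one metric: CPU. Traditionally, autoscaling supports at least two metrics, CPU and memory, so why does Kubernetes expose only CPU? HPA was originally planned to support both, but development and testing showed that memory is not a very good signal for autoscaling decisions. Unlike CPU, many memory-intensive applications do not release memory quickly when HPA adds new containers, because their memory is managed by a language-level VM and reclamation is decided by the VM's GC. Differences in GC timing can therefore cause HPA to oscillate at inappropriate moments, which is why the v1 HPA supports CPU only.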

A standard v1 template looks roughly like this:

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50

Here scaleTargetRef identifies the object to be scaled, in this example an apps/v1 Deployment. targetCPUUtilizationPercentage means that a scale-out is triggered when the overall resource utilization exceeds 50%. Next, let's walk through a simple demo.
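As a rough illustration of what the 50% target means, the sketch below applies the proportional rule that HPA uses to pick a replica count, clamped to minReplicas and maxReplicas. The function name and the default tolerance of 0.1 are choices made for this sketch; it deliberately ignores unready pods, missing metrics, and the delays between successive scaling operations.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA proportional scaling rule for a single metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


# One pod running at 200% of its CPU request, with a 50% target.
print(desired_replicas(1, 200, 50))  # 4
# Four pods idling at 10% once the load stops.
print(desired_replicas(4, 10, 50))   # 1
```

With the template above, a single pod at 200% utilization would be scaled to four replicas, and four nearly idle pods would shrink back to one.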

- Log in to the Container Service console and create an application deployment. Choose to create it from a template, using the following template content.

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: php-apache
  labels:
    app: php-apache
spec:
  replicas: 1
  selector:
    matchLabels:
      app: php-apache
  template:
    metadata:
      labels:
        app: php-apache
    spec:
      containers:
      - name: php-apache
        image: registry.cn-hangzhou.aliyuncs.com/ringtail/hpa-example:v1.0
        ports:
        - containerPort: 80
        resources:
          requests:
            memory: "300Mi"
            cpu: "250m"
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    app: php-apache
spec:
  selector:
    app: php-apache
  ports:
  - protocol: TCP
    name: http
    port: 80
    targetPort: 80
  type: ClusterIP

- Deploy the HPA template for the application under test

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1beta1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50

- Start the load test

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: load-generator
  labels:
    app: load-generator
spec:
  replicas: 1
  selector:
    matchLabels:
      app: load-generator
  template:
    metadata:
      labels:
        app: load-generator
    spec:
      containers:
      - name: load-generator
        image: busybox
        command:
        - "sh"
        - "-c"
        - "while true; do wget -q -O- http://php-apache.default.svc.cluster.local; done"

- Check the scale-out status

- Stop the load-test application

- Check the scale-in status
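When checking the scale-out and scale-in status, it helps to remember that the percentage HPA compares against the 50% target is relative to the CPU request of the pods (250m in the Deployment above), not to node capacity. A minimal sketch of that calculation follows; the function name and sample usage figures are made up for illustration, and all pods are assumed to share the same request.

```python
def cpu_utilization_percent(usage_millicores, request_millicores):
    """CPU utilization across pods as a percentage of their CPU requests.

    usage_millicores: one CPU usage reading per pod, in millicores.
    request_millicores: the CPU request shared by every pod, in millicores.
    """
    if not usage_millicores:
        raise ValueError("no pod metrics available")
    total_usage = sum(usage_millicores)
    total_request = request_millicores * len(usage_millicores)
    # Integer percentage, truncated rather than rounded.
    return 100 * total_usage // total_request


# A single pod burning 500m against a 250m request is at 200%.
print(cpu_utilization_percent([500], 250))       # 200
# Two pods using 100m and 150m against 250m requests average 50%.
print(cpu_utilization_percent([100, 150], 250))  # 50
```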

That completes an HPA using autoscaling/v1. This is currently the simplest version of HPA, and it works whether or not the cluster has been upgraded to Metrics Server.

autoscaling/v2beta1 in practice

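The earlier article explained that HPA also has the autoscaling/v2beta1 and autoscaling/v2beta2 versions. The difference between them is that autoscaling/v2beta1 supports Resource Metrics and Custom Metrics, while autoscaling/v2beta2 additionally supports External Metrics. External Metrics are not covered in detail here, because the community does not yet have many mature implementations; the more mature option is Prometheus Custom Metrics.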

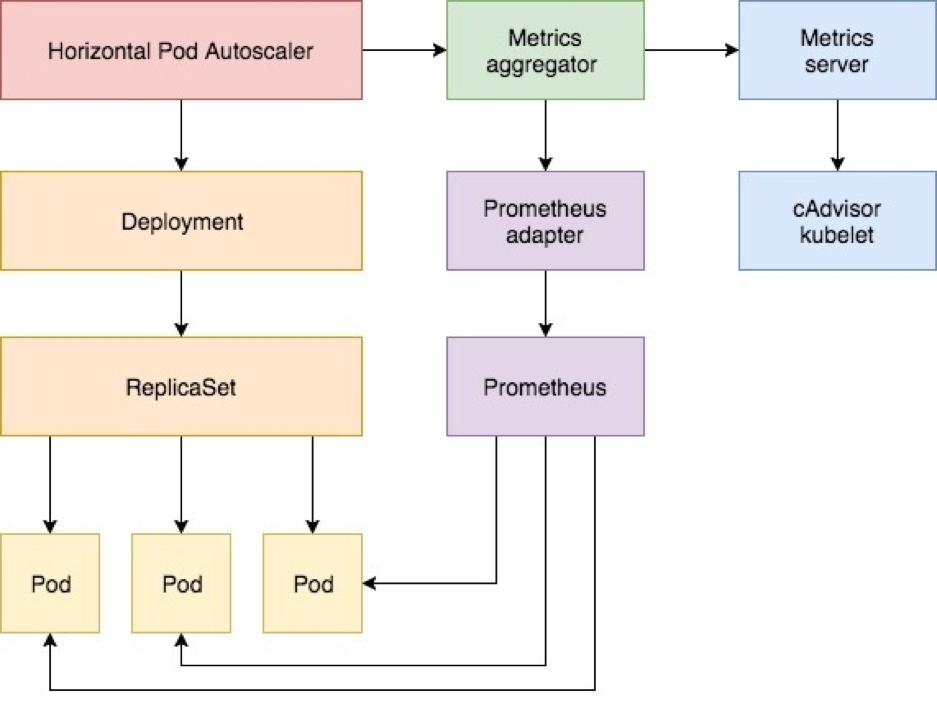

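The figure above shows how HPA consumes different types of metrics once Metrics Server is enabled. To use Custom Metrics, you need to install and configure a corresponding Custom Metrics Adapter. This article walks through an example that scales on QPS.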
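To make "scaling on QPS" concrete: an application usually exposes a request counter that only increases, and a per-second rate has to be derived from it before it can be compared with a target. The sketch below shows one simple way to do that from timestamped counter samples. The function name and sample data are hypothetical, and this is not the adapter's actual implementation; in particular, counter resets are not handled.

```python
def per_second_rate(samples):
    """Average per-second increase of a counter over a window.

    samples: (timestamp_seconds, counter_value) pairs, oldest first.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t0, v0), (t1, v1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("timestamps must increase")
    if v1 < v0:
        raise ValueError("counter decreased (reset?); not handled in this sketch")
    return (v1 - v0) / (t1 - t0)


# 6000 requests served over a 60-second window.
window = [(0, 1000), (30, 4200), (60, 7000)]
print(per_second_rate(window))  # 100.0
```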

1. Install Metrics Server and enable it in kube-controller-manager

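By default, Alibaba Cloud Container Service Kubernetes clusters still use Heapster; Container Service plans to move to Metrics Server in 1.12. One point needs special mention: although the community is gradually deprecating Heapster, a large number of community components still depend heavily on the Heapster API. Alibaba Cloud has therefore made Metrics Server fully compatible with Heapster, so developers can use the new Metrics Server features without worrying about other components going down.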

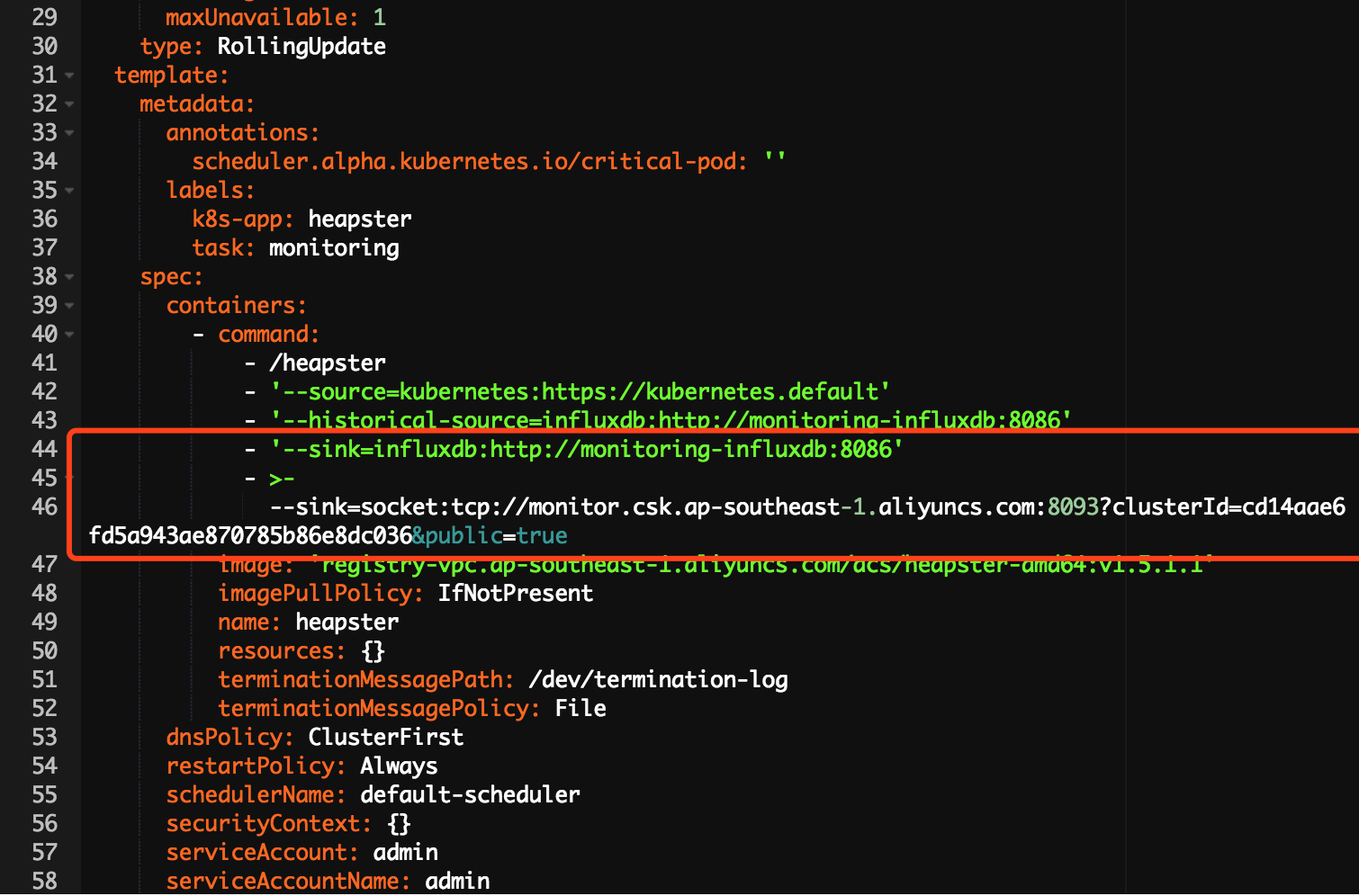

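Before deploying the new Metrics Server, first back up some of Heapster's startup parameters, because they will be used directly in the Metrics Server template. The two Sinks matter most: keep the first Sink if you need InfluxDB, and keep the second Sink if you need the CloudMonitor integration.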

Copy these two parameters into the Metrics Server startup template (this example keeps both for compatibility), then deploy it.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: 443
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: admin
      containers:
      - name: metrics-server
        image: registry.cn-hangzhou.aliyuncs.com/ringtail/metrics-server:1.1
        imagePullPolicy: Always
        command:
        - /metrics-server
        - '--source=kubernetes:https://kubernetes.default'
        - '--sink=influxdb:http://monitoring-influxdb:8086'
        - '--sink=socket:tcp://monitor.csk.[region_id].aliyuncs.com:8093?clusterId=[cluster_id]&public=true'

Next, modify the Heapster Service so that its backend points to Metrics Server instead of Heapster.

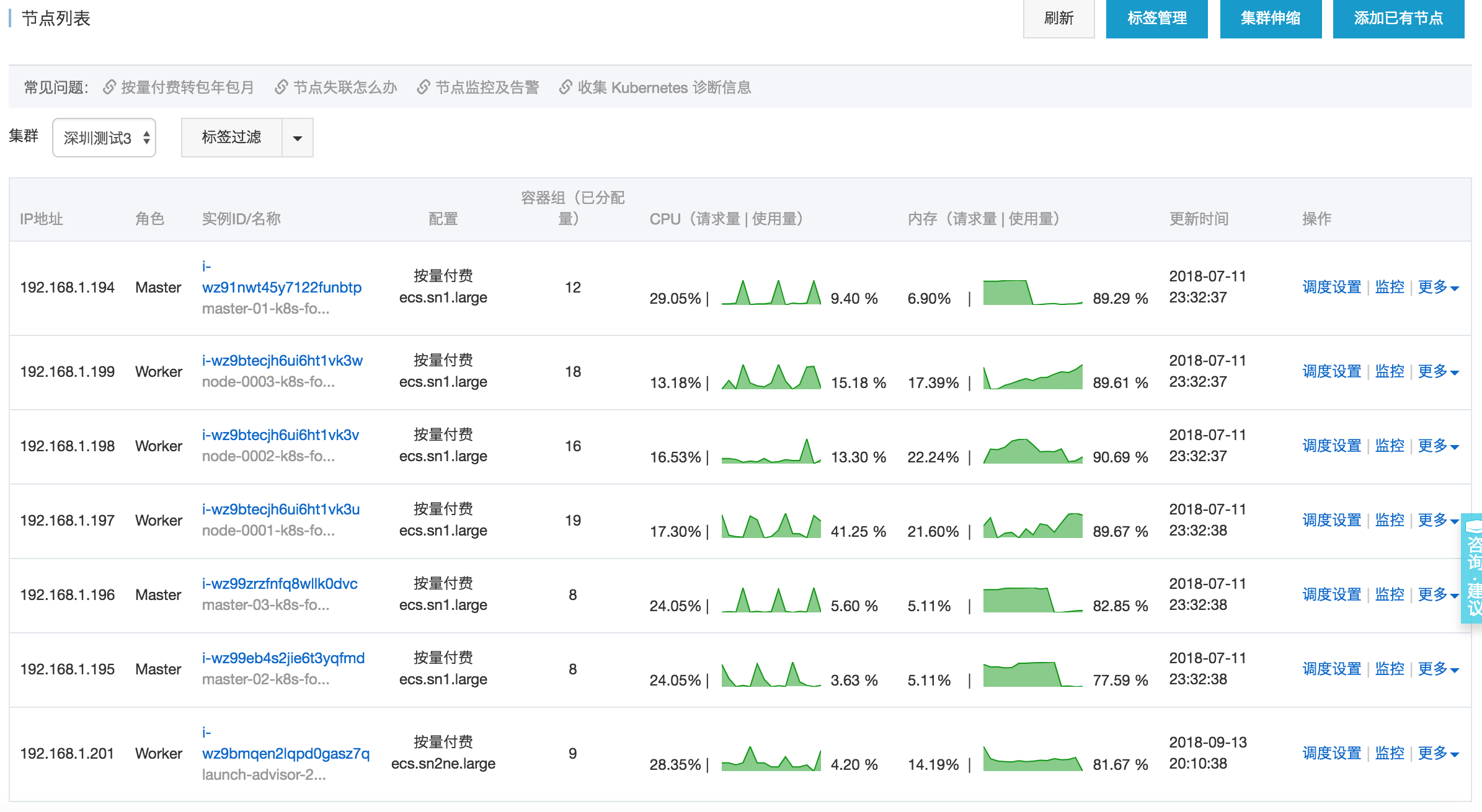

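If the monitoring information on the right side of the console's node page is available at this point, Metrics Server is already fully compatible with Heapster.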

Now run kubectl get apiservice. If the registered v1beta1.metrics.k8s.io API appears in the output, the registration succeeded.

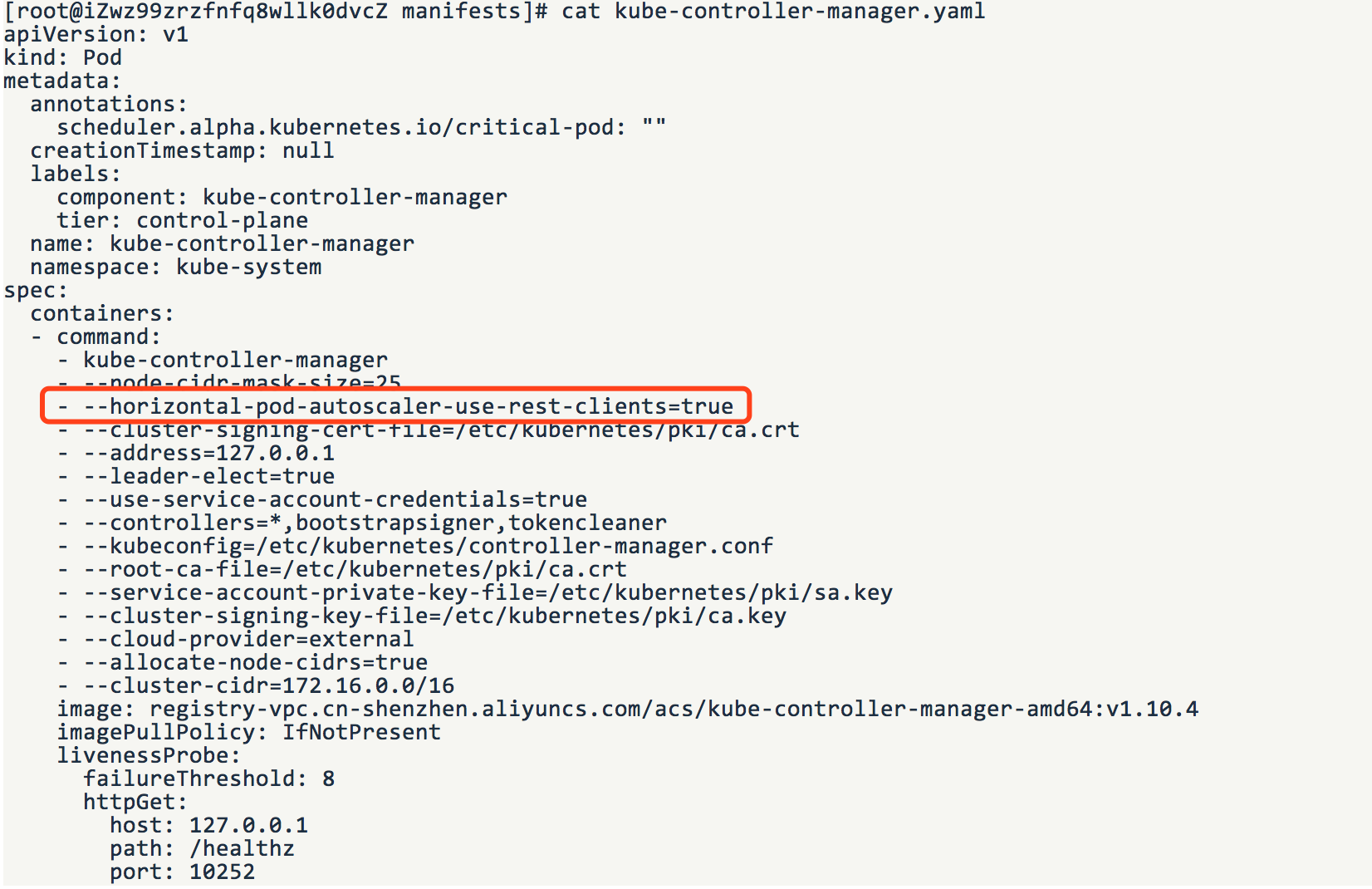
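The same check can be scripted. The sketch below assumes you have captured the output of `kubectl get apiservice -o json` as a string and looks for the `Available` condition on the named APIService. The function name is hypothetical, and the sample document is a hand-written illustration of the shape of that output, not output captured from a real cluster.

```python
import json


def apiservice_available(apiservice_list_json, name):
    """Return True if the named APIService reports the condition Available=True."""
    doc = json.loads(apiservice_list_json)
    for item in doc.get("items", []):
        if item.get("metadata", {}).get("name") != name:
            continue
        for cond in item.get("status", {}).get("conditions", []):
            if cond.get("type") == "Available":
                return cond.get("status") == "True"
        return False  # Found the APIService, but it has no Available condition.
    return False  # The APIService is not registered at all.


# Hand-written sample, shaped like `kubectl get apiservice -o json` output.
sample = json.dumps({
    "kind": "List",
    "items": [{
        "metadata": {"name": "v1beta1.metrics.k8s.io"},
        "status": {"conditions": [{"type": "Available", "status": "True"}]},
    }],
})
print(apiservice_available(sample, "v1beta1.metrics.k8s.io"))
```

An APIService that is listed but not Available usually means the API server cannot reach the backing Service, so the second case is worth distinguishing from "not registered" when debugging.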

Next, switch the metrics data source on kube-controller-manager. kube-controller-manager is deployed on every master and is managed by kubelet as a Static Pod, so you only need to modify its manifest and kubelet will apply the update automatically. On the host, the manifest is located at /etc/kubernetes/manifests/kube-controller-manager.yaml.

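Set --horizontal-pod-autoscaler-use-rest-clients=true in that manifest. One caveat: if you edit the file in place with vim, vim automatically creates a temporary file in the same directory, which can affect the final result. The recommended approach is therefore to move the manifest to another directory, edit it there, and then move it back. At this point Metrics Server can serve HPA; next comes the custom metrics part.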
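The move-out, edit, move-back procedure described above can be sketched as a small script. This is only an illustration under stated assumptions: the function name is hypothetical, the manifest is treated as plain text whose flags appear as `- --flag=value` list entries, and nothing here validates the resulting YAML. Keep a backup of the manifest before trying anything like this on a real master.

```python
import os
import re
import shutil
import tempfile


def set_flag_outside_manifest_dir(manifest_path, flag, value):
    """Move the manifest out of its directory, set --flag=value, then move it back."""
    workdir = tempfile.mkdtemp(prefix="kcm-edit-")
    staged = os.path.join(workdir, os.path.basename(manifest_path))
    shutil.move(manifest_path, staged)
    try:
        with open(staged) as f:
            lines = f.read().splitlines()
        wanted = f"--{flag}={value}"
        existing = re.compile(r"^(\s*-\s+)--" + re.escape(flag) + r"(=.*)?$")
        flag_lines = [i for i, line in enumerate(lines)
                      if re.match(r"^\s*-\s+--", line)]
        for i, line in enumerate(lines):
            match = existing.match(line)
            if match:
                # The flag is already present: overwrite its value in place.
                lines[i] = match.group(1) + wanted
                break
        else:
            if not flag_lines:
                raise ValueError("no '- --flag' entries found in manifest")
            # Append after the last existing flag, reusing its indentation.
            last = flag_lines[-1]
            prefix = re.match(r"^(\s*-\s+)", lines[last]).group(1)
            lines.insert(last + 1, prefix + wanted)
        with open(staged, "w") as f:
            f.write("\n".join(lines) + "\n")
    finally:
        # Always put the manifest back so kubelet can recreate the Static Pod.
        shutil.move(staged, manifest_path)
        os.rmdir(workdir)


# Example call (path taken from the article; run on a master at your own risk):
# set_flag_outside_manifest_dir(
#     "/etc/kubernetes/manifests/kube-controller-manager.yaml",
#     "horizontal-pod-autoscaler-use-rest-clients", "true")
```

Because the edit happens in a separate temporary directory, no editor or script leftovers ever appear in the manifests directory that kubelet watches.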

2. Deploy the Custom Metrics Adapter

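If Prometheus is not yet deployed in the cluster, deploy it first by following "Alibaba Cloud Container Kubernetes Monitoring (7): Deploying the Prometheus Monitoring Solution". Then deploy the Custom Metrics Adapter.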

kind: Namespace
apiVersion: v1
metadata:
  name: custom-metrics
---
kind: ServiceAccount
apiVersion: v1
metadata:
  name: custom-metrics-apiserver
  namespace: custom-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: custom-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: custom-metrics-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: custom-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-resource-reader
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - pods
  - services
  verbs:
  - get
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics-apiserver-resource-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-resource-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: custom-metrics
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-getter
rules:
- apiGroups:
  - custom.metrics.k8s.io
  resources:
  - "*"
  verbs:
  - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: hpa-custom-metrics-getter
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-getter
subjects:
- kind: ServiceAccount
  name: horizontal-pod-autoscaler
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: custom-metrics-apiserver
  namespace: custom-metrics
  labels:
    app: custom-metrics-apiserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
    spec:
      tolerations:
      - key: beta.kubernetes.io/arch
        value: arm
        effect: NoSchedule
      - key: beta.kubernetes.io/arch
        value: arm64
        effect: NoSchedule
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-server
        image: luxas/k8s-prometheus-adapter:v0.2.0-beta.0
        args:
        - --prometheus-url=http://prometheus-k8s.monitoring.svc:9090
        - --metrics-relist-interval=30s
        - --rate-interval=60s
        - --v=10
        - --logtostderr=true
        ports:
        - containerPort: 443
        securityContext:
          runAsUser: 0
---
apiVersion: v1
kind: Service
metadata:
  name: api
  namespace: custom-metrics
spec:
  ports:
  - port: 443
    targetPort: 443
  selector:
    app: custom-metrics-apiserver
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.custom.metrics.k8s.io
spec:
  insecureSkipTLSVerify: true
  group: custom.metrics.k8s.io
  groupPriorityMinimum: 1000
  versionPriority: 5
  service:
    name: api
    namespace: custom-metrics
  version: v1beta1
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-server-resources
rules:
- apiGroups:
  - custom-metrics.metrics.k8s.io
  resources: ["*"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: hpa-controller-custom-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-server-resources
subjects:
- kind: ServiceAccount
  name: horizontal-pod-autoscaler
  namespace: kube-system
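Once the adapter is registered under custom.metrics.k8s.io/v1beta1, metrics are read through REST paths beneath that API group, which you can query by hand with `kubectl get --raw <path>`. The sketch below composes such a path for a metric attached to a namespaced object and reads the value out of a response. The helper names are hypothetical, the sample response is hand-written rather than captured from a cluster, and the quantity parser handles only plain numbers and the `m` (milli) suffix, which is the form in which a value such as `538133m` shows up.

```python
import json
from decimal import Decimal


def object_metric_path(namespace, resource, name, metric,
                       group_version="custom.metrics.k8s.io/v1beta1"):
    """Build the REST path for a custom metric on one namespaced object."""
    return f"/apis/{group_version}/namespaces/{namespace}/{resource}/{name}/{metric}"


def parse_simple_quantity(quantity):
    """Parse a plain number or a milli-suffixed quantity such as '538133m'."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def first_metric_value(metric_value_list_json):
    """Return the value of the first item in a MetricValueList response."""
    items = json.loads(metric_value_list_json).get("items", [])
    if not items:
        raise ValueError("no metric values returned")
    return parse_simple_quantity(items[0]["value"])


print(object_metric_path("default", "services", "sample-metrics-app", "http_requests"))

# Hand-written sample response, for illustration only.
sample_response = json.dumps({
    "kind": "MetricValueList",
    "items": [{"metricName": "http_requests", "value": "538133m"}],
})
print(first_metric_value(sample_response))  # 538.133
```

Reading `538133m` as 538.133 makes it easier to compare a reported metric with a plain integer target such as 100.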

3. Deploy the application under test and the HPA template

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: sample-metrics-app
  name: sample-metrics-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-metrics-app
  template:
    metadata:
      labels:
        app: sample-metrics-app
    spec:
      tolerations:
      - key: beta.kubernetes.io/arch
        value: arm
        effect: NoSchedule
      - key: beta.kubernetes.io/arch
        value: arm64
        effect: NoSchedule
      - key: node.alpha.kubernetes.io/unreachable
        operator: Exists
        effect: NoExecute
        tolerationSeconds: 0
      - key: node.alpha.kubernetes.io/notReady
        operator: Exists
        effect: NoExecute
        tolerationSeconds: 0
      containers:
      - image: luxas/autoscale-demo:v0.1.2
        name: sample-metrics-app
        ports:
        - name: web
          containerPort: 8080
        readinessProbe:
          httpGet:
            path: /
            port: 8080
          initialDelaySeconds: 3
          periodSeconds: 5
        livenessProbe:
          httpGet:
            path: /
            port: 8080
          initialDelaySeconds: 3
          periodSeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: sample-metrics-app
  labels:
    app: sample-metrics-app
spec:
  ports:
  - name: web
    port: 80
    targetPort: 8080
  selector:
    app: sample-metrics-app
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: sample-metrics-app
  labels:
    service-monitor: sample-metrics-app
spec:
  selector:
    matchLabels:
      app: sample-metrics-app
  endpoints:
  - port: web
---
kind: HorizontalPodAutoscaler
apiVersion: autoscaling/v2beta1
metadata:
  name: sample-metrics-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-metrics-app
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Object
    object:
      target:
        kind: Service
        name: sample-metrics-app
      metricName: http_requests
      targetValue: 100
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: sample-metrics-app
  namespace: default
  annotations:
    traefik.frontend.rule.type: PathPrefixStrip
spec:
  rules:
  - http:
      paths:
      - path: /sample-app
        backend:
          serviceName: sample-metrics-app
          servicePort: 80

This application exposes a Prometheus endpoint that returns the data shown below. The http_requests_total metric is the custom metric we will use for scaling.

$ curl 172.16.1.160:8080/metrics
# HELP http_requests_total The amount of requests served by the server in total
# TYPE http_requests_total counter
http_requests_total 3955684

4. Deploy the load-test application

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: load-generator
  labels:
    app: load-generator
spec:
  replicas: 1
  selector:
    matchLabels:
      app: load-generator
  template:
    metadata:
      labels:
        app: load-generator
    spec:
      containers:
      - name: load-generator
        image: busybox
        command:
        - "sh"
        - "-c"
        - "while true; do wget -q -O- http://sample-metrics-app.default.svc.cluster.local; done"

5. Check the HPA status and scaling. After a few minutes, the Pods have been scaled out successfully.

$ kubectl get hpa
NAME                     REFERENCE                       TARGETS       MINPODS   MAXPODS   REPLICAS   AGE
php-apache               Deployment/php-apache           0%/50%        1         10        1          21d
sample-metrics-app-hpa   Deployment/sample-metrics-app   538133m/100   2         10        10         15h

Conclusion

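This article is meant to give you an intuitive feel for autoscaling/v1 and autoscaling/v2beta1 and the general workflow for each. We won't say more about autoscaling/v1. Developers who want to use autoscaling/v2beta1 with Custom Metrics may find the overall process overly complex and hard to follow. The next article will explain the details of using Custom Metrics with autoscaling/v2beta1, to help you understand the underlying principles and design more deeply.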