How Mutex is implemented in Go

阿新 • Published: 2018-12-05

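Locks in Go are built on CAS atomic operations. The Mutex struct looks like this:

type Mutex struct {
	state int32
	sema  uint32
}

// state holds the current state of the lock. Every bit has a meaning, and the zero value means unlocked.

// sema is used as a semaphore. P/V operations on it block goroutines on a wait queue and wake them from it. A goroutine waiting for the lock is placed on the wait queue and sleeps without being scheduled until Unlock wakes it. See the Lock function in sync/mutex.go for the details.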

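A short detour on sema

Although it is just an integer field in Mutex, sema plays an important part: it is the semaphore that manages putting goroutines to sleep and waking them up.

I have not read the sema implementation in detail yet, so what follows is only a rough analysis of what it does. Note that it may be inaccurate!

First of all, sema gives goroutine "scheduling" a mechanism for blocking and waking goroutines.

Acquiring the semaphore is done in semacquire1 in runtime/sema.go.

Releasing the semaphore is done in semrelease1.

sema has a semaRoot struct and a global semtable variable. A semaRoot serves the P/V operations of a semaphore. (My guess is that this is related to the GMP goroutine scheduling model: one Processor carries many goroutines, and the goroutines under one Processor need a semaphore to manage them. A lightweight lock is needed while a goroutine changes state, which is the lock field below. That lock is not the same as the one in Mutex; it is the runtime's own internal lock.) Allocating and looking up the many semaRoots goes through the global semtable: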

type semaRoot struct {
	lock  mutex
	treap *sudog // root of balanced tree of unique waiters.
	nwait uint32 // Number of waiters. Read w/o the lock.
}

var semtable [semTabSize]struct {
	root semaRoot
	pad  [cpu.CacheLinePadSize - unsafe.Sizeof(semaRoot{})]byte
}

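1 Putting the current goroutine to sleep is done by goparkunlock, which semacquire1 calls as follows:

1) root := semroot(addr)

semroot finds the semaRoot struct from the address of the semaphore.

2) Skipping a section, we go straight to the point where the current goroutine is put to sleep.

First lock(&root.lock) takes the lock.

Then root.queue() puts the current goroutine on the wait queue. (Note that one semaphore manages many goroutines. Before a goroutine sleeps, its details have to be saved and queued, that is, attached to the treap of the semaRoot. Judging by the comment, the queue is implemented as a balanced tree.)

3) Call goparkunlock(&root.lock, waitReasonSemacquire, traceEvGoBlockSync, 4)

This eventually calls gopark, which makes the runtime run the scheduler again. Before rescheduling, the current goroutine (the G object) is put into the waiting state, so it is not scheduled again until it is woken, and then root.lock is released. This function hands control of execution over to the runtime scheduler, which goes on to schedule other goroutines.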
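Step 1) above, finding a semaRoot from a semaphore address, can be pictured as hashing the address into a fixed-size table. The sketch below is only an illustration of that idea: bucketIndex, tabSize, the value 251 and the shift by 3 are assumptions made for this example and should be checked against runtime/sema.go rather than taken as the runtime's exact code.

```go
package main

import (
	"fmt"
	"unsafe"
)

// tabSize is an assumed table size for this sketch.
const tabSize = 251

// bucketIndex maps the address of a semaphore word to a slot in a
// fixed-size table. Dropping the low bits before taking the remainder
// is an assumption made for illustration.
func bucketIndex(addr *uint32) uintptr {
	return (uintptr(unsafe.Pointer(addr)) >> 3) % tabSize
}

func main() {
	var a, b uint32
	// The same address always lands in the same bucket.
	fmt.Println(bucketIndex(&a) == bucketIndex(&a))
	// Every index stays inside the table.
	fmt.Println(bucketIndex(&a) < tabSize, bucketIndex(&b) < tabSize)
}
```

Because different addresses can share a slot, each bucket still has to tell its waiters apart by address, which fits the "tree of unique waiters" comment on the treap field.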

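2 Since goroutines block, there has to be a way to wake them

Waking goes through the semtable structure.

sema.go was not designed specifically for Mutex. When it is used by Mutex, another goroutine releasing the Mutex calls semrelease1, which wakes a goroutine from the queue so it can run. I have not read the details.

By my analysis, though, Mutex is a mutual-exclusion lock, so the semaphore in a Mutex should be a binary semaphore that only takes the values 0 and 1. When Lock in Mutex gets as far as semacquire1 and finds the semaphore is 0, the current goroutine is put to sleep: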

func cansemacquire(addr *uint32) bool {
	for {
		v := atomic.Load(addr)
		if v == 0 {
			return false
		}
		if atomic.Cas(addr, v, v-1) {
			return true
		}
	}
}

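If goroutines keep trying to acquire the Mutex, each of them sees the semaphore at 0 and goes to sleep. Only Unlock adds 1 to the semaphore, and Unlock must not be called twice in a row, so the semaphore should only ever be 0 or 1.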
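The sleep-on-zero, wake-on-release behavior described above can be tried out with a small user-level semaphore. This is a sketch under stated assumptions: toySema, newToySema, acquire and release are names invented here, and it is built on sync.Mutex and sync.Cond for convenience, whereas the runtime parks goroutines directly with gopark.

```go
package main

import (
	"fmt"
	"sync"
)

// toySema is a hypothetical counting semaphore for illustration only.
type toySema struct {
	mu    sync.Mutex
	cond  *sync.Cond
	count uint32
}

// newToySema returns a semaphore holding the given number of units.
func newToySema(initial uint32) *toySema {
	s := &toySema{count: initial}
	s.cond = sync.NewCond(&s.mu)
	return s
}

// acquire is the P operation: sleep while count is 0, then take one unit.
func (s *toySema) acquire() {
	s.mu.Lock()
	for s.count == 0 {
		s.cond.Wait()
	}
	s.count--
	s.mu.Unlock()
}

// release is the V operation: add one unit and wake one sleeper, if any.
func (s *toySema) release() {
	s.mu.Lock()
	s.count++
	s.mu.Unlock()
	s.cond.Signal()
}

func main() {
	s := newToySema(0)
	done := make(chan string)
	go func() {
		s.acquire() // blocks until main calls release
		done <- "woken"
	}()
	s.release()
	fmt.Println(<-done) // prints "woken"
}
```

With an initial count of 0 and one release per unlock, the count in this toy only moves between 0 and 1, which matches the binary-semaphore reading given in the text.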

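That is a rough look at sema. Now back to Mutex.

The role of the sema field was covered above. The state field is the more central field in Mutex: it records the current state of the lock.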

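state |31|30|....| 3 | 2 | 1 | 0 |

bit 0: whether the lock is currently held. 0 = unlocked, 1 = locked

bit 1: whether a goroutine has already been woken. 1 = a goroutine has been woken, 0 = none

bit 2: which mode the Mutex is in. 0 = normal, 1 = starving

bits 3 to 31: the number of goroutines waiting to Lock this mutex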
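The bit layout above can be decoded with a few masks and a shift. The constant names below follow the ones used in sync/mutex.go; the decoded struct and the decode function are helpers made up for this sketch.

```go
package main

import "fmt"

const (
	mutexLocked      = 1 << iota // bit 0: the mutex is locked
	mutexWoken                   // bit 1: a waiter has been woken
	mutexStarving                // bit 2: the mutex is in starvation mode
	mutexWaiterShift = iota      // 3: waiter count starts at bit 3
)

// decoded is a hypothetical helper type holding the unpacked fields.
type decoded struct {
	locked, woken, starving bool
	waiters                 int32
}

// decode splits a state word into its flag bits and waiter count.
func decode(state int32) decoded {
	return decoded{
		locked:   state&mutexLocked != 0,
		woken:    state&mutexWoken != 0,
		starving: state&mutexStarving != 0,
		waiters:  state >> mutexWaiterShift,
	}
}

func main() {
	// Two waiters queued on a locked mutex in normal mode.
	state := int32(2<<mutexWaiterShift | mutexLocked)
	fmt.Printf("%+v\n", decode(state))
}
```

Packing everything into one int32 is what lets a single CAS change the lock bit, the mode and the waiter count together.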

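First, the two modes of Mutex, normal and starving, which are explained in the comment on Mutex in the source code.

The two modes exist to make the lock fair. The following is taken from a translation found online:

http://blog.51cto.com/qiangmzsx/2134786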

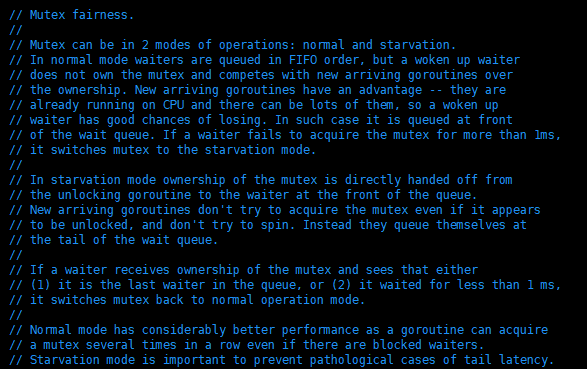

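A mutex can be in one of two modes of operation: normal and starvation.

In normal mode, waiting goroutines queue in FIFO (first in, first out) order, but a goroutine that has been woken does not get the mutex straight away. It has to compete for the mutex with newly arriving goroutines.

Newly arriving goroutines are already running on a CPU, so the woken goroutine is very likely to lose the race. When that happens, the woken goroutine is put back at the front of the queue.

If a woken goroutine fails to acquire the mutex for more than 1ms, it switches the mutex to starvation mode.

In starvation mode, ownership of the mutex is handed directly from the unlocking goroutine to the goroutine at the front of the queue. Newly arriving goroutines do not compete for the mutex (even if it appears unlocked, and they do not spin either); they queue at the tail of the wait queue.

In starvation mode, when a goroutine acquires the mutex and either of the following holds, the mutex switches back to normal mode:

1. It is the last goroutine in the wait queue.

2. It waited for no more than 1ms.

Normal mode performs better, because a goroutine can acquire the mutex several times in a row.

Starvation mode prevents goroutines at the tail of the queue from never getting the mutex.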

The implementation is as follows.

In the Lock function:

// Fast path: grab unlocked mutex.

// 1 Use an atomic operation to change the lock state to locked

if atomic.CompareAndSwapInt32(&m.state
