Audio/Video Development (38): Building a Microphone Array Simulation Environment


1. Introduction

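In my earlier article on speech enhancement, I introduced speech enhancement with microphone arrays. Microphone arrays can do far more than noise reduction, however. They are also used for sound source localization, source estimation, beamforming, echo suppression and more. In my view, arrays have an advantage that single-microphone algorithms cannot match in source localization and in beamforming (mostly for suppressing interfering speech). With multiple microphones, an algorithm can exploit spatial information: the differences in when and how strongly a sound reaches each microphone. This makes arrays especially well suited to speech processing problems that depend strongly on spatial structure.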
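To make "spatial information" concrete, consider a uniform linear array and a distant source. Each microphone receives the same wavefront at a slightly different time. The sketch below assumes far-field (plane-wave) propagation. It reuses the default microphone count, spacing and speed of sound from the code in this post. The 16 kHz sampling rate and the 60° source direction are arbitrary values chosen only for illustration.

```matlab
% Minimal sketch: relative arrival delays across a uniform linear array (ULA)
% for a far-field source (plane-wave assumption).
nSensors       = 4;      % number of microphones (post's default)
sensorDistance = 0.05;   % spacing between adjacent microphones (m)
speedOfSound   = 340;    % speed of sound (m/s)
fs             = 16000;  % assumed sampling rate (Hz), for illustration only

% Mic positions along the x axis, centred on the array origin
micX = ((0:nSensors-1) - (nSensors-1)/2) * sensorDistance;

% Source direction measured from the array axis (degrees), illustrative
thetaDeg = 60;

% Delay of each mic relative to the array centre (s):
% positive means the wavefront reaches that mic later than the centre.
tau = -micX * cosd(thetaDeg) / speedOfSound;

fprintf('mic %d: delay %+.1f us (%+.2f samples)\n', ...
        [1:nSensors; tau*1e6; tau*fs]);
```

With these values, the largest possible delay across the whole array (source on the array axis) is 0.15/340 ≈ 0.44 ms, or about 7 samples at the assumed 16 kHz. Delays this small are why array algorithms usually rely on fractional delays or work in the frequency domain.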

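However, the first obstacle in microphone array research is setting up an experimental environment. Many enthusiasts have no real array hardware, and this makes getting started difficult. Here I share an open-source, MATLAB-based microphone array simulation environment from the Audio Analysis Lab at Aalborg University. With it, you can freely set basic parameters such as room size, amount of reverberation, source direction and noise. You then get audio files for testing your own microphone array algorithms.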
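Room size and reverberation time are the room parameters you will change most often, and wall absorption links them. As a rough sketch, Sabine's formula, T60 = 24 ln(10) V / (c S α), can be inverted. This gives the uniform absorption coefficient α, and hence a wall reflection coefficient β = sqrt(1 - α), that yields a given T60 in a given room. I believe the rir_generator used by this environment does a conversion of this kind when given a reverberation time, but treat that as an assumption. The values below are the post's defaults.

```matlab
% Minimal sketch: convert room size + target T60 into a uniform wall
% reflection coefficient via Sabine's formula.
% Assumption: identical absorption on all six walls.
roomDim      = [3 4 3];   % room size (m), the post's default
reverbTime   = 0.1;       % target T60 (s)
speedOfSound = 340;       % m/s

V = prod(roomDim);                                        % volume (m^3)
S = 2*(roomDim(1)*roomDim(2) + roomDim(1)*roomDim(3) ...  % total wall
     + roomDim(2)*roomDim(3));                            % area (m^2)

% Sabine: T60 = 24*ln(10)*V / (c*S*alpha)  =>  solve for alpha
alpha = 24*log(10)*V / (speedOfSound*S*reverbTime);
if alpha > 1
    error('T60 too short for this room: absorption coefficient would exceed 1.');
end
beta = sqrt(1 - alpha);   % pressure reflection coefficient of each wall

fprintf('V = %.1f m^3, S = %.1f m^2, alpha = %.3f, beta = %.3f\n', ...
        V, S, alpha, beta);
```

With the defaults (a 3 × 4 × 3 m room, T60 = 0.1 s), this gives α ≈ 0.89 and β ≈ 0.34. For this room, any T60 below about 0.089 s already pushes α above 1. As T60 approaches 0, α grows without bound. This is consistent with the main function treating reverbTime = 0 as a special case.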

2. Code Overview

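I have modified the original code so that it runs more smoothly. If you are interested, you can also refer to the original source code from Aalborg University. I have uploaded the code to my Gitee repository: https://gitee.com/wind_hit/Microphone-Array-Simulation-Environment. The main function of this MATLAB code is multichannelSignalGenerator(), shown below: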

```matlab
function [mcSignals,setup] = multichannelSignalGenerator(setup)
%-----------------------------------------------------------------------
% Produce multichannel noisy signals for microphone array beamforming.
%
% Usage: multichannelSignalGenerator(setup)
%        (setup is overwritten by the parameters set inside this function)
%
% setup.nRirLength      : length of the room impulse response (RIR) filter
% setup.hpFilterFlag    : set to false to disable the high-pass filter (enabled by default)
% setup.reflectionOrder : reflection order, default -1, i.e. maximum order
% setup.micType         : omnidirectional, subcardioid, cardioid, hypercardioid or
%                         bidirectional; default is omnidirectional
%
% setup.nSensors        : number of microphones
% setup.sensorDistance  : distance between adjacent microphones (m)
% setup.reverbTime      : reverberation time (T60) of the room (s)
% setup.speedOfSound    : speed of sound (m/s)
%
% setup.noiseField      : diffuse noise field type, 'spherical' or 'cylindrical'
% setup.sdnr            : target mixing SNR of diffuse noise vs. clean signal (dB)
% setup.ssnr            : approximate mixing SNR of sensor noise vs. clean signal (dB)
%
% setup.roomDim         : 3 x 1 vector, room dimensions along (x,y,z) (m)
% setup.micPoints       : 3 x M array, column m is the (x,y,z) position of mic m (m)
% setup.srcPoint        : 3 x 1 vector, (x,y,z) position of the audio source (m)
%
% srcHeight             : height of the target source (assigned, not used below)
% arrayHeight           : height of the microphone array
% arrayCenter           : centre position of the microphone array
% arrayToSrcDistInt     : array-to-source distance in the xy plane (assigned, not used below)
%
% How to use: JUST RUN
%
% Code from: Audio Analysis Lab, Aalborg University (https://audio.create.aau.dk/),
% slightly modified by Wind at Harbin Institute of Technology, Shenzhen, 2018-03-24
%
% Copyright (C) 1989, 1991 Free Software Foundation, Inc.
%-------------------------------------------------------------------------
addpath(fullfile(cd, '..', 'rirGen'));
%-------------------------- initial parameters --------------------------
setup.nRirLength      = 2048;
setup.hpFilterFlag    = 1;
setup.reflectionOrder = -1;
setup.micType         = 'omnidirectional';
setup.nSensors        = 4;
setup.sensorDistance  = 0.05;
setup.reverbTime      = 0.1;
setup.speedOfSound    = 340;
setup.noiseField      = 'spherical';
setup.sdnr            = 20;
setup.ssnr            = 25;
setup.roomDim         = [3;4;3];
srcHeight             = 1;
arrayHeight           = 1;
arrayCenter           = [setup.roomDim(1:2)/2; 1];
arrayToSrcDistInt     = [1,1];
setup.srcPoint        = [1.5;1;1];
setup.micPoints       = generateUlaCoords(arrayCenter, setup.nSensors, ...
                            setup.sensorDistance, 0, arrayHeight);
[cleanSignal,setup.sampFreq] = audioread('..\data\twoMaleTwoFemale20Seconds.wav');
%----------------------------- initial end ------------------------------

%------------------------ algorithm processing --------------------------
% Treat T60 = 0 as anechoic: keep a nominal 0.2 s T60 but limit the
% reflection order to 0 (direct path only). The order is stored in setup
% so that rir_generator actually receives it.
if setup.reverbTime == 0
    setup.reverbTime      = 0.2;
    setup.reflectionOrder = 0;
else
    setup.reflectionOrder = -1;
end
rirMatrix = rir_generator(setup.speedOfSound, setup.sampFreq, setup.micPoints', ...
    setup.srcPoint', setup.roomDim', setup.reverbTime, setup.nRirLength, ...
    setup.micType, setup.reflectionOrder, [], [], setup.hpFilterFlag);

% Convolve the (mono) clean signal with each microphone's RIR
tmpCleanSignal = zeros(length(cleanSignal), setup.nSensors);
for iSens = 1:setup.nSensors
    tmpCleanSignal(:,iSens) = fftfilt(rirMatrix(iSens,:)', cleanSignal);
end
mcSignals.clean = tmpCleanSignal(setup.nRirLength:end,:);
setup.nSamples  = length(mcSignals.clean);
mcSignals.clean = mcSignals.clean - ones(setup.nSamples,1)*mean(mcSignals.clean); % remove DC per channel

%------- write the clean signals received by each microphone -------
% The received signals are attenuated, so they are amplified tenfold
% before writing; audiowrite clips any sample outside [-1, 1].
for iSens = 1:setup.nSensors
    audiowrite(sprintf('mic_clean%d.wav', iSens), 10*mcSignals.clean(:,iSens), setup.sampFreq);
end
%------------------------------- end --------------------------------

addpath(fullfile(cd, '..', 'nonstationaryMultichanNoiseGenerator'));
cleanSignalPowerMeas = var(mcSignals.clean);

% Diffuse babble noise, scaled per channel to the target SDNR
mcSignals.diffNoise = generateMultichanBabbleNoise(setup.nSamples, setup.nSensors, ...
    setup.sensorDistance, setup.speedOfSound, setup.noiseField);
diffNoisePowerMeas  = var(mcSignals.diffNoise);
diffNoisePowerTrue  = cleanSignalPowerMeas/10^(setup.sdnr/10);
mcSignals.diffNoise = mcSignals.diffNoise*diag(sqrt(diffNoisePowerTrue)./sqrt(diffNoisePowerMeas));

% Spatially white sensor noise, scaled per channel to the target SSNR
mcSignals.sensNoise = randn(setup.nSamples, setup.nSensors);
sensNoisePowerMeas  = var(mcSignals.sensNoise);
sensNoisePowerTrue  = cleanSignalPowerMeas/10^(setup.ssnr/10);
mcSignals.sensNoise = mcSignals.sensNoise*diag(sqrt(sensNoisePowerTrue)./sqrt(sensNoisePowerMeas));

mcSignals.noise    = mcSignals.diffNoise + mcSignals.sensNoise;
mcSignals.observed = mcSignals.clean + mcSignals.noise;
%--------------------------- processing end ----------------------------

%------ write the noisy microphone signals for this environment setup ------
for iSens = 1:setup.nSensors
    % same tenfold gain as above; the file name records the diffuse-noise SNR
    audiowrite(sprintf('diffused_babble_noise%d_%gdB.wav', iSens, setup.sdnr), ...
        10*mcSignals.observed(:,iSens), setup.sampFreq);
end
%------------------------------- end ---------------------------------
```
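The noise scaling in the main function is easy to check in isolation. Each noise column is rescaled so that the ratio of clean-signal variance to noise variance on that channel equals the target SNR in dB. The sketch below repeats that scaling step on random stand-in data. The signal lengths, channel gains and seed are arbitrary illustration values, not taken from the post.

```matlab
% Minimal sketch of the per-channel noise scaling used for sdnr/ssnr:
% rescale each noise column so var(clean)/var(noise) hits the target SNR.
rng(0);                                   % reproducible example
nSamples = 8000;
nSensors = 4;
clean = randn(nSamples, nSensors) .* [1 0.8 0.6 0.4];  % stand-in "clean" channels
noise = randn(nSamples, nSensors);        % stand-in noise
targetSnrDb = 20;                         % plays the role of setup.sdnr

cleanPower     = var(clean);              % 1 x nSensors, per-channel power
noisePowerMeas = var(noise);
noisePowerTrue = cleanPower / 10^(targetSnrDb/10);
noise = noise * diag(sqrt(noisePowerTrue) ./ sqrt(noisePowerMeas));

achievedSnrDb = 10*log10(var(clean) ./ var(noise));
disp(achievedSnrDb);                      % every channel equals targetSnrDb up to rounding
```

The diffuse noise and the sensor noise are scaled separately and are independent, so their powers roughly add. With the defaults sdnr = 20 and ssnr = 25, the overall SNR of mcSignals.observed is therefore about 10*log10(1/(10^-2 + 10^-2.5)) ≈ 18.8 dB. That is slightly below the 20 dB in the output file names.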

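This is the main function, and running it directly produces the desired audio files. First, however, you need to supply your own clean audio file and noise audio file. They are loaded by two statements. In multichannelSignalGenerator() the statement is [cleanSignal,setup.sampFreq] = audioread('..\data\twoMaleTwoFemale20Seconds.wav'). In generateMultichanBabbleNoise() it is [singleChannelData,samplingFreq] = audioread('babble_8kHz.wav').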
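generateMultichanBabbleNoise() is given setup.noiseField, which selects the spatial coherence of the simulated diffuse noise. The sketch below evaluates the two textbook coherence models for one microphone pair, with k = 2πf/c:

- sin(kd)/(kd) for a spherically isotropic field;
- J0(kd) for a cylindrically isotropic field.

I assume, from the parameter names, that the generator targets exactly these models. The spacing and speed of sound are the post's defaults. The 0 to 4 kHz grid assumes 8 kHz audio such as babble_8kHz.wav.

```matlab
% Theoretical coherence between two mics in an ideal diffuse noise field
% for the two field types accepted by setup.noiseField.
d  = 0.05;                   % mic spacing (m), as setup.sensorDistance
c  = 340;                    % speed of sound (m/s)
f  = linspace(0, 4000, 9);   % frequencies (Hz), up to Nyquist of 8 kHz audio
kd = 2*pi*f*d/c;             % wavenumber times spacing

gammaSph = ones(size(kd));   % spherical: sin(kd)/kd, with limit 1 at kd = 0
nz = kd > 0;
gammaSph(nz) = sin(kd(nz)) ./ kd(nz);
gammaCyl = besselj(0, kd);   % cylindrical: zeroth-order Bessel function J0

disp([f.' gammaSph.' gammaCyl.']);   % columns: frequency, spherical, cylindrical
```

For the default 5 cm spacing, the spherical-field coherence first reaches zero at f = c/(2d) = 3400 Hz. Below that frequency, diffuse noise stays strongly correlated between neighbouring microphones. This is one reason it is harder for an array to suppress than spatially white sensor noise.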

Simply replace them with the audio files you want to process.
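One practical point when swapping in your own clean file: it must be mono. The main function stores fftfilt(rirMatrix(iSens,:)', cleanSignal) in a single column, tmpCleanSignal(:,iSens), so a stereo file would fail at that assignment. The sketch below shows one way to prepare such a file. It writes a synthetic stereo tone to a hypothetical temporary path, then reads it back, downmixes it and peak-normalises it. The file name, tone frequencies and 8 kHz rate are illustrative assumptions.

```matlab
% Minimal sketch: prepare a replacement clean file as a mono column signal.
% Hypothetical file name; a synthetic stereo tone stands in for real speech.
fs = 8000;
t  = (0:fs-1).'/fs;                                  % 1 s of samples
stereo = 0.5*[sin(2*pi*440*t), sin(2*pi*660*t)];     % 2-channel test signal
inFile = fullfile(tempdir, 'my_clean_speech.wav');   % hypothetical path
audiowrite(inFile, stereo, fs);

[x, fsIn] = audioread(inFile);
if size(x, 2) > 1
    x = mean(x, 2);          % downmix to mono by averaging the channels
end
x = x / max(abs(x));         % peak-normalise so later gains are predictable
fprintf('%d samples at %d Hz, %d channel(s)\n', size(x,1), fsIn, size(x,2));
delete(inFile);              % remove the temporary file
```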

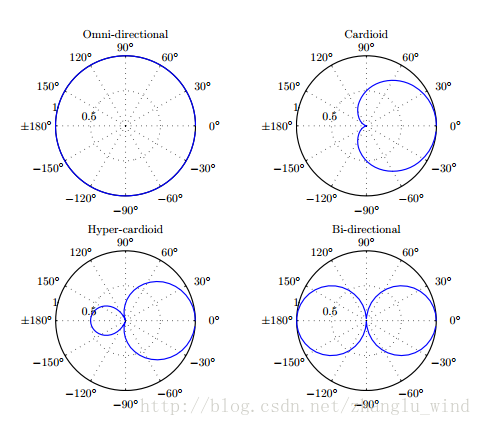

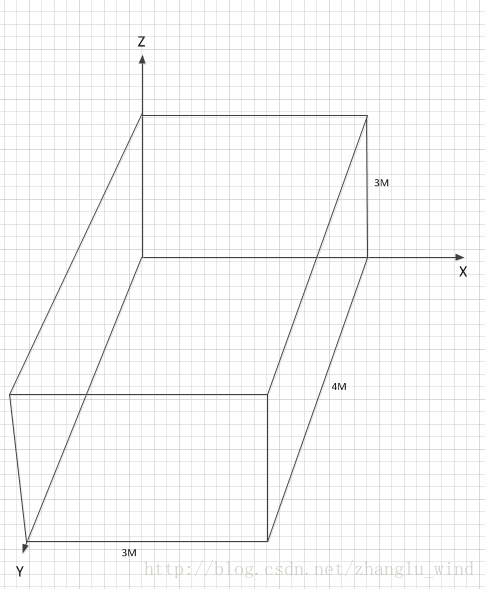

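Besides the input files, the basic experimental parameters are:

- Array geometry: a linear array. The code can only model linear arrays, and only uniform linear arrays, so nothing needs to be set here.
- Microphone type (micType): omnidirectional, cardioid, subcardioid, hypercardioid or bidirectional. The default is omnidirectional, as shown in Figure 1.
- Number of microphones (nSensors).
- Spacing between adjacent microphones (sensorDistance, in m).
- Center position of the array (arrayCenter), given as (x, y, z) coordinates.
- Height of the array (arrayHeight). This seems to duplicate the z coordinate of arrayCenter, and I am not sure why it is a separate parameter.
- Position of the target source (srcPoint), also given as (x, y, z) coordinates.
- Height of the target source (srcHeight).
- Distance between the array and the target source (arrayToSrcDistInt), measured as the projection onto the xy plane. In the listing above, srcHeight and arrayToSrcDistInt are assigned but not used; the source position is set directly through srcPoint.
- Room size (roomDim). The room's (x, y, z) coordinate system is shown in Figure 2.
- Reverberation time of the room (reverbTime, in s).
- Type of diffuse noise field (noiseField): spherical or cylindrical.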
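The five microphone types are all first-order directivity patterns of the form g(θ) = a + (1 - a)cos θ, where θ is the angle of incidence relative to the microphone axis. The a values below are the usual textbook choices. I assume, without having checked the repository, that rir_generator's micType options map to them.

```matlab
% First-order microphone directivity: g(theta) = a + (1 - a)*cos(theta).
% Assumption: these a values match rir_generator's micType options.
types = {'omnidirectional', 'subcardioid', 'cardioid', 'hypercardioid', 'bidirectional'};
a     = [1, 0.75, 0.5, 0.25, 0];
theta = [0 90 180];                % angles of incidence (degrees), 0 = on-axis

for k = 1:numel(types)
    g = a(k) + (1 - a(k)) * cosd(theta);   % gain at each angle
    fprintf('%-16s  0 deg: %+.2f  90 deg: %+.2f  180 deg: %+.2f\n', types{k}, g);
end
```

An omnidirectional microphone has gain 1 in every direction, and a cardioid has zero gain at the rear (180°). A bidirectional (figure-eight) microphone has zero gain at 90° and a polarity-inverted rear lobe.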

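That is the full parameter configuration of the simulation environment. It only supports uniform linear arrays, but it is still very helpful for testing linear array algorithms. In the end, audio generated this way comes from a simulation based on a mathematical model and cannot replace tests on real hardware. Deploying microphone array algorithms in practice therefore still requires testing in real acoustic environments. Finally, I hope this code helps you get started with microphone array algorithms.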