Notes on Fixing Ceph Cluster Errors

Ceph and CentOS versions in use:

[ceph]# ceph -v

ceph version 13.2.2 (02899bfda814146b021136e9d8e80eba494e1126) mimic (stable)

[ceph]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

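1. Configuration file content differs between nodes

Running ceph-deploy mon create-initial gathers the keys and generates several key files in the current directory (in my case ~/etc/ceph/), but it failed with the error below. The message means that the configuration file on the two nodes that failed differs from the one on the current node, and it suggests using --overwrite-conf.

[ceph]# ceph-deploy mon create-initial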

...

[ceph2][DEBUG ] remote hostname: ceph2

[ceph2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.mon][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-

Run the command as follows (I have three nodes configured here, ceph1 to ceph3):

[ceph]# ceph-deploy --overwrite-conf mon create ceph{3,1,2}

...

[ceph2][DEBUG ] remote hostname: ceph2

[ceph2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}

The configuration then succeeds, and you can go on to initialize the disks.
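Before overwriting, it can help to confirm that a node's copy really differs. A minimal sketch, assuming the remote file has already been copied to the local machine (for example with scp); the function name conf_matches and the /tmp path are my own illustration and are not part of ceph-deploy:

```shell
#!/bin/sh
# Report whether two local copies of ceph.conf have identical content.
# Fetching the remote copy is left to the caller.
conf_matches() {
    if cmp -s "$1" "$2"; then
        echo "same"
    else
        echo "different"
    fi
}

# Hypothetical usage:
#   scp ceph2:/etc/ceph/ceph.conf /tmp/ceph2.conf
#   conf_matches /etc/ceph/ceph.conf /tmp/ceph2.conf
```

If the result is "different", running diff on the two files shows exactly what --overwrite-conf would replace.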

2. too few PGs per OSD (21 < min 30) warning

[ceph]# ceph -s

cluster:

id: 8e2248e4-3bb0-4b62-ba93-f597b1a3bd40

health: HEALTH_WARN

too few PGs per OSD (21 < min 30)

services:

mon: 3 daemons, quorum ceph2,ceph1,ceph3

mgr: ceph2(active), standbys: ceph1, ceph3

osd: 3 osds: 3 up, 3 in

rgw: 1 daemon active

data:

pools: 4 pools, 32 pgs

objects: 219 objects, 1.1 KiB

usage: 3.0 GiB used, 245 GiB / 248 GiB avail

pgs: 32 active+clean

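- The cluster status above shows 21 PGs per OSD, below the minimum of 30. There are 32 PGs in total and I had configured 2 replicas, so with 3 OSDs each OSD holds about 32 ÷ 3 × 2 ≈ 21 PGs, which is under the configured minimum of 30 and triggers the warning above.

- If data is stored or operated on while the cluster is in this state, the cluster can hang and stop responding to I/O, and large numbers of OSDs can go down.

Solution: increase the number of PGs

Each of my pools has 8 PGs, so I need to add two more pools to bring the PGs per OSD to 48 ÷ 3 × 2 = 32, above the minimum of 30.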
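The arithmetic above can be sketched as a small shell function. It is only an estimate: it assumes PGs spread evenly across OSDs and that every pool uses the same replica count. The name pgs_per_osd is a hypothetical helper, not a Ceph command:

```shell
#!/bin/sh
# Estimate PGs per OSD: total PGs x replicas / OSD count (integer division).
pgs_per_osd() {
    total_pgs="$1"
    replicas="$2"
    osds="$3"
    echo $(( total_pgs * replicas / osds ))
}

pgs_per_osd 32 2 3   # before adding pools: prints 21
pgs_per_osd 48 2 3   # after adding two 8-PG pools: prints 32
```

Raising pg_num (and pgp_num) on an existing pool with ceph osd pool set is another way to increase the PG count without creating extra pools.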

[ceph]# ceph osd pool create mytest 8

pool 'mytest' created

[ceph]# ceph osd pool create mytest1 8

pool 'mytest1' created

[ceph]# ceph -s

cluster:

id: 8e2248e4-3bb0-4b62-ba93-f597b1a3bd40

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph2,ceph1,ceph3

mgr: ceph2(active), standbys: ceph1, ceph3

osd: 3 osds: 3 up, 3 in

rgw: 1 daemon active

data:

pools: 6 pools, 48 pgs

objects: 219 objects, 1.1 KiB

usage: 3.0 GiB used, 245 GiB / 248 GiB avail

pgs: 48 active+clean

The cluster health now shows as normal.

3. Cluster status is HEALTH_WARN application not enabled on 1 pool(s)

If the cluster status now shows HEALTH_WARN application not enabled on 1 pool(s):

[ceph]# ceph -s

cluster:

id: 13430f9a-ce0d-4d17-a215-272890f47f28

health: HEALTH_WARN

application not enabled on 1 pool(s)

[ceph]# ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'mytest'

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications.

Running ceph health detail shows that the newly added pool mytest has not been tagged with an application. Since the instance I added earlier was RGW, I follow the hint and tag mytest with rgw:

[ceph]# ceph osd pool application enable mytest rgw

enabled application 'rgw' on pool 'mytest'

Checking the cluster status again shows that it is back to normal.

[ceph]# ceph health

HEALTH_OK

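4. Error when deleting a pool

Taking the mytest pool as an example, running ceph osd pool rm mytest fails. The message says the pool name must be given a second time after the original command, followed by the --yes-i-really-really-mean-it flag.

[ceph]# ceph osd pool rm mytest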

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool mytest. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

Repeating the pool name and adding the flag as instructed still fails:

[ceph]# ceph osd pool rm mytest mytest --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

The error says pool deletion is disabled: before deleting, the mon_allow_pool_delete option must first be added to the ceph.conf configuration file and set to true. So log in to each node in turn and edit its configuration file, as follows:

[ceph]# vi ceph.conf

[ceph]# systemctl restart ceph-mon.target

Add the following parameter at the bottom of ceph.conf and set it to true. After saving and exiting, restart the service with systemctl restart ceph-mon.target.

[mon]

mon allow pool delete = true

Do the same on the remaining nodes.

[ceph]# vi ceph.conf

[ceph]# systemctl restart ceph-mon.target

[ceph]# vi ceph.conf

[ceph]# systemctl restart ceph-mon.target
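Editing the file by hand on every node is easy to get wrong. Below is a minimal sketch of the same edit as an idempotent shell function. The name enable_pool_delete is a hypothetical helper, and the sketch assumes that appending a new [mon] section at the end of the file is acceptable for your ceph.conf:

```shell
#!/bin/sh
# Append "[mon]" and "mon allow pool delete = true" to the given ceph.conf
# unless the option already appears (spelled with spaces or underscores).
# Note: an existing line is left untouched even if it is set to false.
enable_pool_delete() {
    conf="$1"
    if grep -Eq '^[[:space:]]*mon[ _]allow[ _]pool[ _]delete' "$conf"; then
        echo "already set in $conf"
    else
        printf '\n[mon]\nmon allow pool delete = true\n' >> "$conf"
        echo "added to $conf"
    fi
}

# Hypothetical usage on each node:
#   enable_pool_delete /etc/ceph/ceph.conf
```

The monitors still need a restart (systemctl restart ceph-mon.target) on each node for the file change to take effect.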

Deleting again now succeeds, and the mytest pool is removed.

[ceph]# ceph osd pool rm mytest mytest --yes-i-really-really-mean-it

pool 'mytest' removed

5. Troubleshooting node recovery after cluster nodes go down

After shutting down and restarting each of the three nodes in the Ceph cluster, I checked the cluster status:

[~]# ceph -s

cluster:

id: 13430f9a-ce0d-4d17-a215-272890f47f28

health: HEALTH_WARN

1 MDSs report slow metadata IOs

324/702 objects misplaced (46.154%)

Reduced data availability: 126 pgs inactive

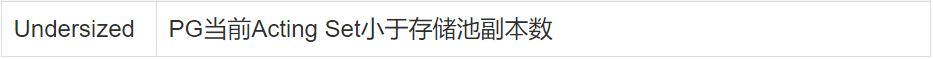

Degraded data redundancy: 144/702 objects degraded (20.513%), 3 pgs degraded, 126 pgs undersized

services:

mon: 3 daemons, quorum ceph2,ceph1,ceph3

mgr: ceph1(active), standbys: ceph2, ceph3

mds: cephfs-1/1/1 up {0=ceph1=up:creating}

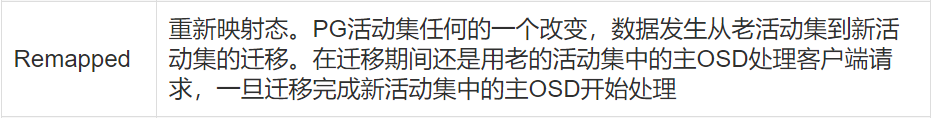

osd: 3 osds: 3 up, 3 in; 162 remapped pgs

data:

pools: 8 pools, 288 pgs

objects: 234 objects, 2.8 KiB

usage: 3.0 GiB used, 245 GiB / 248 GiB avail

pgs: 43.750% pgs not active

144/702 objects degraded (20.513%)

324/702 objects misplaced (46.154%)

162 active+clean+remapped

123 undersized+peered

3 undersized+degraded+peered

Check the details:

[~]# ceph health detail

HEALTH_WARN 1 MDSs report slow metadata IOs; 324/702 objects misplaced (46.154%); Reduced data availability: 126 pgs inactive; Degraded data redundancy: 144/702 objects degraded (20.513%), 3 pgs degraded, 126 pgs undersized

MDS_SLOW_METADATA_IO 1 MDSs report slow metadata IOs

mdsceph1(mds.0): 9 slow metadata IOs are blocked > 30 secs, oldest blocked for 42075 secs

OBJECT_MISPLACED 324/702 objects misplaced (46.154%)

PG_AVAILABILITY Reduced data availability: 126 pgs inactive

pg 8.28 is stuck inactive for 42240.369934, current state undersized+peered, last acting [0]

pg 8.2a is stuck inactive for 45566.934835, current state undersized+peered, last acting [0]

pg 8.2d is stuck inactive for 42240.371314, current state undersized+peered, last acting [0]

pg 8.2f is stuck inactive for 45566.913284, current state undersized+peered, last acting [0]

pg 8.32 is stuck inactive for 42240.354304, current state undersized+peered, last acting [0]

....

pg 8.28 is stuck undersized for 42065.616897, current state undersized+peered, last acting [0]

pg 8.2a is stuck undersized for 42065.613246, current state undersized+peered, last acting [0]

pg 8.2d is stuck undersized for 42065.951760, current state undersized+peered, last acting [0]

pg 8.2f is stuck undersized for 42065.610464, current state undersized+peered, last acting [0]

pg 8.32 is stuck undersized for 42065.959081, current state undersized+peered, last acting [0]

....

As shown, PGs are reported as inactive and undersized during data recovery, which is abnormal.
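With many stuck PGs, the ceph health detail output is easier to read when grouped by acting set. Below is a minimal sketch that reads the text output on stdin. The name stuck_pgs_by_acting is a hypothetical helper, and the parsing assumes the line layout shown above:

```shell
#!/bin/sh
# Count stuck PGs per "last acting" OSD set from `ceph health detail` text.
# Each PG is counted once, even when it is listed under several checks.
stuck_pgs_by_acting() {
    awk '
        $1 == "pg" && $3 == "is" && $4 == "stuck" {
            if (!($2 in seen)) { seen[$2] = 1; count[$NF]++ }
        }
        END { for (acting in count) print count[acting], acting }
    ' | LC_ALL=C sort -k2
}

# Hypothetical usage:
#   ceph health detail | stuck_pgs_by_acting
```

Each output line is a PG count followed by the acting set. A large count against a single-OSD set such as [0] suggests that the other OSDs are not being used for those PGs.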

Solution:

① Handling the inactive PGs:

Restarting the OSD service is enough.

[~]# systemctl restart ceph-osd.target

Checking the cluster status again shows that the inactive PGs have recovered; only the undersized PGs remain.

[~]# ceph -s

cluster:

id: 13430f9a-ce0d-4d17-a215-272890f47f28

health: HEALTH_WARN

1 filesystem is degraded

241/723 objects misplaced (33.333%)

Degraded data redundancy: 59 pgs undersized

services:

mon: 3 daemons, quorum ceph2,ceph1,ceph3

mgr: ceph1(active), standbys: ceph2, ceph3

mds: cephfs-1/1/1 up {0=ceph1=up:rejoin}

osd: 3 osds: 3 up, 3 in; 229 remapped pgs

rgw: 1 daemon active

data:

pools: 8 pools, 288 pgs

objects: 241 objects, 3.4 KiB

usage: 3.0 GiB used, 245 GiB / 248 GiB avail

pgs: 241/723 objects misplaced (33.333%)

224 active+clean+remapped

59 active+undersized

5 active+clean

io:

client: 1.2 KiB/s rd, 1 op/s rd, 0 op/s wr

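② Handling the undersized PGs:

When something goes wrong, check the health details first. A careful look shows that although the replica count is set to 3, the PGs 12.x have only two copies each, stored on two of OSDs 0 to 2.

[~]# ceph health detail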

HEALTH_WARN 241/723 objects misplaced (33.333%); Degraded data redundancy: 59 pgs undersized

OBJECT_MISPLACED 241/723 objects misplaced (33.333%)

PG_DEGRADED Degraded data redundancy: 59 pgs undersized

pg 12.8 is stuck undersized for 1910.001993, current state active+undersized, last acting [2,0]

pg 12.9 is stuck undersized for 1909.989334, current state active+undersized, last acting [2,0]

pg 12.a is stuck undersized for 1909.995807, current state active+undersized, last acting [0,2]

pg 12.b is stuck undersized for 1910.009596, current state active+undersized, last acting [1,0]

pg 12.c is stuck undersized for 1910.010185, current state active+undersized, last acting [0,2]

pg 12.d is stuck undersized for 1910.001526, current state active+undersized, last acting [1,0]

pg 12.e is stuck undersized for 1909.984982, current state active+undersized, last acting [2,0]

pg 12.f is stuck undersized for 1910.010640, current state active+undersized, last acting [2,0]

Looking further at the cluster's OSD tree shows that after ceph2 and ceph3 went down and recovered, the osd.1 and osd.2 daemons are no longer under ceph2 and ceph3.

[~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.24239 root default

-9 0.16159 host centos7evcloud

1 hdd 0.08080 osd.1 up 1.00000 1.00000

2 hdd 0.08080 osd.2 up 1.00000 1.00000

-3 0.08080 host ceph1

0 hdd 0.08080 osd.0 up 1.00000 1.00000

-5 0 host ceph2

-7 0 host ceph3

Check the status of the osd.1 and osd.2 services respectively.
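The misplacement in the tree above can also be spotted mechanically. Below is a minimal sketch that reads ceph osd tree text output on stdin and prints each OSD with the host bucket it sits under. The name osd_hosts is a hypothetical helper, and the parsing assumes the plain-text layout shown above:

```shell
#!/bin/sh
# Print "osd.N <host>" for every OSD in `ceph osd tree` text output.
# A host line sets the current host; OSD lines after it belong to that host.
osd_hosts() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i == "host" && i < NF) { host = $(i + 1) }
                if ($i ~ /^osd\.[0-9]+$/)   { print $i, host }
            }
        }'
}

# Hypothetical usage:
#   ceph osd tree | osd_hosts
```

Any OSD listed under a host other than the one it physically runs on, such as centos7evcloud here, is a candidate for the fix below.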

Solution:

Log in to ceph2 and ceph3 and restart the osd.1 and osd.2 services respectively, which maps the two services back to the ceph2 and ceph3 nodes.

[~]# ssh ceph2

[~]# systemctl restart ceph-osd@1.service

[~]# ssh ceph3

[~]# systemctl restart ceph-osd@2.service

Finally, the OSD tree shows that the two services are mapped back to ceph2 and ceph3.

[~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.24239 root default

-9 0 host centos7evcloud

-3 0.08080 host ceph1

0 hdd 0.08080 osd.0 up 1.00000 1.00000

-5 0.08080 host ceph2

1 hdd 0.08080 osd.1 up 1.00000 1.00000

-7 0.08080 host ceph3

2 hdd 0.08080 osd.2 up 1.00000 1.00000

The cluster status also shows the long-awaited HEALTH_OK.

[~]# ceph -s

cluster:

id: 13430f9a-ce0d-4d17-a215-272890f47f28

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph2,ceph1,ceph3

mgr: ceph1(active), standbys: ceph2, ceph3

mds: cephfs-1/1/1 up {0=ceph1=up:active}

osd: 3 osds: 3 up, 3 in

rgw: 1 daemon active

data:

pools: 8 pools, 288 pgs

objects: 241 objects, 3.6 KiB

usage: 3.1 GiB used, 245 GiB / 248 GiB avail

pgs: 288 active+clean

6. Error when remounting CephFS after unmounting

The mount command is:

mount -t ceph 10.0.86.246:6789,10.0.86.221:6789,10.0.86.253:6789:/ /mnt/mycephfs/ -o name=admin,secret=AQBAI/JbROMoMRAAbgRshBRLLq953AVowLgJPw==
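The command above passes the key inline, which leaves it in the shell history and the process list. Below is a minimal sketch of keeping the key in a file that only its owner can read. The name write_secret_file and the path /etc/ceph/admin.secret are hypothetical, and the mount shown in the comment assumes the mount.ceph helper is installed so that the secretfile option is available:

```shell
#!/bin/sh
# Write a CephX key to a file readable only by its owner.
write_secret_file() {
    key="$1"
    path="$2"
    # Create the file under a restrictive umask, then force the mode in case
    # the file already existed with looser permissions.
    ( umask 077 && printf '%s\n' "$key" > "$path" )
    chmod 600 "$path"
}

# Hypothetical usage:
#   write_secret_file '<your key>' /etc/ceph/admin.secret
#   mount -t ceph <mon addresses>:/ /mnt/mycephfs/ -o name=admin,secretfile=/etc/ceph/admin.secret
```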

Remounting CephFS after unmounting fails with: mount error(2): No such file or directory

Note: first check that the /mnt/mycephfs/ directory exists and is accessible. Mine existed, yet the mount still failed with No such file or directory. Restarting the OSD service unexpectedly fixed it, and CephFS could then be mounted normally.

[~]# systemctl restart ceph-osd.target

[~]# mount -t ceph 10.0.86.246:6789,10.0.86.221:6789,10.0.86.253:6789:/ /mnt/mycephfs/ -o name=admin,secret=AQBAI/JbROMoMRAAbgRshBRLLq953AVowLgJPw==

The mount succeeded!

[~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda2 48G 7.5G 41G 16% /

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 2.0G 8.0K 2.0G 1% /dev/shm

tmpfs 2.0G 17M 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

tmpfs 2.0G 24K 2.0G 1% /var/lib/ceph/osd/ceph-0

tmpfs 396M 0 396M 0% /run/user/0

10.0.86.246:6789,10.0.86.221:6789,