Java crawler: scraping web page data into a database and removing duplicates

阿新 • Published: 2018-12-13

pom.xml dependencies

<!-- HttpClient support -->
<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>httpclient</artifactId>
    <version>4.5.2</version>
</dependency>
<!-- jsoup support -->
<dependency>
    <groupId>org.jsoup</groupId>
    <artifactId>jsoup</artifactId>
    <version>1.10.1</version>
</dependency>
<!-- logging support -->
<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.16</version>
</dependency>
<!-- MySQL JDBC driver -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.44</version>
</dependency>
<!-- Ehcache support -->
<dependency>
    <groupId>net.sf.ehcache</groupId>
    <artifactId>ehcache</artifactId>
    <version>2.10.3</version>
</dependency>
<!-- Commons IO support -->
<dependency>
    <groupId>commons-io</groupId>
    <artifactId>commons-io</artifactId>
    <version>2.5</version>
</dependency>

ehcache.xml (cache configuration)

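<?xml version="1.0" encoding="UTF-8"?>
<ehcache>
    <!--
        Disk store: objects in the cache that are not currently needed are moved to disk,
        similar to virtual memory in Windows.
        path: the directory on disk where these objects are stored
    -->
    <diskStore path="E:\Temp\ReptileCache" />
    <!--
        defaultCache: the default cache settings; unless configured otherwise, every object is handled with them
        maxElementsInMemory: the maximum number of elements kept in memory
        eternal: whether elements never expire
        overflowToDisk: when the number of in-memory elements reaches maxElementsInMemory, Ehcache writes elements to disk
    -->
    <defaultCache maxElementsInMemory="1" eternal="true" overflowToDisk="true"/>
    <!-- cnblog: the cache the crawler uses to remember URLs it has already stored -->
    <cache name="cnblog" maxElementsInMemory="1" diskPersistent="true" eternal="true" overflowToDisk="true"/>
</ehcache>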
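The `cnblog` cache is what makes deduplication last between runs: the crawler stores every inserted URL as a key, and the Ehcache documentation describes `diskPersistent` as keeping the disk store across JVM restarts. For readers who want the same idea without Ehcache, here is a minimal plain-JDK sketch (not Ehcache's API and not the author's code): a hypothetical `SeenUrlStore` keeps URLs in a `HashSet` and appends each new one to a text file whose path you choose.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical stand-in for the "cnblog" cache: remembers crawled URLs across restarts. */
final class SeenUrlStore {
    private final Path file;
    private final Set<String> seen = new HashSet<>();

    SeenUrlStore(Path file) throws IOException {
        this.file = file;
        // Reload the URLs recorded by earlier runs, one per line.
        if (Files.exists(file)) {
            seen.addAll(Files.readAllLines(file, StandardCharsets.UTF_8));
        }
    }

    boolean contains(String url) {
        return seen.contains(url);
    }

    /** Records a URL in memory and appends it to the file; returns false if it was already known. */
    boolean add(String url) throws IOException {
        if (!seen.add(url)) {
            return false;
        }
        Files.write(file, (url + System.lineSeparator()).getBytes(StandardCharsets.UTF_8),
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        return true;
    }
}
```

Unlike the Ehcache setup, this keeps every URL in memory, which is fine for thousands of links but not for millions.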

crawler.properties: database settings and file paths

dbUrl=jdbc:mysql://localhost:3306/db_blogs?characterEncoding=UTF-8
dbUserName=<your database username>
dbPassword=<your database password>
jdbcName=com.mysql.jdbc.Driver
ehcacheXmlPath=ehcache.xml
# directory where downloaded images are stored
blogImages=E://Temp/blogImages/

Log file configuration: log4j.properties

log4j.rootLogger=INFO, stdout,D

#Console
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.Target = System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
#log4j.appender.stdout.layout.ConversionPattern=[%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

#D
log4j.appender.D = org.apache.log4j.RollingFileAppender
log4j.appender.D.File = E://Temp/log4j/crawler/log.log
log4j.appender.D.MaxFileSize=100KB
log4j.appender.D.MaxBackupIndex=100
log4j.appender.D.Append = true
log4j.appender.D.layout = org.apache.log4j.PatternLayout
log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

Next, the helper classes used by the crawler:

package com.hw.util;

import java.text.SimpleDateFormat;
import java.util.Date;

/**
 * Date utility class
 * @author user
 */
public class DateUtil {

    /**
     * Returns the current date as a year/month/day path, e.g. 2018/12/13
     * @return the date path
     * @throws Exception
     */
    public static String getCurrentDatePath() throws Exception {
        Date date = new Date();
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy/MM/dd");
        return sdf.format(date);
    }

    public static void main(String[] args) {
        try {
            System.out.println(getCurrentDatePath());
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
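`getCurrentDatePath()` only formats today's date, but it matters because the commented-out `DownLoadImgList` method in the crawler uses it to create one image folder per day, and takes the file extension from the `Content-Type` header with `split("/")[1]`. For a header such as `image/jpeg;charset=UTF-8` that split returns `jpeg;charset=UTF-8`. A hypothetical `ImagePaths` helper using `java.time` could build the same path while stripping header parameters; the `bin` fallback extension is an assumption of this sketch.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Locale;
import java.util.UUID;

/** Hypothetical helper combining DateUtil.getCurrentDatePath() with the file naming in DownLoadImgList. */
final class ImagePaths {
    // Unlike SimpleDateFormat, DateTimeFormatter is immutable and thread-safe, so one instance can be shared.
    private static final DateTimeFormatter DATE_PATH = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    /** For example 2018-12-03 becomes "2018/12/03". */
    static String datePath(LocalDate date) {
        return date.format(DATE_PATH);
    }

    /** "image/jpeg; charset=UTF-8" gives "jpeg", "image/svg+xml" gives "svg", anything unusable gives "bin". */
    static String extensionFor(String contentType) {
        if (contentType == null) {
            return "bin";
        }
        // Drop parameters such as "; charset=UTF-8", then keep the subtype after '/'.
        String mime = contentType.split(";", 2)[0].trim().toLowerCase(Locale.ROOT);
        int slash = mime.indexOf('/');
        if (slash < 0 || slash == mime.length() - 1) {
            return "bin";
        }
        String subtype = mime.substring(slash + 1);
        // Structured suffixes such as "svg+xml" keep only the part before '+'.
        int plus = subtype.indexOf('+');
        return plus > 0 ? subtype.substring(0, plus) : subtype;
    }

    /** baseDir + yyyy/MM/dd + "/" + random UUID + "." + extension */
    static String localFileName(String baseDir, LocalDate date, String contentType) {
        return baseDir + datePath(date) + "/" + UUID.randomUUID() + "." + extensionFor(contentType);
    }

    public static void main(String[] args) {
        System.out.println(datePath(LocalDate.of(2018, 12, 3)));       // 2018/12/03
        System.out.println(extensionFor("image/jpeg; charset=UTF-8")); // jpeg
        System.out.println(localFileName("E://Temp/blogImages/", LocalDate.now(), "image/png"));
    }
}
```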

package com.hw.util;

import java.sql.Connection;
import java.sql.DriverManager;

/**
 * Database utility class
 * @author user
 */
public class DbUtil {

    /**
     * Opens a connection using the settings in crawler.properties
     * @return the connection
     * @throws Exception
     */
    public Connection getCon() throws Exception {
        Class.forName(PropertiesUtil.getValue("jdbcName"));
        Connection con = DriverManager.getConnection(PropertiesUtil.getValue("dbUrl"), PropertiesUtil.getValue("dbUserName"), PropertiesUtil.getValue("dbPassword"));
        return con;
    }

    /**
     * Closes the connection
     * @param con
     * @throws Exception
     */
    public void closeCon(Connection con) throws Exception {
        if (con != null) {
            con.close();
        }
    }

    public static void main(String[] args) {
        DbUtil dbUtil = new DbUtil();
        // try-with-resources closes the test connection again
        try (Connection con = dbUtil.getCon()) {
            System.out.println("Database connection succeeded");
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("Database connection failed");
        }
    }
}

package com.hw.util;

import java.io.IOException;
import java.io.InputStream;
import java.util.Properties;

/**
 * Properties utility class
 * @author user
 */
public class PropertiesUtil {

    /**
     * Returns the value for a key in crawler.properties
     * @param key
     * @return the value, or null if the key is missing
     */
    public static String getValue(String key) {
        Properties prop = new Properties();
        // try-with-resources closes the stream after loading
        try (InputStream in = PropertiesUtil.class.getResourceAsStream("/crawler.properties")) {
            prop.load(in);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return prop.getProperty(key);
    }
}
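`PropertiesUtil.getValue` re-reads crawler.properties on every call and uses `Properties.load(InputStream)`, which reads the file as ISO 8859-1, so non-ASCII values (for example a password containing Chinese characters) come back garbled. A sketch of an alternative, assuming the file is saved as UTF-8: the hypothetical `CrawlerConfig` loads the file once, lazily, through a UTF-8 `Reader`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Properties;

/** Hypothetical variant of PropertiesUtil: reads crawler.properties once, as UTF-8. */
final class CrawlerConfig {
    // Holder idiom: the file is loaded on the first getValue() call, exactly once.
    private static final class Holder {
        static final Properties PROPS = load("/crawler.properties");
    }

    static String getValue(String key) {
        return Holder.PROPS.getProperty(key);
    }

    static Properties load(String resource) {
        // A null resource is allowed in try-with-resources; close() is simply skipped.
        try (InputStream in = CrawlerConfig.class.getResourceAsStream(resource)) {
            if (in == null) {
                throw new IllegalStateException(resource + " not found on the classpath");
            }
            return parse(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static Properties parse(InputStream in) throws IOException {
        Properties props = new Properties();
        // load(InputStream) assumes ISO 8859-1; a UTF-8 Reader keeps non-ASCII values intact.
        Reader reader = new InputStreamReader(in, StandardCharsets.UTF_8);
        props.load(reader);
        return props;
    }
}
```

Callers would use `CrawlerConfig.getValue("dbUrl")` exactly like the original helper; a missing file now fails loudly instead of surfacing later as a `NullPointerException`.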

The crawler class:

package com.hw.crawler;

import com.hw.util.DateUtil;
import com.hw.util.DbUtil;
import com.hw.util.PropertiesUtil;
import net.sf.ehcache.Cache;
import net.sf.ehcache.CacheManager;
import net.sf.ehcache.Status;
import org.apache.commons.io.FileUtils;
import org.apache.http.HttpEntity;
import org.apache.http.client.ClientProtocolException;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.apache.log4j.Logger;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

import java.io.File;
import java.io.IOException;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.UUID;

/**
 * @program: Maven
 * @description: crawler class
 * @author: hw
 * @create: 2018-12-03 20:12
 **/

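public class BlogCrawlerStarter {

    // number of blog posts stored since the crawler was started
    private static int index = 0;

    // logger
    private static Logger logger = Logger.getLogger(BlogCrawlerStarter.class);

    // URL of the list page to crawl (set this to the site of your choice)
    private static String HOMEURL = "<home page URL of your choice>";

    private static CloseableHttpClient httpClient;

    // database connection
    private static Connection con;

    // cache of URLs that have already been stored
    private static CacheManager cacheManager;
    private static Cache cache;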

    /**
     * @Description: fetch the home page with HttpClient
     * @Param: []
     * @return: void
     * @Author: hw
     * @Date: 2018/12/3
     */
    public static void parseHomePage() throws IOException {
        logger.info("Start crawling home page " + HOMEURL);
        // create the cache manager
        cacheManager = CacheManager.create(PropertiesUtil.getValue("ehcacheXmlPath"));
        // the cache that holds the crawled URLs
        cache = cacheManager.getCache("cnblog");
        // the client acts like a browser
        httpClient = HttpClients.createDefault();
        HttpGet httpGet = new HttpGet(HOMEURL);
        // connect timeout 5 s, socket (read) timeout 8 s
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            if (response == null) {
                // no response
                logger.info(HOMEURL + " returned no response");
            } else if (response.getStatusLine().getStatusCode() == 200) {
                // status OK: read the response entity as UTF-8
                HttpEntity entity = response.getEntity();
                String s = EntityUtils.toString(entity, "utf-8");
                parseHomePageContent(s);
            } else {
                logger.info(HOMEURL + " returned HTTP status " + response.getStatusLine().getStatusCode());
            }
        } catch (ClientProtocolException e) {
            logger.error(HOMEURL + "-ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(HOMEURL + "-IOException", e);
        } finally {
            // close the response and the client
            if (response != null) {
                response.close();
            }
            if (httpClient != null) {
                httpClient.close();
            }
        }
        // write the cache to disk so the crawled URLs survive a restart
        if (cache.getStatus() == Status.STATUS_ALIVE) {
            cache.flush();
        }
        cacheManager.shutdown();
        logger.info("Finished " + HOMEURL);
    }

    /**
     * @Description: parse the page with the jsoup HTML parser and extract the data we want (blog titles and links)
     * @Param: [s]
     * @return: void
     * @Author: hw
     * @Date: 2018/12/3
     */
    private static void parseHomePageContent(String s) {
        Document doc = Jsoup.parse(s);
        // the <a> tags of the blog titles in the list
        Elements select = doc.select("#feedlist_id .list_con .title h2 a");
        for (Element element : select) {
            // blog link
            String href = element.attr("href");
            if (href == null || href.equals("")) {
                logger.error("Blog link is empty, skipping");
                continue;
            }
            // the cache already contains this URL
            if (cache.get(href) != null) {
                logger.info("Already stored in the database, skipping; posts crawled so far: " + index);
                continue;
            }
            // fetch the blog content through its link
            parseBlogUrl(href);
        }
    }

    /**
     * @Description: fetch a blog page through its link
     * @Param: [blogUrl]
     * @return: void
     * @Author: hw
     * @Date: 2018/12/3
     */
    private static void parseBlogUrl(String blogUrl) {
        logger.info("Start crawling blog page " + blogUrl);
        // reuse the client opened in parseHomePage(); it is closed there after the whole list is processed
        HttpGet httpGet = new HttpGet(blogUrl);
        // connect timeout 5 s, socket (read) timeout 8 s
        RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
        httpGet.setConfig(config);
        CloseableHttpResponse response = null;
        try {
            response = httpClient.execute(httpGet);
            // no response
            if (response == null) {
                logger.info(blogUrl + " returned no response");
                return;
            }
            // status OK: read the response entity as UTF-8
            if (response.getStatusLine().getStatusCode() == 200) {
                HttpEntity entity = response.getEntity();
                String blogContent = EntityUtils.toString(entity, "utf-8");
                parseBlogContent(blogContent, blogUrl);
            }
        } catch (ClientProtocolException e) {
            logger.error(blogUrl + "-ClientProtocolException", e);
        } catch (IOException e) {
            logger.error(blogUrl + "-IOException", e);
        } finally {
            // close the response
            if (response != null) {
                try {
                    response.close();
                } catch (IOException e) {
                    logger.error(blogUrl + "-IOException", e);
                }
            }
        }
        logger.info("Finished blog page " + blogUrl);
    }

    /**
     * @Description: parse a blog page and extract its title and full content
     * @Param: [blogContent, url]
     * @return: void
     * @Author: hw
     * @Date: 2018/12/3
     */
    private static void parseBlogContent(String blogContent, String url) throws IOException {
        Document parse = Jsoup.parse(blogContent);
        // title
        Elements title = parse.select("#mainBox main .blog-content-box .article-header-box .article-header .article-title-box h1");
        if (title.size() == 0) {
            logger.info("Blog title is empty, not adding to the database");
            return;
        }
        String titles = title.get(0).html();
        // content
        Elements content_views = parse.select("#content_views");
        if (content_views.size() == 0) {
            logger.info("Content is empty, not adding to the database");
            return;
        }
        String content = content_views.get(0).html();
        // images (disabled): download them and point the content at the local copies
        // Elements imgele = parse.select("img");
        // List<String> imglist = new LinkedList<>();
        // for (Element element : imgele) {
        //     // add the image URL to the list
        //     imglist.add(element.attr("src"));
        // }
        // if (imglist.size() > 0) {
        //     Map<String, String> replaceUrlMap = DownLoadImgList(imglist);
        //     content = replaceContent(content, replaceUrlMap);
        // }
        String sql = "insert into t_article values(null,?,?,null,now(),0,0,null,?,0,null)";
        // try-with-resources closes the statement after the insert
        try (PreparedStatement ps = con.prepareStatement(sql)) {
            ps.setObject(1, titles);
            ps.setObject(2, content);
            ps.setObject(3, url);
            if (ps.executeUpdate() == 0) {
                logger.info("Failed to insert the crawled blog into the database");
            } else {
                index++;
                // add the URL to the cache so it is skipped next time
                cache.put(new net.sf.ehcache.Element(url, url));
                logger.info("Crawled blog inserted into the database; posts crawled so far: " + index);
            }
        } catch (SQLException e) {
            logger.error("Failed to insert " + url, e);
        }
    }

    /**
     * @Description: rewrite the scraped blog content, replacing the original image URLs with the local image paths
     * @Param: [blogContent, replaceUrlMap]
     * @return: java.lang.String
     * @Author: hw
     * @Date: 2018/12/3
     */
    private static String replaceContent(String blogContent, Map<String, String> replaceUrlMap) {
        Set<Map.Entry<String, String>> entries = replaceUrlMap.entrySet();
        for (Map.Entry<String, String> entry : entries) {
            blogContent = blogContent.replace(entry.getKey(), entry.getValue());
        }
        return blogContent;
    }

    /**
     * @Description: download the images and store them locally
     * @Param: [imglist]
     * @return: java.util.Map<java.lang.String, java.lang.String> (remote image URL to local file path)
     * @Author: hw
     * @Date: 2018/12/3
     */
    // private static Map<String, String> DownLoadImgList(List<String> imglist) throws IOException {
    //     Map<String, String> replaceMap = new HashMap<>();
    //     for (String imgurl : imglist) {
    //         // reuses the shared httpClient, which is still open while parseHomePage() runs
    //         HttpGet httpGet = new HttpGet(imgurl);
    //         // connect timeout 5 s, socket (read) timeout 8 s
    //         RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();
    //         httpGet.setConfig(config);
    //         CloseableHttpResponse response = null;
    //         try {
    //             response = httpClient.execute(httpGet);
    //             if (response == null) {
    //                 logger.info(imgurl + " returned no response");
    //             } else if (response.getStatusLine().getStatusCode() == 200) {
    //                 HttpEntity entity = response.getEntity();
    //                 // base directory for images
    //                 String blogImages = PropertiesUtil.getValue("blogImages");
    //                 // one folder per day: yyyy/MM/dd
    //                 String data = DateUtil.getCurrentDatePath();
    //                 String uuid = UUID.randomUUID().toString();
    //                 // file extension taken from the Content-Type header, e.g. image/png gives png
    //                 String subfix = entity.getContentType().getValue().split("/")[1];
    //                 // final local path
    //                 String fileName = blogImages + data + "/" + uuid + "." + subfix;
    //                 FileUtils.copyInputStreamToFile(entity.getContent(), new File(fileName));
    //                 // imgurl is the remote image URL, fileName the local path
    //                 replaceMap.put(imgurl, fileName);
    //             }
    //         } catch (ClientProtocolException e) {
    //             logger.error(imgurl + "-ClientProtocolException", e);
    //         } catch (IOException e) {
    //             logger.error(imgurl + "-IOException", e);
    //         } catch (Exception e) {
    //             logger.error(imgurl + "-Exception", e);
    //         } finally {
    //             // close the response
    //             if (response != null) {
    //                 response.close();
    //             }
    //         }
    //     }
    //     return replaceMap;
    // }

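    /**
     * @Description: start crawling
     * @Param: []
     * @return: void
     * @Author: hw
     * @Date: 2018/12/3
     */
    public static void start() {
        while (true) {
            DbUtil dbUtil = new DbUtil();
            try {
                // connect to the database, then crawl one round
                con = dbUtil.getCon();
                parseHomePage();
            } catch (Exception e) {
                logger.error("Crawl round failed (database connection or home page)", e);
            } finally {
                if (con != null) {
                    try {
                        con.close();
                    } catch (SQLException e) {
                        logger.error("Error while closing the database connection", e);
                    }
                }
            }
            try {
                // wait 45 seconds before the next round
                Thread.sleep(1000 * 45);
            } catch (InterruptedException e) {
                logger.info("Crawler thread interrupted, stopping", e);
                // restore the interrupt flag and stop instead of looping again
                Thread.currentThread().interrupt();
                return;
            }
        }
    }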

    public static void main(String[] args) {
        start();
    }
}
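The deduplication in `parseHomePageContent` comes down to two checks made before any blog page is fetched: skip links that are empty, and skip links already in the cache. The filtering step can be sketched with the JDK alone, with a `Set<String>` standing in for the Ehcache cache; `LinkFilter` and `linksToCrawl` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sketch of the duplicate checks in parseHomePageContent. */
final class LinkFilter {
    /** Returns the links that still need crawling, in page order, each at most once. */
    static List<String> linksToCrawl(Collection<String> hrefs, Set<String> alreadyCrawled) {
        Set<String> pending = new LinkedHashSet<>(); // keeps page order and drops repeats on the same page
        for (String href : hrefs) {
            if (href == null || href.isEmpty()) {
                continue; // the "empty link" check
            }
            if (alreadyCrawled.contains(href)) {
                continue; // the cache.get(href) != null check
            }
            pending.add(href);
        }
        return new ArrayList<>(pending);
    }

    public static void main(String[] args) {
        Set<String> crawled = new HashSet<>(Arrays.asList("https://example.com/b"));
        List<String> page = Arrays.asList("https://example.com/a", "", "https://example.com/b", "https://example.com/a");
        System.out.println(linksToCrawl(page, crawled)); // [https://example.com/a]
    }
}
```

In the crawler itself a URL goes into the cache only after `executeUpdate` succeeds, so a post whose insert failed is tried again in the next round. If the cache files are deleted, nothing stops duplicates any more; a unique index on the URL column of `t_article` (its name depends on your schema) would be a database-side safeguard.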

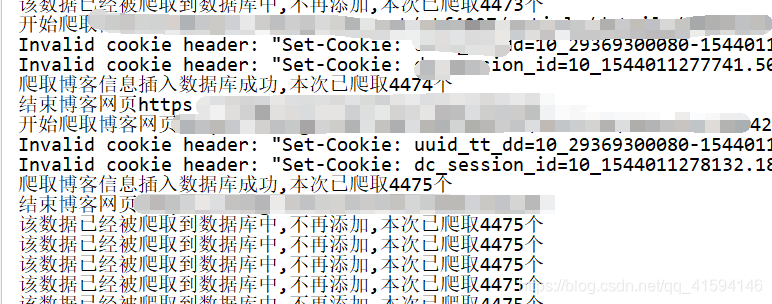

The screenshot above shows the result.
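`start()` repeats the crawl with `while (true)` and a 45-second `Thread.sleep`. An alternative sketch uses `ScheduledExecutorService.scheduleWithFixedDelay` from the JDK, which waits the given delay after each round finishes, as the loop does, and can be stopped with `shutdown()`. `CrawlScheduler` and `every` are hypothetical names.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical replacement for the while (true) + Thread.sleep loop in start(). */
final class CrawlScheduler {
    /**
     * Runs task immediately, then again each time {@code delay} has passed since the previous run finished.
     * Call shutdown() on the returned executor to stop.
     */
    static ScheduledExecutorService every(Runnable task, long delay, TimeUnit unit) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(() -> {
            try {
                task.run();
            } catch (RuntimeException e) {
                // An exception escaping the task would cancel every later run, so report it and keep going.
                System.err.println("crawl round failed: " + e);
            }
        }, 0, delay, unit);
        return scheduler;
    }
}
```

In the crawler this could be called as `CrawlScheduler.every(BlogCrawlerStarter::crawlOnce, 45, TimeUnit.SECONDS)`, where `crawlOnce` would be a new static method holding the body of the original loop (connect, `parseHomePage()`, close). The `try`/`catch` inside the scheduled task matters: if a run throws, `scheduleWithFixedDelay` suppresses all later runs.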