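[OpenGL] Screen-space post-processing: depth of field

Development environment: Qt, OpenGL

The cube texture is the one that ships with Qt's official Cube tutorial.

Introducing the concept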

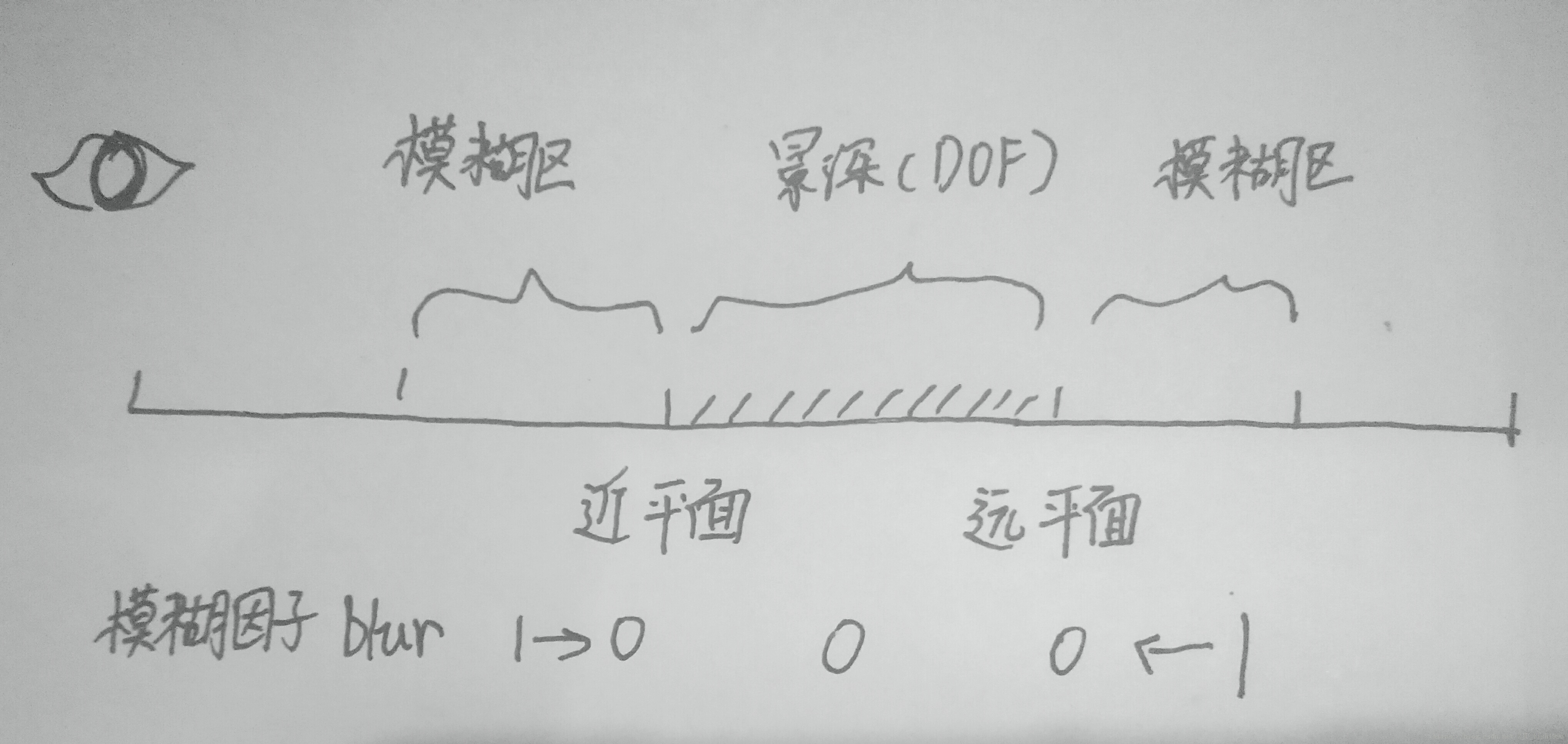

景深是攝像中的術語。相機聚焦後,鏡頭前遠近平面之間的物體能夠清晰成像,這一段清晰成像的距離也就是景深,我們可以從下圖更直觀地理解景深。

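To simulate depth of field when rendering, an intuitive approach is to take each object's distance from the camera and, from where that distance falls relative to the near and far planes, decide whether the object is sharp or blurred and how strongly to blur it.

Blurring objects in a scene is really an image-space technique. It becomes very convenient if we can render the scene into a texture and then process that texture pixel by pixel. Screen-space post-processing lets us do exactly that.

Blurring is a common technique. The most familiar variant, Gaussian blur, uses a filter kernel to mix neighboring pixels in fixed proportions. During post-processing, however, we no longer know each pixel's depth relative to the camera, so we cannot derive a blur factor from depth. A simple way to obtain it is to compute the depth while rendering the scene to the texture, derive the blur factor from it, and write that value into the texture's alpha channel. The second pass can then read it directly.

In short, depth of field is a relatively expensive effect: it takes at least two passes.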

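Framebuffers

OpenGL natively supports rendering a scene to a texture. The basic steps are:

(1) Create a framebuffer object.

(2) Create a texture the same size as the screen.

(3) Attach the texture to the framebuffer.

(4) When rendering, bind the framebuffer that should receive the output. When we no longer want to render into it, we unbind it again.

So we need to create two objects in total, a framebuffer object and a texture, and establish the association between them. Next, let's look at the OpenGL calls for each step.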

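1. Create the framebuffer

glGenFramebuffers(1, &fBO);

glBindFramebuffer(GL_FRAMEBUFFER, fBO);

The code above creates a single framebuffer, stores its handle in the variable fBO (of type GLuint), and then binds that framebuffer to the current context.

2. Create the texture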

glGenTextures(1, &sceneBuffer);

glBindTexture(GL_TEXTURE_2D, sceneBuffer);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, screenX, screenY, 0, GL_RGBA, GL_UNSIGNED_BYTE, nullptr);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glBindTexture(GL_TEXTURE_2D, 0);

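This works much like creating an ordinary texture: specify the basic parameters, then the minification and magnification filters. Two points deserve attention:

(1) The texture is the same size as the screen (*).

(2) The texture uses the RGBA format so that the depth-derived data can be written to the alpha channel.

From then on the scene is written into this texture, which can be referenced through sceneBuffer.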
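Because the texture is allocated with GL_RGBA and GL_UNSIGNED_BYTE, the alpha channel typically has 8 bits, so the blur factor stored there can take at most 256 distinct values. The sketch below mimics that storage on the CPU. The helper names encodeUnorm8 and decodeUnorm8 are hypothetical, and round-to-nearest is an assumption about how the value is converted.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Store a value in [0, 1] the way an 8-bit normalized channel would
// (assumption: the conversion rounds to the nearest representable level).
std::uint8_t encodeUnorm8(float value)
{
    assert(!std::isnan(value));
    const float clamped = std::min(std::max(value, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
}

// Recover the value a shader would read back from that channel.
float decodeUnorm8(std::uint8_t stored)
{
    return static_cast<float>(stored) / 255.0f;
}
```

Under these assumptions the round trip changes the blur factor by at most half a level (0.5 / 255), which is far below what is visible in a blur weight.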

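(*) This example ignores window resizing. If resizing had to be handled, creating and destroying the texture on every render would be expensive. My idea is to create one fairly large texture up front and, in the second pass that draws it onto the quad, adjust the texture's UVs. For example, when the window is half the width and half the height of the texture, the UVs would run up to (0.5, 0.5). I have not tried this, so I am not sure it works.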
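The idea in the footnote is untested, so the following is only a sketch of the arithmetic it implies. It assumes the first pass renders into the window-sized lower-left corner of the oversized texture (for example by setting glViewport to the window size), and uvScale is a hypothetical helper name.

```cpp
#include <cassert>

struct Vec2 { float x, y; };

// Fraction of an oversized render texture that the current window covers.
// Multiplying the quad's texture coordinates by this value keeps sampling
// inside the region that was actually rendered.
Vec2 uvScale(int windowW, int windowH, int textureW, int textureH)
{
    assert(windowW > 0 && windowH > 0);
    assert(windowW <= textureW && windowH <= textureH);
    return { static_cast<float>(windowW) / static_cast<float>(textureW),
             static_cast<float>(windowH) / static_cast<float>(textureH) };
}
```

With a 1280x960 texture and a 640x480 window this gives (0.5, 0.5), matching the example in the footnote. One thing to watch for is that blur samples taken near the edge of the rendered region could read texels outside it, so the offset coordinates would probably need clamping to the scaled range.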

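3. Attach the texture to the framebuffer

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, sceneBuffer, 0);

4. Select the framebuffer for rendering

glBindFramebuffer(GL_FRAMEBUFFER, fBO); // subsequent draw calls write to the framebuffer

// ... Render_Pass0() ...

glBindFramebuffer(GL_FRAMEBUFFER, 0); // stop writing to the framebuffer

// ... Render_Pass1() ...

The code above runs in paintGL, which is called every frame.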

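Blur effect

The kernel used in this example is taken from the book 《DirectX 3D HLSL高階例項精講》. It holds 12 entries, each a texture-coordinate offset. Using these offsets we fetch 12 pixels around the current pixel and average them, which produces a blurred image. Note that the kernel stores absolute offsets, so we divide them by the screen size to keep the result independent of the screen dimensions.

Then, using the blur factor (which ranges from 0 to 1), we interpolate between the original image and the blurred image to obtain the final color.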
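The two steps above can be sketched on the CPU as follows. This is a minimal illustration rather than the shader itself: Vec2, Rgb, toTexCoordOffset and blendWithBlur are hypothetical names, and the samples are passed in directly instead of being fetched from a texture.

```cpp
#include <cassert>
#include <vector>

struct Vec2 { float x, y; };
struct Rgb  { float r, g, b; };

// Convert a kernel offset measured in pixels into texture-coordinate units.
Vec2 toTexCoordOffset(Vec2 pixelOffset, Vec2 screenSize)
{
    assert(screenSize.x > 0.0f && screenSize.y > 0.0f);
    return { pixelOffset.x / screenSize.x, pixelOffset.y / screenSize.y };
}

// Average the neighboring samples, then interpolate from the original
// color toward that average by blurFactor (0 = sharp, 1 = fully blurred).
Rgb blendWithBlur(Rgb original, const std::vector<Rgb>& samples, float blurFactor)
{
    assert(!samples.empty());
    Rgb mean{0.0f, 0.0f, 0.0f};
    for (const Rgb& s : samples) {
        mean.r += s.r;
        mean.g += s.g;
        mean.b += s.b;
    }
    const float n = static_cast<float>(samples.size());
    mean = { mean.r / n, mean.g / n, mean.b / n };
    return { original.r + (mean.r - original.r) * blurFactor,
             original.g + (mean.g - original.g) * blurFactor,
             original.b + (mean.b - original.b) * blurFactor };
}
```

On a 640x480 screen an offset of one pixel along x becomes 1/640 in texture coordinates. A blur factor of 0 returns the original color unchanged, while 1 returns the plain average of the samples.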

Shaders

1. First pass

Vertex Shader:

uniform mat4 ProjectMatrix;

uniform mat4 ModelViewMatrix;

attribute vec4 a_position;

attribute vec2 a_texcoord;

varying vec2 v_texcoord;

varying float v_depth;

void main()

{

gl_Position = ModelViewMatrix * a_position; // transform to view space

v_texcoord = a_texcoord;

v_depth = gl_Position.z; // record the view-space depth

gl_Position = ProjectMatrix * gl_Position; // transform to clip space

}

The only thing of note here is that the position must be transformed into camera space before the depth is recorded.

Fragment Shader:

uniform sampler2D texture;

varying float v_depth;

varying vec2 v_texcoord;

void main(void)

{

gl_FragColor = texture2D(texture, v_texcoord);

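float blur = 0.0;

float near_distance = 10.0; // blur falloff range in front of the near plane

float far_distance = 10.0; // blur falloff range beyond the far plane

float near_plane = -20.0; // near plane

float far_plane = -25.0; // far plane

// compute the blur factor from the depth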

if(v_depth <= near_plane && v_depth >= far_plane)

{

blur = 0.0;

}

else if(v_depth > near_plane)

{

blur = clamp(v_depth, near_plane, near_plane + near_distance);

blur = (blur - near_plane) / near_distance;

}

else if(v_depth < far_plane)

{

blur = clamp(v_depth, far_plane - far_distance, far_plane);

blur = (far_plane - blur) / far_distance;

}

// 將模糊因子寫入alpha通道

gl_FragColor.a = blur;

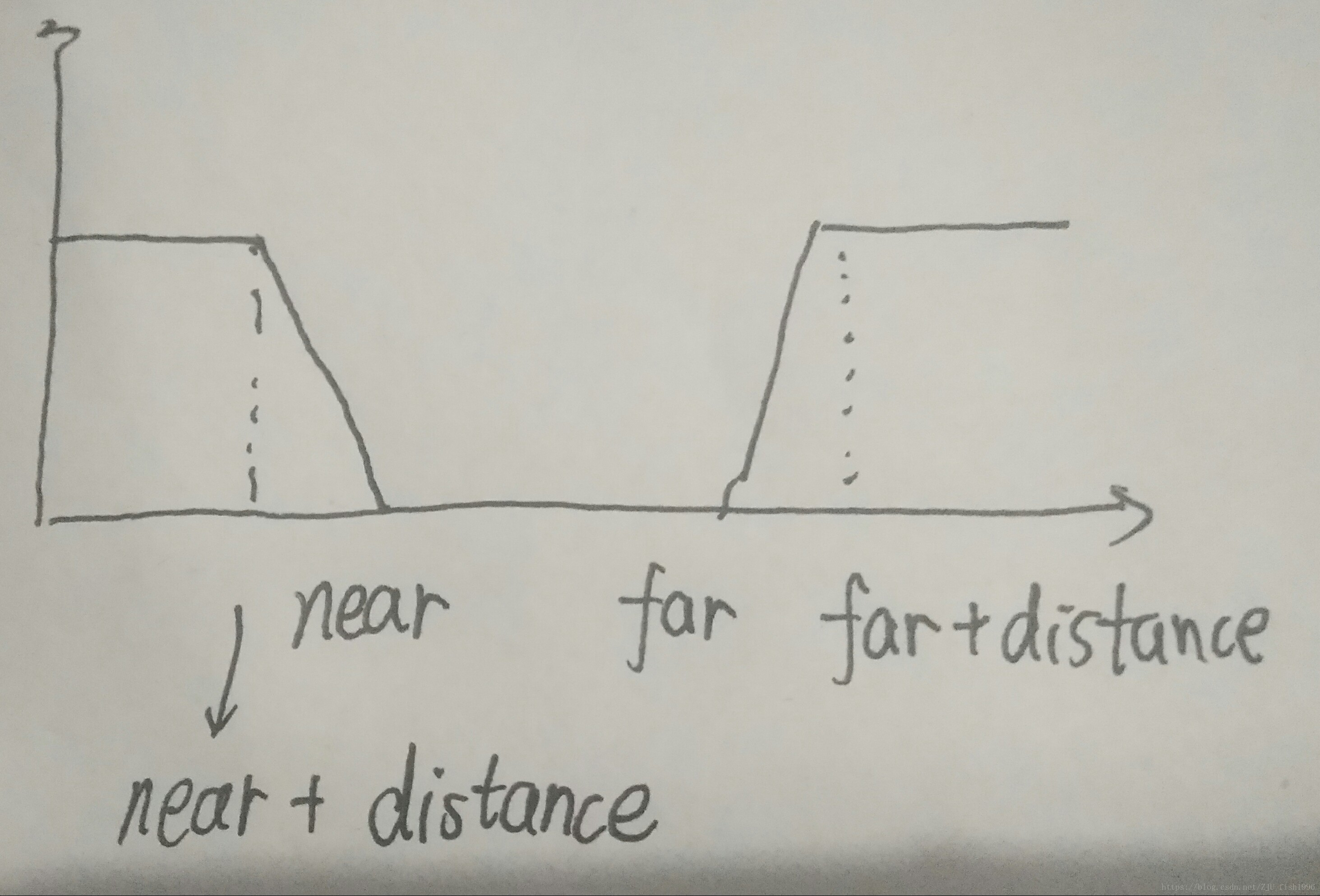

}首先,我們需要指定一下近、遠平面以及衰減範圍,可以在上文中的景深概念圖中找到它們具體的含義,衰減範圍即對應圖中的模糊區,在這一區域中,模糊因子往靠近遠/近平面的方向從1遞減到0,在景深範圍內為0,也就代表這一區域是完全清晰的。

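Both planes have negative values here. In this setup the z axis of the view matrix points from the object toward the eye: z is 0 at the eye, objects in front of the eye have negative z, and the farther an object is from the eye, the smaller its z. These values depend on the concrete implementation and have to be chosen case by case.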
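To see where the negative values come from, the view-space z of a point can be computed by hand. The sketch below assumes a camera described only by an eye position and a look-at target. Vec3 and viewSpaceZ are hypothetical names, not part of the project.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 subtract(Vec3 a, Vec3 b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// View-space z of `point` for a camera at `eye` looking at `target`.
// The view-space z axis points from the target back toward the eye,
// so everything in front of the camera ends up with a negative z.
float viewSpaceZ(Vec3 eye, Vec3 target, Vec3 point)
{
    Vec3 n = subtract(eye, target);
    const float length = std::sqrt(dot(n, n));
    assert(length > 0.0f);
    n = { n.x / length, n.y / length, n.z / length };
    return dot(n, subtract(point, eye));
}
```

With the eye at (0, 0, 20) looking at the origin, the origin has a view-space z of -20, which is exactly the near_plane value used in the shader. A point at (0, 0, 25), behind the camera, comes out positive.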

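The blur-factor computation looks long, but it merely evaluates a piecewise function. This example uses linear interpolation for convenience. In practice other curves can be used.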
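Written as a plain function, the piecewise shape is easier to see. The sketch below mirrors the linear version on the CPU. smoothBlurFactor is just one possible alternative curve (the smoothstep polynomial) and is an assumption, not something the original shader uses.

```cpp
#include <algorithm>
#include <cassert>

// Piecewise-linear blur factor. View-space depths are negative in front of
// the camera, so nearPlane is the larger (less negative) of the two planes.
float blurFactor(float depth, float nearPlane, float farPlane,
                 float nearRange, float farRange)
{
    assert(nearPlane >= farPlane && nearRange > 0.0f && farRange > 0.0f);
    if (depth > nearPlane) {
        // Closer than the sharp range: ramp from 0 to 1 over nearRange.
        return std::min((depth - nearPlane) / nearRange, 1.0f);
    }
    if (depth < farPlane) {
        // Farther than the sharp range: ramp from 0 to 1 over farRange.
        return std::min((farPlane - depth) / farRange, 1.0f);
    }
    return 0.0f; // inside the depth of field: fully sharp
}

// Same segments, but with a smoothstep-shaped ramp instead of a linear one.
float smoothBlurFactor(float depth, float nearPlane, float farPlane,
                       float nearRange, float farRange)
{
    const float t = blurFactor(depth, nearPlane, farPlane, nearRange, farRange);
    return t * t * (3.0f - 2.0f * t);
}
```

With the shader's constants (planes at -20 and -25, both ranges 10), a depth of -22 gives 0, -15 and -30 give 0.5, and anything closer than -10 or farther than -35 saturates at 1.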

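P.S. On whether to compute the blur factor in the vertex shader or the fragment shader: it is computed per fragment here mainly for accuracy. The concern is that if two vertices fall on different segments of the piecewise function, the interpolated result may be wrong. If the depth-of-field computation is simplified, or the quality requirements are modest, it can be computed per vertex instead.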
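A small numeric experiment illustrates the concern. The sketch below hard-codes the shader's constants in a hypothetical blurAt helper and compares two orders of operation along an edge between two vertices, with t taken as a linear parameter along the edge in view space.

```cpp
#include <algorithm>
#include <cassert>

// Blur factor with the article's constants: sharp between -20 and -25,
// with a falloff range of 10 on either side.
float blurAt(float depth)
{
    const float nearPlane = -20.0f, farPlane = -25.0f, range = 10.0f;
    if (depth > nearPlane) return std::min((depth - nearPlane) / range, 1.0f);
    if (depth < farPlane)  return std::min((farPlane - depth) / range, 1.0f);
    return 0.0f;
}

// Per-vertex: evaluate at both endpoints, then interpolate the results.
float perVertexBlur(float depthA, float depthB, float t)
{
    assert(t >= 0.0f && t <= 1.0f);
    return (1.0f - t) * blurAt(depthA) + t * blurAt(depthB);
}

// Per-fragment: interpolate the depth first, then evaluate the function.
float perFragmentBlur(float depthA, float depthB, float t)
{
    assert(t >= 0.0f && t <= 1.0f);
    return blurAt((1.0f - t) * depthA + t * depthB);
}
```

For an edge running from depth -10 to depth -35, both endpoints are fully blurred, so the per-vertex version reports 1 at the midpoint. The midpoint itself lies at -22.5, inside the sharp range, and the per-fragment version correctly reports 0 there.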

2. Second pass

Vertex Shader:

#ifdef GL_ES

// Set default precision to medium

precision mediump int;

precision mediump float;

#endif

attribute vec3 a_position;

attribute vec2 a_texcoord;

varying vec2 v_texcoord;

void main()

{

gl_Position = vec4(a_position, 1.0);

v_texcoord = a_texcoord;

}

The vertex shader only passes data through. There is nothing special to compute.

Fragment Shader:

#ifdef GL_ES

// Set default precision to medium

precision mediump int;

precision mediump float;

#endif

varying vec4 v_color;

varying vec2 v_texcoord;

uniform sampler2D texture;

uniform vec2 screenSize;

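const int kernelNum = 12;

vec2 g_v2TwelveKernelBase[12];

// Fill the kernel one element at a time: brace initializers are only valid from GLSL 4.20.

// The tenth offset is (-1.5, -0.866) so that it mirrors the ninth instead of repeating it.

void initKernel()

{

g_v2TwelveKernelBase[0] = vec2(1.0, 0.0); g_v2TwelveKernelBase[1] = vec2(0.5, 0.866); g_v2TwelveKernelBase[2] = vec2(-0.5, 0.866);

g_v2TwelveKernelBase[3] = vec2(-1.0, 0.0); g_v2TwelveKernelBase[4] = vec2(-0.5, -0.866); g_v2TwelveKernelBase[5] = vec2(0.5, -0.866);

g_v2TwelveKernelBase[6] = vec2(1.5, 0.866); g_v2TwelveKernelBase[7] = vec2(0.0, 1.732); g_v2TwelveKernelBase[8] = vec2(-1.5, 0.866);

g_v2TwelveKernelBase[9] = vec2(-1.5, -0.866); g_v2TwelveKernelBase[10] = vec2(0.0, -1.732); g_v2TwelveKernelBase[11] = vec2(1.5, -0.866);

}

void main()

{

initKernel();

vec4 v4Original = texture2D(texture, v_texcoord);

// dividing the screen size by 5 stretches each kernel offset to 5 pixels

vec2 v2ScaledSize = screenSize / 5.0;

vec3 v3Blurred = vec3(0.0);

for(int i = 0; i < kernelNum; i++)

{

vec2 v2Offset = g_v2TwelveKernelBase[i] / v2ScaledSize;

vec4 v4Current = texture2D(texture, v_texcoord + v2Offset);

// mix() is GLSL's counterpart of HLSL's lerp()

v3Blurred += mix(v4Original.rgb, v4Current.rgb, v4Original.a);

}

gl_FragColor = vec4(v3Blurred / float(kernelNum), 1.0);

}

The computation itself was covered in the blur-effect section above. The main idea is to blend the original image and the blurred image according to the blur factor.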

Application code

The basic framework comes from the official Qt OpenGL tutorial and was modified from there.

mainwidget.h

#ifndef MAINWIDGET_H

#define MAINWIDGET_H

#include "geometryengine.h"

#include <QOpenGLWidget>

#include <QOpenGLFunctions>

#include <QMatrix4x4>

#include <QVector2D>

#include <QOpenGLShaderProgram>

#include <QOpenGLTexture>

#include <QOpenGLShader>

class GeometryEngine;

class MainWidget : public QOpenGLWidget, protected QOpenGLFunctions

{

Q_OBJECT

public:

explicit MainWidget(QWidget *parent = nullptr);

~MainWidget() override;

protected:

void keyPressEvent(QKeyEvent* event) override;

void initializeGL() override;

void resizeGL(int w, int h) override;

void paintGL() override;

private:

GLuint sceneBuffer; // texture the scene is rendered into

GLuint fBO; // framebuffer object

int screenX = 640; // screen size

int screenY = 480;

QMatrix4x4 viewMatrix; // view (camera) matrix

QMatrix4x4 projection; // projection matrix

// eye position and look-at target

QVector3D eyeLocation = QVector3D(0, 0, 20);

QVector3D lookAtLocation = QVector3D(0, 0, 0);

GeometryEngine *geometries; // draws the geometry

QOpenGLTexture *texture; // texture applied to the cube

// shader programs for the two passes

QOpenGLShaderProgram program0;

QOpenGLShaderProgram program;

void RenderScene();

void GetViewMatrix(QMatrix4x4& matrix);

};

#endif // MAINWIDGET_H

mainwidget.cpp

#include "mainwidget.h"

#include <QMouseEvent>

#include <math.h>

MainWidget::MainWidget(QWidget *parent) :

QOpenGLWidget(parent),

geometries(nullptr),

texture(nullptr)

{

}

MainWidget::~MainWidget()

{

// the context must be current while GL resources are released

makeCurrent();

delete texture;

delete geometries;

doneCurrent();

}

void MainWidget::keyPressEvent(QKeyEvent* event)

{

const float step = 0.3f;

if(event->key() == Qt::Key_W)

{

eyeLocation.setZ(eyeLocation.z() - step);

lookAtLocation.setZ(lookAtLocation.z() - step);

update();

}

else if(event->key() == Qt::Key_S)

{

eyeLocation.setZ(eyeLocation.z() + step);

lookAtLocation.setZ(lookAtLocation.z() + step);

update();

}

else if(event->key() == Qt::Key_A)

{

eyeLocation.setX(eyeLocation.x() - step);

lookAtLocation.setX(lookAtLocation.x() - step);

update();

}

else if(event->key() == Qt::Key_D)

{

eyeLocation.setX(eyeLocation.x() + step);

lookAtLocation.setX(lookAtLocation.x() + step);

update();

}

}

void MainWidget::initializeGL()

{

initializeOpenGLFunctions();

// clear color

glClearColor(0, 0, 0, 0);

// enable face culling

glEnable(GL_CULL_FACE);

// add shader 0

QOpenGLShader* vShader0 = new QOpenGLShader(QOpenGLShader::Vertex);

QOpenGLShader* fShader0 = new QOpenGLShader(QOpenGLShader::Fragment);

vShader0->compileSourceFile(":/vShader0.glsl");

fShader0->compileSourceFile(":/fShader0.glsl");

program0.addShader(vShader0);

program0.addShader(fShader0);

program0.link();

// add shader 1

QOpenGLShader* vShader = new QOpenGLShader(QOpenGLShader::Vertex);

QOpenGLShader* fShader = new QOpenGLShader(QOpenGLShader::Fragment);

vShader->compileSourceFile(":/vShader.glsl");

fShader->compileSourceFile(":/fShader.glsl");

program.addShader(vShader);

program.addShader(fShader);

program.link();

geometries = new GeometryEngine;

// load the cube texture

texture = new QOpenGLTexture(QImage(":/cube.png").mirrored());

texture->setMinificationFilter(QOpenGLTexture::Nearest);

texture->setMagnificationFilter(QOpenGLTexture::Linear);

texture->setWrapMode(QOpenGLTexture::Repeat);

// create a framebuffer object

glGenFramebuffers(1, &fBO);

glBindFramebuffer(GL_FRAMEBUFFER, fBO);

// create the texture image and attach it to the framebuffer

glGenTextures(1, &sceneBuffer);

glBindTexture(GL_TEXTURE_2D, sceneBuffer);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, screenX, screenY, 0, GL_RGBA, GL_UNSIGNED_BYTE, nullptr);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR );

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glBindTexture(GL_TEXTURE_2D, 0);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, sceneBuffer, 0);

}

// compute the view matrix

void MainWidget::GetViewMatrix(QMatrix4x4& matrix)

{

QVector3D upDir(0, 1, 0);

QVector3D N = eyeLocation - lookAtLocation; // points from the target toward the eye, matching OpenGL's z-axis direction

QVector3D U = QVector3D::crossProduct(upDir, N);

QVector3D V = QVector3D::crossProduct(N, U);

N.normalize();

U.normalize();

V.normalize();

matrix.setRow(0, {U.x(), U.y(), U.z(), -QVector3D::dotProduct(U, eyeLocation)}); // x

matrix.setRow(1, {V.x(), V.y(), V.z(), -QVector3D::dotProduct(V, eyeLocation)}); // y

matrix.setRow(2, {N.x(), N.y(), N.z(), -QVector3D::dotProduct(N, eyeLocation)}); // z

matrix.setRow(3, {0, 0, 0, 1});

}

void MainWidget::resizeGL(int w, int h)

{

screenX = w;

screenY = h;

float aspect = float(w) / float(h ? h : 1);

const qreal zNear = 1.0, zFar = 200.0, fov = 45.0;

projection.setToIdentity();

projection.perspective(fov, aspect, zNear, zFar);

}

void MainWidget::RenderScene()

{

// pass the texture unit that holds the cube texture

program0.setUniformValue("texture", 0);

QMatrix4x4 mvMatrix;

// transform to world space

mvMatrix.scale(QVector3D(2,2,1));

// transform to camera space

mvMatrix = viewMatrix * mvMatrix;

program0.setUniformValue("ModelViewMatrix", mvMatrix);

program0.setUniformValue("ProjectMatrix", projection);

geometries->drawCubeGeometry(&program0);

}

void MainWidget::paintGL()

{

// pass 0: render the scene to the texture

glBindFramebuffer(GL_FRAMEBUFFER, fBO); // subsequent draw calls write to the framebuffer

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT); // clear the color and depth buffers

glEnable(GL_DEPTH_TEST); // enable depth testing

GetViewMatrix(viewMatrix); // compute the view matrix

texture->bind(); // equivalent to glBindTexture

program0.bind(); // use the shaders attached to program0

RenderScene(); // draw the cube

// pass 1: post-processing

glBindFramebuffer(GL_FRAMEBUFFER, 0); // stop writing to the framebuffer

glBindTexture(GL_TEXTURE_2D, sceneBuffer); // use the texture the scene was rendered into

program.bind(); // use the shaders attached to program

glDisable(GL_DEPTH_TEST); // disable depth testing

QVector2D ScreenSize(screenX, screenY);

program.setUniformValue("screenSize", ScreenSize); // pass the screen size and the texture unit

program.setUniformValue("texture", 0);

geometries->drawScreen(&program); // draw the texture onto a full-screen quad

}

geometryengine.h

#ifndef GEOMETRYENGINE_H

#define GEOMETRYENGINE_H

#include <QOpenGLFunctions>

#include <QOpenGLShaderProgram>

#include <QOpenGLBuffer>

class GeometryEngine : protected QOpenGLFunctions

{

public:

GeometryEngine();

virtual ~GeometryEngine();

void drawCubeGeometry(QOpenGLShaderProgram *program);

void drawScreen(QOpenGLShaderProgram *program);

private:

void initCubeGeometry();

QOpenGLBuffer screenArrayBuf;

QOpenGLBuffer screenIndexBuf;

QOpenGLBuffer arrayBuf;

QOpenGLBuffer indexBuf;

};

#endif // GEOMETRYENGINE_H

geometryengine.cpp

/****************************************************************************

**

** Copyright (C) 2016 The Qt Company Ltd.

** Contact: https://www.qt.io/licensing/

**

** This file is part of the QtCore module of the Qt Toolkit.

**

** $QT_BEGIN_LICENSE:BSD$

** Commercial License Usage

** Licensees holding valid commercial Qt licenses may use this file in

** accordance with the commercial license agreement provided with the

** Software or, alternatively, in accordance with the terms contained in

** a written agreement between you and The Qt Company. For licensing terms

** and conditions see https://www.qt.io/terms-conditions. For further

** information use the contact form at https://www.qt.io/contact-us.

**

** BSD License Usage

** Alternatively, you may use this file under the terms of the BSD license

** as follows:

**

** "Redistribution and use in source and binary forms, with or without

** modification, are permitted provided that the following conditions are

** met:

** * Redistributions of source code must retain the above copyright

** notice, this list of conditions and the following disclaimer.

** * Redistributions in binary form must reproduce the above copyright

** notice, this list of conditions and the following disclaimer in

** the documentation and/or other materials provided with the

** distribution.

** * Neither the name of The Qt Company Ltd nor the names of its

** contributors may be used to endorse or promote products derived

** from this software without specific prior written permission.

**

**

** THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

** "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

** LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

** A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

** OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

** SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

** LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

** DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

** THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

** (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

** OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE."

**

** $QT_END_LICENSE$

**

****************************************************************************/

#include "geometryengine.h"

#include <QVector2D>

#include <QVector3D>

struct VertexData

{

QVector3D position;

QVector2D texture;

};

//! [0]

GeometryEngine::GeometryEngine()

: screenIndexBuf(QOpenGLBuffer::IndexBuffer),indexBuf(QOpenGLBuffer::IndexBuffer)

{

initializeOpenGLFunctions();

arrayBuf.create();

indexBuf.create();

screenArrayBuf.create();

screenIndexBuf.create();

initCubeGeometry();

}

GeometryEngine::~GeometryEngine()

{

arrayBuf.destroy();

indexBuf.destroy();

screenArrayBuf.destroy();

screenIndexBuf.destroy();

}

void GeometryEngine::initCubeGeometry()

{

// For cube we would need only 8 vertices but we have to

// duplicate vertex for each face because texture coordinate

// is different.

VertexData vertices[] = {

// Vertex data for face 0

{QVector3D(-1.0f, -1.0f, 1.0f), QVector2D(0.0f, 0.0f)}, // v0

{QVector3D( 1.0f, -1.0f, 1.0f), QVector2D(0.33f, 0.0f)}, // v1

{QVector3D(-1.0f, 1.0f, 1.0f), QVector2D(0.0f, 0.5f)}, // v2

{QVector3D( 1.0f, 1.0f, 1.0f), QVector2D(0.33f, 0.5f)}, // v3

// Vertex data for face 1

{QVector3D( 1.0f, -1.0f, 1.0f), QVector2D( 0.0f, 0.5f)}, // v4

{QVector3D( 1.0f, -1.0f, -1.0f), QVector2D(0.33f, 0.5f)}, // v5

{QVector3D( 1.0f, 1.0f, 1.0f), QVector2D(0.0f, 1.0f)}, // v6

{QVector3D( 1.0f, 1.0f, -1.0f), QVector2D(0.33f, 1.0f)}, // v7

// Vertex data for face 2

{QVector3D( 1.0f, -1.0f, -1.0f), QVector2D(0.66f, 0.5f)}, // v8

{QVector3D(-1.0f, -1.0f, -1.0f), QVector2D(1.0f, 0.5f)}, // v9

{QVector3D( 1.0f, 1.0f, -1.0f), QVector2D(0.66f, 1.0f)}, // v10

{QVector3D(-1.0f, 1.0f, -1.0f), QVector2D(1.0f, 1.0f)}, // v11

// Vertex data for face 3

{QVector3D(-1.0f, -1.0f, -1.0f), QVector2D(0.66f, 0.0f)}, // v12

{QVector3D(-1.0f, -1.0f, 1.0f), QVector2D(1.0f, 0.0f)}, // v13

{QVector3D(-1.0f, 1.0f, -1.0f), QVector2D(0.66f, 0.5f)}, // v14

{QVector3D(-1.0f, 1.0f, 1.0f), QVector2D(1.0f, 0.5f)}, // v15

// Vertex data for face 4

{QVector3D(-1.0f, -1.0f, -1.0f), QVector2D(0.33f, 0.0f)}, // v16

{QVector3D( 1.0f, -1.0f, -1.0f), QVector2D(0.66f, 0.0f)}, // v17

{QVector3D(-1.0f, -1.0f, 1.0f), QVector2D(0.33f, 0.5f)}, // v18

{QVector3D( 1.0f, -1.0f, 1.0f), QVector2D(0.66f, 0.5f)}, // v19

// Vertex data for face 5

{QVector3D(-1.0f, 1.0f, 1.0f), QVector2D(0.33f, 0.5f)}, // v20

{QVector3D( 1.0f, 1.0f, 1.0f), QVector2D(0.66f, 0.5f)}, // v21

{QVector3D(-1.0f, 1.0f, -1.0f), QVector2D(0.33f, 1.0f)}, // v22

{QVector3D( 1.0f, 1.0f, -1.0f), QVector2D(0.66f, 1.0f)}, // v23

};

GLushort indices[] = {

0, 1, 2, 3, 3, // Face 0 - triangle strip ( v0, v1, v2, v3)

4, 4, 5, 6, 7, 7, // Face 1 - triangle strip ( v4, v5, v6, v7)

8, 8, 9, 10, 11, 11, // Face 2 - triangle strip ( v8, v9, v10, v11)

12, 12, 13, 14, 15, 15, // Face 3 - triangle strip (v12, v13, v14, v15)

16, 16, 17, 18, 19, 19, // Face 4 - triangle strip (v16, v17, v18, v19)

20, 20, 21, 22, 23 // Face 5 - triangle strip (v20, v21, v22, v23)

};

// Transfer vertex data to VBO 0

arrayBuf.bind();

arrayBuf.allocate(vertices, 24 * sizeof(VertexData));

// Transfer index data to VBO 1

indexBuf.bind();

indexBuf.allocate(indices, 34 * sizeof(GLushort));

VertexData screenVertices[] =

{

{{-1.0f, -1.0f, 0.0f}, {0.0f, 0.0f}},

{{1.0f, -1.0f, 0.0f}, {1.0f, 0.0f}},

{{1.0f, 1.0f, 0.0f}, {1.0f, 1.0f}},

{{-1.0f, 1.0f, 0.0f}, {0.0f, 1.0f}},

};

GLushort screenIndices[] = {

0, 1, 2, 2, 3, 0

};

screenArrayBuf.bind();

screenArrayBuf.allocate(screenVertices, 4 * sizeof(VertexData));

screenIndexBuf.bind();

screenIndexBuf.allocate(screenIndices, 6 * sizeof(GLushort));

}

void GeometryEngine::drawCubeGeometry(QOpenGLShaderProgram *program)

{

arrayBuf.bind();

indexBuf.bind();

int offset = 0;

int vertexLocation = program->attributeLocation("a_position");

program->enableAttributeArray(vertexLocation);

program->setAttributeBuffer(vertexLocation, GL_FLOAT, offset, 3, sizeof(VertexData));

offset += sizeof(QVector3D);

int texcoordLocation = program->attributeLocation("a_texcoord");

program->enableAttributeArray(texcoordLocation);

program->setAttributeBuffer(texcoordLocation, GL_FLOAT, offset, 2, sizeof(VertexData));

glDrawElements(GL_TRIANGLE_STRIP, 34, GL_UNSIGNED_SHORT, nullptr);

}

void GeometryEngine::drawScreen(QOpenGLShaderProgram *program)

{

screenArrayBuf.bind();

screenIndexBuf.bind();

int offset = 0;

int vertexLocation = program->attributeLocation("a_position");

program->enableAttributeArray(vertexLocation);

program->setAttributeBuffer(vertexLocation, GL_FLOAT, offset, 3, sizeof(VertexData));

offset += sizeof(QVector3D);

int texcoordLocation = program->attributeLocation("a_texcoord");

program->enableAttributeArray(texcoordLocation);

program->setAttributeBuffer(texcoordLocation, GL_FLOAT, offset, 2, sizeof(VertexData));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_SHORT, nullptr);

}
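The cube's index list in initCubeGeometry repeats the last index of one face and the first index of the next so that all six faces can be drawn as a single triangle strip. The repeated indices create degenerate (zero-area) triangles that produce no fragments. The sketch below counts how many triangles of a strip are actually visible by index. countDrawnTriangles is a hypothetical helper, not part of the project.

```cpp
#include <cstddef>
#include <vector>

// Walk a triangle strip and count the triangles whose three indices are
// all different. Triangles with a repeated index are degenerate and only
// serve to stitch consecutive faces together.
std::size_t countDrawnTriangles(const std::vector<unsigned short>& strip)
{
    std::size_t count = 0;
    for (std::size_t i = 0; i + 2 < strip.size(); ++i) {
        const unsigned short a = strip[i];
        const unsigned short b = strip[i + 1];
        const unsigned short c = strip[i + 2];
        if (a != b && b != c && a != c) {
            ++count;
        }
    }
    return count;
}
```

For the 34 indices used above, the strip contains 32 triangles in total, of which 12 are non-degenerate: two per cube face.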

main.cpp

#include <QApplication>

#include <QLabel>

#include <QSurfaceFormat>

#ifndef QT_NO_OPENGL

#include "mainwidget.h"

#endif

int main(int argc, char *argv[])

{

QApplication app(argc, argv);

QSurfaceFormat format;

format.setDepthBufferSize(24);

QSurfaceFormat::setDefaultFormat(format);

app.setApplicationName("cube");

app.setApplicationVersion("0.1");

#ifndef QT_NO_OPENGL

MainWidget widget;

widget.show();

#else

QLabel note("OpenGL Support required");

note.show();

#endif

return app.exec();

}