[Reading Notes 1] [2017] MATLAB and Deep Learning: Limitations of the Single-Layer Neural Network (3)

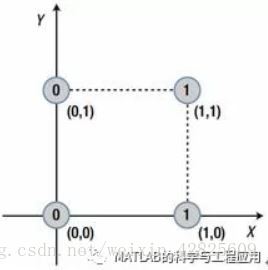

In this case, a straight border line that divides the regions of 0 and 1 can be found easily.

This is a linearly separable problem (Figure 2-25).
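Figure 2-25 is not reproduced here. As an assumed example, take the AND truth table. The hand-picked line x1 + x2 - 1.5 = 0 (a hypothetical choice; many other lines work) puts the single 1 on one side and the three 0s on the other:

```python
import numpy as np

# Assumed example data: the AND truth table (Figure 2-25 itself is not reproduced).
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
d = np.array([0, 0, 0, 1])

# Hypothetical border line x1 + x2 - 1.5 = 0, chosen by hand.
w = np.array([1.0, 1.0])
b = -1.5

def side_of_line(x):
    """Return 1 if x lies on the positive side of the line, else 0."""
    return int(x @ w + b > 0)

predictions = np.array([side_of_line(x) for x in X])
print(predictions)                     # [0 0 0 1]
print(np.array_equal(predictions, d))  # True
```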

To put it simply, the single-layer neural network can only solve linearly separable problems.

This is because the single-layer neural network is a model that divides the input data space linearly: its decision boundary is a straight line in two dimensions, and a hyperplane in general.
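The limitation can be checked empirically. The sketch below trains a single-layer network with the classic perceptron learning rule, a swapped-in training method not taken from the text. It reaches zero errors on the linearly separable AND data but cannot fit XOR, however many epochs it runs. The epoch count and learning rate are arbitrary assumptions.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
d_and = np.array([0, 0, 0, 1])  # linearly separable
d_xor = np.array([0, 1, 1, 0])  # not linearly separable

def train_perceptron(X, d, epochs=100, lr=1.0):
    """Perceptron rule on a single threshold node; returns weights (bias last) and final error count."""
    Xb = np.hstack([X, np.ones((len(X), 1))])  # constant input 1 acts as the bias
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        for x, target in zip(Xb, d):
            y = 1 if x @ w >= 0 else 0
            w += lr * (target - y) * x  # move the line toward misclassified points
    errors = sum(int((1 if x @ w >= 0 else 0) != t) for x, t in zip(Xb, d))
    return w, errors

w_and, err_and = train_perceptron(X, d_and)
w_xor, err_xor = train_perceptron(X, d_xor)
print(err_and)  # 0
print(err_xor)  # > 0: no straight line separates XOR
```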

To overcome this limitation of the single-layer neural network, we need more layers in the network.

This need led to the multi-layer neural network, which can do what the single-layer neural network cannot.
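As a minimal sketch of what one extra layer buys, a two-layer network with hand-set weights solves XOR. These weights are hypothetical and are not the book's. Hidden node 1 acts like OR, hidden node 2 acts like NAND, and the output node takes their AND. Each node still draws only a straight line; the output node combines two of them.

```python
import numpy as np

def step(v):
    return np.where(v > 0, 1, 0)

# Hypothetical hand-set weights (not trained).
W1 = np.array([[1.0, 1.0],     # hidden node 1: OR
               [-1.0, -1.0]])  # hidden node 2: NAND
b1 = np.array([-0.5, 1.5])
W2 = np.array([1.0, 1.0])      # output node: AND of the two hidden nodes
b2 = -1.5

def two_layer(x):
    h = step(W1 @ x + b1)           # hidden layer
    return int(step(W2 @ h + b2))   # output layer

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
print([two_layer(x) for x in X])  # [0, 1, 1, 0]
```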

As this topic is rather mathematical, feel free to skip this portion if you are not familiar with it.

Just keep in mind that the single-layer neural network is applicable only to specific types of problems.

The multi-layer neural network has no such limitation.

Summary

This chapter covered the following concepts:

The neural network is a network of nodes that imitate the neurons of the brain.

Each node calculates the weighted sum of its input signals and outputs the result of applying the activation function to that weighted sum.
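The sketch below shows one node. It assumes a sigmoid activation and includes a bias term `b`, as is common; set `b` to 0 for a pure weighted sum. The names `node_output` and `sigmoid` are illustrative, not from the text.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def node_output(x, w, b, activation=sigmoid):
    v = np.dot(w, x) + b  # weighted sum of the input signals (plus bias)
    return activation(v)  # activation function applied to the weighted sum

x = np.array([1.0, 2.0])
w = np.array([0.5, -0.25])
b = 0.0
print(node_output(x, w, b))  # 0.5, because the weighted sum is 0.5*1 - 0.25*2 = 0
```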

Most neural networks are constructed from layered nodes.

In a layered neural network, the signal enters through the input layer, passes through the hidden layer(s), and exits through the output layer.
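This signal flow can be sketched as a loop over layers. The sketch assumes a 3-4-2 network with random weights, zero biases, and sigmoid activations in every layer, all arbitrary choices for illustration. The input layer only passes the input along, and each later layer applies the node computation to every node at once as a matrix product.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def forward(x, layers):
    """Propagate x through a list of (W, b) pairs, one pair per layer after the input layer."""
    signal = x  # the input layer passes the input on unchanged
    for W, b in layers:
        signal = sigmoid(W @ signal + b)  # weighted sums, then activation, for all nodes of the layer
    return signal

rng = np.random.default_rng(0)
layers = [
    (rng.standard_normal((4, 3)), np.zeros(4)),  # input (3 nodes) -> hidden (4 nodes)
    (rng.standard_normal((2, 4)), np.zeros(2)),  # hidden (4 nodes) -> output (2 nodes)
]
y = forward(np.array([1.0, 0.0, -1.0]), layers)
print(y.shape)  # (2,)
```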

In practice, linear functions cannot be used as the activation functions of the hidden layers.

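This is because a linear activation function negates the effect of the hidden layer: a linear layer followed by another linear layer is still a single linear mapping of the input, so the hidden layer adds no capability.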
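Numerically, a hidden layer with a linear (identity) activation can be merged into a single layer. With weights W1, W2 and biases b1, b2, the composition W2(W1 x + b1) + b2 equals (W2 W1) x + (W2 b1 + b2). The random shapes and values below are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
W1, b1 = rng.standard_normal((4, 3)), rng.standard_normal(4)  # input -> hidden
W2, b2 = rng.standard_normal((2, 4)), rng.standard_normal(2)  # hidden -> output
x = rng.standard_normal(3)

# Two layers, both with a linear (identity) activation.
two_layers = W2 @ (W1 @ x + b1) + b2

# One equivalent layer with combined weights and bias.
one_layer = (W2 @ W1) @ x + (W2 @ b1 + b2)

print(np.allclose(two_layers, one_layer))  # True
```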

However, in some problems such as regression, the output-layer nodes may use linear activation functions.
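One reason this helps in regression: a sigmoid output is confined to (0, 1), whereas a linear output node can produce any real value. The sketch keeps a nonlinear (sigmoid) hidden layer and compares the two output choices. The weights are hypothetical values picked for illustration.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

# Hypothetical weights for a 2-2-1 network.
W1 = np.array([[1.0, -1.0],
               [0.5, 2.0]])
W2 = np.array([3.0, 4.0])

def predict(x, linear_output=True):
    h = sigmoid(W1 @ x)  # hidden layer keeps a nonlinear activation
    v = W2 @ h           # weighted sum at the output node
    return v if linear_output else sigmoid(v)

x = np.array([2.0, 1.0])
print(predict(x))                       # roughly 6.0, outside the range of a sigmoid
print(predict(x, linear_output=False))  # squeezed into (0, 1)
```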

In a neural network, supervised learning adjusts the weights to reduce the discrepancy between the correct output and the output of the neural network (Figure 2-26).
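As one concrete instance of this weight adjustment, the sketch uses the delta rule for a single sigmoid node: w ← w + α δ x, with δ = y(1 − y)(d − y). The training data, zero initial weights, α = 0.9, and epoch count are assumptions for illustration. The third input column is a constant 1 standing in for a bias.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def delta_rule_step(w, x, d, alpha=0.9):
    y = sigmoid(w @ x)         # output of the network
    e = d - y                  # correct output minus network output
    delta = y * (1.0 - y) * e  # sigmoid derivative times error
    return w + alpha * delta * x

# Hypothetical training data; the third column is a constant bias input.
X = np.array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
D = np.array([0.0, 0.0, 1.0, 1.0])
w = np.zeros(3)

def total_error(w):
    return float(np.sum((D - sigmoid(X @ w)) ** 2))

err_before = total_error(w)  # every output starts at 0.5
for _ in range(1000):
    for x, d in zip(X, D):
        w = delta_rule_step(w, x, d)
err_after = total_error(w)
print(err_before, err_after < err_before)  # 1.0 True
```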

Translated from *MATLAB Deep Learning* by Phil Kim.
