Face recognition in Java based on SeetaFaceEngine

Dependencies:

https://github.com/seetaface/SeetaFaceEngine

https://github.com/sarxos/webcam-capture

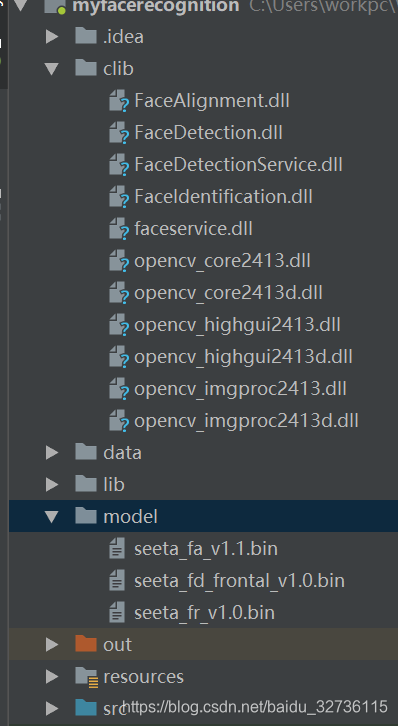

Project structure

DLL files: https://pan.baidu.com/s/1jCnrWb4a5V0FINZiT55KKg (password: hzv7)

Models: https://github.com/seetaface/SeetaFaceEngine/tree/master/FaceAlignment/model

https://github.com/seetaface/SeetaFaceEngine/tree/master/FaceDetection/model

Full project: https://pan.baidu.com/s/1AIRahXcd5X5gf93PHqKm1A (password: gmg0)

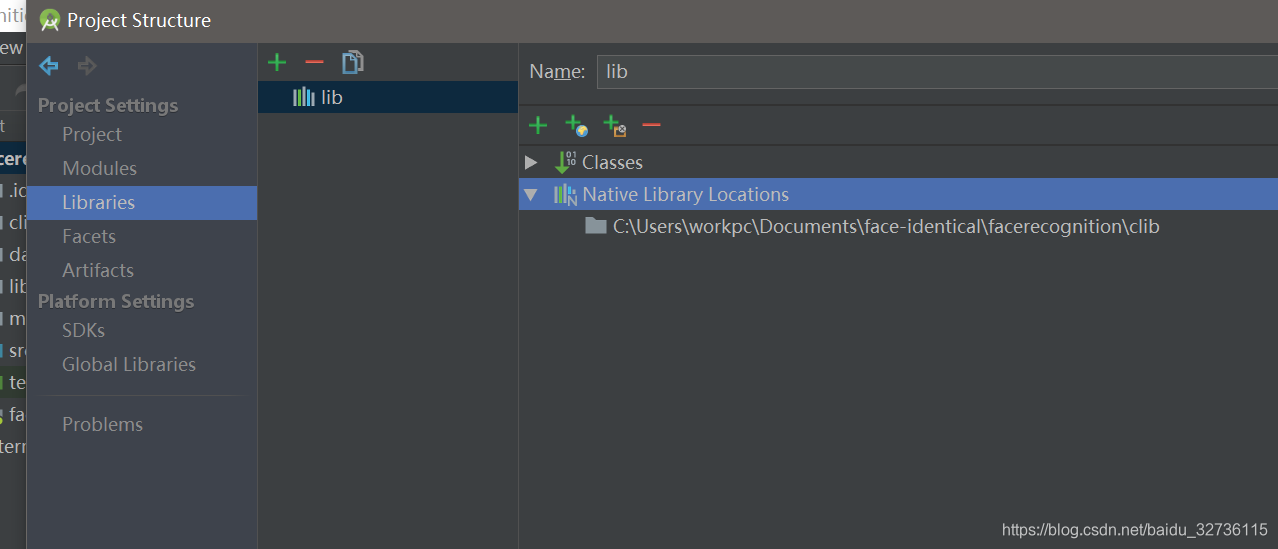

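Note: the DLLs in clib must be added to lib so that they can be loaded at runtime (search online for how to configure this in Eclipse).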
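When a DLL or model file is missing, the failure only shows up once the native code runs. Roughly speaking, System.loadLibrary looks for the platform file name returned by System.mapLibraryName (faceservice.dll on Windows) in the directories listed in java.library.path, and myface.cpp opens its models through relative paths such as model/seeta_fd_frontal_v1.0.bin, which resolve against the Java process's working directory. The following is a minimal sketch of a startup check built on those two assumptions; NativeSetupCheck and its methods are hypothetical names, not part of the original project. It does not cover DLLs that faceservice.dll itself depends on, since Windows resolves those with its own search order, which is presumably why the static block in FaceRecognitionService loads the OpenCV and SeetaFace DLLs first.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical pre-flight check (not part of the original project): reports native
 * libraries that are not on java.library.path and SeetaFace model files that are missing.
 */
final class NativeSetupCheck {
    private NativeSetupCheck() {}

    /** Returns the platform file names (e.g. "faceservice.dll") not found on java.library.path. */
    static List<String> missingLibraries(List<String> libNames) {
        String[] dirs = System.getProperty("java.library.path", "").split(File.pathSeparator);
        List<String> missing = new ArrayList<>();
        for (String name : libNames) {
            // "faceservice" -> "faceservice.dll" on Windows, "libfaceservice.so" on Linux
            String fileName = System.mapLibraryName(name);
            boolean found = false;
            for (String dir : dirs) {
                if (!dir.isEmpty() && new File(dir, fileName).isFile()) {
                    found = true;
                    break;
                }
            }
            if (!found) {
                missing.add(fileName);
            }
        }
        return missing;
    }

    /** Returns the model files opened by myface.cpp that do not exist under modelDir. */
    static List<Path> missingModels(Path modelDir) {
        String[] models = {"seeta_fd_frontal_v1.0.bin", "seeta_fa_v1.1.bin", "seeta_fr_v1.0.bin"};
        List<Path> missing = new ArrayList<>();
        for (String model : models) {
            Path p = modelDir.resolve(model);
            if (!Files.isRegularFile(p)) {
                missing.add(p);
            }
        }
        return missing;
    }
}
```

At startup one could call missingModels(Paths.get("model")) and missingLibraries with the names passed to System.loadLibrary, and print whatever is reported before touching FaceRecognitionService.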

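Face recognition with SeetaFace

It provides face detection and face recognition:

- Face detection: get the position of a face (x, y, w, h)

- Face recognition: compare the similarity of the faces in two images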

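Local camera access with webcam-capture

The following features are implemented:

- Open the camera, detect the face position in real time and draw a box around the face

- Save a template

- In the background, compare the face seen by the camera with the template; if the similarity exceeds a threshold, the two are treated as the same person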
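The template comparison described in the last item can be sketched as follows. This is a minimal illustration, assuming the template is kept in memory as a Base64 string; TemplateMatcher and its methods are hypothetical names. The similarity function is injected so the sketch runs without the DLL; in the project it would be FaceRecognitionService::getSimilarity. The original does not state a threshold value, so it has to be chosen by testing on your own images. Since getSimilarity returns 0 when no face is detected in either image, a frame without a face never matches for a positive threshold.

```java
import java.util.Objects;
import java.util.function.BiFunction;

/** Hypothetical helper: stores a template and compares camera frames against it. */
final class TemplateMatcher {
    private final BiFunction<String, String, Float> similarity; // (template, frame) -> score
    private final float threshold;                               // application-specific
    private String templateBase64;                               // null until a template is saved

    TemplateMatcher(BiFunction<String, String, Float> similarity, float threshold) {
        this.similarity = Objects.requireNonNull(similarity);
        this.threshold = threshold;
    }

    /** Saves the Base64-encoded template image. */
    void saveTemplate(String base64Image) {
        this.templateBase64 = Objects.requireNonNull(base64Image);
    }

    boolean hasTemplate() {
        return templateBase64 != null;
    }

    /** True if the face in the frame is similar enough to the template to be the same person. */
    boolean matches(String frameBase64) {
        if (templateBase64 == null) {
            throw new IllegalStateException("no template saved yet");
        }
        float sim = similarity.apply(templateBase64, frameBase64);
        return sim > threshold; // "greater than a certain value"
    }
}
```

In the project this might be constructed as new TemplateMatcher(FaceRecognitionService::getSimilarity, threshold) and called from the background thread for each captured frame.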

com.vvyun.service.FaceRecognitionService.java

    package com.vvyun.service;

    /**
     * Face matching services
     *
     * @author wangyf
     * @version 0.1
     */
    public class FaceRecognitionService {

        // Load the native libraries: OpenCV and SeetaFace first, then faceservice, which uses them
        static {
            System.loadLibrary("opencv_core2413");
            System.loadLibrary("opencv_highgui2413");
            System.loadLibrary("opencv_imgproc2413");
            System.loadLibrary("FaceAlignment");
            System.loadLibrary("FaceDetection");
            System.loadLibrary("FaceIdentification");
            System.loadLibrary("faceservice");
        }

        /**
         * Gets the face position.
         *
         * @param imagebase64 Base64-encoded image
         * @return int[4]: x, y, width, height
         */
        public static native int[] getfacepoint(String imagebase64);

        /**
         * Gets the similarity of two faces.
         *
         * @param befimagebase64 Base64-encoded template image
         * @param aftimagebase64 Base64-encoded image to compare
         * @return face similarity value
         */
        public static native float getSimilarity(String befimagebase64, String aftimagebase64);
    }
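Both native methods take the image as a Base64 string, which myface.cpp decodes and hands to OpenCV's imdecode, so any format OpenCV can decode should work. The following is a minimal sketch, assuming camera frames arrive as java.awt.image.BufferedImage (as webcam-capture returns them); FrameEncoder and toBase64Jpeg are hypothetical names. It uses Java's basic Base64 encoder, which emits the standard A-Z, a-z, 0-9, +, / alphabet with = padding and no line breaks, matching the decoder in myface.cpp.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.Base64;
import javax.imageio.ImageIO;

/** Hypothetical helper: converts a frame into the Base64 string the native methods expect. */
final class FrameEncoder {
    private FrameEncoder() {}

    static String toBase64Jpeg(BufferedImage frame) throws IOException {
        BufferedImage rgb = frame;
        if (frame.getColorModel().hasAlpha()) {
            // The JPEG writer may refuse images with an alpha channel, so flatten to RGB first
            rgb = new BufferedImage(frame.getWidth(), frame.getHeight(), BufferedImage.TYPE_INT_RGB);
            Graphics2D g = rgb.createGraphics();
            try {
                g.drawImage(frame, 0, 0, null);
            } finally {
                g.dispose();
            }
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!ImageIO.write(rgb, "jpg", out)) {
            throw new IOException("no JPEG writer available");
        }
        // Basic encoder: standard alphabet, '=' padding, no line breaks
        return Base64.getEncoder().encodeToString(out.toByteArray());
    }
}
```

With webcam-capture this could be used as FaceRecognitionService.getfacepoint(FrameEncoder.toBase64Jpeg(webcam.getImage())), where webcam is obtained from Webcam.getDefault() and opened with webcam.open().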

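Generate the JNI header com_vvyun_service_FaceRecognitionService.h from FaceRecognitionService.java. javah takes the fully qualified class name and looks the compiled class up on the classpath, so run it from the project's class output directory (or pass -classpath):

    javah -jni com.vvyun.service.FaceRecognitionService

javah was removed in JDK 10; on newer JDKs the header is generated while compiling, e.g. javac -h . com/vvyun/service/FaceRecognitionService.java run from the source root.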
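The function names in the generated header follow the JNI specification's "short name" rule: "Java_", the fully qualified class name with "." replaced by "_", another "_", then the method name, where "_" is escaped as "_1", ";" as "_2", "[" as "_3" and any other non-alphanumeric character as "_0xxxx" (lowercase hex). The sketch below reproduces that rule to show why renaming the package or class without regenerating the header and rebuilding faceservice.dll ends in UnsatisfiedLinkError. JniNames is a hypothetical helper for illustration only; it ignores the "long name" form (short name plus "__" and the mangled argument signature) used for overloaded native methods.

```java
/** Hypothetical illustration of the JNI short-name mangling rule. */
final class JniNames {
    private JniNames() {}

    /** e.g. ("com.vvyun.service.FaceRecognitionService", "getfacepoint") */
    static String shortName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    private static String mangle(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');                               // package separator
            } else if (c == '_') {
                sb.append("_1");
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if (c < 128 && Character.isLetterOrDigit(c)) {
                sb.append(c);                                 // ASCII letters and digits stay as-is
            } else {
                sb.append(String.format("_0%04x", (int) c));  // everything else as _0xxxx
            }
        }
        return sb.toString();
    }
}
```

For this project, shortName("com.vvyun.service.FaceRecognitionService", "getfacepoint") gives Java_com_vvyun_service_FaceRecognitionService_getfacepoint, the name implemented in myface.cpp.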

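Then implement the functions declared in com_vvyun_service_FaceRecognitionService.h in C++.

Note: the main C++ code for the face recognition services is given below. I have already compiled it on Windows 10 into faceservice.dll, which can be called directly; see FaceRecognitionService.java above for how it is used.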
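On the Java side, the int[] returned by getfacepoint still has to be turned into something that can be drawn on the camera frame. A minimal sketch, assuming frames are java.awt.image.BufferedImage; FaceBox, toRectangle and draw are hypothetical names. It treats null or any array whose length is not 4 as "no face", so it also copes with a native side that reports a missing face with an empty array. Coordinates only line up if the frame passed to getfacepoint is the same size as the one being drawn on.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.image.BufferedImage;
import java.util.Optional;

/** Hypothetical helper for the {x, y, width, height} result of getfacepoint. */
final class FaceBox {
    private FaceBox() {}

    /** Converts the native result into a Rectangle; empty if no face was reported. */
    static Optional<Rectangle> toRectangle(int[] xywh) {
        if (xywh == null || xywh.length != 4) {
            return Optional.empty();
        }
        return Optional.of(new Rectangle(xywh[0], xywh[1], xywh[2], xywh[3]));
    }

    /** Draws a green outline around the face on the given frame. */
    static void draw(BufferedImage frame, Rectangle face) {
        Graphics2D g = frame.createGraphics();
        try {
            g.setColor(Color.GREEN);
            g.drawRect(face.x, face.y, face.width, face.height);
        } finally {
            g.dispose();
        }
    }
}
```

A camera loop would call getfacepoint on each frame, then FaceBox.toRectangle(result).ifPresent(r -> FaceBox.draw(frame, r)) before displaying it.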

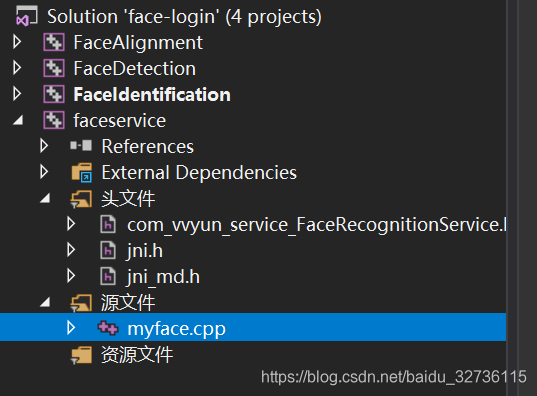

Reference for setting up the SeetaFaceEngine projects in VS2017:

https://github.com/seetaface/SeetaFaceEngine/blob/master/SeetaFace_config.docx

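Following SeetaFace_config.docx, create the faceservice project as a DLL project, add the dependencies (configured like the "4 Console application (Identification)" project in that document), and add the JNI headers: jni.h from %JAVA_HOME%\include and jni_md.h from %JAVA_HOME%\include\win32.

com_vvyun_service_FaceRecognitionService.h was generated above.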

myface.cpp

    #include "com_vvyun_service_FaceRecognitionService.h"

    #include <cstdlib>
    #include <cstring>
    #include <iostream>

    using namespace std;

    #ifdef _WIN32
    // Link the OpenCV import libraries matching the installed version (e.g. opencv_core2413)
    #include <opencv2/core/version.hpp>
    #define CV_VERSION_ID CVAUX_STR(CV_MAJOR_VERSION) CVAUX_STR(CV_MINOR_VERSION) \
        CVAUX_STR(CV_SUBMINOR_VERSION)
    #ifdef _DEBUG
    #define cvLIB(name) "opencv_" name CV_VERSION_ID "d"
    #else
    #define cvLIB(name) "opencv_" name CV_VERSION_ID
    #endif // _DEBUG
    #pragma comment(lib, cvLIB("core"))
    #pragma comment(lib, cvLIB("imgproc"))
    #pragma comment(lib, cvLIB("highgui"))
    #endif // _WIN32

    #if defined(__unix__) || defined(__APPLE__)
    #ifndef fopen_s
    #define fopen_s(pFile, filename, mode) ((*(pFile)) = fopen((filename), (mode))) == NULL
    #endif // fopen_s
    #endif // __unix__ || __APPLE__

    #include <opencv/cv.h>
    #include <opencv/highgui.h>

    #include "face_identification.h"
    #include "recognizer.h"
    #include "face_detection.h"
    #include "face_alignment.h"
    #include "math_functions.h"

    #include <algorithm>
    #include <string>
    #include <vector>

    using namespace seeta;

    // Relative paths, resolved against the working directory of the Java process
    std::string DATA_DIR = "data/";
    std::string MODEL_DIR = "model/";

    // ----------------------------------------------------------------
    // Conversion between Java strings and C++ strings (GB2312 bytes)

    std::string jstring2str(JNIEnv* env, jstring jstr)
    {
        if (jstr == NULL) {
            return std::string();
        }
        jclass clsstring = env->FindClass("java/lang/String");
        jstring strencode = env->NewStringUTF("GB2312");
        jmethodID mid = env->GetMethodID(clsstring, "getBytes", "(Ljava/lang/String;)[B");
        // Equivalent to jstr.getBytes("GB2312") in Java
        jbyteArray barr = (jbyteArray)env->CallObjectMethod(jstr, mid, strencode);
        std::string result;
        if (barr != NULL) {
            jsize alen = env->GetArrayLength(barr);
            jbyte* ba = env->GetByteArrayElements(barr, NULL);
            // Copy by length, which is also correct for an empty string
            result.assign(reinterpret_cast<const char*>(ba), static_cast<size_t>(alen));
            env->ReleaseByteArrayElements(barr, ba, JNI_ABORT);  // read-only: no copy-back
            env->DeleteLocalRef(barr);
        }
        env->DeleteLocalRef(strencode);
        env->DeleteLocalRef(clsstring);
        return result;
    }

    jstring str2jstring(JNIEnv* env, const char* pat)
    {
        // The java.lang.String class
        jclass strClass = env->FindClass("java/lang/String");
        // Constructor String(byte[], String charsetName), used to turn native bytes into a new String
        jmethodID ctorID = env->GetMethodID(strClass, "<init>", "([BLjava/lang/String;)V");
        // Copy the char* into a new Java byte[]
        jsize len = static_cast<jsize>(strlen(pat));
        jbyteArray bytes = env->NewByteArray(len);
        env->SetByteArrayRegion(bytes, 0, len, reinterpret_cast<const jbyte*>(pat));
        // Charset used to decode the bytes
        jstring encoding = env->NewStringUTF("GB2312");
        // new String(bytes, "GB2312")
        return (jstring)env->NewObject(strClass, ctorID, bytes, encoding);
    }

    // ----------------------------------------------------------------
    // Base64 decoding (CR/LF line breaks are skipped)

    static std::string base64Decode(const char* Data, int DataByte)
    {
        // Decode table indexed by ASCII code; characters outside the alphabet map to 0
        const char DecodeTable[] =
        {
            0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
            0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
            62, // '+'
            0, 0, 0,
            63, // '/'
            52, 53, 54, 55, 56, 57, 58, 59, 60, 61, // '0'-'9'
            0, 0, 0, 0, 0, 0, 0,
            0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12,
            13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, // 'A'-'Z'
            0, 0, 0, 0, 0, 0,
            26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38,
            39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, // 'a'-'z'
        };
        // Table lookup; the unsigned cast avoids negative indices for bytes >= 0x80,
        // and anything past 'z' is treated as 0
        auto decode = [&](char c) -> int {
            unsigned char u = static_cast<unsigned char>(c);
            return u < sizeof(DecodeTable) ? DecodeTable[u] : 0;
        };

        std::string strDecode;  // decoded bytes
        int nValue;
        int i = 0;
        while (i < DataByte)
        {
            if (*Data != '\r' && *Data != '\n')
            {
                if (DataByte - i < 4) {
                    break;  // incomplete group: stop instead of reading past the end
                }
                nValue = decode(*Data++) << 18;
                nValue += decode(*Data++) << 12;
                strDecode += static_cast<char>((nValue & 0x00FF0000) >> 16);
                if (*Data != '=')
                {
                    nValue += decode(*Data++) << 6;
                    strDecode += static_cast<char>((nValue & 0x0000FF00) >> 8);
                    if (*Data != '=')
                    {
                        nValue += decode(*Data++);
                        strDecode += static_cast<char>(nValue & 0x000000FF);
                    }
                }
                i += 4;
            }
            else  // skip CR/LF
            {
                Data++;
                i++;
            }
        }
        return strDecode;
    }

    // Base64 encoding (inserts "\r\n" after every 76 output characters)
    static std::string base64Encode(const unsigned char* Data, int DataByte)
    {
        // Encoding alphabet
        const char EncodeTable[] = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
        std::string strEncode;  // encoded text
        unsigned char Tmp[4] = { 0 };
        int LineLength = 0;
        // Encode each full 3-byte group as 4 characters
        for (int i = 0; i < (int)(DataByte / 3); i++)
        {
            Tmp[1] = *Data++;
            Tmp[2] = *Data++;
            Tmp[3] = *Data++;
            strEncode += EncodeTable[Tmp[1] >> 2];
            strEncode += EncodeTable[((Tmp[1] << 4) | (Tmp[2] >> 4)) & 0x3F];
            strEncode += EncodeTable[((Tmp[2] << 2) | (Tmp[3] >> 6)) & 0x3F];
            strEncode += EncodeTable[Tmp[3] & 0x3F];
            if (LineLength += 4, LineLength == 76) { strEncode += "\r\n"; LineLength = 0; }
        }
        // Encode the remaining 1 or 2 bytes with '=' padding
        int Mod = DataByte % 3;
        if (Mod == 1)
        {
            Tmp[1] = *Data++;
            strEncode += EncodeTable[(Tmp[1] & 0xFC) >> 2];
            strEncode += EncodeTable[((Tmp[1] & 0x03) << 4)];
            strEncode += "==";
        }
        else if (Mod == 2)
        {
            Tmp[1] = *Data++;
            Tmp[2] = *Data++;
            strEncode += EncodeTable[(Tmp[1] & 0xFC) >> 2];
            strEncode += EncodeTable[((Tmp[1] & 0x03) << 4) | ((Tmp[2] & 0xF0) >> 4)];
            strEncode += EncodeTable[((Tmp[2] & 0x0F) << 2)];
            strEncode += "=";
        }
        return strEncode;
    }

    // Mat -> Base64 (JPEG quality 90); not called by the JNI functions below
    static std::string Mat2Base64(const cv::Mat& img, std::string imgType)
    {
        std::vector<uchar> vecImg;
        std::vector<int> vecCompression_params;
        vecCompression_params.push_back(CV_IMWRITE_JPEG_QUALITY);
        vecCompression_params.push_back(90);
        imgType = "." + imgType;
        cv::imencode(imgType, img, vecImg, vecCompression_params);
        return base64Encode(vecImg.data(), static_cast<int>(vecImg.size()));
    }

    // Base64 -> 3-channel BGR Mat; returns an empty Mat if the data is not a decodable image
    cv::Mat Base2Mat(std::string& base64_data)
    {
        std::string s_mat = base64Decode(base64_data.data(), static_cast<int>(base64_data.size()));
        std::vector<uchar> base64_img(s_mat.begin(), s_mat.end());
        return cv::imdecode(base64_img, CV_LOAD_IMAGE_COLOR);
    }

    // ----------------------------------------------------------------

    /*
     * Class:     com_vvyun_service_FaceRecognitionService
     * Method:    getfacepoint
     * Signature: (Ljava/lang/String;)[I
     */
    JNIEXPORT jintArray JNICALL Java_com_vvyun_service_FaceRecognitionService_getfacepoint
    (JNIEnv* env, jclass, jstring imagb64)
    {
        // Initialize the face detection model (loaded from disk on every call)
        seeta::FaceDetection detector("model/seeta_fd_frontal_v1.0.bin");
        detector.SetMinFaceSize(40);
        detector.SetScoreThresh(2.f);
        detector.SetImagePyramidScaleFactor(0.8f);
        detector.SetWindowStep(4, 4);

        std::string decode_str = jstring2str(env, imagb64);
        cv::Mat gallery_img_color = Base2Mat(decode_str);
        if (gallery_img_color.empty()) {
            return env->NewIntArray(0);  // not a decodable image
        }
        cv::Mat gallery_img_gray;
        cv::cvtColor(gallery_img_color, gallery_img_gray, CV_BGR2GRAY);

        // The detector works on the single-channel grayscale image
        ImageData gallery_img_data_gray(gallery_img_gray.cols, gallery_img_gray.rows, gallery_img_gray.channels());
        gallery_img_data_gray.data = gallery_img_gray.data;

        // Detect faces
        std::vector<seeta::FaceInfo> gallery_faces = detector.Detect(gallery_img_data_gray);
        if (gallery_faces.empty()) {
            return env->NewIntArray(0);  // no face found: return an empty array so Java gets a valid result
        }

        // Return the first detected face as {x, y, width, height}
        jint temp[4] = {
            gallery_faces[0].bbox.x, gallery_faces[0].bbox.y,
            gallery_faces[0].bbox.width, gallery_faces[0].bbox.height
        };
        jintArray intArray = env->NewIntArray(4);
        env->SetIntArrayRegion(intArray, 0, 4, temp);
        return intArray;
    }

    /*
     * Class:     com_vvyun_service_FaceRecognitionService
     * Method:    getSimilarity
     * Signature: (Ljava/lang/String;Ljava/lang/String;)F
     */
    JNIEXPORT jfloat JNICALL Java_com_vvyun_service_FaceRecognitionService_getSimilarity
    (JNIEnv* env, jclass, jstring bef, jstring aft)
    {
        // Initialize the face detection model
        seeta::FaceDetection detector("model/seeta_fd_frontal_v1.0.bin");
        detector.SetMinFaceSize(40);
        detector.SetScoreThresh(2.f);
        detector.SetImagePyramidScaleFactor(0.8f);
        detector.SetWindowStep(4, 4);
        // Initialize the face alignment (landmark) model
        seeta::FaceAlignment point_detector("model/seeta_fa_v1.1.bin");
        // Initialize the face identification model
        FaceIdentification face_recognizer((MODEL_DIR + "seeta_fr_v1.0.bin").c_str());

        // Decode the template (gallery) and the image to compare (probe)
        std::string decode_str = jstring2str(env, bef);
        std::string decode_str1 = jstring2str(env, aft);
        cv::Mat gallery_img_color = Base2Mat(decode_str);
        cv::Mat probe_img_color = Base2Mat(decode_str1);
        if (gallery_img_color.empty() || probe_img_color.empty()) {
            return 0;  // at least one input is not a decodable image
        }
        cv::Mat gallery_img_gray;
        cv::cvtColor(gallery_img_color, gallery_img_gray, CV_BGR2GRAY);
        cv::Mat probe_img_gray;
        cv::cvtColor(probe_img_color, probe_img_gray, CV_BGR2GRAY);

        // Wrap the OpenCV buffers as SeetaFace ImageData (no copy)
        ImageData gallery_img_data_color(gallery_img_color.cols, gallery_img_color.rows, gallery_img_color.channels());
        gallery_img_data_color.data = gallery_img_color.data;
        ImageData gallery_img_data_gray(gallery_img_gray.cols, gallery_img_gray.rows, gallery_img_gray.channels());
        gallery_img_data_gray.data = gallery_img_gray.data;
        ImageData probe_img_data_color(probe_img_color.cols, probe_img_color.rows, probe_img_color.channels());
        probe_img_data_color.data = probe_img_color.data;
        ImageData probe_img_data_gray(probe_img_gray.cols, probe_img_gray.rows, probe_img_gray.channels());
        probe_img_data_gray.data = probe_img_gray.data;

        // Detect faces
        std::vector<seeta::FaceInfo> gallery_faces = detector.Detect(gallery_img_data_gray);
        int32_t gallery_face_num = static_cast<int32_t>(gallery_faces.size());
        std::vector<seeta::FaceInfo> probe_faces = detector.Detect(probe_img_data_gray);
        int32_t probe_face_num = static_cast<int32_t>(probe_faces.size());
        if (gallery_face_num == 0 || probe_face_num == 0) {
            return 0;  // no face detected in at least one image
        }

        // Detect 5 facial landmarks on the first face of each image
        seeta::FacialLandmark gallery_points[5];
        point_detector.PointDetectLandmarks(gallery_img_data_gray, gallery_faces[0], gallery_points);
        seeta::FacialLandmark probe_points[5];
        point_detector.PointDetectLandmarks(probe_img_data_gray, probe_faces[0], probe_points);

        // Debug output: draw the landmarks on copies so the images used for
        // feature extraction below stay unmodified
        cv::Mat gallery_debug = gallery_img_color.clone();
        cv::Mat probe_debug = probe_img_color.clone();
        for (int i = 0; i < 5; i++)
        {
            cv::circle(gallery_debug, cv::Point(gallery_points[i].x, gallery_points[i].y), 2, CV_RGB(0, 255, 0));
            cv::circle(probe_debug, cv::Point(probe_points[i].x, probe_points[i].y), 2, CV_RGB(0, 255, 0));
        }
        cv::imwrite("gallery_point_result.jpg", gallery_debug);
        cv::imwrite("probe_point_result.jpg", probe_debug);

        // Extract face identity features into 2048-float buffers
        float gallery_fea[2048];
        float probe_fea[2048];
        face_recognizer.ExtractFeatureWithCrop(gallery_img_data_color, gallery_points, gallery_fea);
        face_recognizer.ExtractFeatureWithCrop(probe_img_data_color, probe_points, probe_fea);

        // Calculate the similarity of the two faces
        float sim = face_recognizer.CalcSimilarity(gallery_fea, probe_fea);
        return (jfloat)sim;
    }