Getting Started with MapReduce (3): Inverted Index

What is an inverted index?

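Inverted indexes come from a practical need: looking up records by the value of an attribute. Each entry in such an index holds an attribute value together with the addresses of all records that have that value. The attribute value determines where the records are, rather than each record determining its attribute values, which is why it is called an inverted index. A file that carries an inverted index is called an inverted-index file, or inverted file for short.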
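To make the definition concrete, here is a minimal in-memory sketch in plain Java (no Hadoop). The class name `InvertedIndexSketch` and the sample documents are illustrative assumptions: the "attribute value" is a word and the "record address" is a document name.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical example class: builds word -> set of document names.
class InvertedIndexSketch {

    // Scan every document once and record, for each word, which documents contain it.
    static Map<String, Set<String>> build(Map<String, String> docs) {
        Map<String, Set<String>> index = new TreeMap<>();
        for (Map.Entry<String, String> doc : docs.entrySet()) {
            for (String word : doc.getValue().split("\\s+")) {
                if (word.isEmpty()) {
                    continue; // leading whitespace yields an empty first token
                }
                index.computeIfAbsent(word, w -> new TreeSet<>()).add(doc.getKey());
            }
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, String> docs = new TreeMap<>();
        docs.put("a.txt", "hello world");
        docs.put("b.txt", "hello hadoop");
        // The word (attribute value) now leads to the documents (records).
        System.out.println(build(docs)); // {hadoop=[b.txt], hello=[a.txt, b.txt], world=[a.txt]}
    }
}
```

Looking up `hello` in the resulting map returns both documents directly, without reading either file again.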

As I understand it, search engines are built on inverted indexes (together with forward indexes). So let's demystify how one works!
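A rough illustration of why this helps a search engine: to answer a query such as "hello AND hadoop", it can fetch the posting list (sorted document IDs) for each word and intersect the lists with a single linear merge, without scanning any document. This is a simplified sketch; `PostingListQuery` and the document IDs are hypothetical, and real engines add ranking, compression and much more.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example: intersect two sorted posting lists (document IDs).
class PostingListQuery {

    // Linear merge: advance whichever list has the smaller current ID.
    static List<Integer> intersect(List<Integer> a, List<Integer> b) {
        List<Integer> result = new ArrayList<>();
        int i = 0, j = 0;
        while (i < a.size() && j < b.size()) {
            int x = a.get(i), y = b.get(j);
            if (x == y) {
                result.add(x); // document contains both words
                i++;
                j++;
            } else if (x < y) {
                i++;
            } else {
                j++;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> hello = List.of(1, 2, 4, 7);
        List<Integer> hadoop = List.of(2, 3, 7, 9);
        System.out.println(intersect(hello, hadoop)); // [2, 7]
    }
}
```

Because both lists are sorted, the merge costs O(m + n) comparisons for lists of length m and n.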

I. Create the ReverseApp class, which implements the inverted index

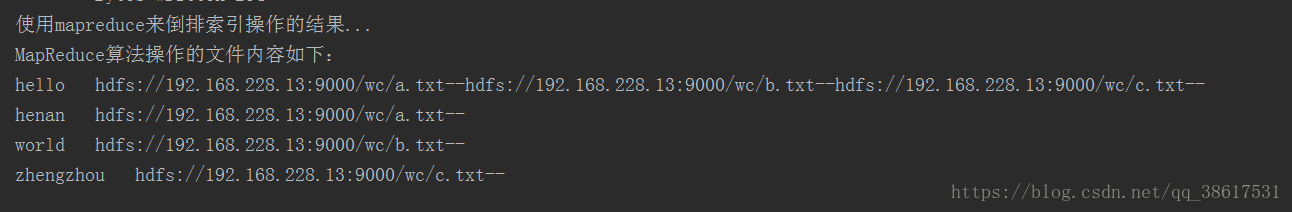

```java
package com.day02;

import com.google.common.io.Resources;
import com.utils.CDUPUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**
 * Inverted index implemented with MapReduce.
 */
public class ReverseApp {

    public static class ReverseMapper extends Mapper<LongWritable, Text, Text, Text> {
        // map() is called once per input line (as many calls as there are lines)
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Get the file this line comes from (full path; path.getName() would give just "a.txt")
            Path path = ((FileSplit) context.getInputSplit()).getPath();
            Text fileName = new Text(path.toString());
            // Words are separated by spaces; skip empty tokens produced by consecutive spaces
            for (String word : value.toString().split(" ")) {
                if (!word.isEmpty()) {
                    context.write(new Text(word), fileName);
                }
            }
        }
    }

    public static class ReverseReducer extends Reducer<Text, Text, Text, Text> {
        // reduce() is called once per distinct key (word); values holds every file name
        // the mappers emitted for that word, e.g. hello -> [a.txt, a.txt, b.txt]
        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            StringBuilder buffer = new StringBuilder();
            for (Text fileName : values) {
                buffer.append(fileName.toString()).append("--");
            }
            context.write(key, new Text(buffer.toString()));
        }
    }

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        Configuration coreSiteConf = new Configuration();
        coreSiteConf.addResource(Resources.getResource("core-site-master2.xml"));
        // Create a job; the second argument is the job name
        Job job = Job.getInstance(coreSiteConf, "Reverse");
        // The packaged jar is uploaded to a temporary staging directory and deleted after the run
        job.setJar("mrdemo/target/mrdemo-1.0-SNAPSHOT.jar");
        // Mapper and Reducer classes
        job.setMapperClass(ReverseMapper.class);
        job.setReducerClass(ReverseReducer.class);
        // Map output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        // Job (reduce) output types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        // Input path
        FileInputFormat.addInputPath(job, new Path("/wc"));
        // Output path: it must not exist yet, so delete it first if it does
        CDUPUtils.deleteFileName("/reout");
        FileOutputFormat.setOutputPath(job, new Path("/reout"));
        // Run the job; true = print progress details
        boolean flag = job.waitForCompletion(true);
        if (flag) {
            System.out.println("Result of building the inverted index with MapReduce...");
            CDUPUtils.readContent("/reout/part-r-00000");
        } else {
            System.out.println(flag + ", job failed");
        }
    }
}
```
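To see what the job computes without a cluster, the sketch below simulates the same data flow in plain Java: "map" emits one (word, fileName) pair per word, the "shuffle" groups and sorts the pairs by word, and "reduce" joins the file names with `--` as ReverseReducer does. `ReverseFlowSimulation` and its sample files are hypothetical; short names like `a.txt` stand in for the full HDFS path string the real mapper emits.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, Hadoop-free simulation of map -> shuffle/sort -> reduce.
class ReverseFlowSimulation {

    // "map" + "shuffle": emit (word, fileName) per word, then group by word in sorted order.
    static Map<String, List<String>> mapAndShuffle(Map<String, List<String>> files) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (Map.Entry<String, List<String>> file : files.entrySet()) {
            for (String line : file.getValue()) {     // one map() call per line
                for (String word : line.split(" ")) { // same delimiter as ReverseMapper
                    if (word.isEmpty()) {
                        continue;
                    }
                    grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(file.getKey());
                }
            }
        }
        return grouped;
    }

    // "reduce": one call per word, building the same "name--name--" string as ReverseReducer.
    static String reduce(List<String> fileNames) {
        StringBuilder buffer = new StringBuilder();
        for (String name : fileNames) {
            buffer.append(name).append("--");
        }
        return buffer.toString();
    }

    public static void main(String[] args) {
        Map<String, List<String>> files = new LinkedHashMap<>();
        files.put("a.txt", List.of("hello world", "hello"));
        files.put("b.txt", List.of("hello hadoop"));
        // Key and value separated by a tab, like the default TextOutputFormat
        mapAndShuffle(files).forEach((word, names) ->
                System.out.println(word + "\t" + reduce(names)));
    }
}
```

With this sample input it prints `hadoop	b.txt--`, `hello	a.txt--a.txt--b.txt--` and `world	a.txt--`. Note that a word occurring twice in one file lists that file twice; collecting the names into a `Set` first would de-duplicate them. On a real cluster Hadoop does not guarantee the order of values within one key, so the file names for a word may come out in a different order.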

II. The custom utility class CDUPUtils used above, which deletes an existing directory and reads file contents

```java
package com.utils;

import com.google.common.io.Resources;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

public class CDUPUtils {

    // Load the same cluster configuration ReverseApp uses (core-site-master2.xml),
    // so these helpers work on the same HDFS as the job
    private static Configuration loadConf() {
        Configuration conf = new Configuration();
        conf.addResource(Resources.getResource("core-site-master2.xml"));
        // conf.set(name, value);  -- extra settings can be added here
        return conf;
    }

    // Delete a file or directory that already exists on HDFS
    public static void deleteFileName(String path) throws IOException {
        // The file or directory to delete
        Path fileName = new Path(path);
        // newInstance() returns a private FileSystem, so closing it does not close the
        // cached instance that FileSystem.get() hands out elsewhere in this JVM
        try (FileSystem fileSystem = FileSystem.newInstance(loadConf())) {
            if (fileSystem.exists(fileName)) {
                System.out.println(fileName + " already exists, deleting it...");
                // true = delete recursively
                if (fileSystem.delete(fileName, true)) {
                    System.out.println(fileName + " deleted");
                } else {
                    System.out.println(fileName + " could not be deleted!");
                }
            }
        }
    }

    // Read a file on HDFS and print it to the console line by line
    public static void readContent(String path) throws IOException {
        // The file to read
        Path fileName = new Path(path);
        try (FileSystem fileSystem = FileSystem.newInstance(loadConf());
             // open() returns an input stream for reading the file
             FSDataInputStream inputStream = fileSystem.open(fileName);
             // Decode as UTF-8 explicitly instead of relying on the platform default charset
             BufferedReader reader = new BufferedReader(
                     new InputStreamReader(inputStream, StandardCharsets.UTF_8))) {
            System.out.println("Contents of the file produced by MapReduce:");
            String line;
            // readLine() returns null when there is no more data
            while ((line = reader.readLine()) != null) {
                System.out.println(line);
            }
        }
    }
}
```
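If you want to try the helper logic without a cluster, the same two operations map onto `java.nio.file` on the local disk. `LocalDirUtils` is a hypothetical class for illustration only: it is not part of the original project and does not touch HDFS.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical local-disk counterpart of CDUPUtils.
class LocalDirUtils {

    // Recursively delete a directory if it exists (like fileSystem.delete(path, true)).
    // Returns false when there was nothing to delete.
    static boolean deleteIfExists(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directory
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
        return true;
    }

    // Read all lines as UTF-8 (like readContent, but returning the lines instead of printing).
    static List<String> readContent(Path file) throws IOException {
        return Files.readAllLines(file, StandardCharsets.UTF_8);
    }
}
```

Deleting children first matters because `Files.delete` refuses to remove a non-empty directory.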

III. Configuration file core-site-master2.xml (replace the host IP with your own)

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.228.13:9000</value>
    </property>
    <property>
        <name>fs.hdfs.impl</name>
        <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
    </property>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>mapreduce.app-submission.cross-platform</name>
        <value>true</value>
    </property>
    <property>
        <description>The address of the applications manager interface in the RM.</description>
        <name>yarn.resourcemanager.address</name>
        <value>192.168.228.13:8032</value>
    </property>
    <property>
        <description>The address of the scheduler interface.</description>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>192.168.228.13:8030</value>
    </property>
    <property>
        <description>The http address of the RM web application.</description>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>192.168.228.13:8088</value>
    </property>
    <property>
        <description>The https address of the RM web application.</description>
        <name>yarn.resourcemanager.webapp.https.address</name>
        <value>192.168.228.13:8090</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>192.168.228.13:8031</value>
    </property>
    <property>
        <description>The address of the RM admin interface.</description>
        <name>yarn.resourcemanager.admin.address</name>
        <value>192.168.228.13:8033</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
```
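Every `<property>` in this file is just a name/value pair, so before submitting a job it can help to check which addresses the file actually defines and whether any name is repeated. The sketch below uses only the JDK's built-in DOM parser; `SiteXmlCheck` and its `properties` method are hypothetical helpers, not part of Hadoop, and they do not reproduce Hadoop's own loading rules (final properties, variable expansion and so on).

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: list the name/value pairs of a *-site.xml file.
class SiteXmlCheck {

    // Returns name -> value in file order; a repeated name keeps the last value
    // and is also added to `duplicates`.
    static Map<String, String> properties(String xml, List<String> duplicates) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element p = (Element) nodes.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() == 0 || values.getLength() == 0) {
                continue; // skip malformed entries
            }
            String name = names.item(0).getTextContent().trim();
            String value = values.item(0).getTextContent().trim();
            if (props.put(name, value) != null) {
                duplicates.add(name);
            }
        }
        return props;
    }
}
```

Reading the file above into a string and calling `properties(xml, dups)` should give `hdfs://192.168.228.13:9000` for `fs.defaultFS`, which must match the NameNode address of your own cluster.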

IV. Dependencies added to the pom

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.zhiyou100</groupId>
    <artifactId>mrdemo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <org.apache.hadoop.version>2.7.5</org.apache.hadoop.version>
    </properties>

    <!-- Distributed computing (MapReduce) -->
    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-core</artifactId>
            <version>${org.apache.hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-common</artifactId>
            <version>${org.apache.hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
            <version>${org.apache.hadoop.version}</version>
        </dependency>

        <!-- Distributed storage (HDFS) -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${org.apache.hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${org.apache.hadoop.version}</version>
        </dependency>

        <!-- Database driver -->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.46</version>
        </dependency>
    </dependencies>
</project>
```

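V. Testing: run from the local machine; the jar is uploaded to HDFS automatically and the job runs on the cluster (this takes a while)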

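Make sure the following holds on HDFS:

1. The /wc directory exists, and the words in its files are separated by spaces.

   For example, I have a file a.txt under /wc with the following content:

2. The /reout directory does not exist on HDFS (ReverseApp calls CDUPUtils.deleteFileName("/reout") to remove it if it does).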
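Why the separator matters: ReverseMapper cuts each line with `split(" ")`, so only a single space character counts as a delimiter. Two spaces in a row produce an empty token, and a tab is not split at all. The hypothetical `TokenizeDemo` below contrasts that with splitting on `"\\s+"`, which is an alternative, not the article's code.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical demo of two ways to cut an input line into words.
class TokenizeDemo {

    // Same delimiter as ReverseMapper: a single space.
    static List<String> bySingleSpace(String line) {
        return Arrays.asList(line.split(" "));
    }

    // Alternative: any run of whitespace (spaces, tabs); blank lines give no words.
    static List<String> byWhitespace(String line) {
        String trimmed = line.trim();
        return trimmed.isEmpty() ? List.of() : Arrays.asList(trimmed.split("\\s+"));
    }

    public static void main(String[] args) {
        String line = "hello  world\thadoop";
        // 3 tokens: "hello", "" and "world<TAB>hadoop" (the tab is not split)
        System.out.println(bySingleSpace(line));
        // 3 tokens: "hello", "world" and "hadoop"
        System.out.println(byWhitespace(line));
    }
}
```

If your input files may contain tabs or repeated spaces, the whitespace version avoids empty keys and merged words in the index.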

Before building the jar, put the third-party jars into a lib folder (create lib under resources), then package.

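After the steps above, run the program directly on the local machine (right-click → Run), and output similar to the following appears: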