Scraping 今日頭條 (Toutiao) with Python

阿新 • Published: 2018-12-16

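I have recently been working on word segmentation for news articles. To make the tags assigned to articles as accurate as possible, I decided to build a tag library. Among news sites, though, only 今日頭條 (Toutiao) tags its articles well; 網易 (NetEase) is mediocre and the rest are unusable. So I decided to write a crawler that collects article tags from Toutiao.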

1: Analyzing the parameters

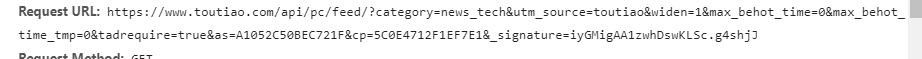

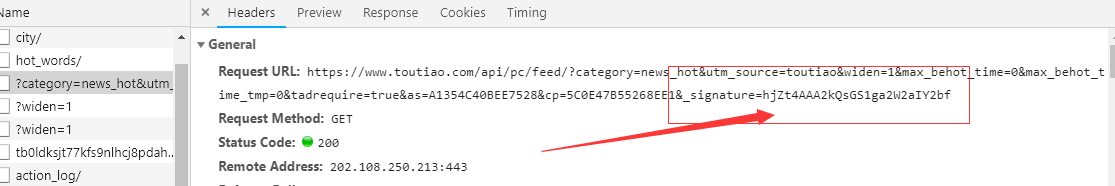

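All of Toutiao's data is loaded asynchronously via AJAX. In Chrome, press F12, open the Network tab, and filter by XHR to see the requests shown above. The following parameters in the request URL change between requests: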


① category

② max_behot_time

③ max_behot_time_tmp

④ as

⑤ cp

⑥ _signature

Of these, only three are needed to get data back: category, max_behot_time, and _signature. I verified this myself.
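As a minimal sketch of how the three parameters fit together, the helper below assembles a feed URL. The endpoint and the fixed `utm_source` and `widen` values are copied from the URL requested by the script later in this post; the function name `build_feed_url` and the placeholder signature are illustrative assumptions, and the sketch is written for Python 3.

```python
from urllib.parse import urlencode

# Endpoint taken from the URL used by the crawler script in this post.
FEED_ENDPOINT = "https://www.toutiao.com/api/pc/feed/"


def build_feed_url(category, max_behot_time, signature):
    """Assemble the feed URL from category, max_behot_time and _signature."""
    query = urlencode({
        "category": category,
        "utm_source": "toutiao",   # fixed value, as in the script's URL
        "widen": 1,                # fixed value, as in the script's URL
        "max_behot_time": max_behot_time,
        "_signature": signature,
    })
    return FEED_ENDPOINT + "?" + query


# "PLACEHOLDER" is not a real signature; it only shows where the value goes.
print(build_feed_url("news_tech", 0, "PLACEHOLDER"))
```

Using `urlencode` instead of string formatting keeps the query correctly escaped if a signature ever contains reserved characters.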

category depends on the channel you request. For the technology channel, for example, category is news_tech:

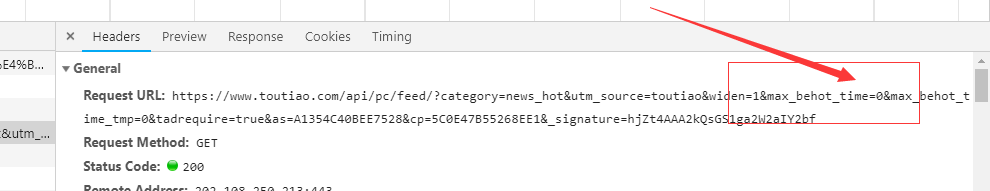

For the hot-news channel, category is news_hot:

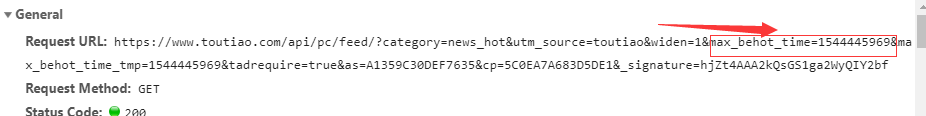

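max_behot_time changes from request to request. It starts at 0, and each later request uses the max_behot_time value found in the JSON returned by the previous request.

For example, the JSON returned for the current max_behot_time contains a max_behot_time of 1544445969,

so the second request is sent with max_behot_time set to 1544445969.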

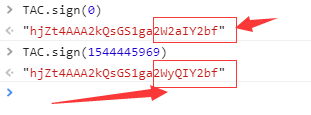
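The cursor handoff described above can be sketched as follows. The `next` / `max_behot_time` path matches how the crawler script in this post reads the response; the function name and the trimmed sample response are assumptions for illustration, written for Python 3.

```python
def next_max_behot_time(payload, current):
    """Return the cursor for the next request.

    Falls back to `current` when the response carries no usable
    next.max_behot_time field.
    """
    try:
        return payload["next"]["max_behot_time"]
    except (KeyError, TypeError):
        return current


cursor = 0  # the first request always uses 0

# Hypothetical, trimmed response body; real responses carry many more fields.
sample_response = {"data": [], "next": {"max_behot_time": 1544445969}}

cursor = next_max_behot_time(sample_response, cursor)
print(cursor)  # 1544445969
```

Keeping the old cursor on a malformed response mirrors the script's behaviour of retrying with the previous value.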

The third parameter is _signature. It is produced by a fairly complicated piece of JavaScript, invoked as TAC.sign(max_behot_time), where the argument is the max_behot_time value described above:

Look closely: they are not identical.
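Since the signing code runs in the page, one way to obtain a signature is to let a browser evaluate it, which is what the script in this post does through Selenium's `execute_script`. The sketch below isolates that call. `sign_cursor` and `FakeDriver` are hypothetical names; the fake driver only echoes the script it receives so the call shape can be seen without a browser, and it does not compute a real signature.

```python
def sign_cursor(driver, max_behot_time):
    """Ask the page's own JavaScript for the _signature of a cursor value.

    `driver` is any object exposing Selenium's `execute_script` method,
    assumed to be already on a Toutiao channel page so that TAC is defined.
    """
    script = "return TAC.sign({})".format(int(max_behot_time))
    return driver.execute_script(script)


class FakeDriver:
    """Offline stand-in for a real browser; echoes the script it is given."""

    def execute_script(self, script):
        return "signed:" + script


print(sign_cursor(FakeDriver(), 0))  # signed:return TAC.sign(0)
```

With a real `webdriver.Chrome` instance in place of `FakeDriver`, the same function would return whatever `TAC.sign` produces in the loaded page.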

That covers all three parameters.

Next comes the code. You can copy and paste it and use it after a few small changes.

    #coding:utf-8
    # Python 2 script (print statements, reload(sys)).
    from selenium import webdriver
    from time import ctime, sleep
    import threading
    import requests
    import time
    import json
    import sys
    import random
    import Two

    reload(sys)
    sys.setdefaultencoding('utf-8')

    # Proxy pool. Rotating proxies did not seem to help much.
    o_g = ["213.162.218.75:55230", "180.180.152.25:51460", "79.173.124.194:47832",
           "50.112.160.137:53910", "211.159.140.111:8080", "95.189.112.214:35508",
           "168.232.207.145:46342", "181.129.139.202:32885", "78.47.157.159:80",
           "112.25.6.15:80", "46.209.135.201:30418", "187.122.224.69:60331",
           "188.0.190.75:59378", "114.234.76.131:8060", "125.209.78.80:32431",
           "183.203.13.135:80", "168.232.207.145:46342", "190.152.5.46:53281",
           "89.250.149.114:60981", "183.232.113.51:80", "213.109.5.230:33138",
           "85.158.186.12:41258", "142.93.51.134:8080", "181.129.181.250:53539"]


    # Crawl one channel: start a browser, then page through the feed.
    def run(ajax):
        name = "word-{a}-".format(a=ajax) + time.strftime("%Y-%m-%d") + ".txt"
        print name
        options = webdriver.ChromeOptions()
        agent = Two.get_agent()
        options.set_headless()
        # Set the browser language to Chinese.
        options.add_argument('lang=zh_CN.UTF-8')
        options.add_argument('user-agent={}'.format(agent))
        # A real browser is used to compute _signature. Change executable_path
        # to wherever chromedriver lives on your machine.
        brower = webdriver.Chrome(
            chrome_options=options,
            executable_path=r'C:\Program Files (x86)\Google\Chrome\Application\chromedriver.exe')
        # brower.get('https://www.toutiao.com/ch/news_hot/')
        brower.get('https://www.toutiao.com/ch/{t}/'.format(t=ajax))
        print 'https://www.toutiao.com/ch/{t}/'.format(t=ajax)
        sinature = brower.execute_script('return TAC.sign(0)')
        print(sinature)
        # Collect the browser's cookies into a Cookie header string.
        cookie = brower.get_cookies()
        print cookie
        cookie = [item['name'] + "=" + item['value'] for item in cookie]
        cookiestr = '; '.join(item for item in cookie)
        time1 = 0
        last = 0
        while 1:
            header1 = {
                'Host': 'www.toutiao.com',
                'User-Agent': agent,
                'Referer': 'https://www.toutiao.com/ch/{}/'.format(ajax),
                "Cookie": cookiestr
            }
            # print cookiestr
            url = 'https://www.toutiao.com/api/pc/feed/?category={t}&utm_source=toutiao&widen=1&max_behot_time={time}&_signature={s}'.format(t=ajax, time=time1, s=sinature)
            print(url)
            # Single-pass loop: break/continue below just end this pass.
            for a in range(0, 1):
                # 17 threads are running; requesting too fast gets you banned.
                sleep(30)
                c = random.randint(0, 23)
                proxies_l = {'http': o_g[c]}
                try:
                    html = requests.get(url, headers=header1, verify=False, proxies=proxies_l)
                    print html.cookies
                    data = html.content
                    print(data)
                    if len(data) == 51:
                        print "banned"
                        sleep(3600)
                    try:
                        s1 = json.loads(data)
                        try:
                            time1 = s1["next"]["max_behot_time"]
                        except Exception as e:
                            print e
                        print time1
                        # Compute the next _signature from the new max_behot_time.
                        sinature = brower.execute_script('return TAC.sign({})'.format(time1))
                        print(sinature)
                        f = open(name, 'a')
                        for i in range(len(s1["data"])):
                            try:
                                # The article's label values are what I need.
                                l = s1["data"][i]["label"]
                            except Exception as e:
                                print e
                                continue
                            for j in range(len(l)):
                                res = l[j] + "\n"
                                f.write(res)
                                # print l[j]
                        f.close()
                        # last = time1
                        break
                    except Exception as e:
                        print("parse error")
                        continue
                except Exception as e:
                    print('no proxies')
                    continue
                # print html.content


    # Channel pages follow the pattern https://www.toutiao.com/ch/<category>/
    categories = [
        "news_hot",            # hot news
        "news_tech",           # technology
        "news_entertainment",  # entertainment
        "news_game",           # games (not much use)
        "news_sports",         # sports
        "news_car",            # cars
        "news_finance",        # finance
        "news_military",       # military
        "news_fashion",        # fashion
        "news_world",          # international
        "news_discovery",      # discovery
        "news_regimen",        # health
        "news_history",        # history
        "news_food",           # food
        "news_travel",         # travel
        "news_baby",           # parenting
        "news_essay",          # essays
    ]
    threads = [threading.Thread(target=run, args=(c,)) for c in categories]

    if __name__ == '__main__':
        for t in threads:
            t.setDaemon(True)
            t.start()
        # Daemon threads die with the main thread, so keep it alive (300 hours).
        time.sleep(1080000)
        print "all over %s" % ctime()
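The label-collecting loop in the script above boils down to reading the `label` list of every item under `data` and skipping items that have none. A small sketch of that step in isolation, written for Python 3: the function name and the sample payload are made up for illustration, and only the `data` / `label` field names come from the script.

```python
def extract_labels(payload):
    """Collect every label from the items in a feed response."""
    labels = []
    for item in payload.get("data", []):
        # Some items have no "label" key; treat that as an empty list.
        labels.extend(item.get("label") or [])
    return labels


# Hypothetical, trimmed payload; the label strings are invented examples.
sample_payload = {
    "data": [
        {"title": "a", "label": ["tag-one", "tag-two"]},
        {"title": "b"},                      # no labels on this item
        {"title": "c", "label": ["tag-three"]},
    ]
}

for label in extract_labels(sample_payload):
    print(label)
```

Separating extraction from file writing makes it easy to deduplicate labels before they go into the tag library.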

The Two module imported by the main script:

    #coding:utf-8
    import requests
    import random
    import json
    import re


    def get_agent():
        # Pool of desktop Chrome User-Agent strings to choose from.
        ua_list = [
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
            "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
        ]
        user_agent = random.choice(ua_list)
        print user_agent
        return user_agent

The code above picks a random User-Agent so that the crawler is less likely to get banned.

The result looks like this:

I hope this helps anyone who needs it.