f2fs series: sit/nat_version_bitmap

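To keep a crash from causing unrecoverable damage to metadata, f2fs keeps two copies of metadata such as the SIT and NAT. Only one of the two copies holds the latest data at any time, and f2fs uses the sit/nat version bitmap stored in the cp pack to indicate which one that is. This article describes how the two copies of the SIT and NAT are laid out, where the sit/nat version bitmap sits inside the cp pack, and finally how the version bitmap changes when the SIT and NAT are updated.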

The following function takes a nid and returns the block address of the f2fs_nat_block that holds the latest f2fs_nat_entry for that nid.

    static pgoff_t current_nat_addr(struct f2fs_sb_info *sbi, nid_t start)
    {
        struct f2fs_nm_info *nm_i = NM_I(sbi);
        pgoff_t block_off;
        pgoff_t block_addr;
        int seg_off;

        block_off = NAT_BLOCK_OFFSET(start);
        seg_off = block_off >> sbi->log_blocks_per_seg;

        block_addr = (pgoff_t)(nm_i->nat_blkaddr +
            (seg_off << sbi->log_blocks_per_seg << 1) +
            (block_off & ((1 << sbi->log_blocks_per_seg) - 1)));

        if (f2fs_test_bit(block_off, nm_i->nat_bitmap))
            block_addr += sbi->blocks_per_seg;

        return block_addr;
    }

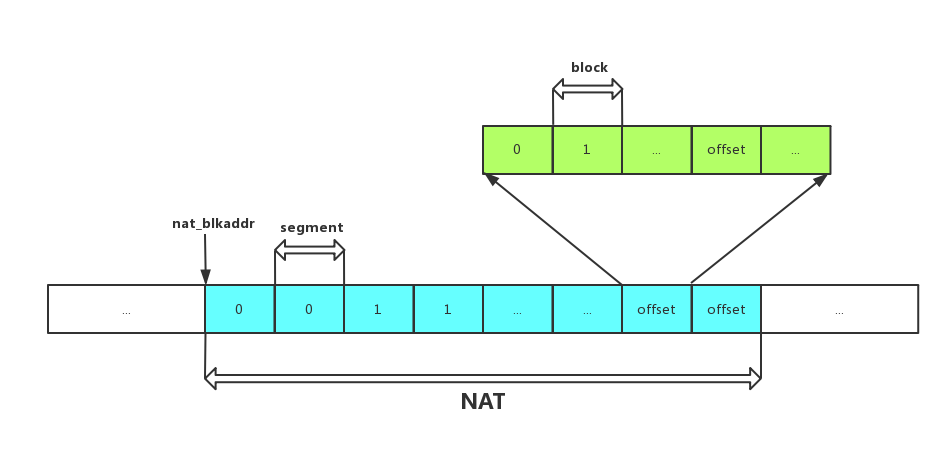

根據上面的原始碼可以看出f2fs_nat_block是以下圖的形式放置的。也就是f2fs_nat_entry為最小的單元,但是以f2fs_nat_block的組織形式組織成磁碟上最小的單位塊,然後相鄰的這些f2fs_nat_block形成一個segment,而相鄰的這些f2fs_nat_block的副本也形成一個segment與其相鄰放置。然後所有的這些segment以這樣的方式重複。
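To make the interleaved layout concrete, here is a minimal user-space sketch of the same address arithmetic. It is not kernel code: `struct nat_layout` and the `model_*` names are hypothetical stand-ins, the function takes the NAT block offset directly instead of a nid, and the bit test assumes the MSB-first bit order within each byte that f2fs uses for its bitmaps.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the fields current_nat_addr() reads. */
struct nat_layout {
    uint32_t nat_blkaddr;        /* first block of the NAT area */
    uint32_t log_blocks_per_seg; /* e.g. 9 for 512 blocks per segment */
    const uint8_t *nat_bitmap;   /* one bit per NAT block */
};

/* Assumption: bits are numbered MSB-first inside each byte. */
static int model_test_bit(uint32_t nr, const uint8_t *bitmap)
{
    return (bitmap[nr >> 3] >> (7 - (nr & 7))) & 1;
}

/* Block address of the currently valid copy of NAT block `block_off`. */
static uint32_t model_current_nat_addr(const struct nat_layout *l,
                                       uint32_t block_off)
{
    uint32_t blocks_per_seg, seg_off, addr;

    assert(l->log_blocks_per_seg < 31);
    blocks_per_seg = 1u << l->log_blocks_per_seg;
    seg_off = block_off >> l->log_blocks_per_seg;

    addr = l->nat_blkaddr
         + (seg_off << l->log_blocks_per_seg << 1) /* skip seg_off segment pairs */
         + (block_off & (blocks_per_seg - 1));     /* offset inside the segment */

    if (model_test_bit(block_off, l->nat_bitmap))
        addr += blocks_per_seg;                    /* second copy: next segment */

    return addr;
}
```

With `nat_blkaddr = 1000` and 512 blocks per segment, NAT block 513 resolves to block 2025 while its bit is clear and to block 2537 once the bit is set, exactly one segment further on.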

The following function takes a segno and returns the block address of the f2fs_sit_block that holds the latest f2fs_sit_entry for that segment.

static inline pgoff_t current_sit_addr(struct f2fs_sb_info *sbi, unsigned int start)

{

struct sit_info *sit_i = SIT_I(sbi);

unsigned int offset = SIT_BLOCK_OFFSET(start);

block_t blk_addr = sit_i->sit_base_addr + offset;

check_seg_range(sbi, start);

if (f2fs_test_bit(offset, sit_i->sit_bitmap))

blk_addr += sit_i->sit_blocks;

return blk_addr;

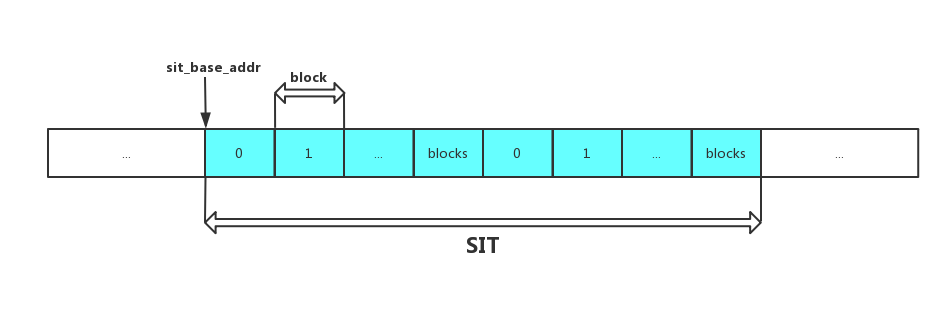

}根據上面的原始碼可以看出f2fs_sit_block是以下圖的形式放置的。也就是f2fs_sit_entry為最小的單元,但是以f2fs_sit_block的組織形式組織成磁碟上最小的單位塊,然後所有的f2fs_sit_block的第一個副本相鄰放置,而這些f2fs_sit_block的第二個副本葉祥林放置並排列在第一個副本的最後一個f2fs_sit_block的後面。
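The SIT layout is simpler than the NAT one, and a small user-space sketch shows the difference. As before, this is only a model: `struct sit_layout` and the `sit_model_*` names are hypothetical, the function takes the SIT block offset rather than a segno, and the MSB-first bit order is an assumption about the bitmap helper.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the fields current_sit_addr() reads. */
struct sit_layout {
    uint32_t sit_base_addr;    /* first block of the SIT area */
    uint32_t sit_blocks;       /* number of blocks in ONE copy */
    const uint8_t *sit_bitmap; /* one bit per SIT block */
};

/* Assumption: bits are numbered MSB-first inside each byte. */
static int sit_model_test_bit(uint32_t nr, const uint8_t *bitmap)
{
    return (bitmap[nr >> 3] >> (7 - (nr & 7))) & 1;
}

/* Block address of the currently valid copy of SIT block `offset`. */
static uint32_t sit_model_current_addr(const struct sit_layout *l,
                                       uint32_t offset)
{
    uint32_t addr;

    assert(offset < l->sit_blocks);
    addr = l->sit_base_addr + offset;   /* position inside the first copy */

    if (sit_model_test_bit(offset, l->sit_bitmap))
        addr += l->sit_blocks;          /* same position in the second copy */

    return addr;
}
```

With `sit_base_addr = 200` and 64 blocks per copy, SIT block 5 lives at block 205 or at block 269 depending on its bit, so the two copies are a whole area apart rather than one segment apart as in the NAT.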

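Next is the placement of the sit/nat version bitmap inside the cp pack, which can be read off the function below. First, a word on the cp_payload field. The first block of the cp pack holds the f2fs_checkpoint structure, but that structure is smaller than one block, so the rest of the block is free and is used for the bitmaps. When the sit/nat version bitmaps are too large to fit in that leftover space, extra blocks are needed to store them, and cp_payload records the number of those extra blocks.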

    static inline void *__bitmap_ptr(struct f2fs_sb_info *sbi, int flag)
    {
        struct f2fs_checkpoint *ckpt = F2FS_CKPT(sbi);
        int offset;

        if (__cp_payload(sbi) > 0) {
            if (flag == NAT_BITMAP)
                return &ckpt->sit_nat_version_bitmap;
            else
                return (unsigned char *)ckpt + F2FS_BLKSIZE;
        } else {
            offset = (flag == NAT_BITMAP) ?
                le32_to_cpu(ckpt->sit_ver_bitmap_bytesize) : 0;
            return &ckpt->sit_nat_version_bitmap + offset;
        }
    }

Walking through the source: when cp_payload > 0, extra space exists for the bitmaps. For NAT_BITMAP, the nat version bitmap occupies nat_ver_bitmap_bytesize bytes starting at the last field of f2fs_checkpoint (sit_nat_version_bitmap). For SIT_BITMAP, the sit version bitmap occupies sit_ver_bitmap_bytesize bytes starting at the second block of the cp pack, that is, F2FS_BLKSIZE bytes past the start of f2fs_checkpoint. When cp_payload = 0, the sit version bitmap occupies sit_ver_bitmap_bytesize bytes starting at the last field of f2fs_checkpoint, and the nat version bitmap occupies the nat_ver_bitmap_bytesize bytes immediately after it.
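The two cases can be summarized as byte offsets from the start of the first cp pack block. The sketch below is a simplified model, not the kernel function: `struct ckpt_model`, `enum bitmap_kind`, and `model_bitmap_offset` are hypothetical names, and the offset of the sit_nat_version_bitmap field is passed in as a parameter instead of being derived from the real structure.

```c
#include <assert.h>
#include <stdint.h>

enum bitmap_kind { MODEL_NAT_BITMAP, MODEL_SIT_BITMAP };

/* Hypothetical summary of the values __bitmap_ptr() depends on. */
struct ckpt_model {
    uint32_t cp_payload;              /* extra blocks after the first block */
    uint32_t sit_ver_bitmap_bytesize; /* size of the sit version bitmap */
    uint32_t bitmap_field_offset;     /* offset of sit_nat_version_bitmap */
    uint32_t block_size;              /* F2FS_BLKSIZE */
};

/* Byte offset of the requested bitmap from the start of the cp pack. */
static uint32_t model_bitmap_offset(const struct ckpt_model *c,
                                    enum bitmap_kind kind)
{
    assert(c->bitmap_field_offset < c->block_size);

    if (c->cp_payload > 0) {
        /* NAT bitmap stays in the first block, SIT bitmap moves out. */
        if (kind == MODEL_NAT_BITMAP)
            return c->bitmap_field_offset;
        return c->block_size;
    }

    /* Both share the first block: SIT bitmap first, NAT bitmap after it. */
    if (kind == MODEL_NAT_BITMAP)
        return c->bitmap_field_offset + c->sit_ver_bitmap_bytesize;
    return c->bitmap_field_offset;
}
```

For illustration, assume the bitmap field starts at byte 192 and the sit version bitmap is 64 bytes long. With cp_payload = 0 the SIT bitmap is at offset 192 and the NAT bitmap at 256; with cp_payload = 1 the NAT bitmap is at 192 and the SIT bitmap at 4096.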

    struct f2fs_checkpoint {
        __le64 checkpoint_ver;
        __le64 user_block_count;
        __le64 valid_block_count;
        __le32 rsvd_segment_count;
        __le32 overprov_segment_count;
        __le32 free_segment_count;
        __le32 cur_node_segno[MAX_ACTIVE_NODE_LOGS];
        __le16 cur_node_blkoff[MAX_ACTIVE_NODE_LOGS];
        __le32 cur_data_segno[MAX_ACTIVE_DATA_LOGS];
        __le16 cur_data_blkoff[MAX_ACTIVE_DATA_LOGS];
        __le32 ckpt_flags;
        __le32 cp_pack_total_block_count;
        __le32 cp_pack_start_sum;
        __le32 valid_node_count;
        __le32 valid_inode_count;
        __le32 next_free_nid;
        __le32 sit_ver_bitmap_bytesize;
        __le32 nat_ver_bitmap_bytesize;
        __le32 checksum_offset;
        __le64 elapsed_time;
        unsigned char alloc_type[MAX_ACTIVE_LOGS];
        unsigned char sit_nat_version_bitmap[1];
    } __packed;

Next is how the version bitmap changes when the SIT and NAT are updated.

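The NAT cache lives in f2fs_nm_info: nat_root manages all cached nat_entry structures, and nat_set_root manages all dirty ones. When NAT information is read and the entry is found neither in the cache nor in the journal, the corresponding f2fs_nat_block is fetched through get_meta_page, the function node_info_from_raw_nat copies the f2fs_nat_entry contents into a node_info, and cache_nat_entry then caches the result as a nat_entry in nat_root. If the node_info is later modified, the entry is added to nat_set_root.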

    void get_node_info(struct f2fs_sb_info *sbi, nid_t nid, struct node_info *ni)
    {
        struct f2fs_nm_info *nm_i = NM_I(sbi);
        struct curseg_info *curseg = CURSEG_I(sbi, CURSEG_HOT_DATA);
        struct f2fs_journal *journal = curseg->journal;
        nid_t start_nid = START_NID(nid);
        struct f2fs_nat_block *nat_blk;
        struct page *page = NULL;
        struct f2fs_nat_entry ne;
        struct nat_entry *e;
        int i;

        ni->nid = nid;

        /* 1. look in the nat cache */
        down_read(&nm_i->nat_tree_lock);
        e = __lookup_nat_cache(nm_i, nid);
        if (e) {
            ni->ino = nat_get_ino(e);
            ni->blk_addr = nat_get_blkaddr(e);
            ni->version = nat_get_version(e);
            up_read(&nm_i->nat_tree_lock);
            return;
        }

        memset(&ne, 0, sizeof(struct f2fs_nat_entry));

        /* 2. look in the journal of the current segment */
        down_read(&curseg->journal_rwsem);
        i = lookup_journal_in_cursum(journal, NAT_JOURNAL, nid, 0);
        if (i >= 0) {
            ne = nat_in_journal(journal, i);
            node_info_from_raw_nat(ni, &ne);
        }
        up_read(&curseg->journal_rwsem);
        if (i >= 0)
            goto cache;

        /* 3. read the currently valid copy of the nat block */
        page = get_current_nat_page(sbi, start_nid);
        nat_blk = (struct f2fs_nat_block *)page_address(page);
        ne = nat_blk->entries[nid - start_nid];
        node_info_from_raw_nat(ni, &ne);
        f2fs_put_page(page, 1);
    cache:
        up_read(&nm_i->nat_tree_lock);
        /* cache nat entry */
        down_write(&nm_i->nat_tree_lock);
        cache_nat_entry(sbi, nid, &ne);
        up_write(&nm_i->nat_tree_lock);
    }

    static struct page *get_current_nat_page(struct f2fs_sb_info *sbi, nid_t nid)
    {
        pgoff_t index = current_nat_addr(sbi, nid);

        return get_meta_page(sbi, index);
    }

    static inline void node_info_from_raw_nat(struct node_info *ni, struct f2fs_nat_entry *raw_ne)
    {
        ni->ino = le32_to_cpu(raw_ne->ino);
        ni->blk_addr = le32_to_cpu(raw_ne->block_addr);
        ni->version = raw_ne->version;
    }

The dirty node_info entries are written to the corresponding page cache only at checkpoint time, and this is done by flush_nat_entries. The main flow is to first flush them into the journal of the curseg, so that they reach disk when the curseg_info is written. If the journal does not have enough space, they are instead flushed into the page cache of the f2fs_nat_block for that node_info, obtained through get_next_nat_page, which in turn uses get_meta_page and grab_meta_page. Note which page this is: get_next_nat_page returns the page of the other copy of the currently valid f2fs_nat_block, and it calls set_to_next_nat to flip the corresponding bit in nat_version_bitmap. That completes both the NAT update and the bitmap transition.
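The journal-or-block decision described above can be sketched as a tiny user-space model. Everything here is illustrative: `struct journal_model`, `model_has_space`, and `model_flush_set` are hypothetical names, the capacity is whatever the caller chooses, and the model ignores the fact that an entry already present in the journal reuses its slot.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of the NAT journal kept in the curseg summary. */
struct journal_model {
    int capacity; /* total journal slots (caller-chosen in this sketch) */
    int used;     /* slots already occupied */
};

enum flush_target { FLUSH_TO_JOURNAL, FLUSH_TO_NAT_BLOCK };

/* Same idea as __has_cursum_space(): is there room for `count` entries? */
static bool model_has_space(const struct journal_model *j, int count)
{
    return count <= j->capacity - j->used;
}

/*
 * Decide where one set of dirty entries goes. A set that fits is written
 * to the journal; otherwise it goes to the other copy of its NAT block,
 * which is the case where the version bitmap bit gets flipped.
 */
static enum flush_target model_flush_set(struct journal_model *j,
                                         int entry_cnt)
{
    assert(entry_cnt >= 0);

    if (model_has_space(j, entry_cnt)) {
        j->used += entry_cnt;
        return FLUSH_TO_JOURNAL;
    }
    return FLUSH_TO_NAT_BLOCK;
}
```

Starting from an empty journal with 38 slots, a set of 30 entries goes to the journal, a following set of 10 no longer fits and goes to a NAT block, and a later set of 8 still fits into the remaining journal space.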

    void flush_nat_entries(struct f2fs_sb_info *sbi)
    {
        struct f2fs_nm_info *nm_i = NM_I(sbi);
        struct curseg_info *curseg = CURSEG_I(sbi, CURSEG_HOT_DATA);
        struct f2fs_journal *journal = curseg->journal;
        struct nat_entry_set *setvec[SETVEC_SIZE];
        struct nat_entry_set *set, *tmp;
        unsigned int found;
        nid_t set_idx = 0;
        LIST_HEAD(sets);

        if (!nm_i->dirty_nat_cnt)
            return;

        down_write(&nm_i->nat_tree_lock);

        if (!__has_cursum_space(journal, nm_i->dirty_nat_cnt, NAT_JOURNAL))
            remove_nats_in_journal(sbi);

        while ((found = __gang_lookup_nat_set(nm_i, set_idx, SETVEC_SIZE, setvec))) {
            unsigned idx;

            set_idx = setvec[found - 1]->set + 1;
            for (idx = 0; idx < found; idx++)
                __adjust_nat_entry_set(setvec[idx], &sets, MAX_NAT_JENTRIES(journal));
        }

        list_for_each_entry_safe(set, tmp, &sets, set_list)
            __flush_nat_entry_set(sbi, set);

        up_write(&nm_i->nat_tree_lock);

        f2fs_bug_on(sbi, nm_i->dirty_nat_cnt);
    }

    static void __flush_nat_entry_set(struct f2fs_sb_info *sbi, struct nat_entry_set *set)
    {
        struct curseg_info *curseg = CURSEG_I(sbi, CURSEG_HOT_DATA);
        struct f2fs_journal *journal = curseg->journal;
        nid_t start_nid = set->set * NAT_ENTRY_PER_BLOCK;
        bool to_journal = true;
        struct f2fs_nat_block *nat_blk;
        struct nat_entry *ne, *cur;
        struct page *page = NULL;

        if (!__has_cursum_space(journal, set->entry_cnt, NAT_JOURNAL))
            to_journal = false;

        if (to_journal) {
            down_write(&curseg->journal_rwsem);
        } else {
            page = get_next_nat_page(sbi, start_nid);
            nat_blk = page_address(page);
            f2fs_bug_on(sbi, !nat_blk);
        }

        list_for_each_entry_safe(ne, cur, &set->entry_list, list) {
            struct f2fs_nat_entry *raw_ne;
            nid_t nid = nat_get_nid(ne);
            int offset;

            if (nat_get_blkaddr(ne) == NEW_ADDR)
                continue;

            if (to_journal) {
                offset = lookup_journal_in_cursum(journal, NAT_JOURNAL, nid, 1);
                f2fs_bug_on(sbi, offset < 0);
                raw_ne = &nat_in_journal(journal, offset);
                nid_in_journal(journal, offset) = cpu_to_le32(nid);
            } else {
                raw_ne = &nat_blk->entries[nid - start_nid];
            }
            raw_nat_from_node_info(raw_ne, &ne->ni);
            nat_reset_flag(ne);
            __clear_nat_cache_dirty(NM_I(sbi), ne);
            if (nat_get_blkaddr(ne) == NULL_ADDR)
                add_free_nid(sbi, nid, false);
        }

        if (to_journal)
            up_write(&curseg->journal_rwsem);
        else
            f2fs_put_page(page, 1);

        f2fs_bug_on(sbi, set->entry_cnt);

        radix_tree_delete(&NM_I(sbi)->nat_set_root, set->set);
        kmem_cache_free(nat_entry_set_slab, set);
    }

    static struct page *get_next_nat_page(struct f2fs_sb_info *sbi, nid_t nid)
    {
        struct page *src_page;
        struct page *dst_page;
        pgoff_t src_off;
        pgoff_t dst_off;
        void *src_addr;
        void *dst_addr;
        struct f2fs_nm_info *nm_i = NM_I(sbi);

        src_off = current_nat_addr(sbi, nid);
        dst_off = next_nat_addr(sbi, src_off);

        src_page = get_meta_page(sbi, src_off);
        dst_page = grab_meta_page(sbi, dst_off);
        f2fs_bug_on(sbi, PageDirty(src_page));

        src_addr = page_address(src_page);
        dst_addr = page_address(dst_page);
        memcpy(dst_addr, src_addr, PAGE_SIZE);
        set_page_dirty(dst_page);
        f2fs_put_page(src_page, 1);

        set_to_next_nat(nm_i, nid);

        return dst_page;
    }

    static inline pgoff_t next_nat_addr(struct f2fs_sb_info *sbi, pgoff_t block_addr)
    {
        struct f2fs_nm_info *nm_i = NM_I(sbi);

        block_addr -= nm_i->nat_blkaddr;
        if ((block_addr >> sbi->log_blocks_per_seg) % 2)
            block_addr -= sbi->blocks_per_seg;
        else
            block_addr += sbi->blocks_per_seg;

        return block_addr + nm_i->nat_blkaddr;
    }

    static inline void raw_nat_from_node_info(struct f2fs_nat_entry *raw_ne, struct node_info *ni)
    {
        raw_ne->ino = cpu_to_le32(ni->ino);
        raw_ne->block_addr = cpu_to_le32(ni->blk_addr);
        raw_ne->version = ni->version;
    }
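The interplay between current_nat_addr, next_nat_addr, and the bitmap flip is easier to see in a self-contained model. The sketch below is user-space code with hypothetical names (`struct nat_model`, `nm_*`); it works on NAT block offsets instead of nids, omits the page copy, and assumes the bitmap helpers number bits MSB-first inside each byte and that set_to_next_nat simply toggles the bit for the block.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of the NAT area and its version bitmap. */
struct nat_model {
    uint32_t nat_blkaddr;
    uint32_t log_blocks_per_seg;
    uint8_t *nat_bitmap;
};

static int nm_test_bit(uint32_t nr, const uint8_t *bm)
{
    return (bm[nr >> 3] >> (7 - (nr & 7))) & 1;
}

static void nm_change_bit(uint32_t nr, uint8_t *bm)
{
    bm[nr >> 3] ^= (uint8_t)(1u << (7 - (nr & 7)));
}

/* Address of the currently valid copy, as in current_nat_addr(). */
static uint32_t nm_current_addr(const struct nat_model *m, uint32_t block_off)
{
    uint32_t per_seg = 1u << m->log_blocks_per_seg;
    uint32_t seg_off = block_off >> m->log_blocks_per_seg;
    uint32_t addr = m->nat_blkaddr
                  + (seg_off << m->log_blocks_per_seg << 1)
                  + (block_off & (per_seg - 1));

    return nm_test_bit(block_off, m->nat_bitmap) ? addr + per_seg : addr;
}

/* Address of the other copy, as in next_nat_addr(). */
static uint32_t nm_next_addr(const struct nat_model *m, uint32_t addr)
{
    uint32_t per_seg = 1u << m->log_blocks_per_seg;

    assert(addr >= m->nat_blkaddr);
    addr -= m->nat_blkaddr;
    if ((addr >> m->log_blocks_per_seg) % 2)
        addr -= per_seg;   /* odd segment: second copy, go back */
    else
        addr += per_seg;   /* even segment: first copy, go forward */
    return addr + m->nat_blkaddr;
}

/* Switch to the other copy; the kernel copies the block contents first. */
static uint32_t nm_flip(struct nat_model *m, uint32_t block_off)
{
    uint32_t dst = nm_next_addr(m, nm_current_addr(m, block_off));

    nm_change_bit(block_off, m->nat_bitmap);
    return dst;
}
```

After a flip, `nm_current_addr` returns the address that `nm_flip` just handed out, and flipping twice returns to the original copy. That is the invariant the version bitmap maintains: the bit always names the copy written most recently.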

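The SIT cache works differently: at mount time an in-memory seg_entry is built for every f2fs_sit_entry, and dirty entries are tracked with a bitmap, dirty_sentries_bitmap. Syncing is essentially the same as for the NAT and is done by flush_sit_entries. The main flow is to first flush the dirty entries into the journal of the curseg, so that they reach disk when the curseg_info is written. If the journal does not have enough space, they are instead flushed into the page cache of the f2fs_sit_block for that seg_entry, obtained through get_next_sit_page, which uses get_meta_page and grab_meta_page. Note that this page belongs to the other copy of the currently valid f2fs_sit_block, and that set_to_next_sit flips the corresponding bit in sit_version_bitmap at the same time. That completes both the SIT update and the bitmap transition.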

    void flush_sit_entries(struct f2fs_sb_info *sbi, struct cp_control *cpc)
    {
        struct sit_info *sit_i = SIT_I(sbi);
        unsigned long *bitmap = sit_i->dirty_sentries_bitmap;
        struct curseg_info *curseg = CURSEG_I(sbi, CURSEG_COLD_DATA);
        struct f2fs_journal *journal = curseg->journal;
        struct sit_entry_set *ses, *tmp;
        struct list_head *head = &SM_I(sbi)->sit_entry_set;
        bool to_journal = true;
        struct seg_entry *se;

        mutex_lock(&sit_i->sentry_lock);

        if (!sit_i->dirty_sentries)
            goto out;

        add_sits_in_set(sbi);

        if (!__has_cursum_space(journal, sit_i->dirty_sentries, SIT_JOURNAL))
            remove_sits_in_journal(sbi);

        list_for_each_entry_safe(ses, tmp, head, set_list) {
            struct page *page = NULL;
            struct f2fs_sit_block *raw_sit = NULL;
            unsigned int start_segno = ses->start_segno;
            unsigned int end = min(start_segno + SIT_ENTRY_PER_BLOCK, (unsigned long)MAIN_SEGS(sbi));
            unsigned int segno = start_segno;

            if (to_journal && !__has_cursum_space(journal, ses->entry_cnt, SIT_JOURNAL))
                to_journal = false;

            if (to_journal) {
                down_write(&curseg->journal_rwsem);
            } else {
                page = get_next_sit_page(sbi, start_segno);
                raw_sit = page_address(page);
            }

            for_each_set_bit_from(segno, bitmap, end) {
                int offset, sit_offset;

                se = get_seg_entry(sbi, segno);

                if (cpc->reason != CP_DISCARD) {
                    cpc->trim_start = segno;
                    add_discard_addrs(sbi, cpc);
                }

                if (to_journal) {
                    offset = lookup_journal_in_cursum(journal, SIT_JOURNAL, segno, 1);
                    f2fs_bug_on(sbi, offset < 0);
                    segno_in_journal(journal, offset) = cpu_to_le32(segno);
                    seg_info_to_raw_sit(se, &sit_in_journal(journal, offset));
                } else {
                    sit_offset = SIT_ENTRY_OFFSET(sit_i, segno);
                    seg_info_to_raw_sit(se, &raw_sit->entries[sit_offset]);
                }

                __clear_bit(segno, bitmap);
                sit_i->dirty_sentries--;
                ses->entry_cnt--;
            }

            if (to_journal)
                up_write(&curseg->journal_rwsem);
            else
                f2fs_put_page(page, 1);

            f2fs_bug_on(sbi, ses->entry_cnt);
            release_sit_entry_set(ses);
        }

        f2fs_bug_on(sbi, !list_empty(head));
        f2fs_bug_on(sbi, sit_i->dirty_sentries);
    out:
        if (cpc->reason == CP_DISCARD) {
            for (; cpc->trim_start <= cpc->trim_end; cpc->trim_start++)
                add_discard_addrs(sbi, cpc);
        }
        mutex_unlock(&sit_i->sentry_lock);

        set_prefree_as_free_segments(sbi);
    }

    static struct page *get_next_sit_page(struct f2fs_sb_info *sbi, unsigned int start)
    {
        struct sit_info *sit_i = SIT_I(sbi);
        struct page *src_page, *dst_page;
        pgoff_t src_off, dst_off;
        void *src_addr, *dst_addr;

        src_off = current_sit_addr(sbi, start);
        dst_off = next_sit_addr(sbi, src_off);

        src_page = get_meta_page(sbi, src_off);
        dst_page = grab_meta_page(sbi, dst_off);
        f2fs_bug_on(sbi, PageDirty(src_page));

        src_addr = page_address(src_page);
        dst_addr = page_address(dst_page);
        memcpy(dst_addr, src_addr, PAGE_SIZE);

        set_page_dirty(dst_page);
        f2fs_put_page(src_page, 1);

        set_to_next_sit(sit_i, start);

        return dst_page;
    }

    static inline pgoff_t next_sit_addr(struct f2fs_sb_info *sbi, pgoff_t block_addr)
    {
        struct sit_info *sit_i = SIT_I(sbi);

        block_addr -= sit_i->sit_base_addr;
        if (block_addr < sit_i->sit_blocks)
            block_addr += sit_i->sit_blocks;
        else
            block_addr -= sit_i->sit_blocks;

        return block_addr + sit_i->sit_base_addr;
    }

    static inline void set_to_next_sit(struct sit_info *sit_i, unsigned int start)
    {
        unsigned int block_off = SIT_BLOCK_OFFSET(start);

        f2fs_change_bit(block_off, sit_i->sit_bitmap);
    }

    static inline void seg_info_to_raw_sit(struct seg_entry *se, struct f2fs_sit_entry *rs)
    {
        unsigned short raw_vblocks = (se->type << SIT_VBLOCKS_SHIFT) | se->valid_blocks;

        rs->vblocks = cpu_to_le16(raw_vblocks);
        memcpy(rs->valid_map, se->cur_valid_map, SIT_VBLOCK_MAP_SIZE);
        memcpy(se->ckpt_valid_map, rs->valid_map, SIT_VBLOCK_MAP_SIZE);
        se->ckpt_valid_blocks = se->valid_blocks;
        rs->mtime = cpu_to_le64(se->mtime);
    }
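As with the NAT, the SIT flip can be checked with a small user-space model. The names below (`struct sit_model`, `sm_*`) are hypothetical, the functions take a SIT block offset rather than a segno, the page copy is left out, and the MSB-first bit order is an assumption about the bitmap helpers.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of the SIT area and its version bitmap. */
struct sit_model {
    uint32_t sit_base_addr;
    uint32_t sit_blocks;   /* number of blocks in ONE copy */
    uint8_t *sit_bitmap;
};

static int sm_test_bit(uint32_t nr, const uint8_t *bm)
{
    return (bm[nr >> 3] >> (7 - (nr & 7))) & 1;
}

static void sm_change_bit(uint32_t nr, uint8_t *bm)
{
    bm[nr >> 3] ^= (uint8_t)(1u << (7 - (nr & 7)));
}

/* Address of the currently valid copy, as in current_sit_addr(). */
static uint32_t sm_current_addr(const struct sit_model *m, uint32_t offset)
{
    uint32_t addr;

    assert(offset < m->sit_blocks);
    addr = m->sit_base_addr + offset;
    return sm_test_bit(offset, m->sit_bitmap) ? addr + m->sit_blocks : addr;
}

/* Address of the other copy, as in next_sit_addr(). */
static uint32_t sm_next_addr(const struct sit_model *m, uint32_t addr)
{
    addr -= m->sit_base_addr;
    if (addr < m->sit_blocks)
        addr += m->sit_blocks;   /* first copy -> second copy */
    else
        addr -= m->sit_blocks;   /* second copy -> first copy */
    return addr + m->sit_base_addr;
}

/* Switch to the other copy, mirroring get_next_sit_page() without the copy. */
static uint32_t sm_flip(struct sit_model *m, uint32_t offset)
{
    uint32_t dst = sm_next_addr(m, sm_current_addr(m, offset));

    sm_change_bit(offset, m->sit_bitmap);
    return dst;
}
```

With `sit_base_addr = 200` and 64 blocks per copy, SIT block 5 alternates between block 205 and block 269 on each flip, and the bitmap bit always points at the copy that was written last.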