Problem After Uninstalling Cloudera: Cannot Add HDFS, Error Reports "Cannot find CDH's bigtop-detect-javahome"

1. Problem

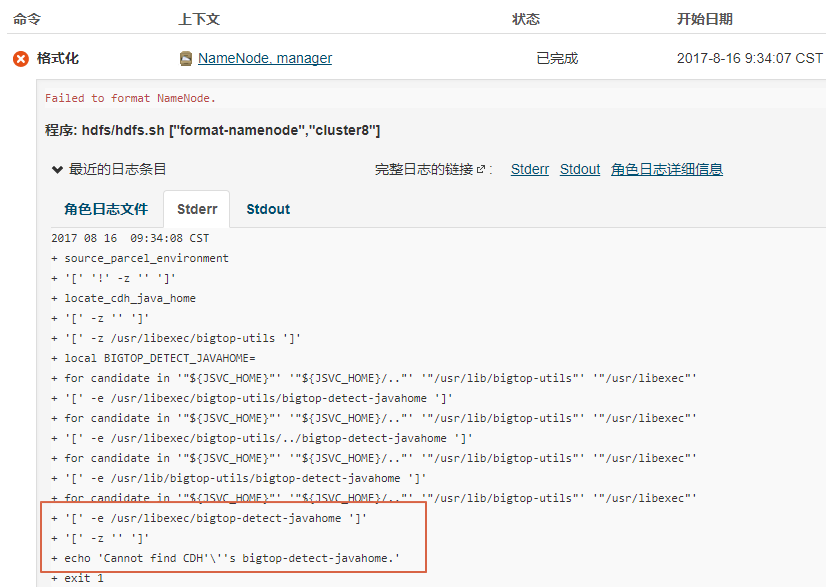

We wrote a shell script to uninstall Cloudera Manager (CM) and ran it on a cluster of three Linux servers. After running the script, we reinstalled CM without trouble. But when we added the HDFS service, we hit a problem: **Failed to format NameNode. Cannot find CDH's bigtop-detect-javahome.**

2. Thinking

- A Google search suggested the cause might be a leftover `/dfs` folder, but after running `rm -rf /dfs` the problem persisted.
- The error message mentioned `/usr/libexec/bigtop-detect-javahome`, so we suspected a bad JAVA_HOME variable. First we commented out the JAVA_HOME variable in `/etc/profile`. For the change to take effect, we ran `source /etc/profile` and reconnected the Xshell session. The problem still persisted.
- Rechecking the uninstall script, we found that it deleted the files `/usr/bin/hadoop*`, which are important.

`/etc/profile` now looks like this:

    #export JAVA_HOME=/usr/java/jdk1.7.0_67-cloudera
    #export PATH=$JAVA_HOME/bin:$PATH
    #export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

3. Solution

Perform the following steps on every server in the cluster.

3.1 Check that the hadoop file is available

Change to the folder `/usr/bin` and check that the file `hadoop` exists. Its content is as follows:

    #!/bin/bash
    # Autodetect JAVA_HOME if not defined
    . /usr/lib/bigtop-utils/bigtop-detect-javahome
    export HADOOP_HOME=/usr/lib/hadoop
    export HADOOP_MAPRED_HOME=/usr/lib/hadoop
    export HADOOP_LIBEXEC_DIR=//usr/lib/hadoop/libexec
    export HADOOP_CONF_DIR=/etc/hadoop/conf
    exec /usr/lib/hadoop/bin/hadoop "$@"

We can see that it sources the file `bigtop-detect-javahome` and sets HADOOP_HOME. Unfortunately, our uninstall script had deleted `/usr/bin/hadoop*` and `/usr/lib/hadoop*`, so we copied those files and folders over from another cluster.
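To see quickly which of these files went missing on a host, each path can be tested in turn. The following is a minimal sketch, assuming the paths to check are the ones referenced by the wrapper script above; `report_missing` is a hypothetical helper name and not part of CDH.

```shell
#!/bin/bash
# report_missing: hypothetical helper, not part of CDH.
# Prints every path given as an argument that does not exist,
# and returns non-zero if at least one is missing.
report_missing() {
    local missing=0 path
    for path in "$@"; do
        if [ ! -e "$path" ]; then
            echo "MISSING: $path"
            missing=1
        fi
    done
    return "$missing"
}

# Example call, using the paths that /usr/bin/hadoop refers to:
# report_missing /usr/bin/hadoop \
#     /usr/lib/bigtop-utils/bigtop-detect-javahome \
#     /usr/lib/hadoop/bin/hadoop /usr/lib/hadoop/libexec /etc/hadoop/conf
```

Running the same call on a healthy cluster and on the broken one makes it easier to decide what needs to be copied over.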

3.2 Check that the bigtop-detect-javahome file is available

Change to the folder `/usr/lib/bigtop-utils` and check that the file `bigtop-detect-javahome` exists. Its content is as follows:

    # attempt to find java
    if [ -z "${JAVA_HOME}" ]; then
      for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}; do
        for candidate in `ls -rvd ${candidate_regex}* 2>/dev/null`; do
          if [ -e ${candidate}/bin/java ]; then
            export JAVA_HOME=${candidate}
            break 2
          fi
        done
      done
    fi

This file sets the JAVA_HOME variable when the system has not already defined it, for example in `/etc/profile`. In the end, I found that HDFS can be installed whether or not `/etc/profile` defines JAVA_HOME.
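The detection loop can be tried in isolation. The following is a simplified sketch and not the CDH file itself: `find_java_home` is a hypothetical helper, it takes the candidate prefixes as arguments instead of reading `JAVA_HOME_CANDIDATES`, and it relies on plain glob ordering where the original uses `ls -rvd` (a reverse version sort), so the two can disagree on which JDK counts as the newest.

```shell
#!/bin/bash
# find_java_home: hypothetical helper that imitates the detection loop.
# Each argument is a path prefix; the shell expands "prefix*" and the
# function prints the last match (in glob sort order) that contains an
# executable bin/java. Returns non-zero if nothing matches.
find_java_home() {
    local prefix candidate found=""
    for prefix in "$@"; do
        for candidate in "$prefix"*; do
            if [ -x "$candidate/bin/java" ]; then
                found=$candidate
            fi
        done
        # Stop at the first prefix that produced a usable JDK.
        if [ -n "$found" ]; then
            echo "$found"
            return 0
        fi
    done
    return 1
}

# Only fill in JAVA_HOME when it is empty, as the original file does.
# The prefix below is an assumed example location:
# [ -z "$JAVA_HOME" ] && JAVA_HOME=$(find_java_home /usr/java/jdk1.7) && export JAVA_HOME
```

Because an already-set JAVA_HOME skips the search entirely, a wrong value in the environment is never corrected by this mechanism; only an empty one triggers detection.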

3.3 Going further

The first two steps are enough to solve the problem, but further searching showed me that `/etc/default/` is the real configuration folder (see http://blog.sina.com.cn/s/blog_5d9aca630101pxr1.html). Add `export JAVA_HOME=path` to `/etc/default/bigtop-utils`, then run `source /etc/default/bigtop-utils`. You only need to do this once, and all CDH components will use that variable to locate the JDK.
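When this has to be repeated on several servers, it helps if the edit can be run more than once without stacking up duplicate lines. The following is a minimal sketch under that assumption; `set_java_home_default` is a hypothetical helper, not a CDH tool, and the JDK path in the example call is simply the one from the `/etc/profile` shown earlier.

```shell
#!/bin/bash
# set_java_home_default: hypothetical helper, not part of CDH.
# Usage: set_java_home_default <defaults-file> <jdk-path>
# Drops any existing "export JAVA_HOME=" line from the file and
# appends a fresh one, so running it twice leaves a single entry.
set_java_home_default() {
    local file=$1 jdk=$2 tmp
    tmp=$(mktemp) || return 1
    if [ -f "$file" ]; then
        # Keep every line except previous JAVA_HOME exports.
        # grep exits non-zero when nothing is left, which is fine here.
        grep -v '^export JAVA_HOME=' "$file" > "$tmp" || true
    fi
    echo "export JAVA_HOME=$jdk" >> "$tmp"
    # Write back through the original file so its permissions are kept.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example call (needs write access to the file, typically root):
# set_java_home_default /etc/default/bigtop-utils /usr/java/jdk1.7.0_67-cloudera
```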

4. Conclusion

- Always pay close attention to the log file content and how it relates to the error.

- Make good use of your time and be humble enough to ask others for advice; otherwise the problem will just linger.