Source Code Analysis of the Server Startup Process in Android's Binder Inter-Process Communication (IPC) Mechanism

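The previous article described how a Server in Android's Binder IPC mechanism obtains the Service Manager remote interface, that is, how the defaultServiceManager function is implemented. Once the Server has this remote interface, it adds its own Service to the Service Manager, starts itself, and waits for Client requests. This article walks through the source code to show how the Server startup process works.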


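This article uses a concrete example to illustrate how a Server starts in the Binder mechanism. Android provides multimedia playback as a service, so we analyze the implementation of MediaPlayerService to understand how the Media Server starts.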

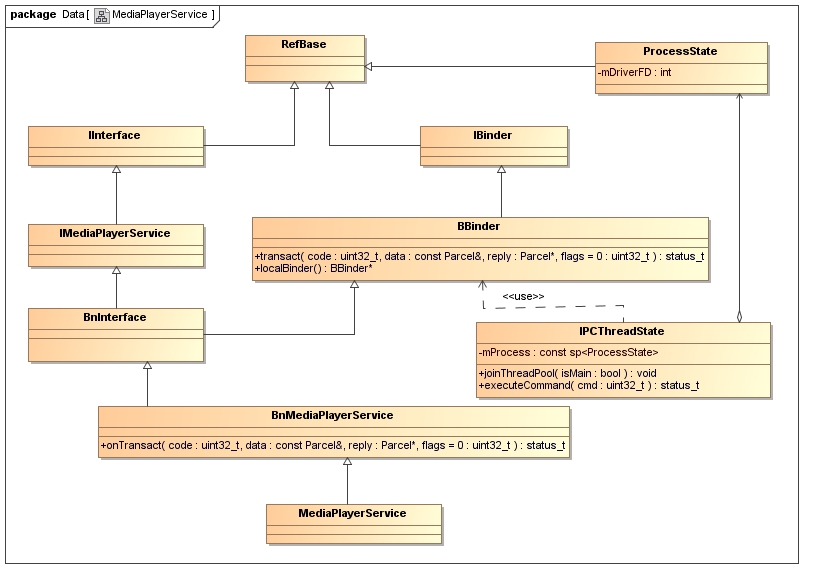

First, take a look at the class diagram of MediaPlayerService, which will help with the discussion that follows.

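MediaPlayerService, the main subject of this article, inherits from the BnMediaPlayerService class. Readers familiar with Binder will know that BnMediaPlayerService is a Binder Native class, used to handle Client requests. BnMediaPlayerService inherits from BnInterface<IMediaPlayerService>. BnInterface is a template class defined in frameworks/base/include/binder/IInterface.h: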

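    template<typename INTERFACE>
    class BnInterface : public INTERFACE, public BBinder
    {
    public:
        virtual sp<IInterface> queryLocalInterface(const String16& _descriptor);
        virtual const String16& getInterfaceDescriptor() const;

    protected:
        virtual IBinder* onAsBinder();
    };

In fact, BnMediaPlayerService does not receive Client requests directly. IPCThreadState receives them, and IPCThreadState in turn relies on the ProcessState class to interact with the Binder driver. For the relationship between IPCThreadState and ProcessState, see the previous article, "A Brief Look at How the Server and Client Obtain the Service Manager Interface in Android's Binder IPC Mechanism"; it is also described later in this article. After IPCThreadState receives a request from a Client, it calls the transact function of the BBinder class with the relevant arguments. BBinder::transact eventually calls the onTransact function of BnMediaPlayerService, and that is where the Client's request actually gets handled.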
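The transact-to-onTransact hand-off described above can be illustrated with a small stand-alone sketch. MiniBinder and MiniPlayerService are hypothetical names invented for this illustration, and the request code 1 and the string payloads are assumptions as well; the real BBinder works with Parcel objects and has more responsibilities.

```cpp
#include <cstdint>
#include <string>

// Hypothetical stand-in for BBinder: transact() is the fixed entry point
// that the IPC layer calls, onTransact() is the hook subclasses override.
class MiniBinder {
public:
    virtual ~MiniBinder() = default;

    int transact(uint32_t code, const std::string& data, std::string* reply) {
        // The base class forwards the request to the subclass hook.
        return onTransact(code, data, reply);
    }

protected:
    virtual int onTransact(uint32_t code, const std::string& data,
                           std::string* reply) = 0;
};

// Hypothetical stand-in for a Bn* class that handles concrete requests.
class MiniPlayerService : public MiniBinder {
protected:
    int onTransact(uint32_t code, const std::string& data,
                   std::string* reply) override {
        if (code == 1) {  // assumed request code, for illustration only
            if (reply != nullptr) *reply = "played:" + data;
            return 0;
        }
        return -1;  // unknown request code
    }
};
```

A caller that only knows the MiniBinder interface calls transact, and the service-specific logic in onTransact runs without the caller naming the concrete class.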

With the class structure of MediaPlayerService in mind, we can move on to the main topic.

First, let's see how MediaPlayerService is started. The startup code is in frameworks/base/media/mediaserver/main_mediaserver.cpp:

    int main(int argc, char** argv)
    {
        sp<ProcessState> proc(ProcessState::self());
        sp<IServiceManager> sm = defaultServiceManager();
        LOGI("ServiceManager: %p", sm.get());
        AudioFlinger::instantiate();
        MediaPlayerService::instantiate();
        CameraService::instantiate();
        AudioPolicyService::instantiate();
        ProcessState::self()->startThreadPool();
        IPCThreadState::self()->joinThreadPool();
    }

Look at this statement first:

    sp<ProcessState> proc(ProcessState::self());

ProcessState::self, the globals it uses, and the ProcessState constructor are as follows:

    sp<ProcessState> ProcessState::self()
    {
        if (gProcess != NULL) return gProcess;

        AutoMutex _l(gProcessMutex);
        if (gProcess == NULL) gProcess = new ProcessState;
        return gProcess;
    }

    Mutex gProcessMutex;
    sp<ProcessState> gProcess;

    ProcessState::ProcessState()
        : mDriverFD(open_driver())
        , mVMStart(MAP_FAILED)
        , mManagesContexts(false)
        , mBinderContextCheckFunc(NULL)
        , mBinderContextUserData(NULL)
        , mThreadPoolStarted(false)
        , mThreadPoolSeq(1)
    {
        if (mDriverFD >= 0) {
            // XXX Ideally, there should be a specific define for whether we
            // have mmap (or whether we could possibly have the kernel module
            // available).
    #if !defined(HAVE_WIN32_IPC)
            // mmap the binder, providing a chunk of virtual address space to receive transactions.
            mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
            if (mVMStart == MAP_FAILED) {
                // *sigh*
                LOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");
                close(mDriverFD);
                mDriverFD = -1;
            }
    #else
            mDriverFD = -1;
    #endif
        }
        if (mDriverFD < 0) {
            // Need to run without the driver, starting our own thread pool.
        }
    }

Start with the implementation of open_driver, which is also in frameworks/base/libs/binder/ProcessState.cpp:

    static int open_driver()
    {
        if (gSingleProcess) {
            return -1;
        }

        int fd = open("/dev/binder", O_RDWR);
        if (fd >= 0) {
            fcntl(fd, F_SETFD, FD_CLOEXEC);
            int vers;
    #if defined(HAVE_ANDROID_OS)
            status_t result = ioctl(fd, BINDER_VERSION, &vers);
    #else
            status_t result = -1;
            errno = EPERM;
    #endif
            if (result == -1) {
                LOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
                close(fd);
                fd = -1;
            }
            if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
                LOGE("Binder driver protocol does not match user space protocol!");
                close(fd);
                fd = -1;
            }
    #if defined(HAVE_ANDROID_OS)
            size_t maxThreads = 15;
            result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
            if (result == -1) {
                LOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
            }
    #endif
        } else {
            LOGW("Opening '/dev/binder' failed: %s\n", strerror(errno));
        }
        return fd;
    }

For the implementation of open inside the Binder driver, see the earlier article "A Brief Look at How Service Manager Becomes the Daemon of Android's Binder IPC Mechanism"; it is not repeated here. After the /dev/binder device file is opened, the Binder driver creates a struct binder_proc instance for the MediaPlayerService process to maintain its process context.
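Stepping back to ProcessState::self for a moment: it creates the single ProcessState instance lazily, guarded by a mutex. The sketch below shows the same idea in standard C++. MiniProcessState and its constructions() counter are hypothetical, introduced only for illustration. Unlike the original, which checks gProcess once before taking the lock, this sketch takes the lock on every call, because an unsynchronized read of a shared pointer is a data race in standard C++.

```cpp
#include <memory>
#include <mutex>

// Hypothetical stand-in for ProcessState; only the creation pattern matters.
class MiniProcessState {
public:
    // Returns the one shared instance, creating it on first use.
    static std::shared_ptr<MiniProcessState> self() {
        std::lock_guard<std::mutex> lock(mutex());
        std::shared_ptr<MiniProcessState>& inst = instance();
        if (!inst) {
            inst.reset(new MiniProcessState());
        }
        return inst;
    }

    // How many times the constructor has run (for demonstration only).
    static int constructions() { return count(); }

private:
    MiniProcessState() { ++count(); }

    // Function-local statics avoid static initialization order problems.
    static std::mutex& mutex() {
        static std::mutex m;
        return m;
    }
    static std::shared_ptr<MiniProcessState>& instance() {
        static std::shared_ptr<MiniProcessState> p;
        return p;
    }
    static int& count() {
        static int c = 0;
        return c;
    }
};
```

Calling MiniProcessState::self() any number of times yields the same object, which is why main can call ProcessState::self() again later and still reach the instance whose constructor opened the driver.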

Let's look at how the ioctl file operation handles the BINDER_VERSION command:

    status_t result = ioctl(fd, BINDER_VERSION, &vers);

This call enters the binder_ioctl function of the Binder driver:

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        int ret;
        struct binder_proc *proc = filp->private_data;
        struct binder_thread *thread;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;

        /*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

        ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
        if (ret)
            return ret;

        mutex_lock(&binder_lock);
        thread = binder_get_thread(proc);
        if (thread == NULL) {
            ret = -ENOMEM;
            goto err;
        }

        switch (cmd) {
        ......
        case BINDER_VERSION:
            if (size != sizeof(struct binder_version)) {
                ret = -EINVAL;
                goto err;
            }
            if (put_user(BINDER_CURRENT_PROTOCOL_VERSION, &((struct binder_version *)ubuf)->protocol_version)) {
                ret = -EINVAL;
                goto err;
            }
            break;
        ......
        }
        ret = 0;
    err:
        ......
        return ret;
    }

This is simple: it writes BINDER_CURRENT_PROTOCOL_VERSION into the user buffer that the arg parameter points to, and returns. BINDER_CURRENT_PROTOCOL_VERSION is a macro defined in kernel/common/drivers/staging/android/binder.h:

    /* This is the current protocol version. */
    #define BINDER_CURRENT_PROTOCOL_VERSION 7

    /* Use with BINDER_VERSION, driver fills in fields. */
    struct binder_version {
        /* driver protocol version -- increment with incompatible change */
        signed long protocol_version;
    };

One important point: this is the first time binder_ioctl is entered after /dev/binder was opened, so the call to binder_get_thread creates a struct binder_thread for the current thread to maintain its thread context. See "A Brief Look at How Service Manager Becomes the Daemon of Android's Binder IPC Mechanism" for details.
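To tie the two sides of the BINDER_VERSION exchange together, here is a small user-space model of it. Nothing here talks to a real driver: fake_driver_version_ioctl, MiniBinderVersion, and version_accepted are hypothetical names for this sketch, and the only value taken from the article is the protocol version 7.

```cpp
// Protocol version quoted from binder.h in the article.
constexpr long kBinderCurrentProtocolVersion = 7;

// Hypothetical mirror of struct binder_version.
struct MiniBinderVersion {
    long protocol_version;
};

// Hypothetical model of the driver side of BINDER_VERSION:
// fill in the version field and report success with 0.
inline int fake_driver_version_ioctl(MiniBinderVersion* out, long driver_version) {
    if (out == nullptr) return -1;
    out->protocol_version = driver_version;
    return 0;
}

// Models the checks open_driver performs on the ioctl result:
// the descriptor is kept only if the call succeeded and the versions match.
inline bool version_accepted(int result, long vers) {
    if (result == -1) return false;
    return result == 0 && vers == kBinderCurrentProtocolVersion;
}
```

With a model driver reporting version 7 the check passes; any other version, or a failed ioctl, corresponds to the branches in open_driver that close the file descriptor and set fd to -1.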

Next, let's look at how the ioctl file operation handles the BINDER_SET_MAX_THREADS command:

    result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

This call also ends up in the binder_ioctl function of the Binder driver. We only look at the logic related to BINDER_SET_MAX_THREADS:

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        int ret;
        struct binder_proc *proc = filp->private_data;
        struct binder_thread *thread;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;

        /*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

        ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
        if (ret)
            return ret;

        mutex_lock(&binder_lock);
        thread = binder_get_thread(proc);
        if (thread == NULL) {
            ret = -ENOMEM;
            goto err;
        }

        switch (cmd) {
        ......
        case BINDER_SET_MAX_THREADS:
            if (copy_from_user(&proc->max_threads, ubuf, sizeof(proc->max_threads))) {
                ret = -EINVAL;
                goto err;
            }
            break;
        ......
        }
        ret = 0;
    err:
        ......
        return ret;
    }

The handler simply copies the value supplied by user space into proc->max_threads.

Back in the ProcessState constructor, mmap is also called to map the device file /dev/binder into memory. That function is covered in detail in "A Brief Look at How Service Manager Becomes the Daemon of Android's Binder IPC Mechanism" and is not repeated here. The macro BINDER_VM_SIZE is defined in ProcessState.cpp:

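    #define BINDER_VM_SIZE ((1*1024*1024) - (4096 *2))

This completes the creation of gProcess, the globally unique ProcessState instance. Back in the main function in frameworks/base/media/mediaserver/main_mediaserver.cpp, the next step is to call defaultServiceManager to obtain the Service Manager remote interface, which was already covered in the previous article.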
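As a quick check of the arithmetic in BINDER_VM_SIZE, the snippet below evaluates the same expression at compile time. The names kBinderVmSize, kAssumedPageSize, and binder_vm_pages are made up for this sketch, and reading 4096 as the page size is an assumption based on the literal in the macro.

```cpp
#include <cstddef>

// Same expression as the BINDER_VM_SIZE macro: 1 MiB minus two 4096-byte units.
constexpr std::size_t kBinderVmSize = (1 * 1024 * 1024) - (4096 * 2);

// Assumption: 4096 in the macro stands for the page size.
constexpr std::size_t kAssumedPageSize = 4096;

// Number of assumed-size pages covered by the mapping.
constexpr std::size_t binder_vm_pages() {
    return kBinderVmSize / kAssumedPageSize;
}

static_assert(kBinderVmSize == 1040384, "1048576 - 8192 bytes");
static_assert(binder_vm_pages() == 254, "256 pages minus 2");
```

So the region that mmap requests for receiving transactions is 1,040,384 bytes, slightly under 1 MiB.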

Next comes MediaPlayerService::instantiate, which adds MediaPlayerService to the Service Manager. The function is defined in frameworks/base/media/libmediaplayerservice/MediaPlayerService.cpp:

    void MediaPlayerService::instantiate() {
        defaultServiceManager()->addService(
                String16("media.player"), new MediaPlayerService());
    }

As noted in the previous article, "A Brief Look at How the Server and Client Obtain the Service Manager Interface in Android's Binder IPC Mechanism", defaultServiceManager actually returns a BpServiceManager instance. So let's look at the implementation of BpServiceManager::addService, which is in frameworks/base/libs/binder/IServiceManager.cpp:

    class BpServiceManager : public BpInterface<IServiceManager>
    {
    public:
        BpServiceManager(const sp<IBinder>& impl)
            : BpInterface<IServiceManager>(impl)
        {
        }

        ......

        virtual status_t addService(const String16& name, const sp<IBinder>& service)
        {
            Parcel data, reply;
            data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
            data.writeString16(name);
            data.writeStrongBinder(service);
            status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
            return err == NO_ERROR ? reply.readExceptionCode() : err;
        }

        ......
    };

Look at this call first:

    data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

Parcel::writeInterfaceToken is implemented as follows:

    // Write RPC headers. (previously just the interface token)
    status_t Parcel::writeInterfaceToken(const String16& interface)
    {
        writeInt32(IPCThreadState::self()->getStrictModePolicy() |
                   STRICT_MODE_PENALTY_GATHER);
        // currently the interface identification token is just its name as a string
        return writeString16(interface);
    }

This writes one integer and one string into the Parcel. Now look at the next call:

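    data.writeString16(name);

This writes another string into the Parcel; name is the "media.player" string passed in from MediaPlayerService::instantiate. Moving on: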

    data.writeStrongBinder(service);

Parcel::writeStrongBinder delegates to flatten_binder:

    status_t Parcel::writeStrongBinder(const sp<IBinder>& val)
    {
        return flatten_binder(ProcessState::self(), val, this);
    }

A Binder object is transferred in the form of a struct flat_binder_object, which is defined as:

    /*
     * This is the flattened representation of a Binder object for transfer
     * between processes. The 'offsets' supplied as part of a binder transaction
     * contains offsets into the data where these structures occur. The Binder
     * driver takes care of re-writing the structure type and data as it moves
     * between processes.
     */
    struct flat_binder_object {
        /* 8 bytes for large_flat_header. */
        unsigned long type;
        unsigned long flags;

        /* 8 bytes of data. */
        union {
            void *binder; /* local object */
            signed long handle; /* remote object */
        };

        /* extra data associated with local object */
        void *cookie;
    };

Let's step into the flatten_binder function:

    status_t flatten_binder(const sp<ProcessState>& proc,
        const sp<IBinder>& binder, Parcel* out)
    {
        flat_binder_object obj;

        obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;
        if (binder != NULL) {
            IBinder *local = binder->localBinder();
            if (!local) {
                BpBinder *proxy = binder->remoteBinder();
                if (proxy == NULL) {
                    LOGE("null proxy");
                }
                const int32_t handle = proxy ? proxy->handle() : 0;
                obj.type = BINDER_TYPE_HANDLE;
                obj.handle = handle;
                obj.cookie = NULL;
            } else {
                obj.type = BINDER_TYPE_BINDER;
                obj.binder = local->getWeakRefs();
                obj.cookie = local;
            }
        } else {
            obj.type = BINDER_TYPE_BINDER;
            obj.binder = NULL;
            obj.cookie = NULL;
        }

        return finish_flatten_binder(binder, obj, out);
    }

The function first sets the flags field:

    obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

The binder passed in is the MediaPlayerService instance created with new in MediaPlayerService::instantiate, so it is not null. Because MediaPlayerService inherits from BBinder, it is a local Binder entity, so binder->localBinder() returns a BBinder pointer that is certainly not null, and the following statements execute:

    obj.type = BINDER_TYPE_BINDER;
    obj.binder = local->getWeakRefs();
    obj.cookie = local;

The function then calls finish_flatten_binder to write this flat_binder_object into the Parcel:

    inline static status_t finish_flatten_binder(
        const sp<IBinder>& binder, const flat_binder_object& flat, Parcel* out)
    {
        return out->writeObject(flat, false);
    }

    status_t Parcel::writeObject(const flat_binder_object& val, bool nullMetaData)
    {
        const bool enoughData = (mDataPos+sizeof(val)) <= mDataCapacity;
        const bool enoughObjects = mObjectsSize < mObjectsCapacity;
        if (enoughData && enoughObjects) {
    restart_write:
            *reinterpret_cast<flat_binder_object*>(mData+mDataPos) = val;

            // Need to write meta-data?
            if (nullMetaData || val.binder != NULL) {
                mObjects[mObjectsSize] = mDataPos;
                acquire_object(ProcessState::self(), val, this);
                mObjectsSize++;
            }

            // remember if it's a file descriptor
            if (val.type == BINDER_TYPE_FD) {
                mHasFds = mFdsKnown = true;
            }

            return finishWrite(sizeof(flat_binder_object));
        }

        if (!enoughData) {
            const status_t err = growData(sizeof(val));
            if (err != NO_ERROR) return err;
        }
        if (!enoughObjects) {
            size_t newSize = ((mObjectsSize+2)*3)/2;
            size_t* objects = (size_t*)realloc(mObjects, newSize*sizeof(size_t));
            if (objects == NULL) return NO_MEMORY;
            mObjects = objects;
            mObjectsCapacity = newSize;
        }

        goto restart_write;
    }

Besides copying the flat_binder_object into the data buffer, writeObject also records the object's offset within the Parcel:

    mObjects[mObjectsSize] = mDataPos;

Back in BpServiceManager::addService, the next statement is:

    status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

BpBinder::transact forwards the call to IPCThreadState::transact:

    status_t BpBinder::transact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
    {
        // Once a binder has died, it will never come back to life.
        if (mAlive) {
            status_t status = IPCThreadState::self()->transact(
                mHandle, code, data, reply, flags);
            if (status == DEAD_OBJECT) mAlive = 0;
            return status;
        }

        return DEAD_OBJECT;
    }

    status_t IPCThreadState::transact(int32_t handle,
                                      uint32_t code, const Parcel& data,
                                      Parcel* reply, uint32_t flags)
    {
        status_t err = data.errorCheck();

        flags |= TF_ACCEPT_FDS;

        IF_LOG_TRANSACTIONS() {
            TextOutput::Bundle _b(alog);
            alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "
                << handle << " / code " << TypeCode(code) << ": "
                << indent << data << dedent << endl;
        }

        if (err == NO_ERROR) {
            LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),
                (flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");
            err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
        }

        if (err != NO_ERROR) {
            if (reply) reply->setError(err);
            return (mLastError = err);
        }

        if ((flags & TF_ONE_WAY) == 0) {
            #if 0
            if (code == 4) { // relayout
                LOGI(">>>>>> CALLING transaction 4");
            } else {
                LOGI(">>>>>> CALLING transaction %d", code);
            }
            #endif
            if (reply) {
                err = waitForResponse(reply);
            } else {
                Parcel fakeReply;
                err = waitForResponse(&fakeReply);
            }
            #if 0
            if (code == 4) { // relayout
                LOGI("<<<<<< RETURNING transaction 4");
            } else {
                LOGI("<<<<<< RETURNING transaction %d", code);
            }
            #endif

            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "
                    << handle << ": ";
                if (reply) alog << indent << *reply << dedent << endl;
                else alog << "(none requested)" << endl;
            }
        } else {
            err = waitForResponse(NULL, NULL);
        }

        return err;
    }

IPCThreadState::transact first calls writeTransactionData to prepare a struct binder_transaction_data, which will shortly be passed to the Binder driver. The definition of struct binder_transaction_data is described in detail in "A Brief Look at How Service Manager Becomes the Daemon of Android's Binder IPC Mechanism", and readers may want to revisit it. For convenience, the definition is listed again here:

    struct binder_transaction_data {
        /* The first two are only used for bcTRANSACTION and brTRANSACTION,
         * identifying the target and contents of the transaction.
         */
        union {
            size_t handle; /* target descriptor of command transaction */
            void *ptr; /* target descriptor of return transaction */
        } target;
        void *cookie; /* target object cookie */
        unsigned int code; /* transaction command */

        /* General information about the transaction. */
        unsigned int flags;
        pid_t sender_pid;
        uid_t sender_euid;
        size_t data_size; /* number of bytes of data */
        size_t offsets_size; /* number of bytes of offsets */

        /* If this transaction is inline, the data immediately
         * follows here; otherwise, it ends with a pointer to
         * the data buffer.
         */
        union {
            struct {
                /* transaction data */
                const void *buffer;
                /* offsets from buffer to flat_binder_object structs */
                const void *offsets;
            } ptr;
            uint8_t buf[8];
        } data;
    };

writeTransactionData is implemented as follows:

    status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
        int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
    {
        binder_transaction_data tr;

        tr.target.handle = handle;
        tr.code = code;
        tr.flags = binderFlags;

        const status_t err = data.errorCheck();
        if (err == NO_ERROR) {
            tr.data_size = data.ipcDataSize();
            tr.data.ptr.buffer = data.ipcData();
            tr.offsets_size = data.ipcObjectsCount()*sizeof(size_t);
            tr.data.ptr.offsets = data.ipcObjects();
        } else if (statusBuffer) {
            tr.flags |= TF_STATUS_CODE;
            *statusBuffer = err;
            tr.data_size = sizeof(status_t);
            tr.data.ptr.buffer = statusBuffer;
            tr.offsets_size = 0;
            tr.data.ptr.offsets = NULL;
        } else {
            return (mLastError = err);
        }

        mOut.writeInt32(cmd);
        mOut.write(&tr, sizeof(tr));

        return NO_ERROR;
    }

Note that cmd here is BC_TRANSACTION. The function is simple. In this scenario, it executes the following statements to initialize the local variable tr:

    tr.data_size = data.ipcDataSize();
    tr.data.ptr.buffer = data.ipcData();
    tr.offsets_size = data.ipcObjectsCount()*sizeof(size_t);
    tr.data.ptr.offsets = data.ipcObjects();

The data Parcel referenced by tr was filled by the writes traced earlier in addService, which amount to:

    writeInt32(IPCThreadState::self()->getStrictModePolicy() | STRICT_MODE_PENALTY_GATHER);
    writeString16("android.os.IServiceManager");
    writeString16("media.player");
    writeStrongBinder(new MediaPlayerService());

After writeTransactionData returns, IPCThreadState::transact calls waitForResponse, which in turn calls talkWithDriver:

    status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    {
        int32_t cmd;
        int32_t err;

        while (1) {
            if ((err=talkWithDriver()) < NO_ERROR) break;
            err = mIn.errorCheck();
            if (err < NO_ERROR) break;
            if (mIn.dataAvail() == 0) continue;

            cmd = mIn.readInt32();

            IF_LOG_COMMANDS() {
                alog << "Processing waitForResponse Command: "
                    << getReturnString(cmd) << endl;
            }

            switch (cmd) {
            case BR_TRANSACTION_COMPLETE:
                if (!reply && !acquireResult) goto finish;
                break;

            case BR_DEAD_REPLY:
                err = DEAD_OBJECT;
                goto finish;

            case BR_FAILED_REPLY:
                err = FAILED_TRANSACTION;
                goto finish;

            case BR_ACQUIRE_RESULT:
                {
                    LOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");
                    const int32_t result = mIn.readInt32();
                    if (!acquireResult) continue;
                    *acquireResult = result ? NO_ERROR : INVALID_OPERATION;
                }
                goto finish;

            case BR_REPLY:
                {
                    binder_transaction_data tr;
                    err = mIn.read(&tr, sizeof(tr));
                    LOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                    if (err != NO_ERROR) goto finish;

                    if (reply) {
                        if ((tr.flags & TF_STATUS_CODE) == 0) {
                            reply->ipcSetDataReference(
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(size_t),
                                freeBuffer, this);
                        } else {
                            err = *static_cast<const status_t*>(tr.data.ptr.buffer);
                            freeBuffer(NULL,
                                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                                tr.data_size,
                                reinterpret_cast<const size_t*>(tr.data.ptr.offsets),
                                tr.offsets_size/sizeof(size_t), this);
                        }
                    } else {
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(size_t), this);
                        continue;
                    }
                }
                goto finish;

            default:
                err = executeCommand(cmd);
                if (err != NO_ERROR) goto finish;
                break;
            }
        }

    finish:
        if (err != NO_ERROR) {
            if (acquireResult) *acquireResult = err;
            if (reply) reply->setError(err);
            mLastError = err;
        }

        return err;
    }

    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        LOG_ASSERT(mProcess->mDriverFD >= 0, "Binder driver is not opened");

        binder_write_read bwr;

        // Is the read buffer empty?
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();

        // We don't want to write anything if we are still reading
        // from data left in the input buffer and the caller
        // has requested to read the next data.
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        bwr.write_size = outAvail;
        bwr.write_buffer = (long unsigned int)mOut.data();

        // This is what we'll read.
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (long unsigned int)mIn.data();
        } else {
            bwr.read_size = 0;
        }

        IF_LOG_COMMANDS() {
            TextOutput::Bundle _b(alog);
            if (outAvail != 0) {
                alog << "Sending commands to driver: " << indent;
                const void* cmds = (const void*)bwr.write_buffer;
                const void* end = ((const uint8_t*)cmds)+bwr.write_size;
                alog << HexDump(cmds, bwr.write_size) << endl;
                while (cmds < end) cmds = printCommand(alog, cmds);
                alog << dedent;
            }
            alog << "Size of receive buffer: " << bwr.read_size
                << ", needRead: " << needRead << ", doReceive: " << doReceive << endl;
        }

        // Return immediately if there is nothing to do.
        if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

        bwr.write_consumed = 0;
        bwr.read_consumed = 0;
        status_t err;
        do {
            IF_LOG_COMMANDS() {
                alog << "About to read/write, write size = " << mOut.dataSize() << endl;
            }
    #if defined(HAVE_ANDROID_OS)
            if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
                err = NO_ERROR;
            else
                err = -errno;
    #else
            err = INVALID_OPERATION;
    #endif
            IF_LOG_COMMANDS() {
                alog << "Finished read/write, write size = " << mOut.dataSize() << endl;
            }
        } while (err == -EINTR);

        IF_LOG_COMMANDS() {
            alog << "Our err: " << (void*)err << ", write consumed: "
                << bwr.write_consumed << " (of " << mOut.dataSize()
                << "), read consumed: " << bwr.read_consumed << endl;
        }

        if (err >= NO_ERROR) {
            if (bwr.write_consumed > 0) {
                if (bwr.write_consumed < (ssize_t)mOut.dataSize())
                    mOut.remove(0, bwr.write_consumed);
                else
                    mOut.setDataSize(0);
            }
            if (bwr.read_consumed > 0) {
                mIn.setDataSize(bwr.read_consumed);
                mIn.setDataPosition(0);
            }
            IF_LOG_COMMANDS() {
                TextOutput::Bundle _b(alog);
                alog << "Remaining data size: " << mOut.dataSize() << endl;
                alog << "Received commands from driver: " << indent;
                const void* cmds = mIn.data();
                const void* end = mIn.data() + mIn.dataSize();
                alog << HexDump(cmds, mIn.dataSize()) << endl;
                while (cmds < end) cmds = printReturnCommand(alog, cmds);
                alog << dedent;
            }
            return NO_ERROR;
        }

        return err;
    }

Finally, the call ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) enters the binder_ioctl function of the Binder driver. We only look at the logic for the case where cmd is BINDER_WRITE_READ:

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        int ret;
        struct binder_proc *proc = filp->private_data;
        struct binder_thread *thread;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;

        /*printk(KERN_INFO "binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

        ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
        if (ret)
            return ret;

        mutex_lock(&binder_lock);
        thread = binder_get_thread(proc);
        if (thread == NULL) {
            ret = -ENOMEM;
            goto err;
        }

        switch (cmd) {
        case BINDER_WRITE_READ: {
            struct binder_write_read bwr;
            if (size != sizeof(struct binder_write_read)) {
                ret = -EINVAL;
                goto err;
            }
            if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
                ret = -EFAULT;
                goto err;
            }
            if (binder_debug_mask & BINDER_DEBUG_READ_WRITE)
                printk(KERN_INFO "binder: %d:%d write %ld at %08lx, read %ld at %08lx\n",
                       proc->pid, thread->pid, bwr.write_size, bwr.write_buffer, bwr.read_size, bwr.read_buffer);
            if (bwr.write_size > 0) {
                ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);
                if (ret < 0) {
                    bwr.read_consumed = 0;
                    if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                        ret = -EFAULT;
                    goto err;
                }
            }
            if (bwr.read_size > 0) {
                ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consume