Scrapy: A Getting-Started Example

阿新 • Published: 2018-12-20

Scrapy crawler (Qiushibaike)

Step 1: Preparation

Scrape the following fields from each post:

- name
- age
- content

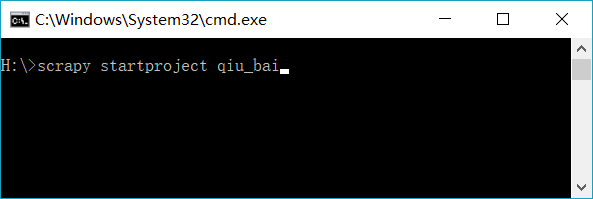

Create the crawler project on the H: drive

    # Create the crawler project (project name: qiu_bai)
    scrapy startproject qiu_bai

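- This automatically generates the project's directories and files: scrapy.cfg and a qiu_bai package containing items.py, middlewares.py, pipelines.py, settings.py, and a spiders directory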

Step 2: Switch to the spiders directory

    # Generate the spider file
    scrapy genspider qiubai www.qiushibaike.com

- This generates a qiubai.py file in the spiders directory

Step 3: Open items.py and paste in the following code

    import scrapy


    # Fields to scrape
    class QiuBaiItem(scrapy.Item):
        name = scrapy.Field()
        age = scrapy.Field()
        content = scrapy.Field()

Step 4: Open spiders/qiubai.py and copy in the following code

    # -*- coding: utf-8 -*-
    import re

    import scrapy

    from qiu_bai.items import QiuBaiItem


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        allowed_domains = ['www.qiushibaike.com']
        start_urls = ['https://www.qiushibaike.com/8hr/page/1/']

        def parse(self, response):
            # Each child div of #content-left is one post
            for each in response.xpath('//div[@id="content-left"]/div'):
                item = QiuBaiItem()
                # extract_first() returns None (it does not raise) when nothing
                # matches, so fall back to defaults explicitly
                name = each.xpath('div/a[2]/h2/text()').extract_first()
                age = each.xpath('div[1]/div/text()').extract_first()
                content = each.xpath('a[1]/div/span/text()').extract_first()
                item['name'] = name.strip('\n') if name else 'Anonymous user'
                item['age'] = age or 'No age'
                item['content'] = content.strip('\n') if content else ''
                yield item

            # After all posts on this page: follow the next page, up to page 13
            s = response.url
            match = re.search(r'(\d+)/$', s)
            if match and int(match.group(1)) < 13:
                now_page = int(match.group(1))
                # Keep the trailing slash so the next URL still matches the pattern
                url = re.sub(r'(\d+)/$', '%d/' % (now_page + 1), s)
                print("this is next page url:", url)
                print('*' * 100)
                yield scrapy.Request(url, callback=self.parse)
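
The pagination logic at the end of `parse` can be checked in isolation, without running a crawl. The following is a minimal sketch, assuming the listing URLs end in `/page/<n>/` as in `start_urls`. The helper name `next_page_url` and its `last_page` parameter are hypothetical and not part of the spider. It keeps the trailing slash in the rewritten URL so that the same pattern matches again on the next page.

```python
import re

# Matches the page number right before the trailing slash, e.g. ".../page/3/"
PAGE_PATTERN = re.compile(r'(\d+)/$')


def next_page_url(url, last_page=13):
    """Return the URL of the next listing page, or None when there is none.

    Hypothetical helper for illustration; last_page=13 mirrors the limit
    used in the spider.
    """
    match = PAGE_PATTERN.search(url)
    if match is None:
        return None  # URL does not end in "<number>/"
    now_page = int(match.group(1))
    if now_page >= last_page:
        return None  # already on the last page to crawl
    # Keep the trailing slash so the result still matches PAGE_PATTERN
    return PAGE_PATTERN.sub('%d/' % (now_page + 1), url)


if __name__ == '__main__':
    print(next_page_url('https://www.qiushibaike.com/8hr/page/1/'))
    print(next_page_url('https://www.qiushibaike.com/8hr/page/13/'))
```

Inside `parse`, the returned value could be passed to `scrapy.Request(url, callback=self.parse)` whenever it is not `None`.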

Step 5: Open pipelines.py (save the scraped data to a JSON file)

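    import json


    class QiuBaiPipeline(object):
        def __init__(self):
            # Binary mode: each item is written as one UTF-8 encoded JSON line
            self.file = open('qiubai.json', 'wb')

        def process_item(self, item, spider):
            # ensure_ascii=False keeps Chinese text readable instead of \uXXXX escapes
            content = json.dumps(dict(item), ensure_ascii=False) + "\n"
            self.file.write(content.encode('utf8'))
            return item

        def close_spider(self, spider):
            # Called once when the spider finishes
            self.file.close()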
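
To see what `process_item` writes for each item, the serialization step can be run on its own. This is a minimal sketch, assuming a sample item with made-up values. `serialize_item` is a hypothetical helper name, and an in-memory `io.BytesIO` buffer stands in for the `qiubai.json` file.

```python
import io
import json


def serialize_item(item, ensure_ascii=False):
    """Serialize one item as a UTF-8 encoded JSON line (hypothetical helper)."""
    line = json.dumps(dict(item), ensure_ascii=ensure_ascii) + "\n"
    return line.encode('utf8')


# Made-up sample item, for illustration only
sample = {'name': '匿名使用者', 'age': '25', 'content': 'hello'}

buffer = io.BytesIO()  # stands in for open('qiubai.json', 'wb')
buffer.write(serialize_item(sample))

readable = buffer.getvalue().decode('utf8')
escaped = serialize_item(sample, ensure_ascii=True).decode('utf8')

if __name__ == '__main__':
    print(readable, end='')  # Chinese characters are written as-is
    print(escaped, end='')   # non-ASCII characters become \uXXXX escapes
```

Both forms parse back to the same data with `json.loads`. The difference is only in how readable the file is when opened in a text editor.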

Step 6: Open settings.py and copy in the following settings

    BOT_NAME = 'qiu_bai'

    SPIDER_MODULES = ['qiu_bai.spiders']
    NEWSPIDER_MODULE = 'qiu_bai.spiders'

    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36'

    ROBOTSTXT_OBEY = False

    DOWNLOAD_DELAY = 3

    ITEM_PIPELINES = {
        'qiu_bai.pipelines.QiuBaiPipeline': 300,
    }
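
The integer assigned to each pipeline in `ITEM_PIPELINES` sets its order: Scrapy passes items through pipelines from the lowest value to the highest, and the values are customarily chosen in the 0-1000 range. The sketch below only mimics that ordering in plain Python and is not Scrapy's implementation. `qiu_bai.pipelines.CleanTextPipeline` is a hypothetical second pipeline that does not exist in this project.

```python
# Hypothetical configuration with a second pipeline added for illustration
ITEM_PIPELINES = {
    'qiu_bai.pipelines.QiuBaiPipeline': 300,
    'qiu_bai.pipelines.CleanTextPipeline': 100,  # hypothetical, not in the project
}


def pipeline_order(pipelines):
    """Return pipeline paths sorted by ascending priority value."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


order = pipeline_order(ITEM_PIPELINES)

if __name__ == '__main__':
    for path in order:
        print(path)
```

With these values the hypothetical cleaning pipeline would see each item before `QiuBaiPipeline` writes it to disk.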

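Step 7: Run the spider

- Go to the spiders directory

Type the following in the terminal:

    scrapy crawl qiubai

- Press Enter to run the command and start scraping the required information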
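
Once the crawl finishes, `qiubai.json` holds one JSON object per line rather than a single JSON array, so it has to be read line by line. This is a minimal sketch, assuming the file was written by `QiuBaiPipeline`. `load_items` is a hypothetical helper name, and the example first writes two made-up records to a temporary file so that it runs without an actual crawl.

```python
import json
import os
import tempfile


def load_items(path):
    """Read a one-object-per-line JSON file into a list of dicts (hypothetical helper)."""
    items = []
    with open(path, encoding='utf8') as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                items.append(json.loads(line))
    return items


# Write two made-up records in the same one-object-per-line format
sample_path = os.path.join(tempfile.mkdtemp(), 'qiubai.json')
with open(sample_path, 'wb') as f:
    for record in ({'name': 'a', 'age': '20', 'content': 'first'},
                   {'name': 'b', 'age': '30', 'content': 'second'}):
        f.write((json.dumps(record, ensure_ascii=False) + "\n").encode('utf8'))

items = load_items(sample_path)

if __name__ == '__main__':
    print(len(items), 'items loaded')
```

For real output, call `load_items('qiubai.json')` from the directory where the crawl was started, since the pipeline opens the file with a relative path.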