Introduction to Hive, a Data Warehouse Tool on Linux, with Detailed Installation and Deployment Steps

阿新 • Published: 2018-12-21

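Introduction: Apache Hive is a data warehouse built on top of Hadoop that supports data summarization, querying, and analysis. Hive maps structured data files to database tables and provides a SQL-like query language; it translates those SQL statements into MapReduce jobs for execution. Its main advantages are a low learning curve and the ability to produce simple MapReduce statistics quickly with SQL-like statements, without developing a dedicated MapReduce application, which makes it well suited to statistical analysis in a data warehouse.

Installation and deployment:

Prerequisites: Hadoop and MySQL must already be installed. That is not covered in detail here; see my column at https://blog.csdn.net/CowBoySoBusy/column/info/28102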

Installation:

# Extract the archive

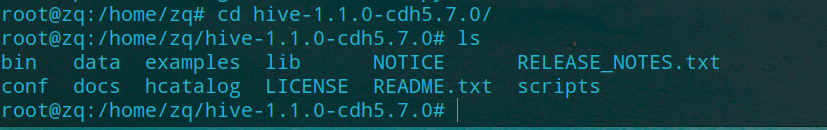

tar -zxvf hive-1.1.0-cdh5.7.0.tar.gz

# Copy the extracted Hive directory (not the tarball) to the location of your choice

cp -r hive-1.1.0-cdh5.7.0 /home/zq
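As an alternative to extracting and then copying, tar can unpack directly into the target directory with -C. The following is a minimal sketch, not the author's method: extract_hive is a hypothetical helper name, and it assumes the archive unpacks into a single directory named after the tarball.

```shell
# Hypothetical helper: extract a Hive tarball straight into a target directory.
#   $1 = path to the .tar.gz archive
#   $2 = destination directory (created if missing)
extract_hive() {
    tarball=$1
    dest=$2
    if [ ! -f "$tarball" ]; then
        echo "archive not found: $tarball" >&2
        return 1
    fi
    mkdir -p "$dest" || return 1
    # -C makes tar unpack inside $dest, so no separate cp step is needed
    tar -zxf "$tarball" -C "$dest" || return 1
    # Assumption: the archive's top-level directory is the file name minus .tar.gz
    echo "$dest/$(basename "$tarball" .tar.gz)"
}
```

For example, extract_hive hive-1.1.0-cdh5.7.0.tar.gz /home/zq would print the path of the unpacked directory under /home/zq, provided the assumption about the directory name holds.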

Import the MySQL driver: place the MySQL JDBC driver jar in the $HIVE_HOME/lib directory.
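A quick way to confirm the driver is in place is to look for the jar under lib. This is only a sketch: has_mysql_driver is a hypothetical helper, and the file name pattern mysql-connector-*.jar is an assumption about how the downloaded driver is named.

```shell
# Hypothetical check: succeed if a MySQL connector jar exists under <hive_home>/lib.
#   $1 = Hive installation directory
has_mysql_driver() {
    # Assumption: the driver jar is named mysql-connector-<something>.jar
    for jar in "$1"/lib/mysql-connector-*.jar; do
        # If nothing matches, the pattern stays literal and the -f test fails
        [ -f "$jar" ] && return 0
    done
    return 1
}
```

It could be used as: has_mysql_driver "$HIVE_HOME" || echo "MySQL driver jar is missing".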

Configure environment variables:

vi /etc/profile

export HIVE_HOME=/home/zq/hive-1.1.0-cdh5.7.0

export PATH=$PATH:$HIVE_HOME/bin
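The two export lines can also be appended from a script rather than typed in an editor. A minimal sketch, assuming a hypothetical helper named add_hive_to_profile that skips the append when HIVE_HOME is already exported; writing to /etc/profile normally requires root.

```shell
# Hypothetical helper: append the Hive exports to a profile file, once.
#   $1 = profile file (for example /etc/profile)
#   $2 = Hive installation directory
add_hive_to_profile() {
    profile=$1
    hive_home=$2
    # Do nothing if an earlier run (or a manual edit) already exported HIVE_HOME
    if grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    {
        echo "export HIVE_HOME=\"$hive_home\""
        # Single quotes keep $PATH and $HIVE_HOME unexpanded until the profile is sourced
        echo 'export PATH=$PATH:$HIVE_HOME/bin'
    } >> "$profile"
}
```

For example: add_hive_to_profile /etc/profile /home/zq/hive-1.1.0-cdh5.7.0, where the second argument is whatever directory Hive was copied to. The profile still has to be re-sourced afterwards.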

source /etc/profile

hive-env.sh configuration:

cd /home/zq/hive-1.1.0-cdh5.7.0/conf

# Create hive-env.sh from the template

cp -r hive-env.sh.template hive-env.sh

# Edit the configuration

vi hive-env.sh

--------------------------------------------

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

export HADOOP_HOME=/home/zq/hadoop-2.6.0-cdh5.7.0

export HIVE_HOME=/home/zq/hive-1.1.0-cdh5.7.0

# HADOOP_HOME=${bin}/../../hadoop

hive-site.xml configuration:

# Create hive-site.xml from the template

cp -r hive-default.xml.template hive-site.xml

# Edit the configuration

vi hive-site.xml

--------------------------------------------

<configuration>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/zq/hive-1.1.0-cdh5.7.0/tmp</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/home/zq/hive-1.1.0-cdh5.7.0/tmp</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/home/zq/hive-1.1.0-cdh5.7.0/tmp</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/home/zq/hive-1.1.0-cdh5.7.0/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://127.0.0.1:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

<property>

<name>hive.hwi.listen.port</name>

<value>9999</value>

<description>This is the port the Hive Web Interface will listen on</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/home/zq/hive-1.1.0-cdh5.7.0/log</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>datanucleus.fixedDatastore</name>

<value>false</value>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

</configuration>
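To double-check what a property resolves to after editing, the value can be read back from the file. The following is a rough sketch rather than a real XML parser: get_hive_property is a hypothetical helper that assumes each <name> and <value> element sits on a single line, as in the listing above, and it returns the last occurrence when a property is defined more than once, which matches how Hadoop-style configuration files are resolved.

```shell
# Hypothetical helper: print the value of one property from a Hadoop-style XML file.
#   $1 = path to hive-site.xml
#   $2 = property name
# Assumptions: <name>...</name> and <value>...</value> each fit on one line,
# and XML entities such as &amp; are returned as written, not decoded.
get_hive_property() {
    awk -v want="$2" '
        /<name>/ {
            # Remember the most recent property name, trimmed of surrounding blanks
            name = $0
            sub(/^.*<name>[ \t]*/, "", name)
            sub(/[ \t]*<\/name>.*$/, "", name)
        }
        /<value>/ && name == want {
            # Keep overwriting so the last definition in the file wins
            value = $0
            sub(/^.*<value>/, "", value)
            sub(/<\/value>.*$/, "", value)
            found = 1
        }
        END { if (found) print value }
    ' "$1"
}
```

One possible use is creating the local scratch directory the configuration points at: mkdir -p "$(get_hive_property hive-site.xml hive.exec.local.scratchdir)".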

Starting Hive:

(1) Start Hadoop: start-all.sh

(2) Start MySQL: service mysql start

(3) Start Hive: simply run hive

To start the Hive Web Interface, run:

hive --service hwi

Then open http://zq:9999/hwi/ in a browser to see the Hive web interface.
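The numbered startup steps can be wrapped in a small script that stops at the first failure. This is a sketch under assumptions: require_cmd and start_hive_stack are hypothetical names, and the script expects start-all.sh, service, and hive to be on PATH.

```shell
# Return non-zero (with a message) if a command is not on PATH.
require_cmd() {
    command -v "$1" >/dev/null 2>&1 && return 0
    echo "missing command: $1" >&2
    return 1
}

# Hypothetical wrapper around the three startup steps, in order.
start_hive_stack() {
    for cmd in start-all.sh service hive; do
        require_cmd "$cmd" || return 1
    done
    start-all.sh || return 1          # (1) start the Hadoop daemons
    service mysql start || return 1   # (2) start the MySQL metastore database
    # Assumption, not one of the original steps: because hive-site.xml sets
    # hive.metastore.uris, a metastore service may need to be listening on that
    # port first, for example via: hive --service metastore &
    hive                              # (3) open the interactive Hive CLI
}
```

Calling start_hive_stack runs the steps one after another and returns a non-zero status as soon as one of them fails, so a missing Hadoop or MySQL installation shows up before the Hive CLI is launched.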