Docker 搭建Spark_hadoop叢集

阿新 • • 發佈:2018-12-21

singularities/spark:2.2版本中

Hadoop版本:2.8.2

Spark版本: 2.2.1

Scala版本:2.11.8

Java版本:1.8.0_151

拉取映象:

[[email protected] docker-spark-2.1.0]# docker pull singularities/spark

檢視:

[[email protected] docker-spark-2.1.0]# docker image ls REPOSITORY TAG IMAGE ID CREATED SIZE docker.io/singularities/spark latest 84222b254621 6 months ago 1.39 GB

建立docker-compose.yml檔案

[[email protected] home]# mkdir singularitiesCR [[email protected] home]# cd singularitiesCR [[email protected] singularitiesCR]# touch docker-compose.yml

內容:

version: "2"

services:

master:

image: singularities/spark

command: start-spark master

hostname: master

ports:

- "6066:6066"

- "7070:7070"

- "8080:8080"

- "50070:50070"

worker:

image: singularities/spark

command: start-spark worker master

environment:

SPARK_WORKER_CORES: 1

SPARK_WORKER_MEMORY: 2g

links:

- master執行docker-compose up即可啟動一個單工作節點的standlone模式下執行的spark叢集

[[email protected] singularitiesCR]# docker-compose up -d Creating singularitiescr_master_1 ... done Creating singularitiescr_worker_1 ... done

檢視容器:

[[email protected] singularitiesCR]# docker-compose ps Name Command State Ports -------------------------------------------------------------------------------------------------------------------------------------------------------- singularitiescr_master_1 start-spark master Up 10020/tcp, 13562/tcp, 14000/tcp, 19888/tcp, 50010/tcp, 50020/tcp, 0.0.0.0:50070->50070/tcp, 50075/tcp, 50090/tcp, 50470/tcp, 50475/tcp, 0.0.0.0:6066->6066/tcp, 0.0.0.0:7070->7070/tcp, 7077/tcp, 8020/tcp, 0.0.0.0:8080->8080/tcp, 8081/tcp, 9000/tcp singularitiescr_worker_1 start-spark worker master Up 10020/tcp, 13562/tcp, 14000/tcp, 19888/tcp, 50010/tcp, 50020/tcp, 50070/tcp, 50075/tcp, 50090/tcp, 50470/tcp, 50475/tcp, 6066/tcp, 7077/tcp, 8020/tcp, 8080/tcp, 8081/tcp, 9000/tcp

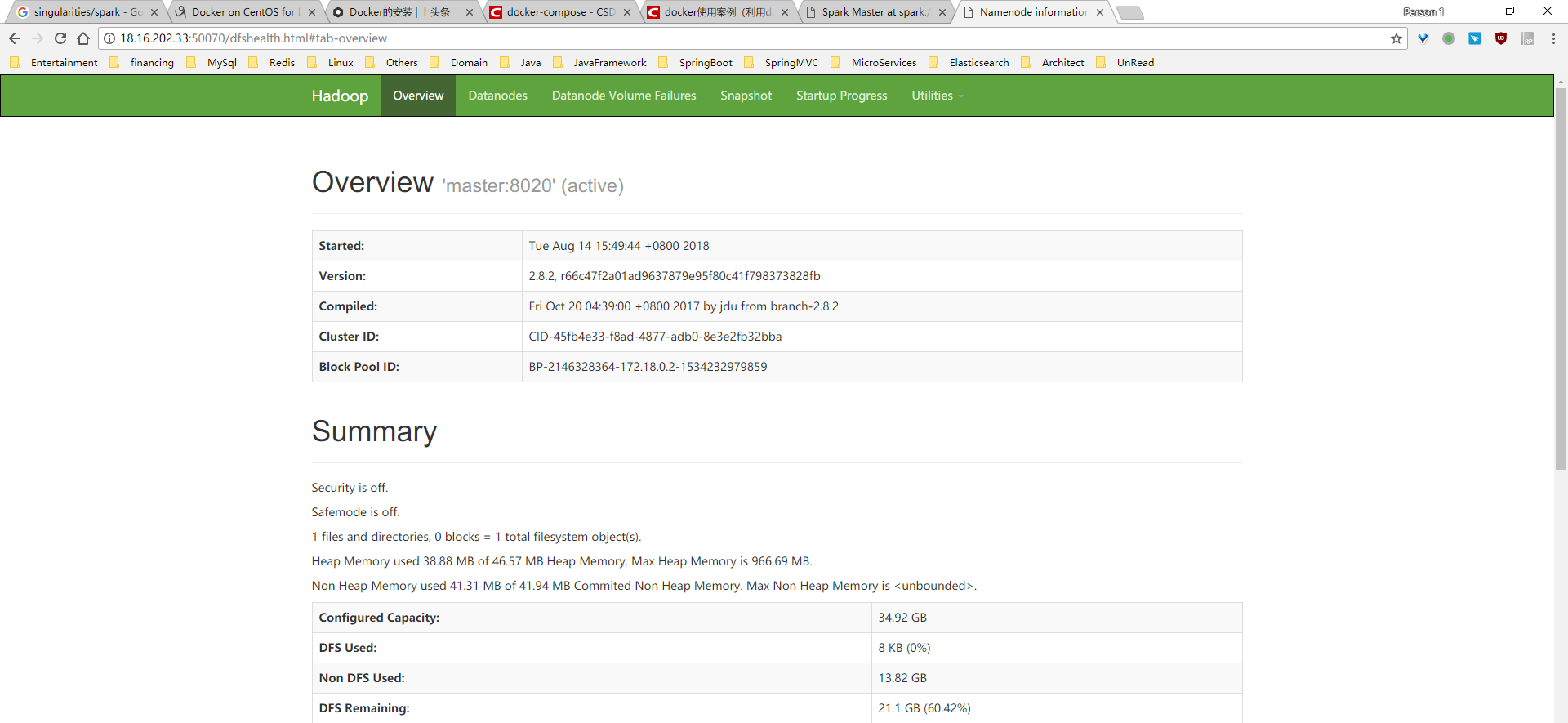

檢視結果:

停止容器:

[[email protected] singularitiesCR]# docker-compose stop Stopping singularitiescr_worker_1 ... done Stopping singularitiescr_master_1 ... done [[email protected] singularitiesCR]# docker-compose ps Name Command State Ports ----------------------------------------------------------------------- singularitiescr_master_1 start-spark master Exit 137 singularitiescr_worker_1 start-spark worker master Exit 137

刪除容器:

[[email protected] singularitiesCR]# docker-compose rm Going to remove singularitiescr_worker_1, singularitiescr_master_1 Are you sure? [yN] y Removing singularitiescr_worker_1 ... done Removing singularitiescr_master_1 ... done [[email protected] singularitiesCR]# docker-compose ps Name Command State Ports ------------------------------

進入master容器檢視版本:

[[email protected] singularitiesCR]# docker exec -it 497 /bin/bash [email protected]:/# hadoop version Hadoop 2.8.2 Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 66c47f2a01ad9637879e95f80c41f798373828fb Compiled by jdu on 2017-10-19T20:39Z Compiled with protoc 2.5.0 From source with checksum dce55e5afe30c210816b39b631a53b1d This command was run using /usr/local/hadoop-2.8.2/share/hadoop/common/hadoop-common-2.8.2.jar [email protected]:/# which is hadoop /usr/local/hadoop-2.8.2/bin/hadoop [email protected]:/# spark-shell Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 18/08/14 09:20:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Spark context Web UI available at http://172.18.0.2:4040 Spark context available as 'sc' (master = local[*], app id = local-1534238447256). Spark session available as 'spark'. Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /___/ .__/\_,_/_/ /_/\_\ version 2.2.1 /_/ Using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_151) Type in expressions to have them evaluated. Type :help for more information.

參考:

使用docker-compose建立spark叢集

下載docker映象

sudo docker pull sequenceiq/spark:1.6.0建立docker-compose.yml檔案

建立一個目錄,比如就叫 docker-spark,然後在其下建立docker-compose.yml檔案,內容如下:

version: '2'

services:

master:

image: sequenceiq/spark:1.6.0

hostname: master

ports:

- "4040:4040"

- "8042:8042"

- "7077:7077"

- "8088:8088"

- "8080:8080"

restart: always

command: bash /usr/local/spark/sbin/start-master.sh && ping localhost > /dev/null

worker:

image: sequenceiq/spark:1.6.0

depends_on:

- master

expose:

- "8081"

restart: always

command: bash /usr/local/spark/sbin/start-slave.sh spark://master:7077 && ping localhost >/dev/null

- 其中包括一個master服務和一個worker服務。

建立並啟動spark叢集

sudo docker-compose up叢集啟動後,我們可以檢視一下叢集狀態

sudo docker-compose ps

Name Command State Ports

----------------------------------------------------------------------

dockerspark_master_1 /etc/bootstrap.sh bash /us ... Up ...

dockerspark_worker_1 /etc/bootstrap.sh bash /us ... Up ...- 預設我們建立的叢集包括一個master節點和一個worker節點。我們可以通過下面的命令擴容或縮容叢集。

sudo docker-compose scale worker=2擴容後再次檢視叢集狀態,此時叢集變成了一個master節點和兩個worker節點。

sudo docker-compose ps

Name Command State Ports

------------------------------------------------------------------------

dockerspark_master_1 /etc/bootstrap.sh bash /us ... Up ...

dockerspark_worker_1 /etc/bootstrap.sh bash /us ... Up ...

dockerspark_worker_2 /etc/bootstrap.sh bash /us ... Up ...此時也可以通過瀏覽器訪問 http://ip:8080 來檢視spark叢集的狀態。

執行spark作業

首先登入到spark叢集的master節點

sudo docker exec -it <container_name> /bin/bash然後使用spark-submit命令來提交作業

/usr/local/spark/bin/spark-submit --master spark://master:7077 --class org.apache.spark.examples.SparkPi /usr/local/spark/lib/spark-examples-1.6.0-hadoop2.6.0.jar 1000停止spark叢集

sudo docker-compose down