Machine Learning: Graph-Based Semi-Supervised Learning with LabelSpreading

阿新 • Published: 2018-12-23


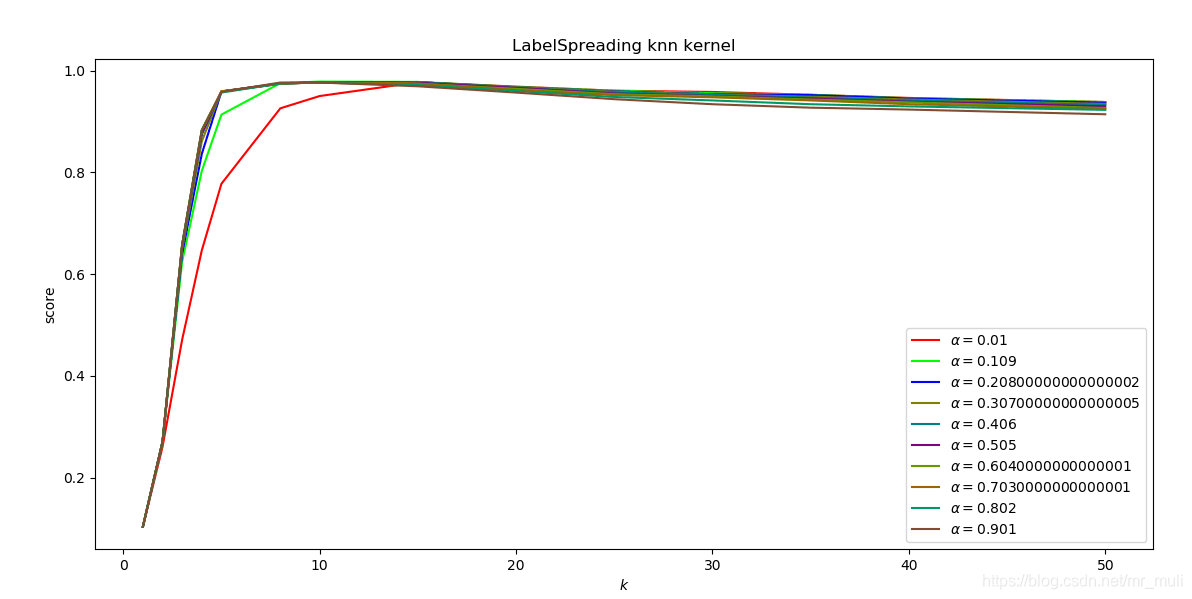

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Dec 4 13:32:30 2018

@author: muli
"""
import numpy as np
import matplotlib.pyplot as plt
from sklearn import metrics
from sklearn import datasets
# Works around "AttributeError: module 'scipy.sparse' has no attribute 'csgraph'"
# by importing the csgraph submodule explicitly.
from scipy.sparse.csgraph import connected_components
# Public import path; the old private module sklearn.semi_supervised.label_propagation
# no longer exists in recent scikit-learn releases.
from sklearn.semi_supervised import LabelSpreading


def load_data():
    '''
    Load the dataset.

    :return: a tuple of (samples, labels, indices of the unlabeled samples)
    '''
    digits = datasets.load_digits()
    ###### Shuffle the samples ######
    rng = np.random.RandomState(0)
    indices = np.arange(len(digits.data))  # sample indices
    rng.shuffle(indices)  # shuffle the indices
    X = digits.data[indices]
    y = digits.target[indices]
    ###### Build the indices of the unlabeled samples ######
    n_labeled_points = int(len(y) / 10)  # only 10% of the samples keep their labels
    unlabeled_indices = np.arange(len(y))[n_labeled_points:]  # the remaining 90% are unlabeled
    return X, y, unlabeled_indices


def test_LabelSpreading(*data):
    '''
    Demonstrate basic usage of LabelSpreading.

    :param data: a tuple of (samples, labels, indices of the unlabeled samples)
    :return: None
    '''
    X, y, unlabeled_indices = data
    y_train = np.copy(y)  # must copy: the true labels in y are needed later
    y_train[unlabeled_indices] = -1  # unlabeled samples are marked with -1
    clf = LabelSpreading(max_iter=100, kernel='rbf', gamma=0.1)
    clf.fit(X, y_train)
    ### Accuracy on the unlabeled samples
    predicted_labels = clf.transduction_[unlabeled_indices]  # predicted labels
    true_labels = y[unlabeled_indices]  # true labels
    print("Accuracy:%f" % metrics.accuracy_score(true_labels, predicted_labels))
    # Or (score() goes through predict(), which may differ slightly from transduction_):
    # print("Accuracy:%f" % clf.score(X[unlabeled_indices], true_labels))


def test_LabelSpreading_rbf(*data):
    '''
    With the rbf kernel, show how LabelSpreading's accuracy varies with alpha and gamma.

    :param data: a tuple of (samples, labels, indices of the unlabeled samples)
    :return: None
    '''
    X, y, unlabeled_indices = data
    y_train = np.copy(y)  # must copy: the true labels in y are needed later
    y_train[unlabeled_indices] = -1  # unlabeled samples are marked with -1

    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    alphas = np.linspace(0.01, 1, num=10, endpoint=False)  # all strictly inside (0, 1)
    gammas = np.logspace(-2, 2, num=50)
    colors = ((1, 0, 0), (0, 1, 0), (0, 0, 1), (0.5, 0.5, 0), (0, 0.5, 0.5), (0.5, 0, 0.5),
              (0.4, 0.6, 0), (0.6, 0.4, 0), (0, 0.6, 0.4), (0.5, 0.3, 0.2),)  # one color per curve
    ## Train and plot
    for alpha, color in zip(alphas, colors):
        scores = []
        for gamma in gammas:
            clf = LabelSpreading(max_iter=100, gamma=gamma, alpha=alpha, kernel='rbf')
            clf.fit(X, y_train)
            scores.append(clf.score(X[unlabeled_indices], y[unlabeled_indices]))
        ax.plot(gammas, scores, label=r"$\alpha=%s$" % alpha, color=color)
    ### Configure the figure
    ax.set_xlabel(r"$\gamma$")
    ax.set_ylabel("score")
    ax.set_xscale("log")
    ax.legend(loc="best")
    ax.set_title("LabelSpreading rbf kernel")
    plt.show()


def test_LabelSpreading_knn(*data):
    '''
    With the knn kernel, show how LabelSpreading's accuracy varies with alpha and n_neighbors.

    :param data: a tuple of (samples, labels, indices of the unlabeled samples)
    :return: None
    '''
    X, y, unlabeled_indices = data
    y_train = np.copy(y)  # must copy: the true labels in y are needed later
    y_train[unlabeled_indices] = -1  # unlabeled samples are marked with -1

    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    alphas = np.linspace(0.01, 1, num=10, endpoint=False)  # all strictly inside (0, 1)
    Ks = [1, 2, 3, 4, 5, 8, 10, 15, 20, 25, 30, 35, 40, 50]
    colors = ((1, 0, 0), (0, 1, 0), (0, 0, 1), (0.5, 0.5, 0), (0, 0.5, 0.5), (0.5, 0, 0.5),
              (0.4, 0.6, 0), (0.6, 0.4, 0), (0, 0.6, 0.4), (0.5, 0.3, 0.2),)  # one color per curve
    ## Train and plot
    for alpha, color in zip(alphas, colors):
        scores = []
        for K in Ks:
            clf = LabelSpreading(kernel='knn', max_iter=100, n_neighbors=K, alpha=alpha)
            clf.fit(X, y_train)
            scores.append(clf.score(X[unlabeled_indices], y[unlabeled_indices]))
        ax.plot(Ks, scores, label=r"$\alpha=%s$" % alpha, color=color)
    ### Configure the figure
    ax.set_xlabel(r"$k$")
    ax.set_ylabel("score")
    ax.legend(loc="best")
    ax.set_title("LabelSpreading knn kernel")
    plt.show()


if __name__ == '__main__':
    data = load_data()  # load the semi-supervised classification dataset
    # test_LabelSpreading(*data)      # call test_LabelSpreading
    # test_LabelSpreading_rbf(*data)  # call test_LabelSpreading_rbf
    test_LabelSpreading_knn(*data)    # call test_LabelSpreading_knn
```
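For reference, the `kernel`, `gamma` and `alpha` parameters varied in the experiments enter the algorithm roughly as follows. This is a sketch of the label-spreading formulation of Zhou et al. (2004), which the scikit-learn `LabelSpreading` documentation cites; the library's exact normalization details should be treated as an assumption here. $Y \in \{0,1\}^{n \times c}$ has a one-hot row for each labeled sample and an all-zero row for each sample marked `-1`, and the rbf kernel is assumed:

$$
W_{ij} =
\begin{cases}
\exp\left(-\gamma \lVert x_i - x_j \rVert^2\right), & i \neq j \\
0, & i = j
\end{cases}
\qquad
D_{ii} = \sum_j W_{ij}, \qquad
S = D^{-1/2} W D^{-1/2}
$$

$$
F^{(0)} = Y, \qquad
F^{(t+1)} = \alpha S F^{(t)} + (1 - \alpha) Y
$$

$$
F^{*} = \lim_{t \to \infty} F^{(t)} = (1 - \alpha)\,(I - \alpha S)^{-1} Y,
\qquad
\hat{y}_i = \arg\max_j F^{*}_{ij}
$$

Because $0 < \alpha < 1$ and the eigenvalues of $S$ lie in $[-1, 1]$ (when every sample has at least one neighbor), the iteration converges to $F^{*}$. A small $\alpha$ keeps $F$ close to the given labels, while $\alpha$ near 1 lets neighboring samples dominate; a larger $\gamma$ makes $W_{ij}$ decay faster with distance, so fewer samples are effectively connected. With `kernel='knn'`, $W$ is instead a 0/1 connectivity matrix linking each sample to its `n_neighbors` nearest neighbors.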
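To make the iteration concrete, here is a minimal from-scratch NumPy sketch of label spreading with an RBF affinity. It is not scikit-learn's implementation: the function names `rbf_affinity` and `label_spreading`, the toy two-cluster data, and the `tol` stopping rule are illustrative assumptions. It uses the same `-1` convention for unlabeled samples as `y_train` in the code above.

```python
import numpy as np


def rbf_affinity(X, gamma):
    """Dense RBF affinity W_ij = exp(-gamma * ||x_i - x_j||^2) with a zero diagonal."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)  # guard against tiny negative rounding errors
    W = np.exp(-gamma * sq_dists)
    np.fill_diagonal(W, 0.0)  # no self-loops
    return W


def label_spreading(X, y, gamma=0.1, alpha=0.2, max_iter=100, tol=1e-3):
    """Hypothetical minimal label spreading; y uses -1 for unlabeled samples."""
    classes = np.unique(y[y != -1])
    n = len(y)
    # One-hot initial label matrix Y; rows of unlabeled samples stay all-zero.
    Y = np.zeros((n, len(classes)))
    for j, c in enumerate(classes):
        Y[y == c, j] = 1.0
    # Symmetrically normalized affinity S = D^{-1/2} W D^{-1/2}.
    W = rbf_affinity(X, gamma)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # Iterate F <- alpha * S F + (1 - alpha) * Y until the change drops below tol.
    F = Y.copy()
    for _ in range(max_iter):
        F_new = alpha * S @ F + (1.0 - alpha) * Y
        converged = np.abs(F_new - F).sum() < tol
        F = F_new
        if converged:
            break
    # Each sample takes the class with the largest spread score.
    return classes[np.argmax(F, axis=1)]


# Toy data: two well-separated 2-D clusters, one labeled point in each.
rng = np.random.RandomState(0)
X = np.vstack([rng.normal(0.0, 0.3, size=(20, 2)),
               rng.normal(5.0, 0.3, size=(20, 2))])
y_true = np.array([0] * 20 + [1] * 20)
y_train = np.full(40, -1)
y_train[[0, 20]] = [0, 1]

pred = label_spreading(X, y_train, gamma=1.0, alpha=0.9)
print("accuracy:", (pred == y_true).mean())
```

With the clusters this far apart, cross-cluster affinities are vanishingly small, so each labeled point's class spreads only through its own cluster and every sample should receive its cluster's label.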
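The `knn` experiment can be sketched in the same spirit. This hypothetical variant builds a 0/1 k-nearest-neighbor graph and, instead of iterating, solves the closed form $F^* = (1-\alpha)(I-\alpha S)^{-1}Y$ directly with `np.linalg.solve`. The names `knn_affinity` and `label_spreading_closed_form` are illustrative, and symmetrizing the graph (linking $i$ and $j$ if either is among the other's $k$ nearest neighbors) is an assumption made here so that $S$ is symmetric; scikit-learn's internal graph construction may differ.

```python
import numpy as np


def knn_affinity(X, k):
    """Symmetrized 0/1 k-nearest-neighbor graph (illustrative assumption)."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(sq_dists, np.inf)  # a point is not its own neighbor
    neighbors = np.argsort(sq_dists, axis=1)[:, :k]
    n = X.shape[0]
    W = np.zeros((n, n))
    W[np.repeat(np.arange(n), k), neighbors.ravel()] = 1.0
    return np.maximum(W, W.T)  # edge if either point lists the other


def label_spreading_closed_form(W, y, alpha=0.2):
    """Solve F* = (1 - alpha) (I - alpha S)^{-1} Y; y uses -1 for unlabeled samples."""
    classes = np.unique(y[y != -1])
    # One-hot label matrix; rows for y == -1 match no class and stay all-zero.
    Y = (y[:, None] == classes[None, :]).astype(float)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    F = (1.0 - alpha) * np.linalg.solve(np.eye(len(y)) - alpha * S, Y)
    return classes[np.argmax(F, axis=1)]


# Toy data: two well-separated 2-D clusters, one labeled point in each.
rng = np.random.RandomState(0)
X = np.vstack([rng.normal(0.0, 0.3, size=(20, 2)),
               rng.normal(5.0, 0.3, size=(20, 2))])
y_true = np.array([0] * 20 + [1] * 20)
y_train = np.full(40, -1)
y_train[[0, 20]] = [0, 1]

W = knn_affinity(X, k=10)
pred = label_spreading_closed_form(W, y_train, alpha=0.9)
print("accuracy:", (pred == y_true).mean())
```

With a very small `k`, the graph can split into components that contain no labeled sample; the rows of $F^*$ for those samples stay zero and the argmax falls back to the first class, which is one way a small neighborhood size can hurt accuracy in this sketch.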

- The results are shown in the figure: