Scraping Douban movie short reviews with scrapy-redis and visualizing them as a word cloud with wordcloud

阿新 · Published: 2018-12-26

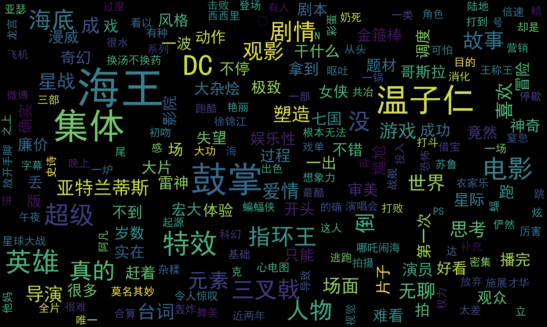

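1. The data was scraped with scrapy-redis and stored in Redis. The movie scraped is the recent box-office hit Aquaman (《海王》).

2. Chinese text is segmented into words with the jieba library.

3. A stopword list is used to filter out meaningless words.

4. The result is plotted with matplotlib + wordcloud.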
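Step 3 above boils down to building a set from a stopword file and testing each segmented token against it. The sketch below uses two hypothetical helpers (`load_stopwords` and `keep_token` are illustrative names, not from the original post). It assumes one stopword per line, and it also drops whitespace-only tokens, since jieba emits spaces and newlines in the review text as tokens of their own.

```python
from typing import Iterable, Set


def load_stopwords(lines: Iterable[str]) -> Set[str]:
    """Build a stopword set from lines of text, one word per line (hypothetical helper)."""
    stopwords = set()
    for line in lines:
        word = line.strip()  # drop the trailing newline and stray spaces
        if word:  # skip blank lines
            stopwords.add(word)
    return stopwords


def keep_token(token: str, stopwords: Set[str]) -> bool:
    """Return True if a segmented token should be counted."""
    # Whitespace-only tokens (spaces, newlines) are never meaningful words.
    return bool(token.strip()) and token not in stopwords


# Tokens as a segmenter might produce them, including whitespace tokens.
tokens = ["海王", " ", "的", "好看", "\n"]
stopwords = load_stopwords(["的\n", "\n", "了\n"])
print([t for t in tokens if keep_token(t, stopwords)])  # ['海王', '好看']
```

With a real file, an open file object can be passed directly, e.g. `load_stopwords(open('chineseStopWords.txt', encoding=...))`, choosing the encoding the file was actually saved in.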

```python
from redis import Redis
import json
import jieba
from wordcloud import WordCloud
import matplotlib.pyplot as plt

# Load stopwords (one per line)
# Equivalent one-liner:
# stopwords = set(map(lambda x: x.rstrip('\n'), open('chineseStopWords.txt').readlines()))
stopwords = set()
# Pass encoding=... if the file's encoding differs from the system default
with open('chineseStopWords.txt') as f:
    for line in f:
        stopwords.add(line.rstrip('\n'))
stopwords.add(' ')

# Read the reviews that scrapy-redis stored in the Redis list
db = Redis(host='localhost')
items = db.lrange('review:items', 0, -1)

# Count how often each word occurs,
# skipping stopwords,
# and keep a running total for computing word frequencies
words = {}
total = 0
for item in items:
    data = json.loads(item)['review']
    for word in jieba.cut(data):
        # word.strip() also skips newline/whitespace tokens emitted by jieba
        if word.strip() and word not in stopwords:
            words[word] = words.get(word, 0) + 1
            total += 1
print(sorted(words.items(), key=lambda x: x[1], reverse=True))

# Word frequencies
freq = {k: v / total for k, v in words.items()}
print(sorted(freq.items(), key=lambda x: x[1], reverse=True))

# Word cloud: font_path must point to a font with Chinese glyphs,
# because WordCloud's default font cannot render Chinese characters
wordcloud = WordCloud(font_path='simhei.ttf', width=500, height=300, scale=10,
                      max_words=200, max_font_size=40).fit_words(frequencies=freq)
# Display the word cloud built from the words and their frequencies
plt.imshow(wordcloud, interpolation="bilinear")
plt.axis('off')
plt.show()
```
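The counting loop in the script can also be written with `collections.Counter`. This is a sketch under the assumption that each review has already been segmented into a list of tokens (as `jieba.cut` would produce); the function name `word_frequencies` is illustrative and not part of the original code.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set


def word_frequencies(token_lists: Iterable[List[str]], stopwords: Set[str]) -> Dict[str, float]:
    """Count non-stopword tokens across all reviews and return relative frequencies."""
    counts = Counter(
        token
        for tokens in token_lists
        for token in tokens
        if token.strip() and token not in stopwords
    )
    total = sum(counts.values())  # plays the role of `total` in the script
    return {word: n / total for word, n in counts.items()} if total else {}


# Pre-segmented reviews (illustrative data, not scraped results)
reviews = [["海王", "好看", "的"], ["海王", "特效", " "]]
freq = word_frequencies(reviews, stopwords={"的"})
print(sorted(freq.items(), key=lambda x: x[1], reverse=True))
# [('海王', 0.5), ('好看', 0.25), ('特效', 0.25)]
```

Dividing by the total is not strictly needed for the picture itself: `fit_words` calls `generate_from_frequencies`, which rescales every value by the largest one, so raw counts give the same relative word sizes.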

Resulting plot:

References:

https://github.com/amueller/word_cloud

http://amueller.github.io/word_cloud/