The Wide World of Software Testing

A quick disclaimer before continuing. In most cases, unit and integration testing will probably get the quality of your software to where it needs to be. Beyond that, more complex types of testing deliver value only if your software reaches a certain level of complexity or has to operate at significant scale. As a result, you will find the flavors of testing below mainly in enterprise or other professional settings. Still, there’s plenty of value in being aware of them and knowing when they might be worth introducing into a project’s development.

End to End Testing

From the front to the back

End to end (or E2E) testing comes into play when the software solution you are delivering has multiple, independent parts that cannot be represented in a single codebase. As a result, it is necessary to run tests against real instances of the applications to ensure that input on one end results in the expected output on the other end.

Since, by nature, E2E tests typically involve multiple codebases and applications, an E2E test case can’t really be represented by a simple code snippet. Instead, we can look at an example software architecture and list some E2E test scenarios we might like to execute, manually or through automation.

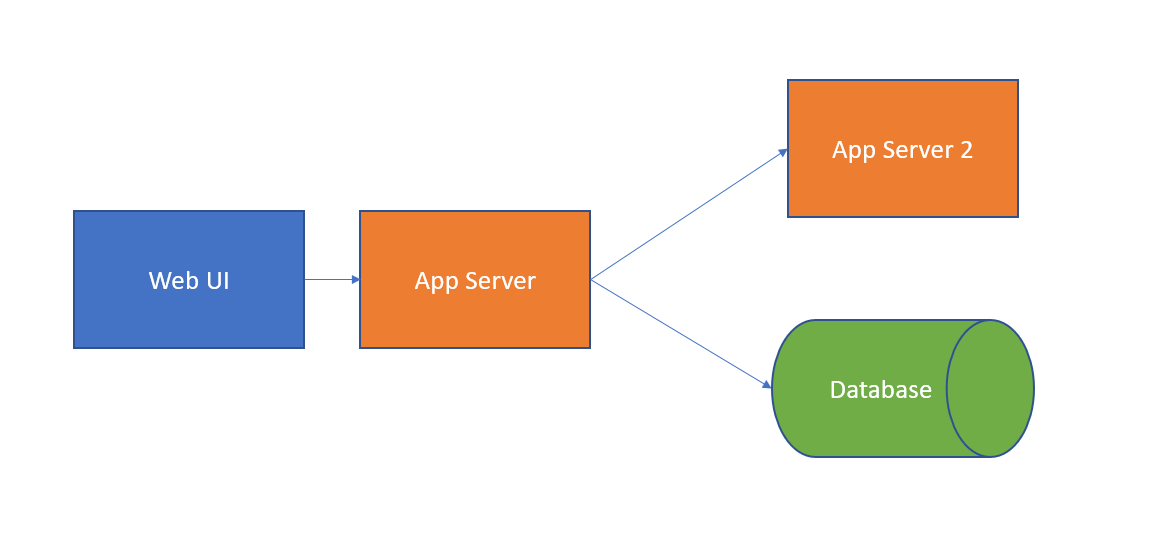

Consider the following architecture:

Some E2E test cases might include:

- When a button is clicked on the UI, a request is routed from the first app server to the second server, processed, and returned to the UI

- When a form is submitted on the UI, a corresponding entry is created in the database

- When a button is clicked on the UI, and the second app server encounters an error, the first app server can gracefully handle it without disruption to the user

The same principles can be applied to any level of complex architecture.

Because this complexity has no upper bound:

- There really isn’t a right or wrong way to do E2E tests, as long as the expected outputs are validated for the appropriate inputs

- E2E tests are commonly performed manually, since the value of automation can be nullified by the sheer number of changing parts in the system when the tests are not tightly integrated with any one codebase

Performance Testing

“Run like the wind Bullseye!”

Some applications are built with a very high volume workload in mind. For that reason, it is necessary to validate that the software can handle those demanding workloads by simulating them and analyzing the results.

For example, a web application could be expected to handle hundreds of requests per second while performing complex data transformations. A performance test might spin up an instance of the application and simulate high volume conditions while monitoring application metrics such as resource utilization and response time. The passing criteria for a performance test might be that every request is fulfilled within a defined time at peak load and that the application stays within its allotted computational resources.
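To make the passing criteria above more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `handle_request` is a hypothetical stand-in for the application, the request counts and latency budget are made up, and only response time is checked. A real performance test would drive a running instance of the application, usually with a dedicated tool, and would watch resource utilization as well.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class PerformanceResult:
    total_requests: int
    max_latency_s: float
    mean_latency_s: float
    passed: bool


def handle_request(payload):
    """Hypothetical stand-in for the application: a small data transformation."""
    return sorted(x * x for x in payload)


def timed_call(handler, payload):
    """Return how long a single call to the handler takes, in seconds."""
    start = time.perf_counter()
    handler(payload)
    return time.perf_counter() - start


def run_performance_test(handler, num_requests, concurrency, max_latency_s):
    """Send num_requests calls through a thread pool and check the latency budget.

    The test passes only if every individual call finished within max_latency_s.
    Time spent waiting in the pool's queue is not counted.
    """
    payload = list(range(1000, 0, -1))
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(
            pool.map(lambda _: timed_call(handler, payload), range(num_requests))
        )
    return PerformanceResult(
        total_requests=len(latencies),
        max_latency_s=max(latencies),
        mean_latency_s=sum(latencies) / len(latencies),
        passed=max(latencies) <= max_latency_s,
    )


if __name__ == "__main__":
    # Assumed numbers: 200 requests, 20 at a time, 0.5 second budget per request.
    result = run_performance_test(
        handle_request, num_requests=200, concurrency=20, max_latency_s=0.5
    )
    print(result)
```

The key design choice is that the pass condition looks at the slowest request rather than the average, which matches the "every request is fulfilled within a defined time" criterion.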

Load Testing

“I’m givin’ her all she’s got Captain!”

There’s a subtle distinction to be made between load testing and performance testing. In a sense, load testing might be considered a subset of performance testing. Load testing is a method that evaluates the behavior of a system under high load. You might want to understand the characteristics of the system, like CPU or memory usage, while it is being constantly hammered by a massive number of requests or computations.

While performance testing might look directly at metrics like response time, load testing is more like a litmus test to ensure your application can hold up under stress. Think of it like a stress test for your application to make sure it doesn’t fall over under a demanding workload. That way, you can have some confidence that when your application is under a high load in production, it will hum right along without crashing.
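As a rough illustration of that "does it fall over?" check, the sketch below hammers a function with many concurrent calls, counts how many survive, and records peak memory using the standard library’s `tracemalloc`. The `process` function, the request volume, and the concurrency level are all hypothetical; a real load test would target a deployed application and collect CPU and memory figures from the host or a monitoring system.

```python
import tracemalloc
from concurrent.futures import ThreadPoolExecutor


def process(item):
    """Hypothetical unit of work; rejects negative input to simulate a failure."""
    if item < 0:
        raise ValueError("negative input")
    return sum(range(item))


def safe_call(handler, item):
    """Run one request and report whether it survived, instead of raising."""
    try:
        handler(item)
        return True
    except Exception:
        return False


def run_load_test(handler, items, concurrency):
    """Push every item through the handler concurrently and summarize the outcome."""
    tracemalloc.start()
    try:
        with ThreadPoolExecutor(max_workers=concurrency) as pool:
            outcomes = list(pool.map(lambda item: safe_call(handler, item), items))
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    succeeded = sum(outcomes)
    return {
        "succeeded": succeeded,
        "failed": len(outcomes) - succeeded,
        "peak_memory_bytes": peak_bytes,
    }


if __name__ == "__main__":
    # Assumed load: 5,000 requests with 50 in flight at a time.
    print(run_load_test(process, [1000] * 5000, concurrency=50))
```

Unlike a performance test, nothing here asserts on response time. The questions are simply whether any requests failed and whether memory stayed within a range you are comfortable with.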

User Acceptance Testing

If we want users to like our software, we should design it to behave like a likeable person. -Alan Cooper

The user acceptance test, or UAT, might just be the ultimate test of all those mentioned here. It throws automated validations and calculated metrics out the door and instead puts the final judgement of the “correctness” of your software in the hands of its user. A UAT usually happens right before or after the delivery of an application to production. An end user is presented with the application and is encouraged to use it as they normally would. The acceptance criterion is pretty simple: does the software do what it’s supposed to do for the user, according to the user?

You could think of all the other types of testing we discussed here as servants to this final type. Ultimately, everything we do to make quality software is in some way in service to the users of that software. We might have the most robust unit tests, have run our app through a battery of the hardest performance and load tests, and have executed every E2E scenario imaginable. But in the end, if the user doesn’t get what they need, it’s all pointless. That said, we should still hold ourselves to a high standard of software quality. Eventually, that benefit should reach your users through fewer bugs, faster and more frequent delivery to production, and more flexibility to add features.