Hashtag generation and image QA with Watson AI

The results are great! As you can see, Visual Recognition successfully identified Belem Tower and Pena Palace, as shown by the confidence score (between 0 and 1) it returned for each class. A higher score indicates a greater likelihood that the class is depicted in the image. By default, a classifier only returns classes with a score of at least 0.5.
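To make the threshold concrete, here is a minimal sketch that keeps only the classes whose score reaches a minimum value. It assumes the decoded JSON has the same shape the PHP code in this article reads (images, then classifiers, then classes, each class with `class` and `score`). The function name `filterClassesByScore` and the sample data are hypothetical, and the sample is hand-written, not a real API response. To my understanding, the v3 `classify` endpoint also accepts a `threshold` parameter that applies the same cut-off on the server side.

```php
<?php

/**
 * Hypothetical helper: return [class => score] for every class, across all
 * classifiers of the first image, whose score is at least $minScore.
 */
function filterClassesByScore(array $response, float $minScore = 0.5): array
{
    $kept = [];
    foreach ($response['images'][0]['classifiers'] as $classifier) {
        foreach ($classifier['classes'] as $c) {
            if ($c['score'] >= $minScore) {
                $kept[$c['class']] = $c['score'];
            }
        }
    }
    arsort($kept); // highest confidence first, keys preserved
    return $kept;
}

// Hand-written sample mimicking the response structure (illustration only)
$sample = [
    'images' => [[
        'classifiers' => [
            ['classes' => [
                ['class' => 'tower', 'score' => 0.81],
                ['class' => 'fog', 'score' => 0.32],
            ]],
            ['classes' => [
                ['class' => 'Belem Tower', 'score' => 0.93],
            ]],
        ],
    ]],
];

print_r(filterClassesByScore($sample));
```

Filtering on the client is only useful if you request a lower threshold from the service, for example to show borderline classes separately.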

With the monuments model working, it’s now time to auto-hashtag our images. With a few lines of PHP, I set up the service call using two classifier IDs: the default classifier, to get Visual Recognition’s general evaluation, and my monuments model.

<?php

$api_url = 'https://gateway.watsonplatform.net/visual-recognition/api/v3/classify?version=2018-03-19';$api_key = '{your Visual Recognition API key}';$image_url = '{the URL of the image to analyse}';$query = array( 'url' => $image_url, 'classifier_ids' => 'default,MonumentsModel_267905574' //insert here your classifiers Ids $ch = curl_init();curl_setopt($ch, CURLOPT_URL, $api_url);curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);curl_setopt($ch, CURLOPT_POST, 1); //POSTcurl_setopt($ch, CURLOPT_USERPWD, 'apikey:'.$api_key);curl_setopt($ch, CURLOPT_POSTFIELDS, $query);$result = curl_exec($ch);curl_close($ch);

$array = json_decode($result,true);$alt = '';$classScore = '';$hashtags = '';

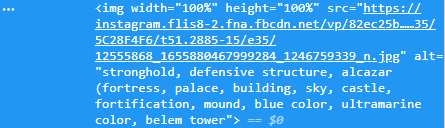

for($n=0;$n<2;$n++){ foreach($array[images][0][classifiers][$n][classes] as $classes){ $alt .= ($classes['class'].', '); $classScore .= $classes['class'].':'.number_format($classes['score'],2).'</br>'; $hashtags .= '#'.str_replace(' ', '', $classes['class']).' '; }}?><style> body{font-family:Helvetica,Arial,sans-serif;font-size:14px;} td{border: 1px solid grey;padding:10px;}</style><table> <tbody> <tr> <td rowspan="0"><img width="100%" height="100%" src="<?php echo $image_url; ?>" alt="<?php echo substr($alt, 0, -2); ?>"/></td> <td style="vertical-align:top;text-align:right"> <?php echo $classScore; ?> </td> </tr> <tr> <td style="width:250px;color: #003569;"> <?php echo $hashtags; ?> </td> </tr> </tbody></table>And the final result is this. All the terms with a minimum score of 0.50 are presented together with my model, that clearly identified Belem Tower. Bellow you can see those terms like hashtags. Some of them may be debatable, but remember these are merely suggestions that the user could accept or not.
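The hashtag step in the code only removes spaces. A slightly more defensive variant is sketched below: it keeps only letters and digits (on the assumption that, on many platforms, a hashtag ends at punctuation such as '-' or '.') and drops duplicates, which can appear when the default classifier and a custom model return the same class. The `toHashtags` name is hypothetical.

```php
<?php

/**
 * Hypothetical helper: turn class names into unique hashtag suggestions.
 * Spaces and punctuation are removed; Unicode letters (e.g. accented ones)
 * and digits are kept. Duplicates are compared case-sensitively.
 */
function toHashtags(array $classNames): array
{
    $tags = [];
    foreach ($classNames as $name) {
        // Strip every run of characters that is not a letter or a digit
        $tag = preg_replace('/[^\p{L}\p{N}]+/u', '', $name);
        if ($tag !== '' && !in_array('#' . $tag, $tags, true)) {
            $tags[] = '#' . $tag;
        }
    }
    return $tags;
}

echo implode(' ', toHashtags(['Belem Tower', 'tower', 'tower', 'Pena Palace'])), "\n";
```

Returning an array rather than a concatenated string also makes it easier to let the user accept or reject each suggestion individually.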

Another use for these terms is the image’s alt attribute, which provides a text description of the image, e.g., for visually impaired users who rely on a screen reader.
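As a sketch of that idea, the hypothetical helper below builds an HTML-safe alt value from a list of class names. It keeps the input order, so passing the classes sorted by score puts the most likely subject first, and it caps the number of terms (five is an arbitrary choice) so a screen reader is not handed a long inventory. The image file name in the usage line is a placeholder.

```php
<?php

/**
 * Hypothetical helper: join unique class names into an escaped alt text,
 * keeping at most $maxTerms terms in their original order.
 */
function buildAltText(array $classNames, int $maxTerms = 5): string
{
    $terms = array_slice(array_unique($classNames), 0, $maxTerms);
    return htmlspecialchars(implode(', ', $terms), ENT_QUOTES, 'UTF-8');
}

$alt = buildAltText(['Belem Tower', 'tower', 'fortress', 'tower']);
echo '<img src="belem.jpg" alt="' . $alt . '">', "\n";
```

Escaping with `htmlspecialchars` matters here because class names come from an external service and are written straight into an HTML attribute.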

The Continente Online use case

Our team is committed to quality and does its best to provide quality images and content for each product at Continente Online, but managing a catalogue of tens of thousands of products is not an easy task. In rare cases, the image associated with a product does not meet our quality standards, and that’s where Watson Visual Recognition comes in.

I started by identifying the kinds of quality problems (dark background or foreground, shadows, pixelation, missing image…) and gathered examples of each for the positive (low-quality) class. For the negative class, I picked examples of perfect images. As with the monuments model, I used only 30+ images per class.
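Training a classifier from those examples could look roughly like the sketch below. It assumes the Visual Recognition v3 "create classifier" request, as I understand it: a multipart POST to `/v3/classifiers` with a `name`, one `<classname>_positive_examples` zip per class and an optional `negative_examples` zip. The classifier name `ImageQualityModel`, the class name `lowquality` and the zip file names are hypothetical placeholders, and the actual request is left commented out.

```php
<?php

/**
 * Sketch: build the multipart fields for a v3 "create classifier" request.
 * CURLFile only records the path; the file is read when the request is sent.
 */
function buildTrainingFields(string $classifierName, string $className, string $positiveZip, string $negativeZip): array
{
    return [
        'name' => $classifierName,
        // Positive examples: images showing the class (here: low-quality product images)
        $className . '_positive_examples' => new CURLFile($positiveZip, 'application/zip'),
        // Negative examples: images that do NOT show the class (here: perfect images)
        'negative_examples' => new CURLFile($negativeZip, 'application/zip'),
    ];
}

/** Send the training request; returns the decoded JSON or null if cURL fails. */
function createClassifier(string $apiKey, array $fields): ?array
{
    $ch = curl_init('https://gateway.watsonplatform.net/visual-recognition/api/v3/classifiers?version=2018-03-19');
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_POST, true);
    curl_setopt($ch, CURLOPT_USERPWD, 'apikey:' . $apiKey);
    curl_setopt($ch, CURLOPT_POSTFIELDS, $fields); // array => multipart/form-data
    $result = curl_exec($ch);
    curl_close($ch);
    return $result === false ? null : json_decode($result, true);
}

$fields = buildTrainingFields('ImageQualityModel', 'lowquality', 'lowquality.zip', 'perfect.zip');
// $response = createClassifier('{your Visual Recognition API key}', $fields);
```

Once trained, the new classifier ID can be added to `classifier_ids` in the classify request, just like the monuments model.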