AB Testing In Real Life

In A/B Testing you run two randomized experiments, that test two variants A and B, also referred to as control and experiment. This technique iswidely used to fine-tune customer experience in mobile and web applications, since it helps making business decisions based on how users interact with a product.

A common example is to test a slight change in the website’s UI, with the goal of increasing the number of users that sign-up.

For instance, a test can be to create a variation of the current website with a slightly bigger sign-up button. You’d be running your variation (bigger sign-up button), against the current version of the website, also called control version. After running the test and comparing the conversion rate of each version, you can check if having a bigger button has any effect on the number of sign-ups. We could run different kinds of tests, such as, changing images, copy or the information architecture of the website.

If the number of sign-ups is higher in the new version, compared to the control version, you have found a way to improve both the website experience and your business. However, just increasing the number of sign-ups is not enough. You need to make sure your results have enough statistical significance. Which is another way of saying that you need to make sure your test is designed in a way that the number of sign-ups didn't increase by chance.

You can also apply A/B Testing to your cupcake experiments!

You can take the cupcakes baked using your "old" recipe as control and compare it against the cupcakes baked with the same recipe with an extra hint of lemon zest, i.e., experiment,and see how your friends respond to it.

How can I actually test which recipe is best?

In order to test the effect of the lemon zest addition, you’ll hand the cupcakes baked with original recipe to one group of friends, while another group gets the new recipe. To make sure no one is biased, each friend is assigned to their group at random.

To gather their opinion in a more analytical way, you can have both groups of friends answer a question. For instance, you can ask

- Would you eat another cupcake from the same batch?

With these simple yes/no questions, each one of your friends can attribute, at most, one point to the cupcake. Combining all your friends scores, you can then use these data points to compare between both groups and see if the hint of lemon zest performs better than the original recipe.

A very important aspect of A/B testing is that you should only test one variation at a time. If you introduce multiple changes in the same experiment, you won’t be able to attribute the results to a particular change.

In the end, if the lemon zest recipe is more popular among your friends, that is going to become your go-to cupcake recipe.

Experiment Design

In the end, you’ll be running a statistical test to figure out if adding lemon zest to your cupcakes is a real improvement.

Before starting to bake you two batches of cupcakes you want to have your recipes ready and A/B testing checklist at hand.

The A/B testing checklist

- What question do you want to answer?

You can think about this as “what are my hypotheses?”

The Null Hypothesis is There is no difference between the overall scores of the original and the lemon zest cupcakes. It is called Null Hypothesis, because it assumes that adding lemon zest will make no difference on whether people like those cupcakes or not.

The alternative hypothesis being The number of friends that would have a second the lemon zest cupcake is higher than number of friends that would have a second cupcake baked with the original recipe.

2. How do you evaluate the experiment?

What can determine if your new recipe is more popular is knowing if your friends would have more of your cupcakes.

Your metric is the number of friends that would you have another cupcake from the same batch. If the more friends from the lemon zest group would claim seconds, it means that adding lemon zest to your recipe is will make it more popular.

3. What is the amount of effect desired?

In the case of adding lemon zest to the new recipe, the impact of adding this new ingredient is minimal.

If you were to add a more expensive ingredient such as truffles which would cost you around $100 per pound, you'd probably want to observe a certain amount of improvement in the results to justify the monetary investment. This would give you more confidence on the investment you're doing by adding a more expensive ingredient to your recipe.

4. How many people need to be in the experiment?

If you call two of your friends and ask one to have the lemon zest cupcakes and the other to have the cupcake baked with the original recipe, you wouldn't have most confidence in your test.

To figure out how many friends you need to invite for your cupcake taste test, you need to make a few decisions upfront

- Statistical Power of the test

- Significance Level

- Effect size

- Baseline metric

Statistical Power

Statistical Power is the probability of correctly rejecting the Null Hypothesis. You can also interpret it as the likelihood of observing the effect when there is said effect to be observed, i.e., when the recipe with lemon zest is, in fact, more popular among your friends than the original recipe.

In the literature you may see Power defined as 1-Beta,because it is inversely proportional to making what is called a Type II Error, usually referred to as Beta. The Type II error is the probability of failing to reject the Null Hypothesis when it should be done so.

When you design a experiment with a high power, you're then decreasing the probability of making a Type II Error.

The statistical power of an experiment is usually set at 80%.

Significance Level

Significance level is what you use to make sure your test is designed in a way that the results weren't obtained by chance. The most commonly used values are 1% and 5%. These correspond to confidence intervals of 90% and 95%, respectively.

Effect Size and Baseline Metric

As mentioned above, the effect size will help you determine the amount of change you'd want to see as a result of the test. In order to do this we have to ground it on what we’re observing today.

This means you need to know what is our baseline metric, i.e., the current value of the metric you're using to evaluate the efficacy of the test.

We've defined that we'll use as a measure of the success of the test, the number of friends that want to have a second cupcake from the same batch.

So, if today 50% of the people that try your cupcakes want to have a second one, you know that your baseline metric is 0.5. In light of this, you know how much room for improvement you may have with experimentation. For instance, say that you're baseline metric was instead 95%.

With this information you know

- your recipe is already a success ?

- there is very little room for improvement (5%), which may or may not justify the effort of running more tests to fine tune the recipe.

- as a result of your test, you can't expect and improvement greater than 5%! This may seem obvious, but it's a good sanity-check to have in mind when you're evaluating the results.

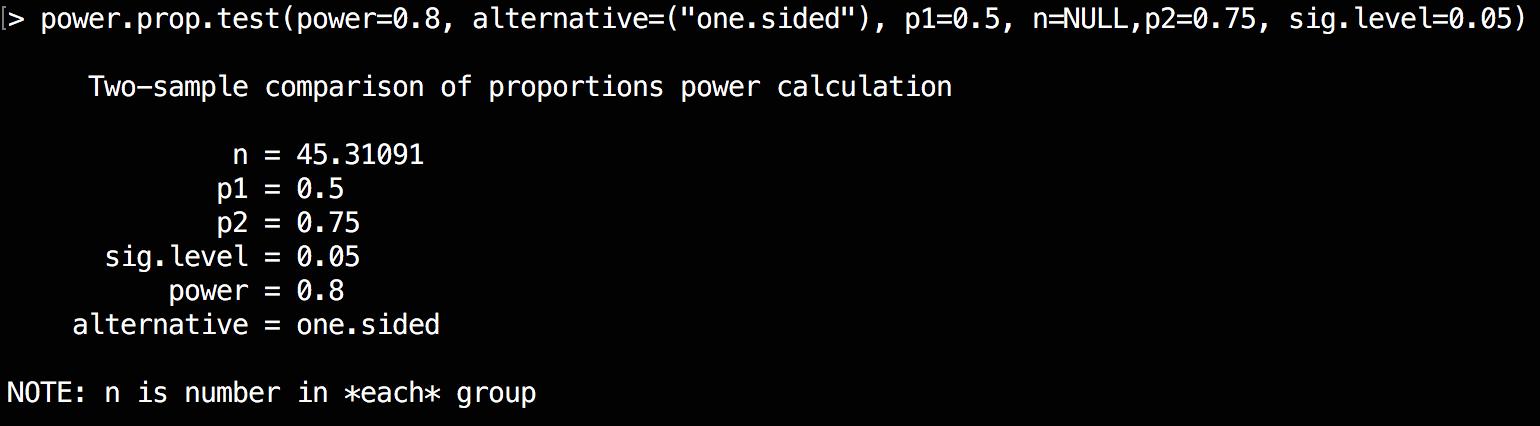

With all the pieces in place you can either use a online calculator or use the R Statistical language to determine the number of friends (sample size) you'll need to recruit to taste each of the recipes.

For our cupcake experiment we'll need 45 friends to try each of the recipes, given our baseline of 50% (p1) and the desired effect size of 25% (p2 = p1+ effect size), and with a significance level of 5%.

You'll also notice that I added the alternative=one.sided, that's because will be running a one-sided statistical test. That's because our alternative hypothesis is The number of friends that would have a second the lemon zest cupcake is higher than number of friends that would have a second cupcake baked with the original recipe

4. How long should the experiment run for?

In our cupcakes recipe example this doesn't necessarily apply. Unless we run it during a time when multiple of your friends are on a diet, and their behaviour will be very different than what's expected.

However, in a business scenario like the experiment to improve sign-up conversion rate, the duration of the experiment is very important. You'll need to take into account trends and the seasonality of website visits and choose a wide enough time frame, such that they don't interfere with the experiment.

For instance, if you sell products online, you probably shouldn't run experiments during the week of Black Friday, because we know that users will be more likely to be looking across different websites to find the best deal. Or if you sell outdoors products and you know that, historically, July is your best performing month, you probably should also refrain from running tests during that month. Even though you could take advantage of the higher volume website visits, these customers may be so eager to get all their camping gear that they'll make their purchase, regardless of the changes you've done.