Eyes are the mirror to the soul

Different approaches for monitoring eye movement to measure fatigue using Data Science techniques

Introduction

Eyes are known to be the mirror of the soul. For example, cognitive processing load and emotions are reflected in pupil size. In this blog post, I discuss how precise monitoring of eye movements can reveal important information on psychophysiological states such as fatigue.

Pupil detection is typically needed to determine where someone is looking. This is of interest for many, more mundane, fields such as marketing, psychology, and human-computer interaction. Besides the point of gaze, the movement of the eye itself is also of interest. When reading or scanning the environment, the human eye does not stay fixed but moves around quickly, focusing on different interesting aspects of the visual scene. The movement from one point of gaze to the next is very fast and cannot be controlled consciously. Such movements are called saccades.

In particular, the paper by Finke et al. shows how eye saccades relate to fatigue in Multiple Sclerosis (MS) patients. Both the onset delay (latency) and the speed of saccades are negatively affected by fatigue. One could, therefore, consider saccadic latency a digital biomarker for fatigue. The relation between fatigue and saccadic latency in the field is currently being tested in a

Professional eye-tracking devices use special techniques, such as infrared reflections from the retina or electrical signals from ocular motor activity, to determine eye movements precisely and at high temporal resolution. Applying such methods in the field is difficult. Therefore, various methods have been tried to track eyes using only ordinary video recordings. This post briefly explains some of those methods and benchmarks them on a publicly available database.

Methods

Prerequisites

As motivated above, we aim to detect or track a pupil in a video. To do so, we first decode the video into frames, which are stored as digital images. Subsequently, these video frames are searched for frontal human faces. One can do so by applying one of the many face detectors available. Based on the position and size of the detected face, the eye regions are determined. One can use the detected facial landmarks or, alternatively, apply a separate eye detector. Let's assume, for now, that the eye region has been detected.
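As a rough illustration of the last step, the sketch below derives two eye regions from a face bounding box `(x, y, w, h)`, such as the rectangles returned by OpenCV's `CascadeClassifier.detectMultiScale`. The function name `eye_regions` and all the fractions in it are hypothetical, chosen for illustration only; facial landmarks or a dedicated eye detector would replace them in practice.

```python
def eye_regions(face_x, face_y, face_w, face_h):
    """Split a frontal face box into two eye boxes, each returned as (x, y, w, h).

    The fractions are illustrative assumptions, not values from any detector.
    """
    top = face_y + round(0.25 * face_h)    # eyes sit roughly a quarter down the face box
    height = round(0.20 * face_h)          # assumed eye-band height
    width = round(0.35 * face_w)           # assumed width of each eye box
    left = (face_x + round(0.10 * face_w), top, width, height)
    right = (face_x + round(0.55 * face_w), top, width, height)
    return left, right
```

Note that "left" here means left in image coordinates, which is the subject's right eye.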

Projection method

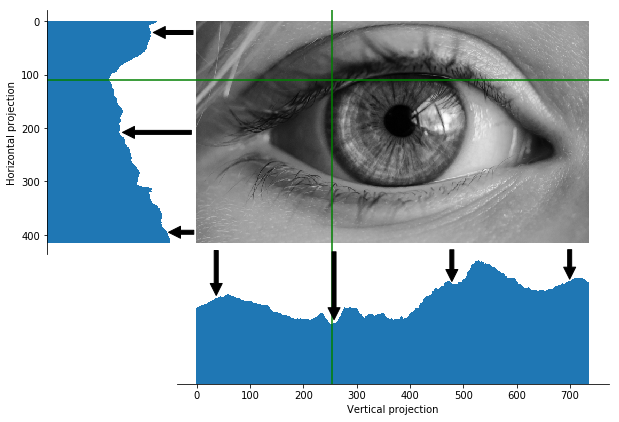

One of the most straightforward methods to detect the pupil relies on the fact that pupils are black. The method starts by calculating the average grey value of every column of the eye region. The column with the lowest average (i.e. the darkest column) presumably contains the pupil and therefore provides an estimate of the horizontal position of the pupil. The vertical position is estimated in the same way from the row averages. The procedure is illustrated in the figure above.

Due to its simplicity, this algorithm is blazingly fast. However, dark eyelashes, shadows or dark skin can easily throw this algorithm off balance.
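A minimal NumPy sketch of the projection method, assuming the eye region is already available as a 2-D array of grey values (0 = black). The function name `projection_pupil` is our own, not taken from an existing library.

```python
import numpy as np

def projection_pupil(eye):
    """Estimate the pupil position (x, y) as the darkest column and darkest row."""
    img = np.asarray(eye, dtype=float)
    col_means = img.mean(axis=0)  # average grey value of every column
    row_means = img.mean(axis=1)  # average grey value of every row
    return int(np.argmin(col_means)), int(np.argmin(row_means))
```

On a synthetic dark disk, the darkest column and row pass through its centre. Adding a single black row across the crop (a crude stand-in for an eyebrow or dark eyelashes) pulls the vertical estimate onto that row, which is exactly the weakness mentioned above.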

Hough transform

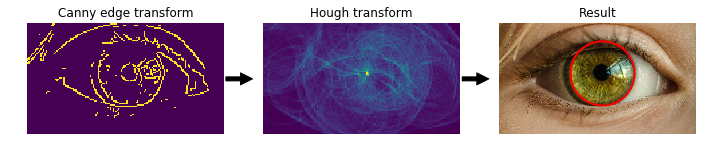

The Hough transform is a cool approach to detecting features in an image. It is mostly applied to detect straight lines or circles. In our particular case, we will use it to detect the round shape of the iris. The iris is easier to detect than the pupil, since the size of the iris is practically constant after birth. (Note that this interesting fact can be used to distinguish children from adults in digital images, as shown here.) The pupil size, on the other hand, depends on factors such as lighting conditions and autonomic balance. Iris detection by the Hough transform has been applied in many different papers. The method starts by detecting edges in the image using, for example, Canny edge detection. Canny edge detection has a couple of parameters that need to be tuned for optimal iris detection. Subsequently, the Hough transform is applied, and the circle with the most votes is selected as the most probable iris position. The procedure is illustrated in the figure above.

In this particular case, the iris is found pretty well, with only a slight mismatch on the left. Since the pupil sits at the centre of the iris, the pupil centre can then easily be located. As mentioned before, the algorithm is rather sensitive to the parameter settings. Additionally, it takes longer to compute than the projection-based method.
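To make the voting step concrete, here is a minimal NumPy sketch of a circular Hough transform. It assumes a binary edge map is already available (in practice the output of a Canny detector) and a small set of candidate radii, which the roughly constant iris size makes reasonable. The function `hough_circle` is a hypothetical helper, not a library API; OpenCV users would typically call `cv2.HoughCircles`, whose `param1` sets the upper Canny threshold and `param2` the accumulator threshold.

```python
import numpy as np

def hough_circle(edges, radii, n_angles=180):
    """Return (cx, cy, r) of the circle that collects the most votes.

    edges: 2-D boolean array, True at edge pixels (e.g. from Canny).
    radii: candidate radii in pixels.
    """
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    pixel_ids = np.arange(len(xs))[:, None]
    best, best_votes = (0, 0, 0), -1
    for r in radii:
        # every edge pixel votes for all centres at distance r from itself
        cx = np.rint(xs[:, None] - r * np.cos(thetas)).astype(int)
        cy = np.rint(ys[:, None] - r * np.sin(thetas)).astype(int)
        inside = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        cells = cy * w + cx
        ids = np.broadcast_to(pixel_ids, cells.shape)
        # count each (edge pixel, centre) pair once, despite angular oversampling
        pairs = np.unique(ids[inside] * (h * w) + cells[inside])
        votes = np.bincount(pairs % (h * w), minlength=h * w)
        top = int(np.argmax(votes))
        if votes[top] > best_votes:
            best, best_votes = (top % w, top // w, int(r)), int(votes[top])
    return best
```

The loop over radii is why this approach is slower than the projection method: every candidate radius requires a full pass of votes over all edge pixels.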

Gradient approach

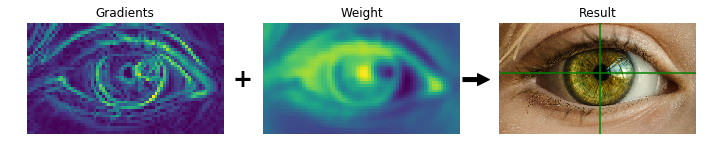

There are also more complex approaches in the literature. One of them uses dot products between displacement vectors and gradient vectors. The method relies on the fact that the pupil and iris are dark circular features on a lighter background. The centre of this circular pattern is estimated as the point where most of the image gradients intersect. To make the method more robust to reflections of light in the pupil or iris, the dot products are weighted by the inverted grey value of a blurred image, so that dark candidate centres are favoured. Several implementations of this method can be found on the internet, such as this one, which we have refactored a bit to streamline the calculation.

The gradient approach procedure is illustrated in the figure above.

The method results in a slightly off-centre location of the pupil. This could be caused by the downscaling of the images, which is done to speed up the calculation. Nevertheless, the procedure is still rather computationally expensive.
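The objective behind the gradient approach can be sketched in a few lines of NumPy. For every candidate centre c, the sketch averages the squared, positive dot products between the unit displacement vector from c to each gradient pixel and that pixel's unit gradient, then weights the result by the darkness of the blurred image at c. It assumes a small grey eye patch, uses `np.gradient` for the image gradients, keeps only gradients above a relative magnitude threshold, and replaces the usual Gaussian blur with a 3×3 box blur for brevity. The function name `gradient_eye_centre` and the threshold value are illustrative assumptions, not the refactored implementation mentioned above.

```python
import numpy as np

def gradient_eye_centre(eye, grad_threshold=0.3):
    """Return (x, y) maximizing w_c * mean_i max(0, d_i . g_i)**2 over centres c.

    eye: 2-D array of grey values in 0..255 (dark pupil on lighter background).
    """
    img = np.asarray(eye, dtype=float)
    h, w = img.shape
    gy, gx = np.gradient(img)          # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    if mag.max() == 0:                 # flat patch: no gradients to vote with
        return w // 2, h // 2
    keep = mag > grad_threshold * mag.max()
    ys, xs = np.nonzero(keep)
    ux, uy = gx[keep] / mag[keep], gy[keep] / mag[keep]  # unit gradients g_i

    # 3x3 box blur (stand-in for a Gaussian); dark pixels get large weights w_c
    pad = np.pad(img, 1, mode="edge")
    blurred = sum(pad[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    weight = 255.0 - blurred

    best, best_score = (w // 2, h // 2), -1.0
    for cy in range(h):
        for cx in range(w):
            dx, dy = xs - cx, ys - cy
            dist = np.hypot(dx, dy)
            dist[dist == 0] = np.inf             # a pixel gives no direction to itself
            dots = (dx * ux + dy * uy) / dist    # d_i . g_i
            score = weight[cy, cx] * np.mean(np.maximum(dots, 0.0) ** 2)
            if score > best_score:
                best, best_score = (cx, cy), score
    return best
```

The double loop over candidate centres multiplies the cost by the number of pixels in the patch, which is why downscaling the eye region pays off, at the price of some localization accuracy.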

Results

We have seen how the different approaches perform on one particular image. That is a nice start, but let’s now apply a more thorough test. I will use a publicly available database, called the BioID database, for this. This database consists of 1521 images of 23 test persons. Each image contains one frontal face with annotated pupil centres. After applying a face detector, and subsequently identifying the eye regions, the three different pupil locating methods are applied.

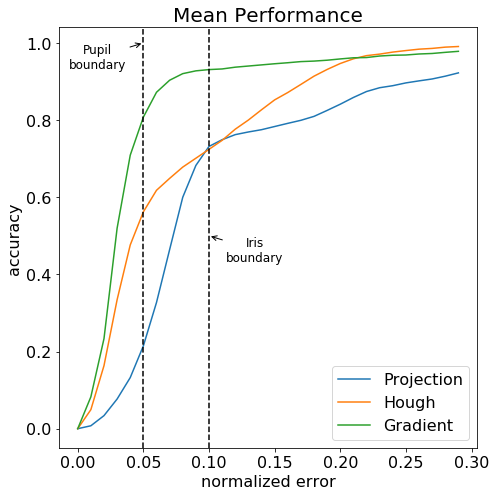

Following the evaluation method suggested for the BioID database, I have normalized the error by dividing the Euclidean pixel distance between the estimated and annotated pupil centres by the distance between the two annotated pupil positions. When the normalized error is below 0.05, the estimated pupil centre lies roughly within the pupil boundaries; when it is below 0.10, it lies roughly within the iris. To properly track eye saccades, the normalized error needs to be below 0.05. The result of the three methods is shown in the figure above.
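A sketch of this error measure, assuming predicted and annotated pupil centres are given as arrays of (x, y) pixel coordinates with one row per image. Taking the worse of the two eyes per image is the convention commonly used with BioID and is an assumption in this sketch; per-eye errors would be equally easy to report. The helper names are hypothetical.

```python
import numpy as np

def normalized_errors(pred_left, pred_right, true_left, true_right):
    """Per-image normalized error; all inputs have shape (n_images, 2), in pixels.

    Each eye's pixel error is divided by the annotated inter-pupil distance,
    and the worse of the two eyes is kept (assumed convention).
    """
    pl, pr, tl, tr = (np.asarray(a, dtype=float)
                      for a in (pred_left, pred_right, true_left, true_right))
    inter_pupil = np.linalg.norm(tl - tr, axis=1)
    err_left = np.linalg.norm(pl - tl, axis=1) / inter_pupil
    err_right = np.linalg.norm(pr - tr, axis=1) / inter_pupil
    return np.maximum(err_left, err_right)

def accuracy_at(errors, thresholds=(0.05, 0.10)):
    """Fraction of images whose normalized error is below each threshold."""
    errors = np.asarray(errors, dtype=float)
    return {t: float(np.mean(errors < t)) for t in thresholds}
```

With annotated pupils 100 pixels apart, an estimate that is off by 5 pixels therefore sits exactly at the 0.05 pupil-level threshold.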

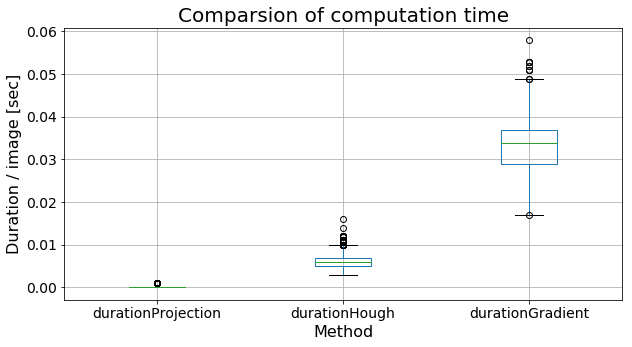

The computation time of the three methods obviously depends heavily on the hardware used. Still, a relative comparison is useful. The figure above shows the average computation time per image.
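One simple way to obtain such relative timings is to run each locator over the same set of eye regions and average the wall-clock time with Python's `time.perf_counter`. The helper `mean_time_ms` below is a hypothetical sketch; absolute numbers will vary with hardware, image size and library versions.

```python
import time

def mean_time_ms(locate, patches, repeats=3):
    """Average wall-clock time in milliseconds per call of locate(patch)."""
    if not patches:
        raise ValueError("need at least one eye region")
    start = time.perf_counter()
    for _ in range(repeats):
        for patch in patches:
            locate(patch)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (repeats * len(patches))
```

Since the frame budget at f frames/second is 1000 / f milliseconds, the result can be compared directly with the 40 ms (25 fps) or roughly 33 ms (30 fps) available per video frame.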

Conclusions

In this blog post, I have tried to illustrate some different pupil localization methods. Not surprisingly, we find the age-old trade-off between speed and accuracy: the blazingly fast projection method performs worst, while the rather slow gradient approach works best. The gradient approach takes about 35 milliseconds per image on average. That still allows processing roughly 1000 / 35 ≈ 28 frames per second, which is enough for 25 frames/second video and just short of 30 frames/second. That is good enough for real-time processing of many common video streams.

This blog does not include currently popular methods based on neural networks. The training and testing of such approaches require a large amount of annotated data, which I do not have available at the moment. Therefore I postpone discussing the evaluation of such methods to a later blog.

I started this blog post with some words on saccades and on using saccadic latency to estimate fatigue objectively. Developing this digital biomarker requires highly accurate pupil detection, and the gradient approach seems the most suitable of the three methods presented. Or, in other words: the gradient approach gives the best view into your soul…