Exploring the UN General Debates with Dynamic Topic Models

The General Debate Dataset

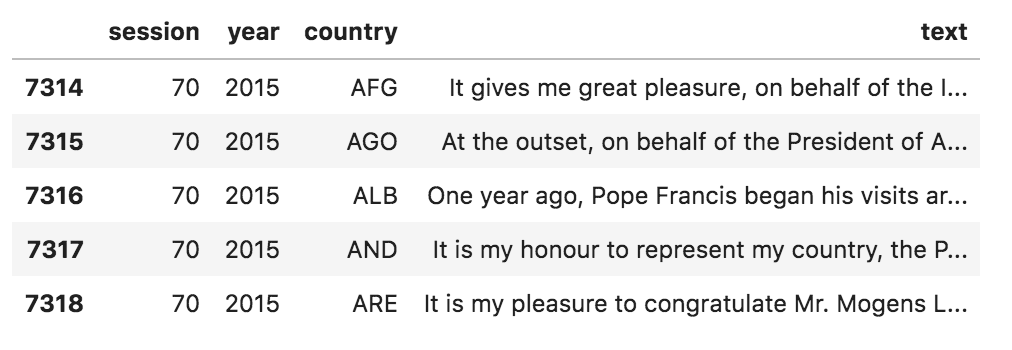

A corpus of the 7,507 speeches delivered at the General Debate from 1970 through 2015 is hosted on Kaggle. The dataset was originally released last year by researchers in the UK and Ireland (see their paper), who used it to study the positions of different countries on various policy dimensions.

Each speech is tagged with the year and session it was given and the ISO Alpha-3 code of the country that the speaker represented.
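A minimal sketch of what one record looks like in code. It assumes the Kaggle CSV exposes columns named `session`, `year`, `country`, and `text`; those column names are an assumption, so check the file header. The hypothetical `Speech` class only validates that the country field is shaped like an ISO Alpha-3 code.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class Speech:
    """One General Debate speech (hypothetical record type)."""
    session: int
    year: int
    country: str  # ISO Alpha-3 code of the speaker's country, e.g. "USA"
    text: str

    def __post_init__(self) -> None:
        # ISO Alpha-3 codes are exactly three uppercase ASCII letters.
        code = self.country
        if not (len(code) == 3 and code.isascii() and code.isalpha() and code.isupper()):
            raise ValueError(f"not an ISO Alpha-3 code: {code!r}")


def load_speeches(fh) -> list[Speech]:
    """Parse speeches from a CSV file handle (column names are assumed)."""
    return [
        Speech(int(row["session"]), int(row["year"]), row["country"], row["text"])
        for row in csv.DictReader(fh)
    ]


# Usage with a tiny in-memory CSV; the speech text is a placeholder.
sample = io.StringIO('session,year,country,text\n25,1970,ALB,"First.\nSecond."\n')
speeches = load_speeches(sample)
```

A quoted `text` field may contain newlines, and `csv.DictReader` handles that. This matters because the newlines are what the paragraph splitting relies on later.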

Data Preprocessing

A key observation about this dataset is that each speech discusses a multitude of topics. If every speech contains discussion of both poverty and terrorism, a topic model trained on whole speeches as bag-of-words documents has no way of learning that terms like “poverty” and “terrorism” belong to different topics.
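To make the problem concrete, here is a toy check of how often two terms share a document when the documents are whole speeches versus single paragraphs. The two speeches are invented, and splitting on a single newline is a simplification.

```python
def cooccurrence_rate(docs: list[str], a: str, b: str) -> float:
    """Fraction of documents whose lowercased word set contains both a and b."""
    hits = sum(1 for d in docs if {a, b} <= set(d.lower().split()))
    return hits / len(docs)


# Invented speeches: each mentions both terms, but in different paragraphs.
speeches = [
    "poverty is rising\nterrorism is spreading",
    "we must end poverty\nterrorism must be fought",
]
paragraphs = [p for s in speeches for p in s.split("\n")]

print(cooccurrence_rate(speeches, "poverty", "terrorism"))    # 1.0
print(cooccurrence_rate(paragraphs, "poverty", "terrorism"))  # 0.0
```

At the speech level the two terms always appear together, so co-occurrence gives the model no signal to separate them. At the paragraph level they never appear together.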

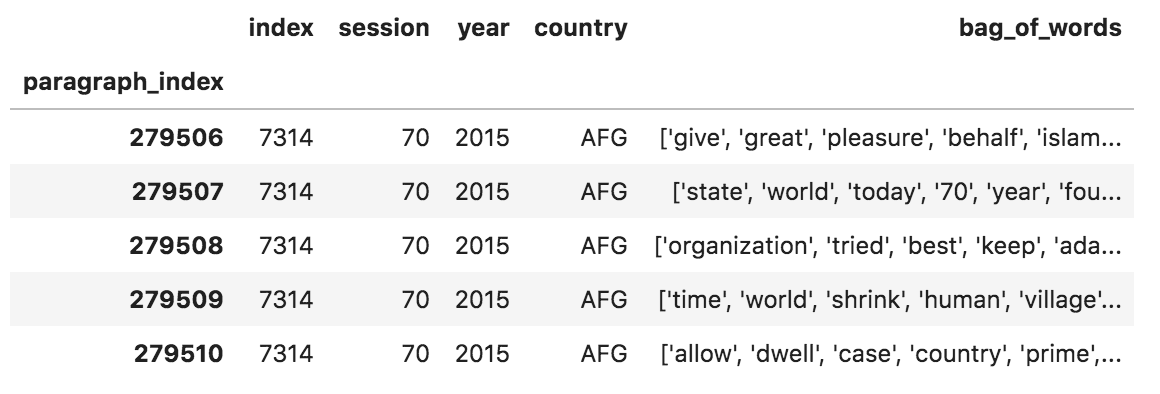

To counter this problem, I split each speech into paragraphs and treat each paragraph as a separate document for the analysis. A simple rule-based approach that looks for sentences separated by a newline character performs reasonably well at paragraph tokenization on this dataset. After this step, the number of documents jumps from 7,507 (full speeches) to 283,593 (paragraphs).
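The rule described above can be sketched as follows. This version assumes that a newline counts as a paragraph break only when the text before it ends a sentence, and that other newlines are hard wraps to be joined. The punctuation pattern and the function name `split_paragraphs` are illustrative choices, not necessarily the exact rule behind the numbers above.

```python
import re

# Sentence-final punctuation, optionally followed by closing quotes/brackets.
_SENTENCE_END = re.compile(r"""[.!?]["'”’)\]]*$""")


def split_paragraphs(speech: str) -> list[str]:
    """Split a speech into paragraphs at newlines that follow a sentence end.

    Lines that do not end a sentence are treated as hard wraps and joined
    with the following line.
    """
    paragraphs: list[str] = []
    current: list[str] = []
    for line in speech.splitlines():
        line = line.strip()
        if not line:
            continue  # blank lines are skipped; only sentence ends close a paragraph
        current.append(line)
        if _SENTENCE_END.search(line):
            paragraphs.append(" ".join(current))
            current = []
    if current:  # trailing text without final punctuation
        paragraphs.append(" ".join(current))
    return paragraphs


speech = ("We meet again.\nPoverty remains a challenge for\nmany nations.\n\n"
          "Terrorism threatens us all.")
print(split_paragraphs(speech))
```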

After expanding each speech into multiple documents, I tokenize each document into words, normalize each term by lowercasing and lemmatizing it, and trim low-frequency terms from the vocabulary. The end result is a vocabulary of 7,054 terms and a bag-of-words representation of each document that can be used as input to the dynamic topic model (DTM).
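A minimal sketch of this normalization and bag-of-words step, under stated assumptions. The lemmatizer here is a hypothetical lookup table standing in for a real one (for example NLTK's `WordNetLemmatizer`). The `min_count` threshold is also hypothetical, since the actual cutoff is not given.

```python
import re
from collections import Counter

# Hypothetical stand-in for a real lemmatizer.
_LEMMAS = {"nations": "nation", "rights": "right", "women": "woman"}
_TOKEN = re.compile(r"[a-z]+")


def normalize(paragraph: str) -> list[str]:
    """Lowercase, tokenize into alphabetic words, and lemmatize."""
    return [_LEMMAS.get(tok, tok) for tok in _TOKEN.findall(paragraph.lower())]


def build_bow(paragraphs: list[str], min_count: int = 2):
    """Return (vocab, corpus).

    vocab maps term -> integer id; corpus holds, per paragraph, a sorted list
    of (term_id, count) pairs. Terms whose total frequency across the corpus
    is below min_count are dropped from the vocabulary.
    """
    docs = [normalize(p) for p in paragraphs]
    totals = Counter(tok for doc in docs for tok in doc)
    kept = sorted(t for t, c in totals.items() if c >= min_count)
    vocab = {term: i for i, term in enumerate(kept)}
    corpus = [
        sorted(Counter(vocab[t] for t in doc if t in vocab).items())
        for doc in docs
    ]
    return vocab, corpus


vocab, corpus = build_bow(["Rights of women.", "Women and nations.", "Nations and rights."])
print(vocab)   # {'and': 0, 'nation': 1, 'right': 2, 'woman': 3}
print(corpus)  # [[(2, 1), (3, 1)], [(0, 1), (1, 1), (3, 1)], [(0, 1), (1, 1), (2, 1)]]
```

The (id, count) pairs have the same shape that gensim's `Dictionary.doc2bow` produces.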

Inference

To choose the number of topics, I ran LDA individually on a few different time slices with different numbers of topics (10, 15, 20, and 30) to get a feel for the problem. On manual inspection, 15 topics seemed to produce the most interpretable results, so that is what I settled on for the DTM. More experimentation and a rigorous quantitative evaluation could certainly improve this choice.

Using a Python wrapper around the original DTM C++ code, inferring the parameters of a DTM is then straightforward, albeit slow: inference took about 8 hours on an n1-standard-2 instance (2 vCPUs, 7.5 GB memory) on Google Cloud Platform. However, I ran it on a single core, so this time can probably be cut down if you can get the parallelized version of the original C++ code to compile.
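Before inference, the documents must be ordered by time and grouped into slices. The sketch below prepares that input and assumes one time slice per year, with each paragraph inheriting its speech's year. It writes the two plain-text inputs that, to the best of my knowledge, the original C++ code reads. The first is a "seq" file: the number of time stamps, then the document count for each one. The second is a "mult" file in LDA-C format: per document, the number of unique terms followed by `term_id:count` pairs. Verify both formats against the code's README before relying on them.

```python
from itertools import groupby


def time_slices(years: list[int]) -> list[int]:
    """Count documents per year; documents must already be sorted by year."""
    if years != sorted(years):
        raise ValueError("documents must be ordered by time before inference")
    return [len(list(group)) for _, group in groupby(years)]


def seq_file(slices: list[int]) -> str:
    """Number of time stamps, then the document count for each time stamp."""
    return "\n".join(str(n) for n in [len(slices), *slices]) + "\n"


def mult_file(corpus: list[list[tuple[int, int]]]) -> str:
    """One line per document: unique-term count, then term_id:count pairs."""
    lines = [
        " ".join([str(len(doc))] + [f"{tid}:{cnt}" for tid, cnt in doc])
        for doc in corpus
    ]
    return "\n".join(lines) + "\n"


slices = time_slices([1970, 1970, 1971])
print(slices)                                      # [2, 1]
print(seq_file(slices), end="")                    # 2, 2, 1 on separate lines
print(mult_file([[(0, 2), (3, 1)], [(1, 1)]]), end="")
```

Python wrappers typically take the same per-slice counts as a list rather than a file, which is why `time_slices` is kept separate from the file writers.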

Results

The model discovered highly interpretable topics, and I examine a few of them in depth here. For each, I show the top terms at a sample of time slices as well as plots of the probabilities of notable terms over time. A complete list of the topics discovered by the model and their top terms can be found in the appendix at the end of this article.
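A fitted DTM gives each topic a separate term distribution at every time slice. The sketch below assumes those distributions for one topic have been exported into a nested list `probs[t][w]`; that layout is an assumption, since extracting them depends on the wrapper. Given that input, the top-term lists and the term-probability curves come down to two small functions.

```python
def top_terms(probs: list[list[float]], vocab: list[str], t: int, n: int = 3) -> list[str]:
    """Return the n most probable terms of one topic at time slice t.

    probs[t][w] is assumed to hold p(term w | topic, slice t).
    """
    ranked = sorted(range(len(vocab)), key=lambda w: probs[t][w], reverse=True)
    return [vocab[w] for w in ranked[:n]]


def trajectory(probs: list[list[float]], vocab: list[str], term: str) -> list[float]:
    """Probability of a single term in the topic at every time slice."""
    w = vocab.index(term)
    return [row[w] for row in probs]


# Toy numbers invented for illustration; these are NOT model output.
vocab = ["right", "human", "woman", "gender"]
probs = [
    [0.50, 0.30, 0.15, 0.05],  # slice 0
    [0.40, 0.20, 0.25, 0.15],  # slice 1
]
print(top_terms(probs, vocab, 0, n=2))    # ['right', 'human']
print(trajectory(probs, vocab, "woman"))  # [0.15, 0.25]
```

Plotting the output of `trajectory` against the slice years gives the kind of term-over-time curve discussed below.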

Human Rights

We the peoples of the United Nations determined … to reaffirm faith in fundamental human rights, in the dignity and worth of the human person, in the equal rights of men and women and of nations large and small. (Charter of the United Nations, Preamble)

It is no surprise, then, that human rights is a perennial topic at the General Debate and one that the model was able to discover. Although the notion of gender equality appears in the Charter quoted above, the model shows that it took quite some time for the terms “woman” and “gender” to really catch on. Also note the rising use of “humankind” coupled with the declining use of “mankind”.