Data Legibility and a Common Language: Coping Not Coding, part 2

Why Data Legibility is More Important than Explainability


Data needs to be legible so that more people can have a sense of it and talk about it on their own terms.

Our everyday interactions with data are invisible and too numerous to understand. And although data and algorithms are changing how society functions, they are only debated in public when something goes wrong. Making data more accessible is not simply about making it explainable. After all, who has the time to have every algorithm they encounter explain itself to them? Instead, data should be more legible: able to be seen, so that more kinds of people can bring their own contexts, skills and experience to it, and start to see how data and automated decisions move through society.

Just as the World Wide Web Consortium sets standards for Web accessibility, there should be global standards for data legibility. Increased legibility will help to decentralise the hidden power vested in data and make it a material that more people can use and benefit from.

Why data should be legible

Data and connectivity are ubiquitous, invisible, and overwhelming. It’s worth remembering that the word “data” was originally a plural, as in: there are too many data, they are too hard to see, and their consequences are too difficult to contextualise. Data are not legible in the real world and — unlike other unexplained phenomena — there aren’t any myths and legends that make sense of them.

No one should have to be a part-time data analyst to get by in society. Instead, the beneficiaries of our data must meet us halfway by making data easier to see and experience.

And this is not just a matter of our own connectivity. We are still “data subjects” (as the GDPR would have it) whether or not we are online. Our health records, credit scores, the price we pay for goods, our access to government services and many more aspects of modern life are all mediated by data and connectivity. This is not a digital skills issue that we can solve as individuals; it’s a major structural inequality that needs commitment from businesses, governments and civil society to overcome.

Data is a power relationship

Bodies that can understand and exploit data have power over those who do the hard work of creating it. As artist James Bridle says:

Those who cannot perceive the network cannot act effectively within it, and are powerless.

Data could exist within democratic constructs, but that currently feels too big, too untameable to be achieved. This is in part because we don’t know how to talk about it. There’s a lack of meaningful public discourse about data and connectivity because we simply don’t have the words.

Because it’s difficult to talk about things we can’t see, society’s attention is often rerouted to the negative symptoms of data, rather than their root causes. Symptoms like the Cambridge Analytica scandal, the failure of facial recognition in policing, teenagers’ need for positive social reinforcement on Instagram, and fake Peppa Pig videos are all simultaneously shocking — but not actually that surprising. They are inevitable in a world where, to quote the title of a recent Supra Systems Studio exhibition, “Everything happens so much”.

In his Course in General Linguistics, semiotician Ferdinand de Saussure describes language as “a heritage of the preceding period.” Current reality often has to use the language of a previous generation; we have no words for the thickness and intensity of our data experience because it is being newly formed around us every day. So we need to make data visible, so that more people can describe what they perceive and others can learn which signs to look for.

Our lived experience is co-created by articulating it, and as Saussure goes on to say, “Without language, thought is a vague, uncharted nebula.” Without language, data and its effects are vague, uncharted nebulae too.

Humans usually make sense of the world collectively: by telling stories, sharing faith, finding communities, appreciating art, laughing at jokes. But smartphones change that, allowing us to be infinitely connected but physically alone. This lack of physical connection changes both our accountability and our ability to pick up cues: no matter how often it’s said, the same rules don’t apply online as off. Online communication carries a potential for misunderstanding that has no parallel face to face.

We are rootless, alone, swimming in data that we can’t see, magnified in our self-righteousness. And we lack the glue of a shared understanding or a conceptual framework to situate data in. It is a logical material that behaves in a supernatural way: we either need to see data or believe in it. But making data mystical is dangerous: we need more mundane accountability, not blind faith.

Is it possible to see data?

Timo Arnall’s photography and films show how data is at work in the real world – but to imagine data like this, you need to know it’s there.

But as this video makes clear, seeing all of the data all of the time would be completely overwhelming. So, what level of visibility do we need for data to be cognitively accessible?

As cognitive neuroscientist Anil Seth writes in “The Hard Problem of Consciousness”, “Every conscious experience requires a very large reduction of uncertainty.”

This is what we do as humans: we make sense of big, uncertain and complex things all of the time. Not every level of comprehension needs to be granular and formed from the bottom up.

Shared mental models, symbols and vocabulary help us to fix meaning and reduce abstraction. We all agree that an animal that barks and has four legs and a wet nose is a dog. I may not know what kind of dog I’m looking at, but I understand the concept of dogs, and can recognise this one as being big or small, old or young. I don’t need to understand the idea of dogs every time I see one, or research and create my own idea of what a dog may be. There is a culturally available definition of “dog” that I agree with and can use.

But this is not the case with data. For most people, most of the time, data is invisible, infinite, intangible. To describe it, I’d need to obtain it. To obtain it, I’d need to know what I was looking for. And apart from anything else, I just don’t have the time.

Obviously a data scientist or an analyst has a different view — and this is one of the reasons we’re at such a societal impasse with data literacy. The conceptual frameworks that inform how data moves through society are expert, logical and at atomic scale. There are plenty of technical and legalistic ways of talking about data, but they don’t much relate to real life or the ways we experience it. Or, as technologist Natalie Nzeyimana so concisely puts it:

Confidence is an illusion

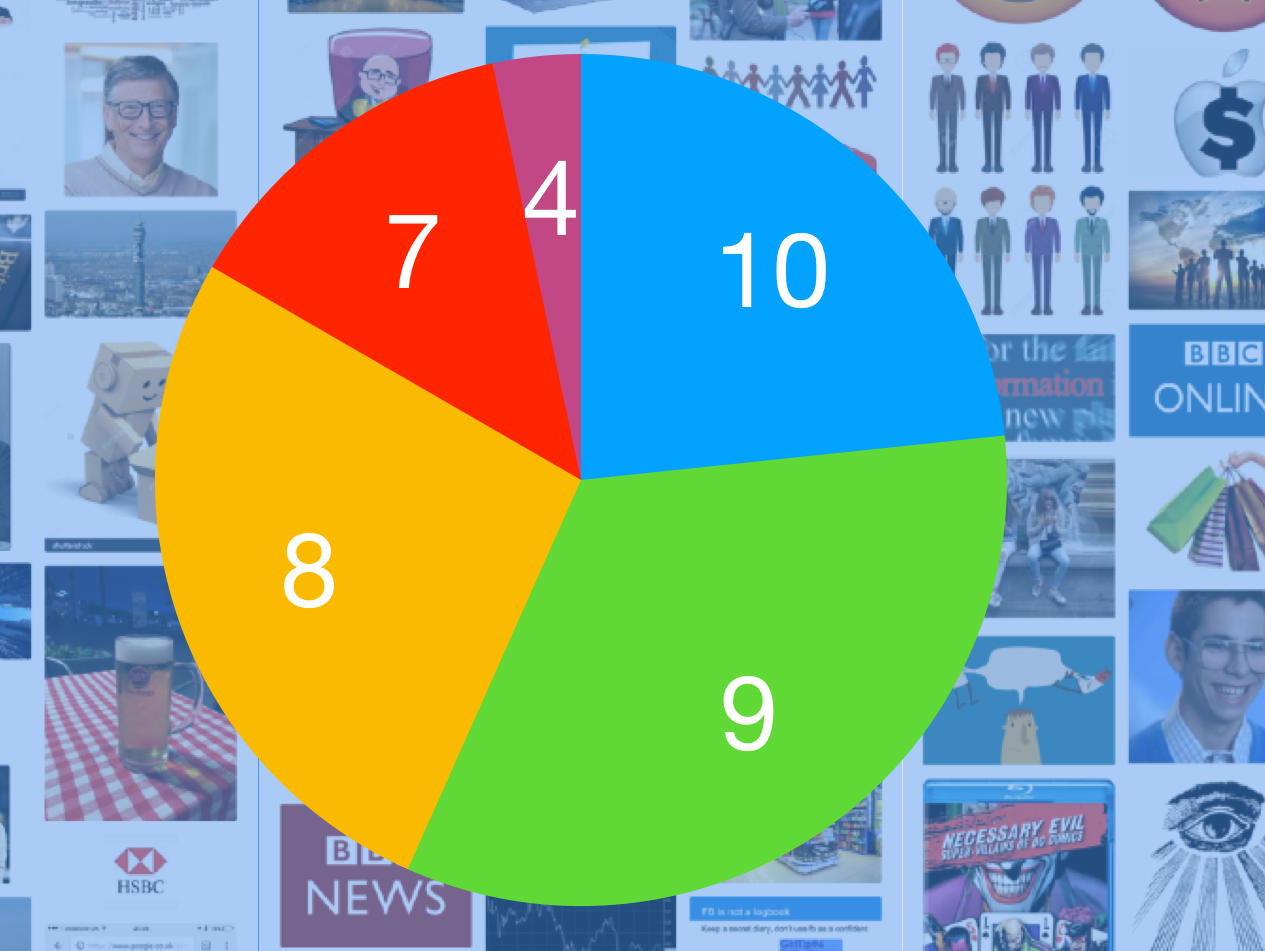

During the focus groups for Doteveryone’s People, Power, Technology research, we asked people to mark themselves out of 10 to show how good they were at using the Internet. Very few people gave themselves less than a 5, and nearly everyone was over a 7. There were quite a few 10s out of 10.

And as we discussed the rating, we realised the feeling people were describing wasn’t their ability or their understanding; it was their level of confidence. Confidence that they could use the service, that it would work first time. In fact, it was often confidence in the ability of the service to work, not in the individuals’ ability to navigate it. Most people don’t know how their Uber turns up at the exact place they’re standing, how Facebook knows who they want to connect with, or how their Netflix recommendations work, but they know they can rely on those services and they know they can use them, so they feel confident in them and have a sense of being in charge.

And this feeling is very illusory. Things that are easy to use and hard to understand depend on sleight of hand to work: we are cheerfully skating on thin ice, until the ice breaks. We are not “acting effectively”; we are just lucky.

Designer Richard Pope argues that “accountability needs to be embraced as part of the service design” of frictionless digital products, but even this strikes me as too plural: it would mean a separate kind of accountability for every service. I already work hard enough on behalf of my data (so hard, in fact, that this work is now captured as part of “Household Labour” by the Office for National Statistics), and I certainly don’t have the free time to listen to algorithms explain inexplicable things to me.

I cannot manage a hundred different mental models for a hundred different services; I want an extensible conceptual understanding of How Data Works that I can port from service to service. I want the services I use to meet my mental models, rather than having to do the hard work myself of understanding how a dozen different paradigms function.

And I cannot make an effective choice if I can’t explain what I have chosen.

Human-readable data

It’s also important to remember there is no objective data truth that everyone can access. Data is chosen and analysed with intent by humans, whether they know it or not.

As Prof. Safiya Noble has shown in Algorithms of Oppression, there is a disconnect between real life and the “information reality” presented by many products and services. Data and data-driven services inherit the (often white, often male, often Ivy League educated) biases of the people who made them. Noble says that:

Commercial search, in the case of Google, is not simply a harmless portal or gateway; it is in fact a creation or expression of commercial processes that are deeply rooted in social and historical production and organization processes.

So to understand my Google data, I have to understand them in these contexts. They do not represent my truth, but Google’s truth.

On top of this, database architecture, software engineering and data science are still fundamentally logical disciplines, and the uncertainty of human life is entrusted to people for whom uncertainty is often a failure. For instance, The Telegraph recently published a story about an Amazon Alexa patent that describes the perfectly normal human reaction of crying as an “emotional abnormality”. “Normality” is entirely subjective, and the range of human emotions cannot be judged by (the people who made) a smart speaker. The ways data are classified often bear little resemblance to lived experience, and so cannot be trusted as our guide.

If data is not legible to more people, it will carry on being used to construct the partial “information reality” that Safiya Noble describes. And making it legible will make data more available as a material to different kinds of inventors, educators, and commentators.

To be a truly humanly useful material, data needs more ways in. It needs edges and seams and gaps.

Making data describable

Prof. Anne Galloway’s paper “Seams and Scars, Or How to Locate Accountability in Collaborative Work” discusses the potential of seamful technology. Rather than allowing “new technologies to fade into the background of our everyday lives”, Galloway offers up transitions, or “liminal spaces”, as tangible entry points for understanding:

In cultural terms, liminal spaces tend to be navigated by ritual. For example, weddings mark the transition between single life and married life, funerals mark the transition from life to death, and both mark passages and processes that shape individual and collective identities.

In other words, in real life, we celebrate the very bumps and lumps that get minimised in our digital experiences. Galloway goes on:

So liminal spaces are spaces of potential or becoming; they are places where things change and interesting things happen. As such, I find remarkable hope in seams and scars.

So what is the potential of seams and scars?

A seam is a useful cognitive prop for making data legible. But describing every single thing that changes in our data landscape, all of the time, is still too big a cognitive load for even a not-very-busy human.

So what is the appropriate level of seam that will make data legible enough?

Seamful consent

Seams should reflect changes in the human situation, not the data situation. Making data legible in the context of human experience makes it much easier to understand.

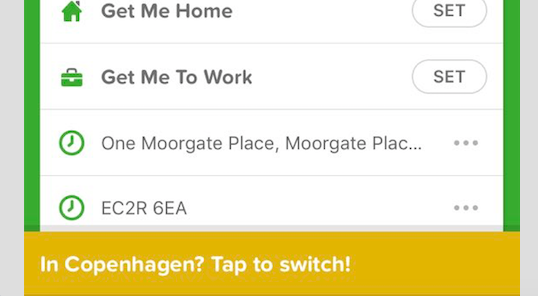

CityMapper is already pretty good at this:

This notification makes it clear the app knows I am in a different city to the one I am searching for directions in. Rather than seamlessly relocating me to where it thinks I am, it asks me to change my location, offering an incidental reminder that it knows where I am right now. This requires a fractional amount of labour, but that labour is also reinforcing: it’s a building block for a figurative understanding of location data. I can discuss this because I can see it. I can say, “CityMapper knows I’m in Copenhagen!”, rather than “CityMapper’s only letting me search locations in Copenhagen, but I want to know how to get to my meeting in London tomorrow!” The alternative is scrabbling around in Settings, cursing who-knows-what on my phone for several minutes, then giving up, using Google Maps and still being none the wiser. This seam is simple, useful, and legible.

This kind of seam recognises that consent is an ongoing aspect of a relationship. It understands that my consent changes as I change, and that I can also choose not to comply with the prompt.

All kinds of things can happen in the seams.

Projects by IF have pioneered design patterns for creating this kind of legibility. Their Data Permissions Catalogue shows exemplary paths for common interactions that make data more legible. It’s unrealistic to expect all (or even a substantial number of) services ever to use the same design patterns, but just as the World Wide Web Consortium sets standards for Web accessibility, there should be global standards for data legibility.

This is not a case of giving people skills; there is no point in being given the skills to understand a thing that few people can see or understand. It is a case of making data visible so that anyone can see it, comprehend it on their own terms, and ask for help and support when they need it.

As automation continues apace, and data and algorithmic processes reach true ubiquity, legibility will become a human right. Society should worry much less about teaching people to code, and concentrate instead on creating the conditions for more of us to cope.