Engineers Making Movies (AKA Open Source Test Content)

If you’re a die-hard Netflix fan, you may have already stumbled onto the dark and brooding story of a timeless struggle between man and nature, water and fountain, ball and tether, known much more plainly as Example Short. In the good old days of 2010, Example Short

Of course, streaming technology has evolved a great deal in the past eight years. Our technical and artistic requirements now include higher quality source formats with frame rates up to 60 fps, high dynamic range and wider color gamuts up to P3-D65, audio mastered in Dolby Atmos, and content cinematically comparable to your regularly unscheduled television programming.

Furthermore, we’ve been able to freely share Example Short and its successors with partners outside of Netflix, to provide a common reference for prototyping bleeding-edge technologies within entertainment, technology and academic circles without compromising the security of our original and licensed programming.

If you’re only familiar with Example Short, allow us to get you up to speed.

A Brief Timeline of Netflix Open Source Titles

Our first test title contains a collection of miscellaneous live action events in 1920x1080 resolution with BT.709 colorimetry and an LtRt Stereo mix. It was shot natively at four frame rates: 23.976, 24, 25 and 29.97 fps.

As the demand for more pixels increased, so did appropriate test content. El Fuente was shot in 4K at both 48 and 59.94 fps to meet increasing resolution and frame rate requirements.

Chimera is technically comparable to El Fuente, but its scenes are more representative of existing titles. The dinner scene pictured here attempts to recreate a codec-challenging sequence from House of Cards.

Following the industry shift from “more pixels” to “better pixels,” we produced Meridian, our first test title to tell a story. Meridian was mastered in Dolby Vision high dynamic range (HDR) with a P3-D65 color space and PQ (perceptual quantizer) transfer function. It also contained a Dolby Atmos mix, multiple language tracks and subtitles. You can read more about Meridian on Variety and download the entire SDR Interoperable Master Package (IMP) for yourself.

We felt a need to include animated content in our test title library, so we partnered with the Open Movie Project and Fotokem’s Keep Me Posted to re-grade Cosmos Laundromat, an award-winning short film, in Dolby Vision HDR.

While our latest test title is technically comparable to Meridian, we’ve chosen to archive its assets and share them with the open source community along with the final deliverables. The production process is described as follows.

Introducing: Sparks, and the Making of.

The idea sparked when we observed construction workers completing one of two new buildings on the Netflix campus. We believed the dark shadows of steel beams juxtaposed perpendicularly to the horizon and the setting sun, contrasted against the glowing light from a welder’s arc, would push the dynamic range of any camera to its limits. For our shoot, we acquired a Sony PMW-F55 and the AXS-R5 RAW recorder to shoot 16-bit RAW SQ and maintain the highest dynamic range in capture.

After joining forces with the construction crew and locating a sharp, talented welder actor, Sparks, a slice-of-life title following the day of said welder, was born.

From a technical standpoint, the final deliverable for Sparks is on par with the technical specifications of Meridian, captured at a resolution of 4096x2160, frame rate of 59.94 fps and mastered in Dolby Vision HDR at 4,000 cd/m². To crank things up a notch, we’re delving more into the technical specifics of the workflow, mastering in the open source Academy Color Encoding System (ACES) format, and sharing several production assets.
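To give a feel for what these specifications mean in storage terms, here is a rough back-of-the-envelope sketch. It assumes uncompressed RGB frames with 16-bit half-float samples, which is how ACES frame sequences are commonly stored; the post does not state the actual file sizes, and the 210-second duration used in the usage note is purely illustrative. All function names are hypothetical.

```python
from fractions import Fraction

# Figures taken from the text: 4096x2160 at 59.94 fps.
WIDTH, HEIGHT = 4096, 2160
FRAME_RATE = Fraction(60000, 1001)  # exact rational form of 59.94 fps

# Assumptions (not stated in the post): 3 channels, 2 bytes per sample,
# no compression, no file header overhead.
CHANNELS = 3
BYTES_PER_SAMPLE = 2


def bytes_per_frame():
    """Raw payload of a single uncompressed frame, in bytes."""
    return WIDTH * HEIGHT * CHANNELS * BYTES_PER_SAMPLE


def bytes_per_second():
    """Sustained data rate needed for real-time playback, in bytes/s."""
    return bytes_per_frame() * FRAME_RATE


def frame_count(duration_seconds):
    """Number of whole frames in a clip of the given duration."""
    return int(duration_seconds * FRAME_RATE)


def total_bytes(duration_seconds):
    """Approximate size of an uncompressed frame sequence, in bytes."""
    return frame_count(duration_seconds) * bytes_per_frame()


if __name__ == "__main__":
    print(f"per frame : {bytes_per_frame() / 1e6:.1f} MB")
    print(f"per second: {float(bytes_per_second()) / 1e9:.2f} GB/s")
    print(f"210 s clip: {total_bytes(210) / 1e9:.0f} GB")
```

Under these assumptions a single frame is about 53 MB, so even a few minutes of footage lands in the hundreds of gigabytes, which is worth keeping in mind before downloading the intermediate assets.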

We captured just over an hour of footage and cut it down to about 3 and a half minutes in Adobe Premiere Pro, editing the Sony RAW SQ format directly, but the real fun began with our high dynamic range color grading session.

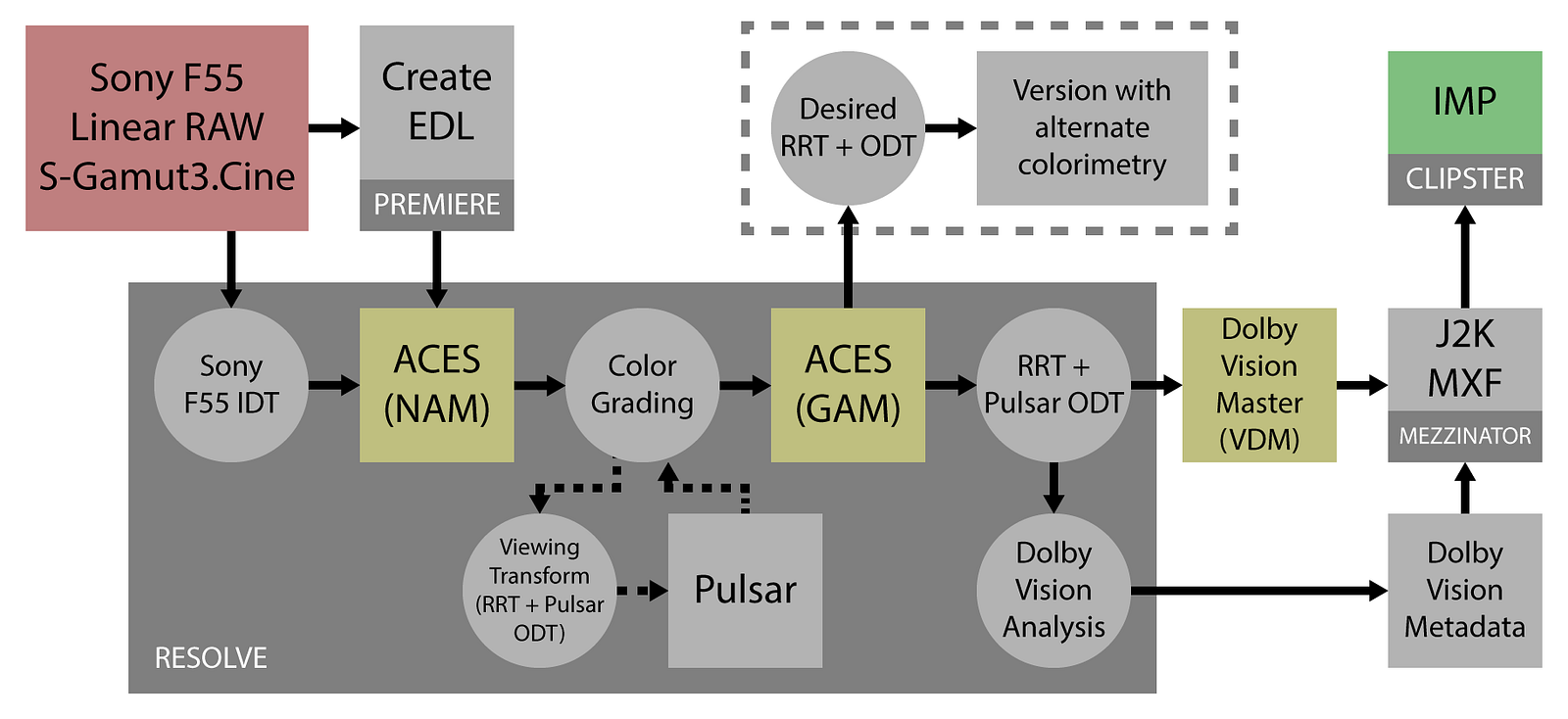

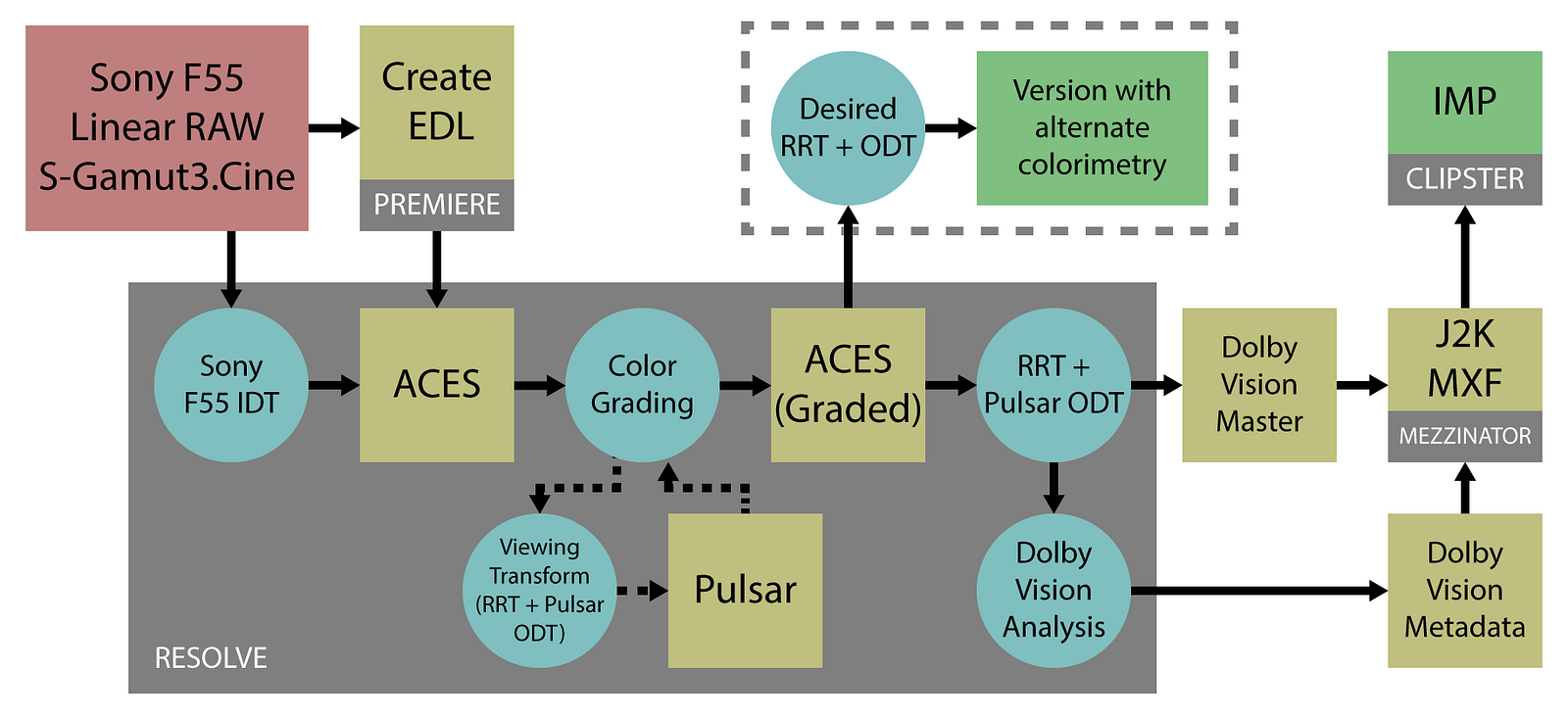

Though we began with a first pass color grade on a Sony BVM-X300, capable of reproducing 1,000 cd/m², we knew it was critical to finesse our grade on a much more capable display. To accomplish this, we teamed up with Dolby Laboratories in Sunnyvale, CA to create our master color grade using the Pulsar: Dolby’s well-characterized P3-D65 PQ display capable of reproducing luminance levels up to 4,000 cd/m². Though at the time atypical for a Dolby Vision workflow, we chose to master our content in ACES so that we could later create derivative output formats for infinitely many displays. With the exception of our first pass color grade, our post-production workflow is outlined as follows:

Starting with our RAW footage (red) we’ve applied various transforms and operations (blue) to create intermediate formats (yellow) and ultimately, deliverables (green). The specific software and utilities we’ve used in practice are labeled in gray.

Our aim was to create enough intermediates so that a creative could start working with their preferred tool at any stage in the pipeline. This is an open invitation for those who are eager to download and experiment with the source and intermediate materials.

For example, you could begin with the graded ACES master. Following the steps in the dashed gray box, you could apply a different RRT and ODT to create a master using a specific colorimetry and transfer function. While we graded our ACES footage monitoring at 4,000 cd/m² and rendered a Dolby Vision master (P3-D65 PQ), we welcome you to create a different output format or backtrack to the non-graded frame sequence and regrade the footage entirely.
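If you are new to the PQ transfer function mentioned above, the following minimal sketch shows how absolute luminance maps to a PQ signal value and back, using the constants published in SMPTE ST 2084. It is not the ACES RRT or ODT and not the tooling we used; the function names are hypothetical and it only illustrates the final encoding step for a single luminance value.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0  # PQ is defined up to 10,000 cd/m²


def pq_encode(nits):
    """Map absolute luminance in cd/m² to a PQ signal in [0, 1]."""
    y = max(nits, 0.0) / PQ_PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """Map a PQ signal in [0, 1] back to absolute luminance in cd/m²."""
    v = signal ** (1.0 / M2)
    y = (max(v - C1, 0.0) / (C2 - C3 * v)) ** (1.0 / M1)
    return y * PQ_PEAK_NITS


if __name__ == "__main__":
    for nits in (100.0, 1000.0, 4000.0, 10000.0):
        code = pq_encode(nits)
        print(f"{nits:>7.0f} cd/m² -> PQ {code:.4f} -> {pq_decode(code):.1f} cd/m²")
```

A 1,000 cd/m² highlight encodes to roughly 0.75 and a 4,000 cd/m² highlight to roughly 0.90, which shows how much of the PQ signal range is spent on the darker part of the image.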

For us, creating the Dolby Vision master also required the use of Dolby’s mezzinator tool to create a JPEG 2000 sequence in an MXF container, which was packaged into an IMP along with our original stereo audio mix. We used this Dolby Vision master as the source format for our encoding pipeline, from which we derive Dolby Vision, HDR10 and SDR bitstreams.

We’ve selected key assets in the production process to share with the open source community. This includes the original Sony RAW files, ACES Non-Graded Archival Master (NAM), ACES Graded Archival Master (GAM), Dolby Vision Video Display Master (VDM), and the final IMP, which can be downloaded from the Open Source Assets section. These assets correspond to the workflow diagram as follows: